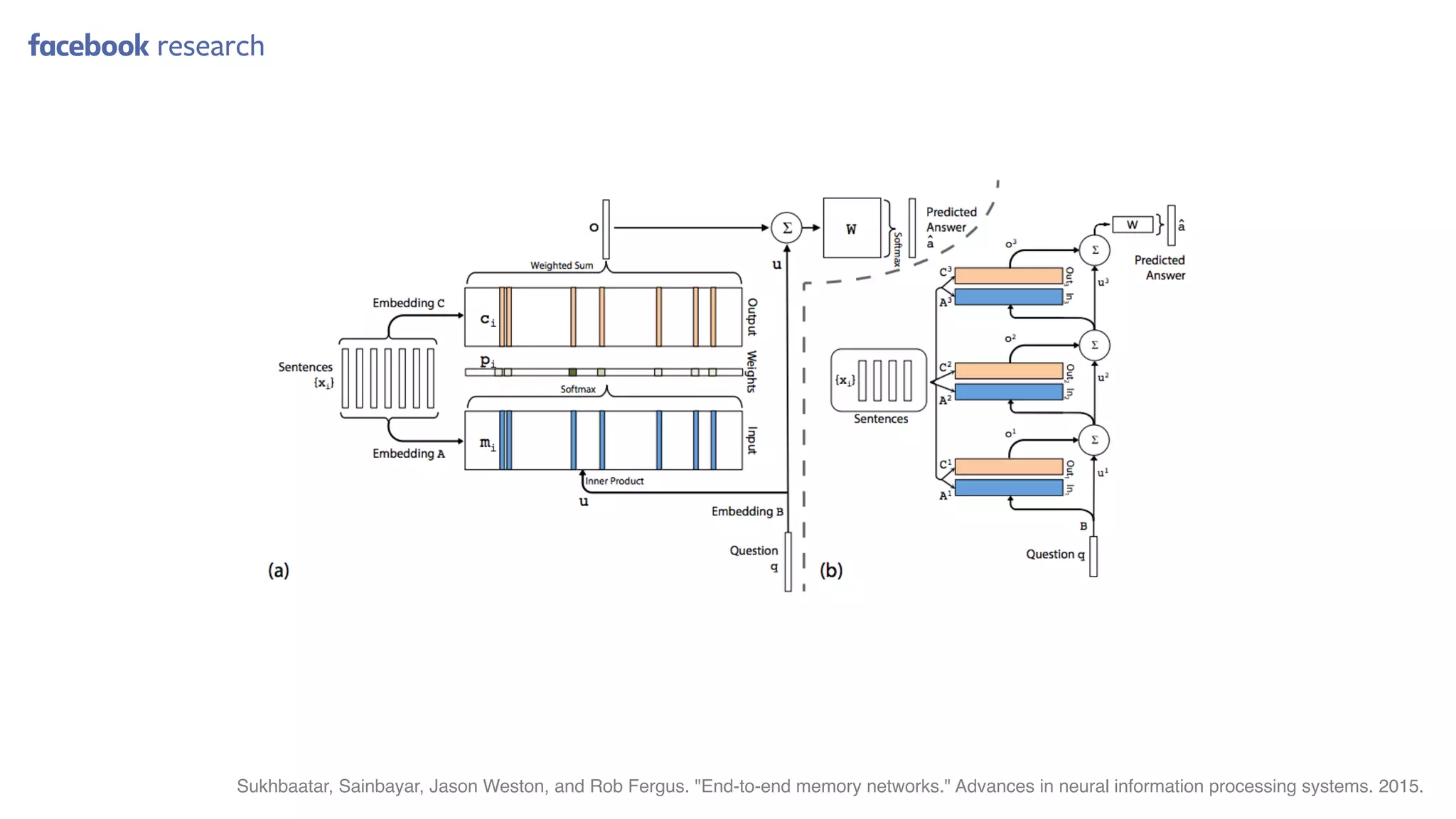

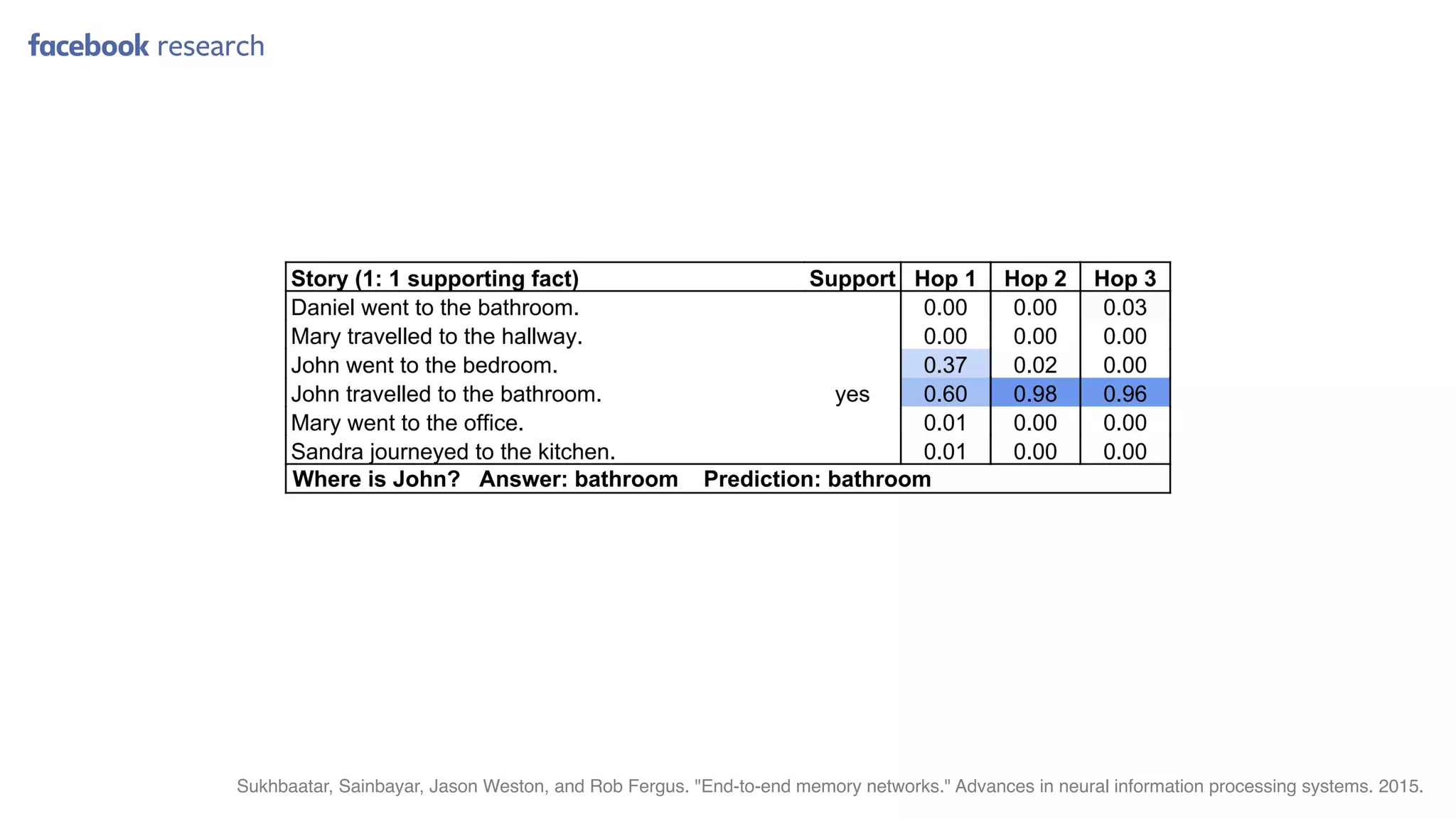

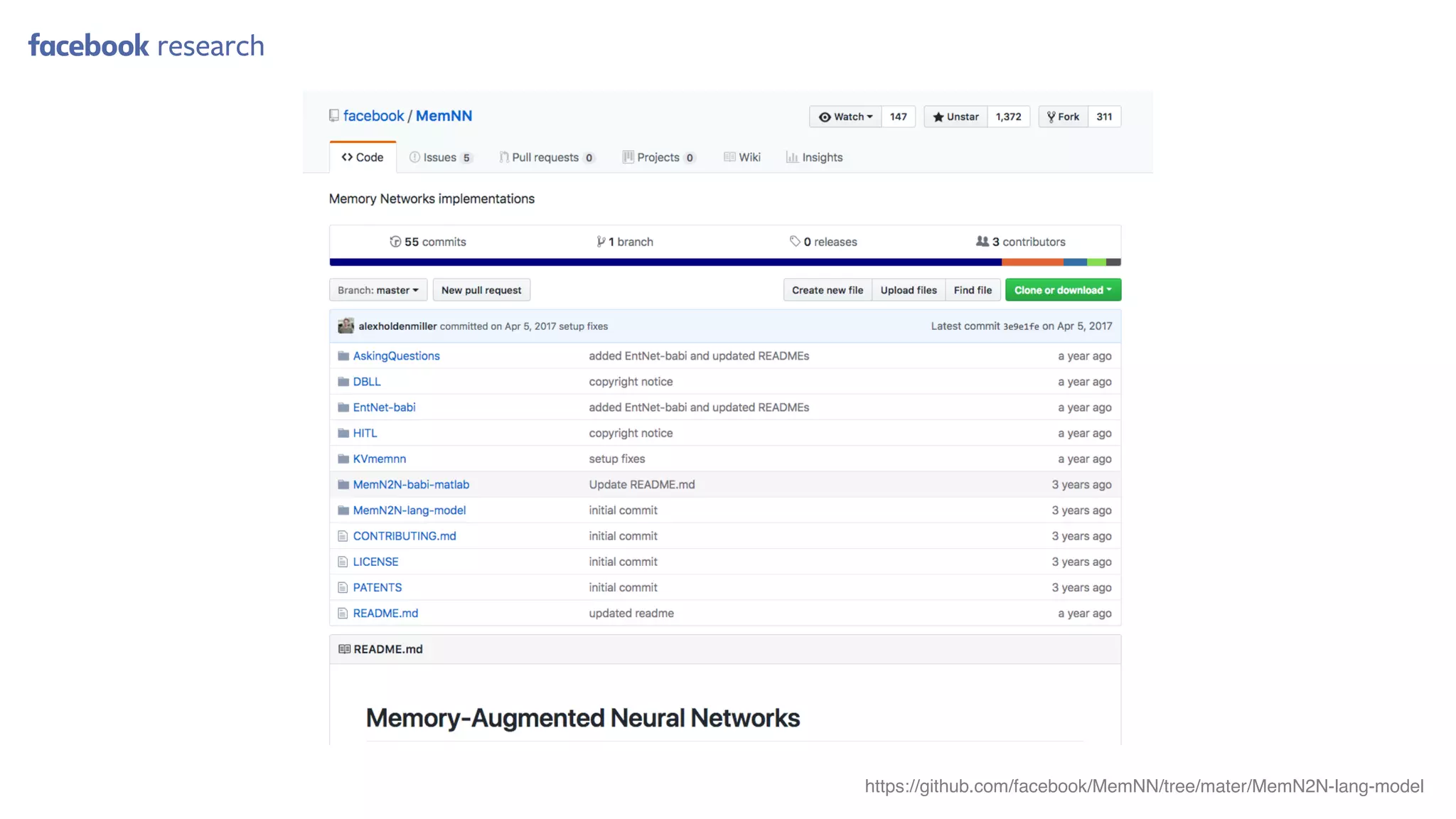

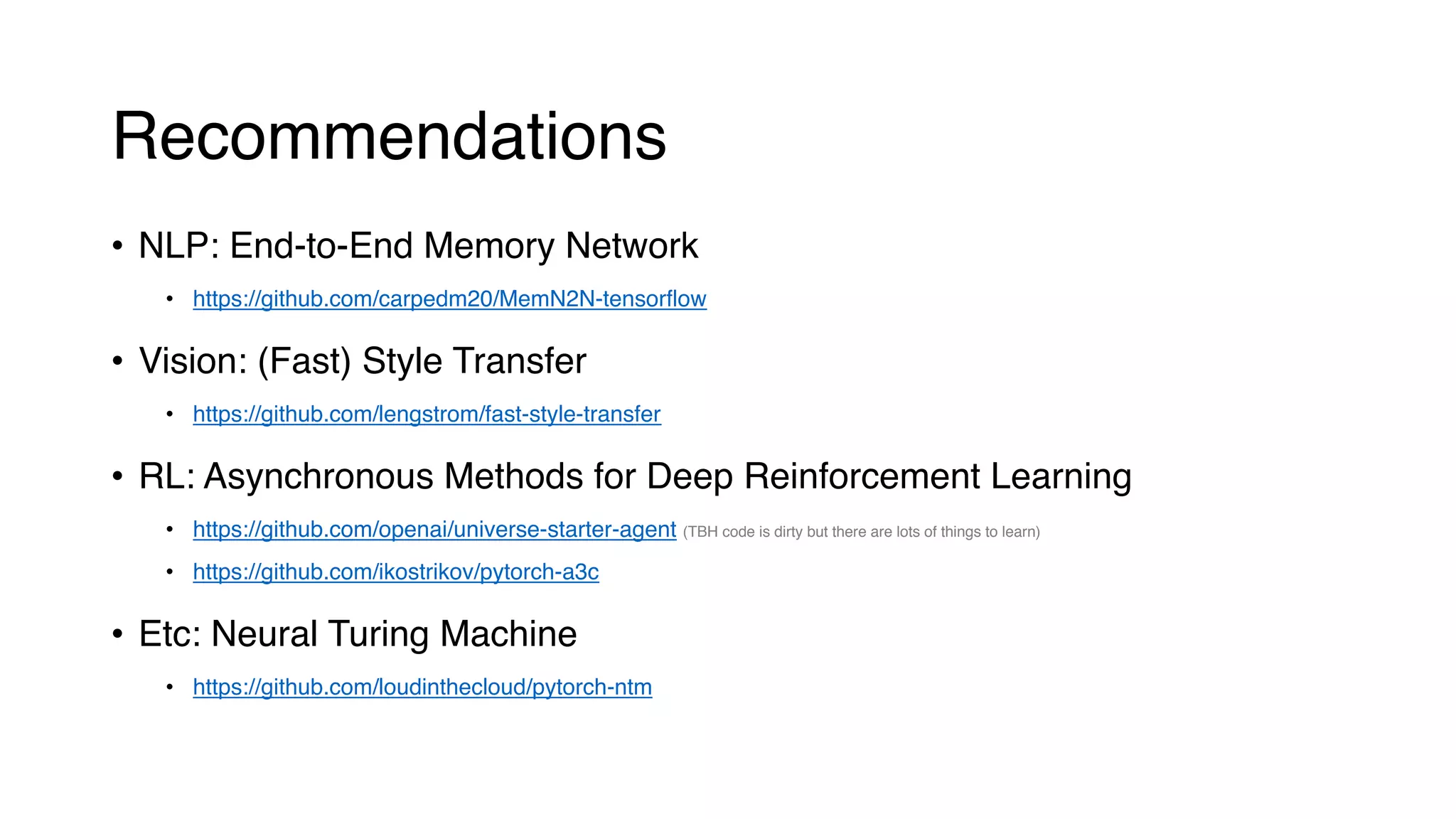

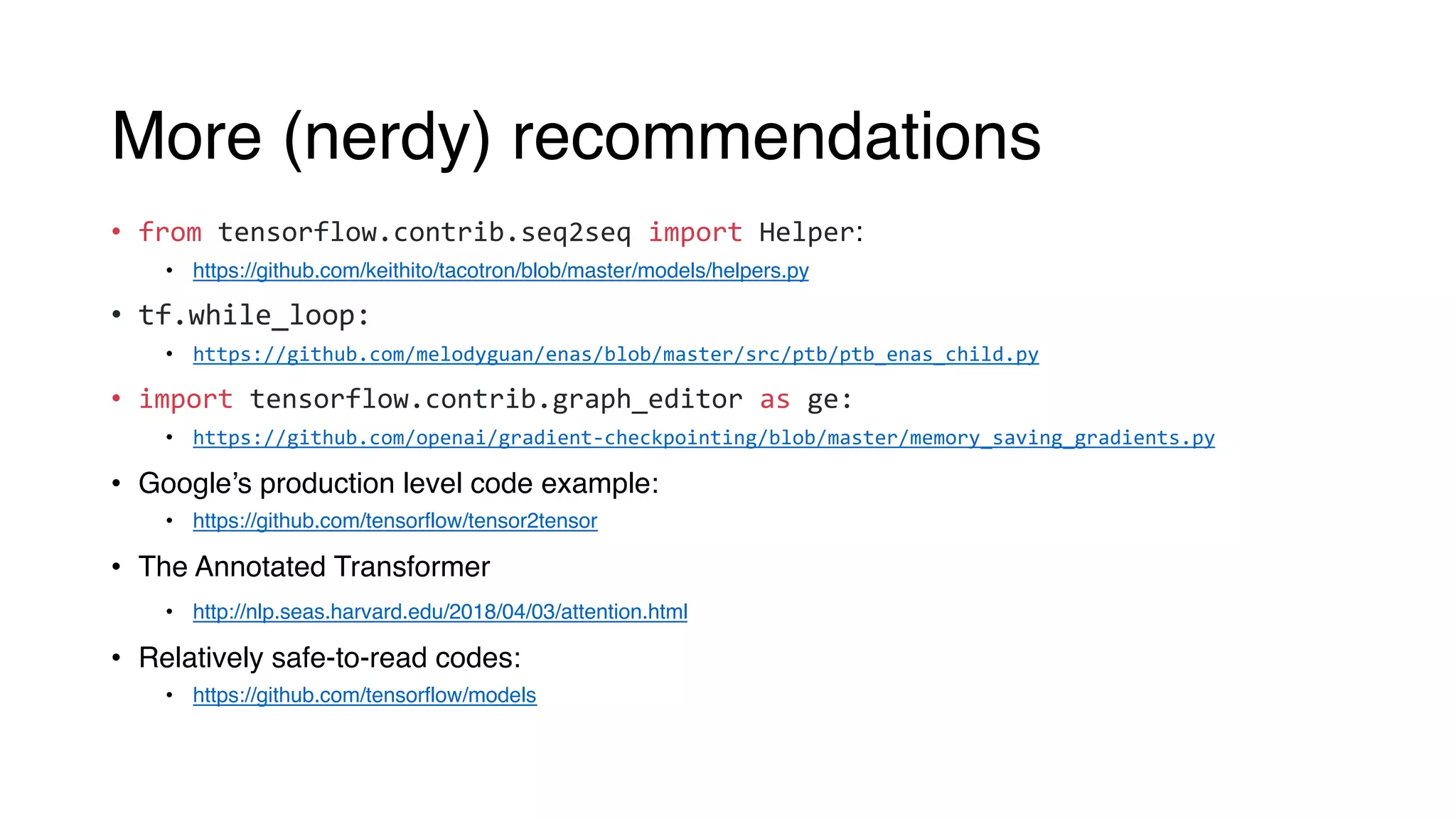

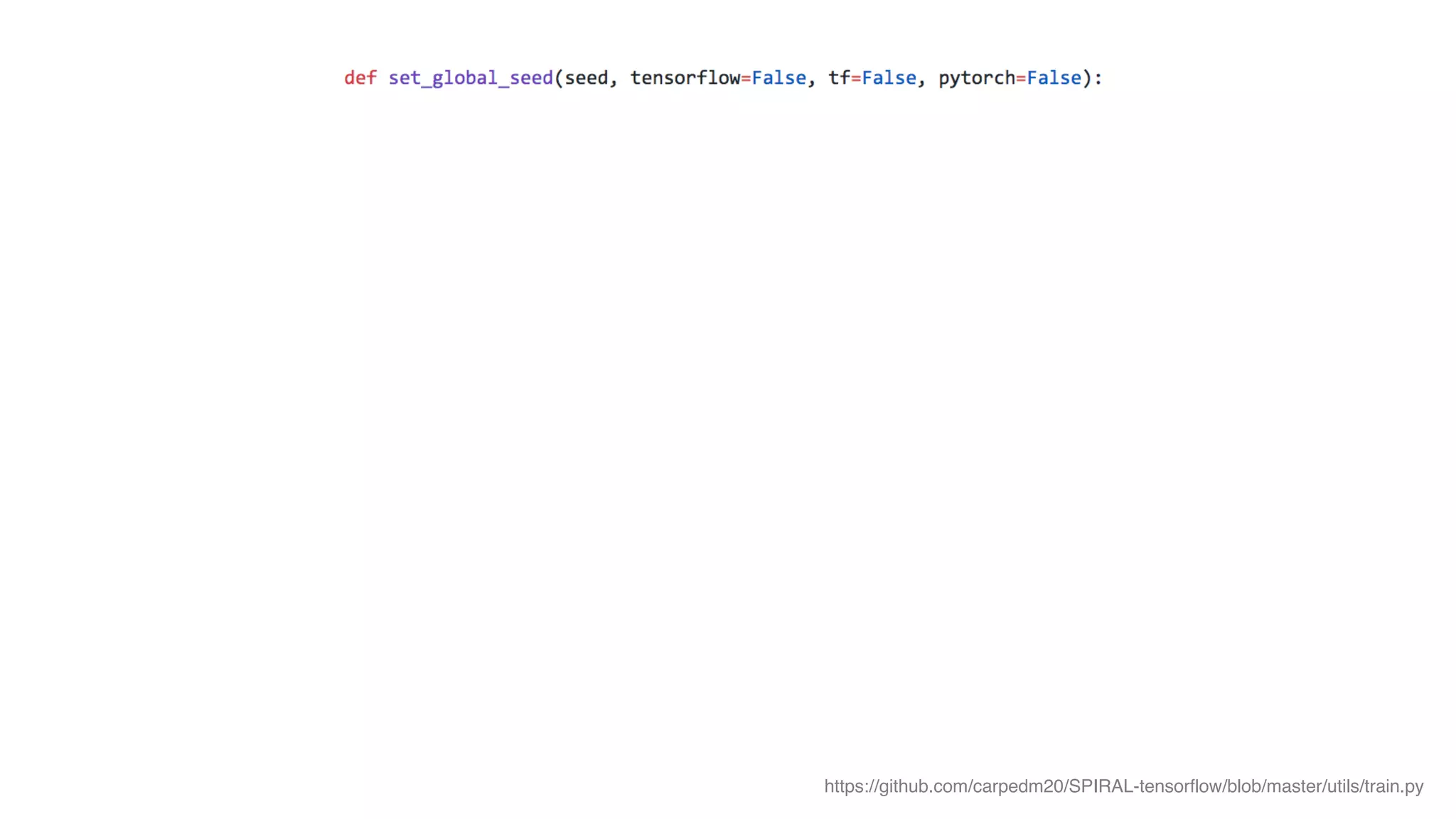

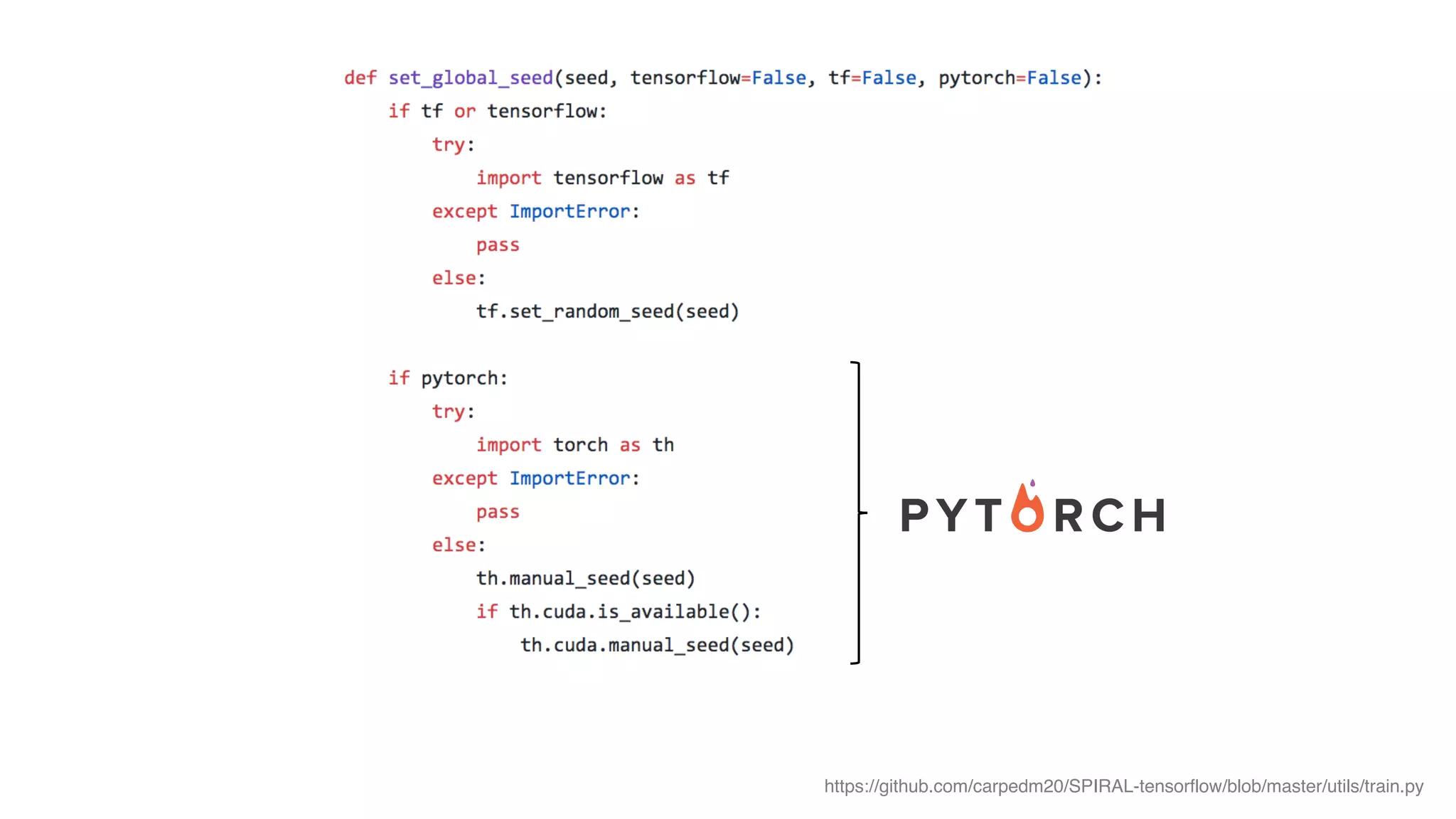

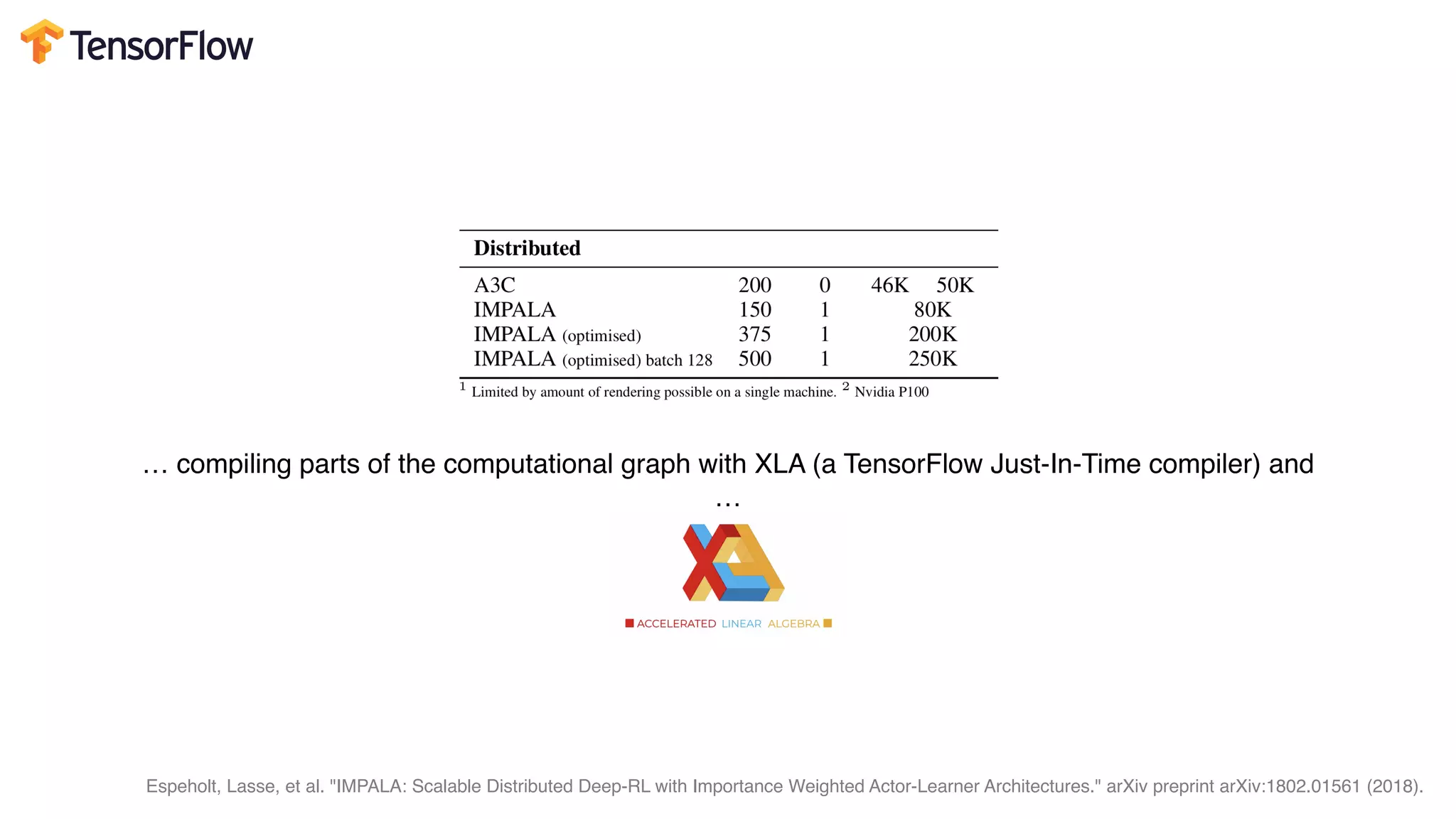

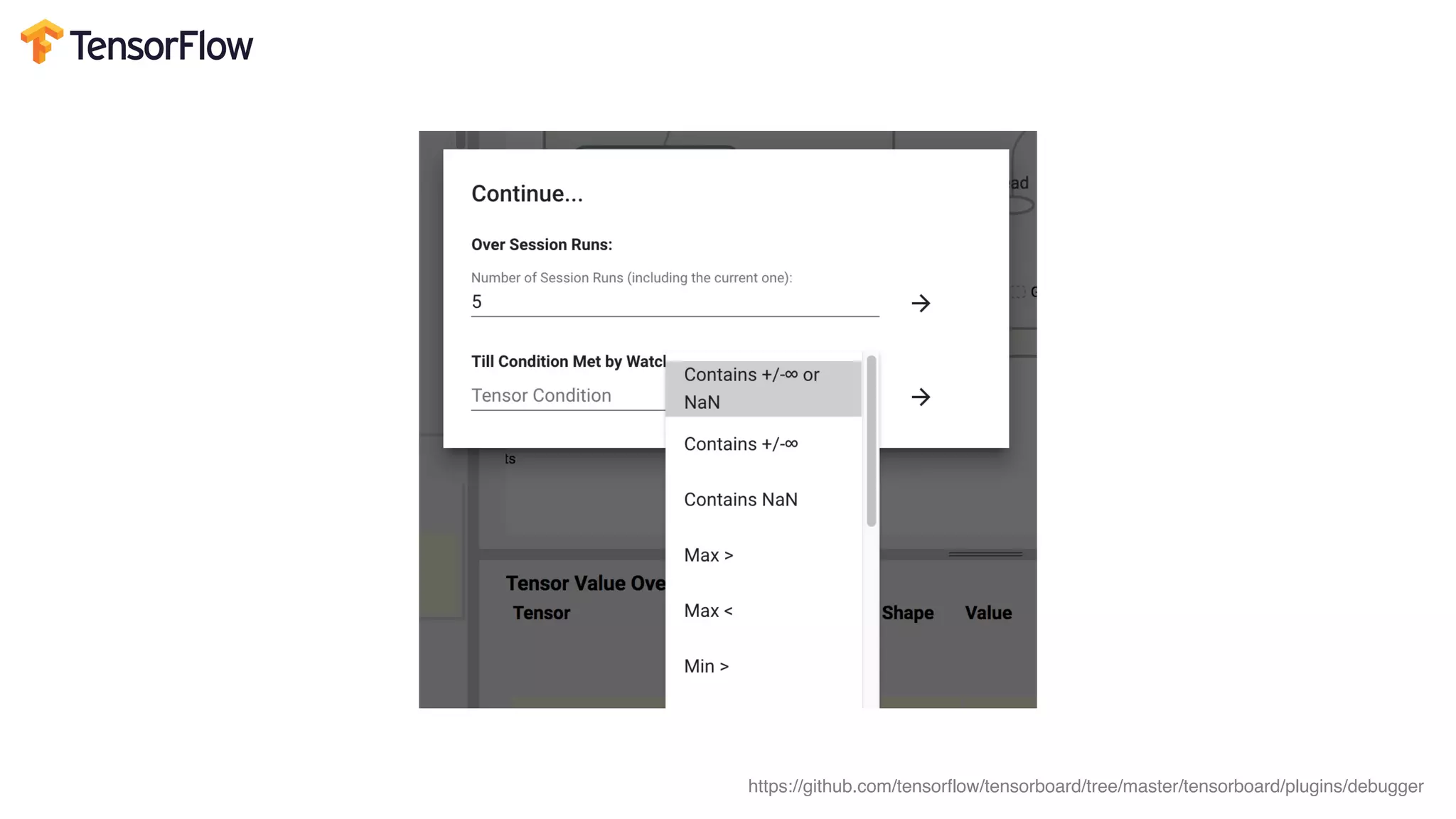

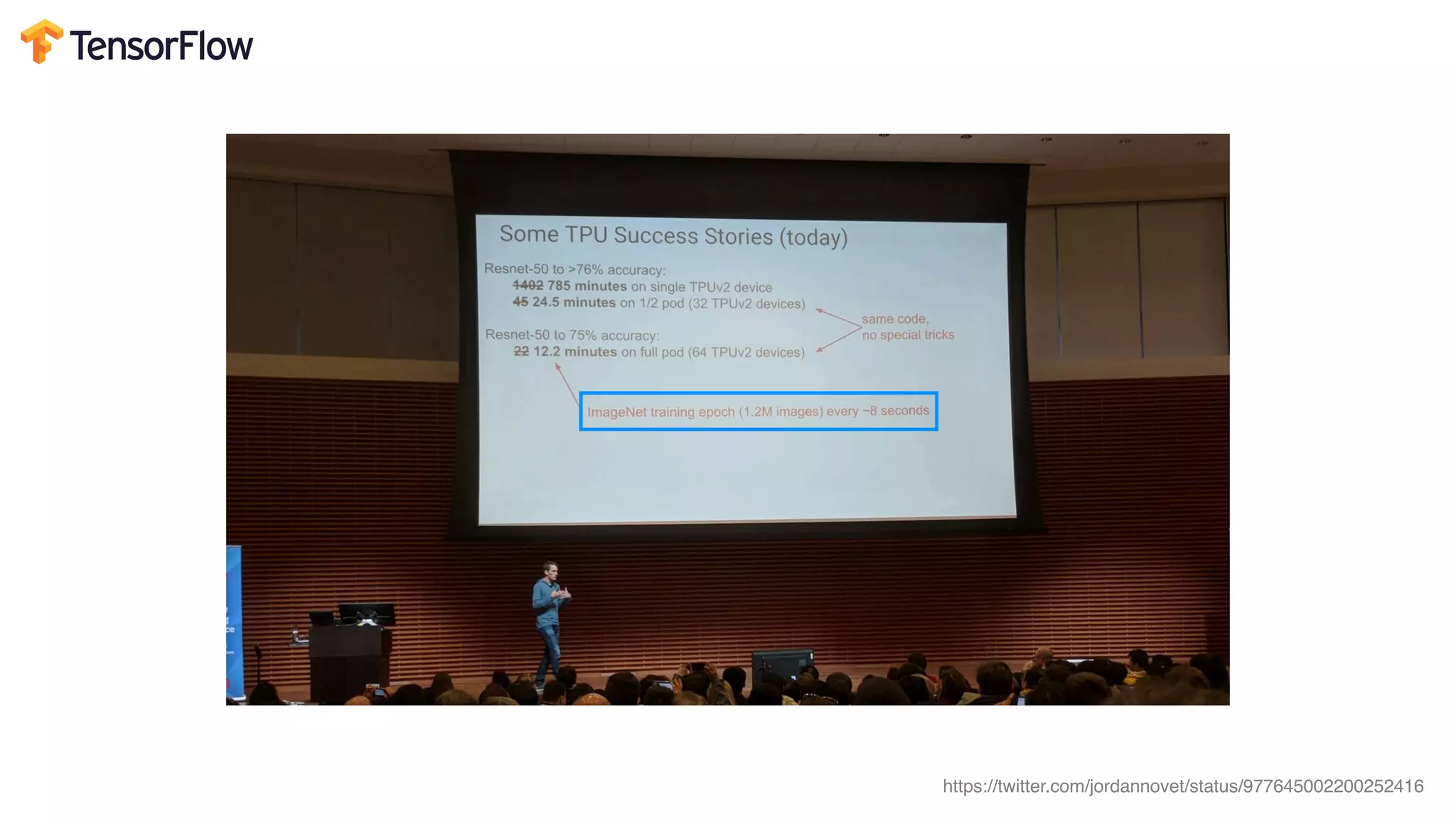

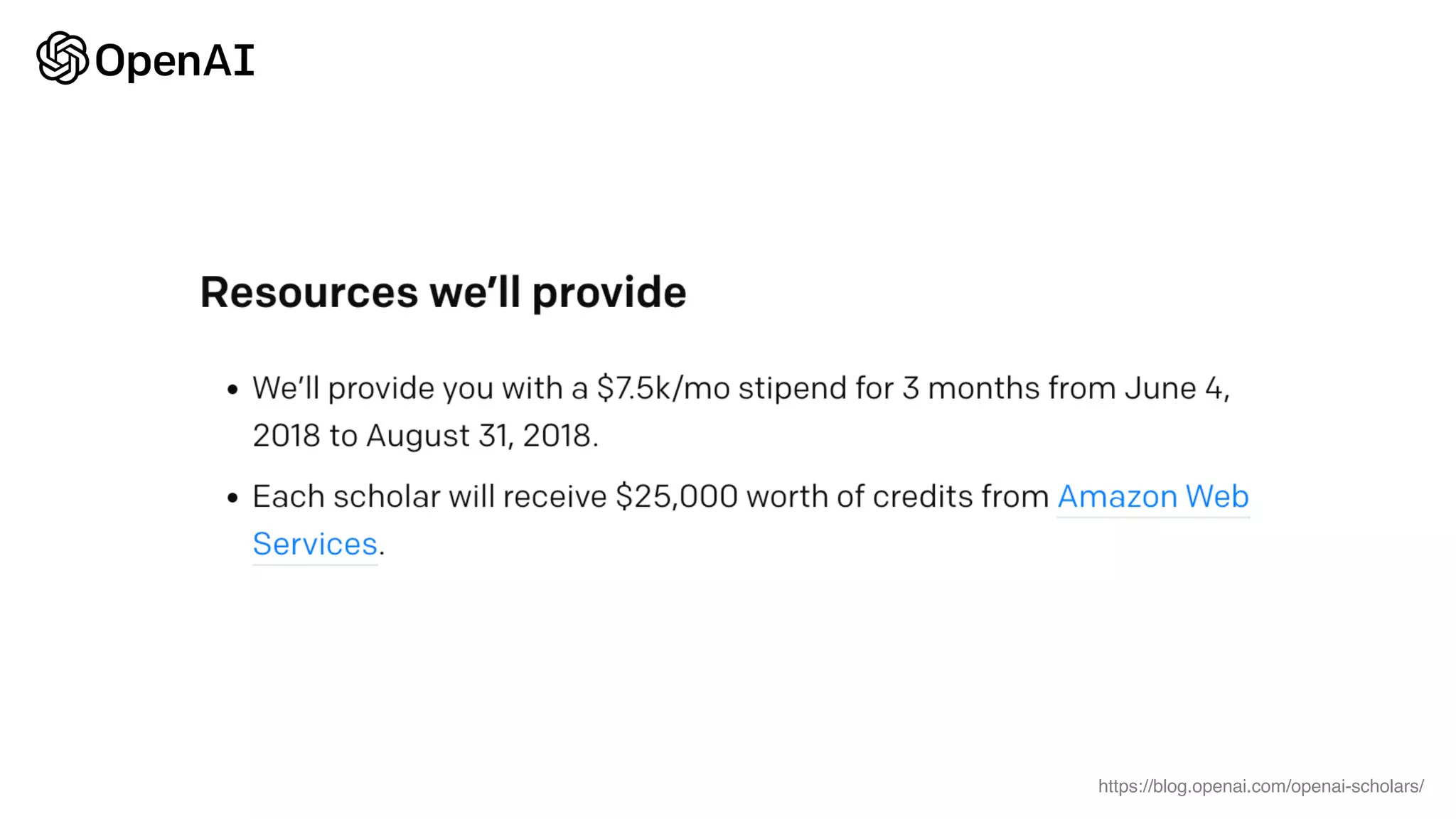

This document collects informal thoughts on implementing machine learning papers. It discusses which kinds of papers to implement (computer vision, NLP, reinforcement learning, and more), recommends specific papers and code repositories, weighs TensorFlow against PyTorch, and mentions grants and competitions for implementing papers.