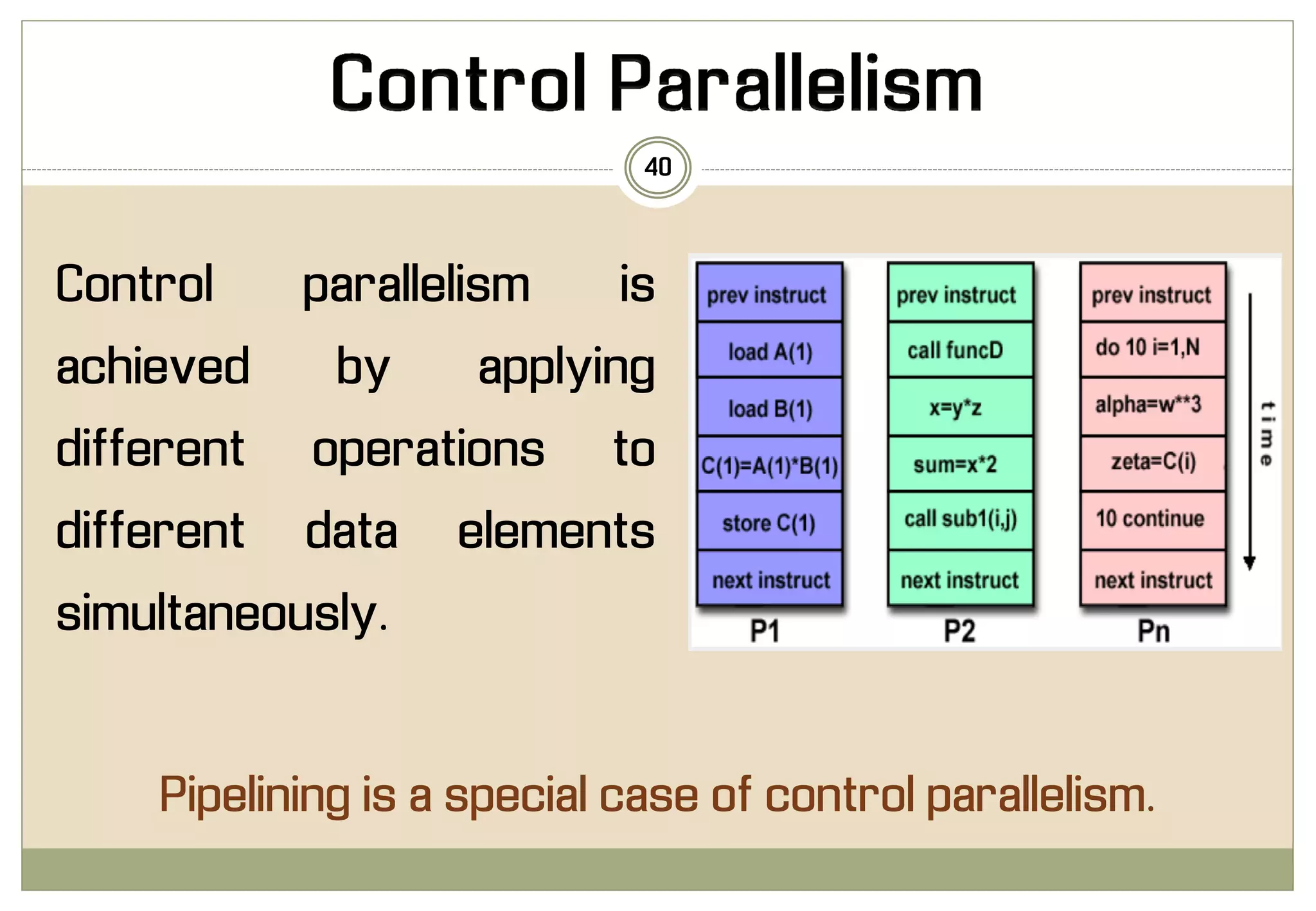

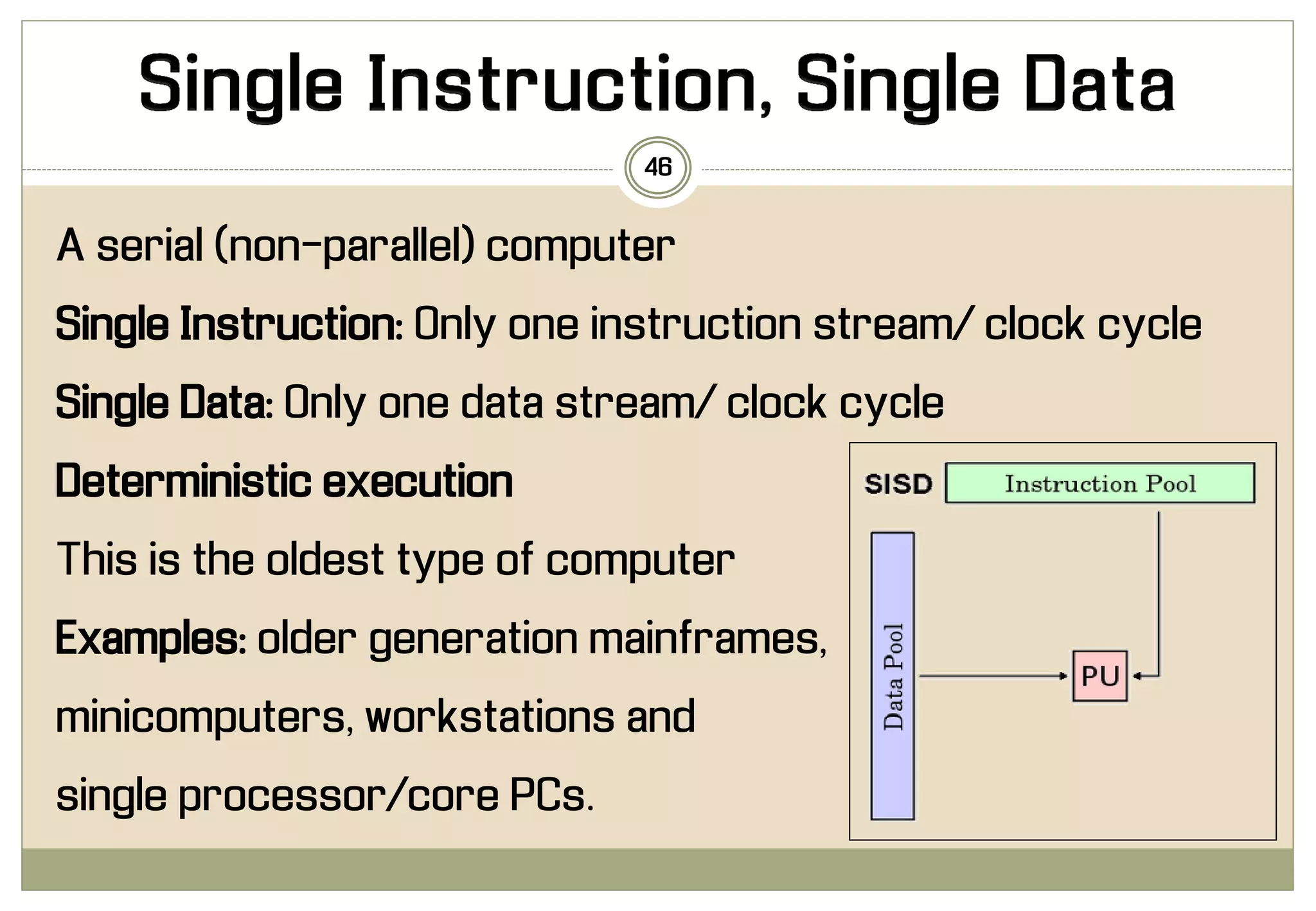

The document discusses advances in high-speed computing and the evolution of parallel computers. It highlights the limitations of traditional physical experiments and the growing reliance on numerical simulation, and it outlines the critical role of parallel computing in solving complex scientific problems. It describes various parallel computer architectures and how they are classified. It also explores the benefits of data parallelism and control parallelism and the scalability of parallel algorithms. Finally, it introduces Flynn's taxonomy, which classifies architectures by the number of concurrent instruction streams and data streams into four categories: SISD, SIMD, MISD, and MIMD.
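Flynn's taxonomy reduces to two yes/no questions: does the machine process a single instruction stream or several, and does it operate on a single data stream or several? The sketch below encodes that mapping. It assumes that counting concurrent streams is enough to place a machine in a category. The names `Flynn` and `classify` are hypothetical and are meant only as an illustration, not as an implementation taken from the source.

```python
from enum import Enum


class Flynn(Enum):
    """Flynn's four categories (hypothetical enum for illustration)."""
    SISD = "single instruction stream, single data stream"
    SIMD = "single instruction stream, multiple data streams"
    MISD = "multiple instruction streams, single data stream"
    MIMD = "multiple instruction streams, multiple data streams"


def classify(instruction_streams: int, data_streams: int) -> Flynn:
    """Map counts of concurrent instruction/data streams onto Flynn's taxonomy.

    Assumption: a count above 1 means "multiple" and a count of exactly 1
    means "single". Counts below 1 are rejected.
    """
    if instruction_streams < 1 or data_streams < 1:
        raise ValueError("stream counts must be at least 1")
    multiple_instructions = instruction_streams > 1
    multiple_data = data_streams > 1
    if not multiple_instructions and not multiple_data:
        return Flynn.SISD  # e.g. a conventional uniprocessor
    if not multiple_instructions:
        return Flynn.SIMD  # one control unit drives many processing elements
    if not multiple_data:
        return Flynn.MISD  # several instruction streams act on one data stream
    return Flynn.MIMD      # e.g. a multiprocessor or multicomputer


if __name__ == "__main__":
    for streams in [(1, 1), (1, 64), (4, 1), (8, 8)]:
        print(streams, classify(*streams).name)
```

Running the script prints one category per pair: a uniprocessor `(1, 1)` is SISD, an array of 64 processing elements under one control unit `(1, 64)` is SIMD, and eight independent processors `(8, 8)` are MIMD. MISD is included for completeness. Few machines fit it cleanly.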