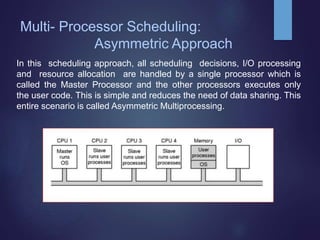

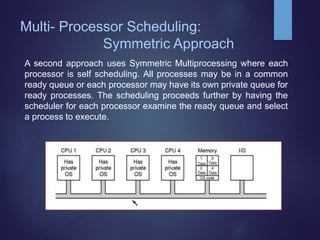

The document discusses multi-processor scheduling, explaining why it is more complex than single-processor scheduling and dividing it into asymmetric and symmetric approaches. It addresses critical issues such as locking systems, shared data, cache coherence, and processor affinity, and explains how each affects performance and data consistency. It also examines load balancing, explaining why it is needed to keep all processors effectively utilized, and describes push and pull migration as methods for redistributing work among them.
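The summary names push and pull migration without showing how they differ mechanically. Below is a minimal Python sketch, assuming each processor has its own private run queue modeled as a `deque` of task names. The function names `push_migration` and `pull_migration`, the balancing threshold (queue lengths within one task of each other), and the choice to move the task at the tail of a queue are hypothetical illustration choices, not taken from the source or from any particular kernel.

```python
from collections import deque


def push_migration(queues):
    """Push migration (hypothetical sketch): a periodic balancer moves tasks
    from the busiest run queue to the least-busy one until every queue's
    length is within one task of every other. Returns the number of moves."""
    moved = 0
    while True:
        busiest = max(range(len(queues)), key=lambda i: len(queues[i]))
        idlest = min(range(len(queues)), key=lambda i: len(queues[i]))
        if len(queues[busiest]) - len(queues[idlest]) <= 1:
            return moved
        # Moving the tail task is an arbitrary choice for this sketch.
        queues[idlest].append(queues[busiest].pop())
        moved += 1


def pull_migration(queues, idle_cpu):
    """Pull migration (hypothetical sketch): a processor whose own queue is
    empty takes one waiting task from the busiest queue. Returns the task
    moved, or None if the processor is not idle or no work is waiting."""
    if queues[idle_cpu]:
        return None  # this CPU still has its own work
    busiest = max(range(len(queues)), key=lambda i: len(queues[i]))
    if not queues[busiest]:
        return None  # every queue is empty
    task = queues[busiest].pop()
    queues[idle_cpu].append(task)
    return task


if __name__ == "__main__":
    # Three CPUs: CPU 0 is overloaded, CPU 1 is idle, CPU 2 has one task.
    cpus = [deque(["A", "B", "C", "D"]), deque(), deque(["E"])]
    print(push_migration(cpus))      # 2
    print([list(q) for q in cpus])   # [['A', 'B'], ['D', 'C'], ['E']]

    # Two CPUs: CPU 1 goes idle and pulls work from CPU 0.
    cpus = [deque(["A", "B", "C"]), deque()]
    print(pull_migration(cpus, idle_cpu=1))  # C
```

The two functions differ in who initiates the move: push migration runs as a separate balancing pass over all queues, while pull migration is triggered by the idle processor itself. A real scheduler would also weigh processor affinity before migrating a task, since moving it discards whatever cache state it had built up on its original processor; this sketch ignores that cost.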