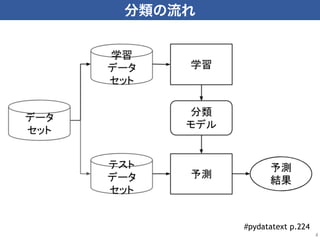

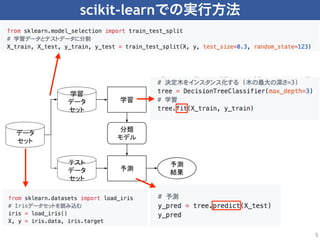

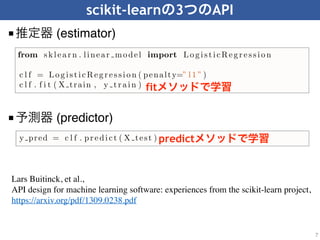

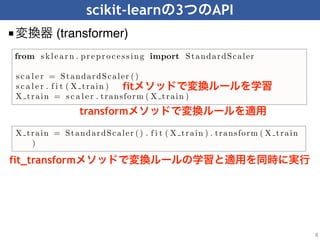

Scikit-learn is a popular machine learning library for Python that provides simple and efficient tools for data mining and data analysis. It includes algorithms for classification, regression, clustering, and dimensionality reduction. The API is built around a small set of consistent interfaces: estimators learn from data through `fit`, predictors add `predict`, and transformers add `transform`. Because every algorithm follows these conventions, users can swap one algorithm or preprocessing technique for another with minimal changes to the surrounding code.
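As a rough illustration of that interchangeability, the sketch below trains three different classifiers through the same `fit` and `score` calls. The dataset (Iris), the choice of classifiers, the hyperparameters, and the variable names `candidates` and `scores` are all assumptions made for this example, not something the text above prescribes.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

# Load a small built-in dataset (an assumption for this example).
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Three unrelated algorithms that all expose the same estimator interface.
candidates = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "k_nearest_neighbors": KNeighborsClassifier(n_neighbors=5),
    "decision_tree": DecisionTreeClassifier(random_state=0),
}

scores = {}
for name, classifier in candidates.items():
    # StandardScaler is a transformer; the classifier is a predictor.
    # The pipeline chains them and is itself an estimator with fit/score.
    model = make_pipeline(StandardScaler(), classifier)
    model.fit(X_train, y_train)
    scores[name] = model.score(X_test, y_test)  # mean accuracy on held-out data
    print(f"{name}: {scores[name]:.3f}")
```

Only the dictionary entries change between models; the loop body is identical for each one. The same pattern applies to preprocessing: `StandardScaler` could be replaced with another transformer without touching the rest of the code.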