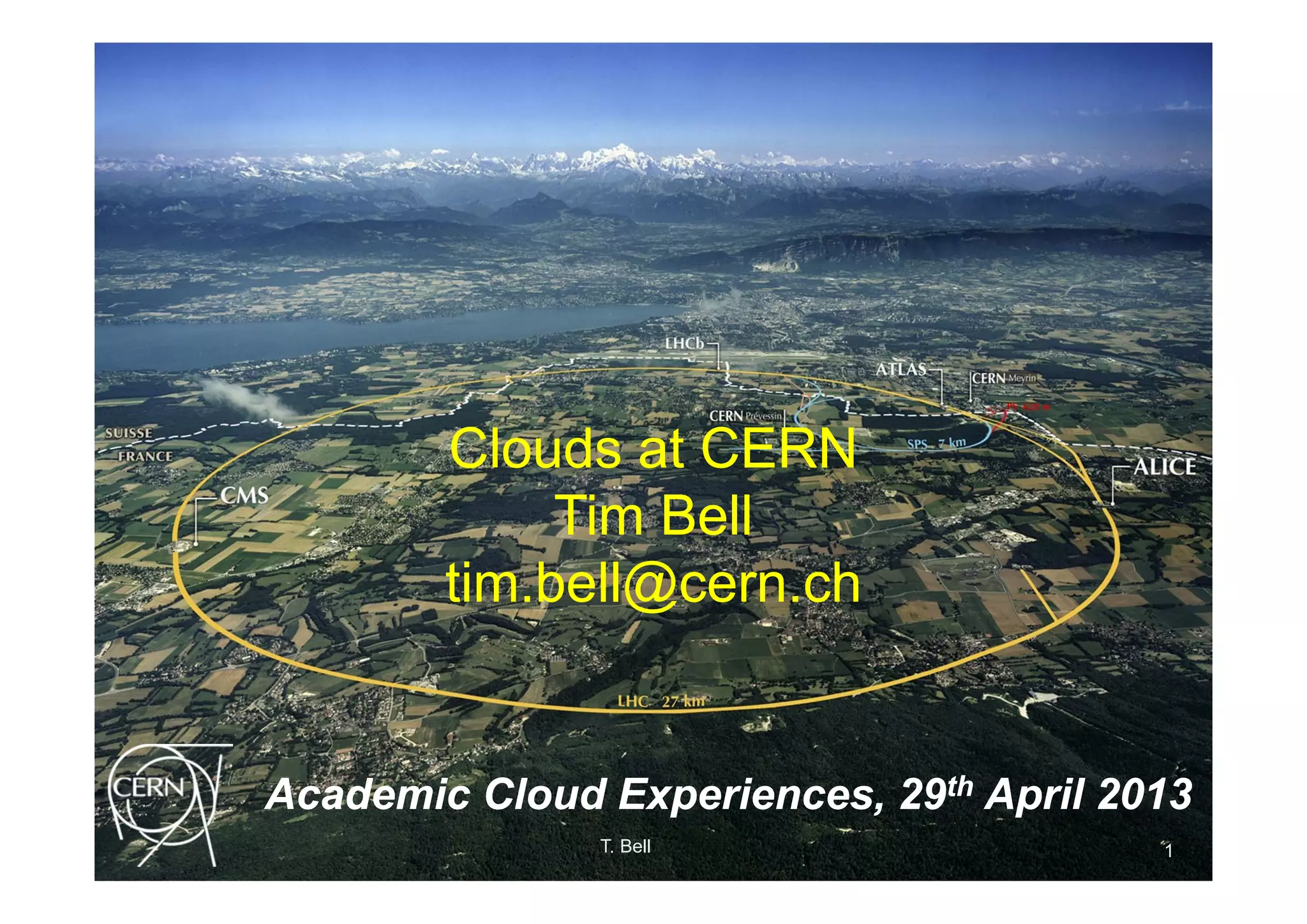

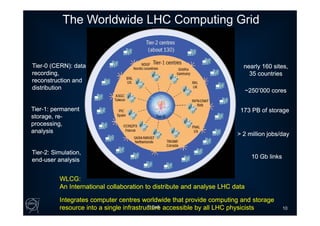

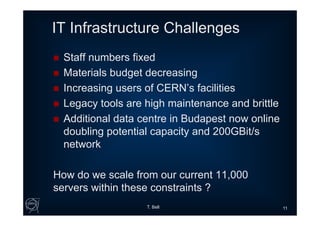

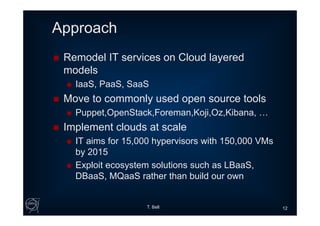

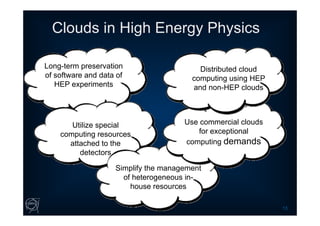

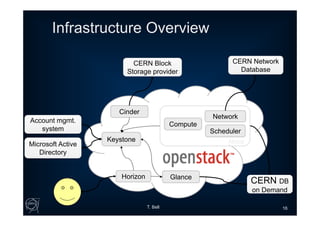

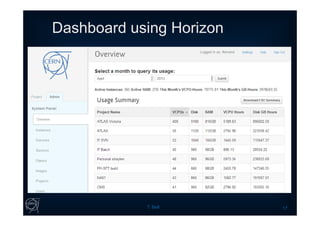

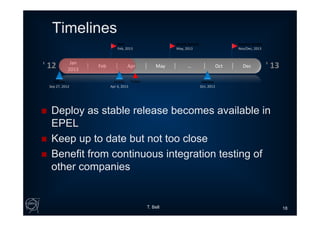

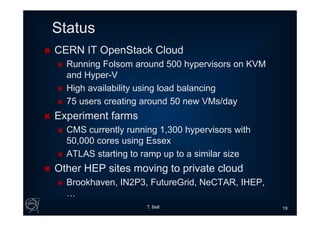

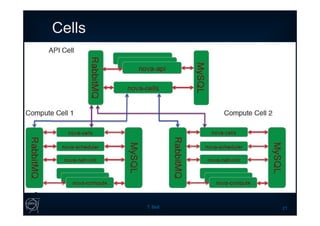

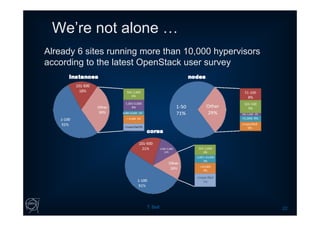

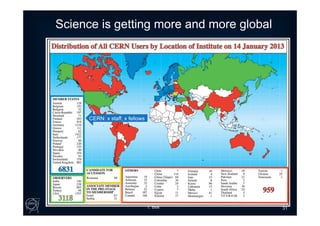

This document discusses cloud computing at CERN. It gives background on CERN: founded in 1954 by 12 European states for "Science for Peace", it now has 20 member states, around 2,300 staff, 1,000 other paid personnel, and over 11,000 users. The document describes the challenge of scaling IT infrastructure with fixed staff and budgets, and outlines CERN's approach of moving to cloud models using open source tools. The status section details OpenStack deployments at CERN and its experiments. Next steps include moving to new OpenStack releases and using cells to scale capacity.