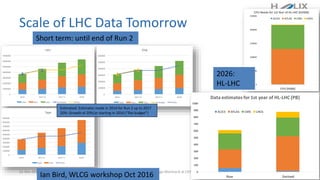

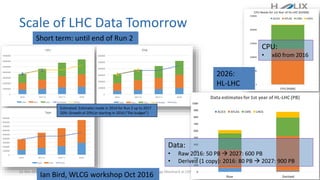

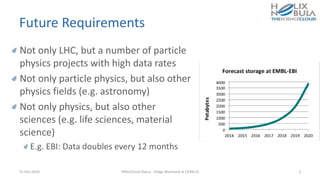

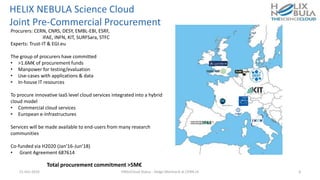

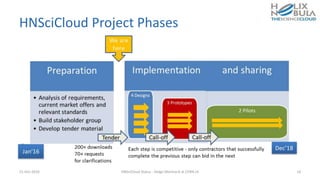

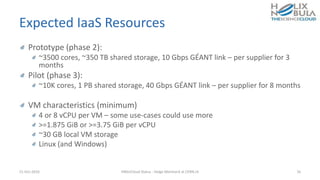

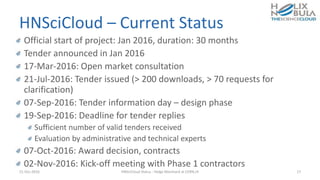

The Helix Nebula Science Cloud project aims to develop a hybrid cloud platform for the European research community, with a budget exceeding €5 million that is co-funded by the European Commission. The platform is expected to extend the computing capabilities of scientific research infrastructures, particularly for handling the large volumes of data produced by projects such as the Large Hadron Collider (LHC). The initiative addresses the technical challenges of integrating public cloud services, and it also aims to adapt procurement processes so that the research sector can make better use of those services.