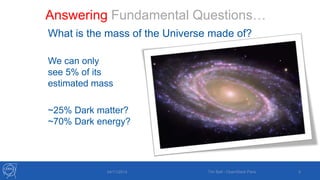

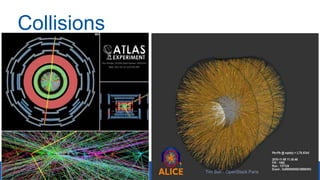

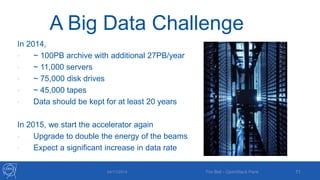

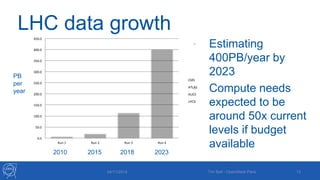

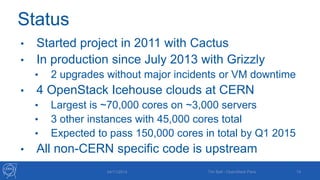

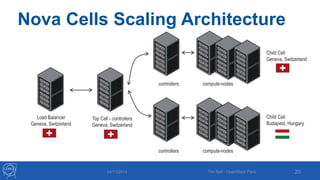

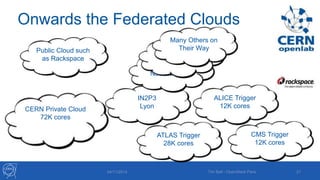

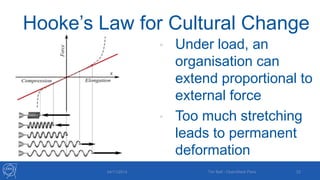

Tim Bell gave a presentation in Paris on 4 November 2014 about using OpenStack at CERN to help answer fundamental physics questions. Key challenges discussed included the volume of data generated by particle collisions, which is expected to grow to 400 PB per year by 2023. OpenStack has been in production at CERN since 2013 and is used across multiple clouds totaling over 150,000 cores. The presentation also covered CERN's experience migrating to OpenStack and the cultural barriers to adoption.