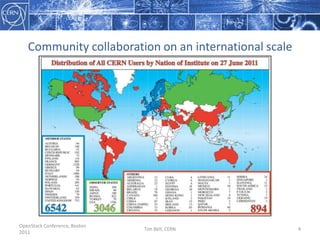

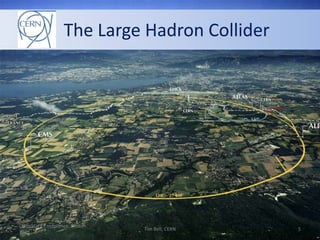

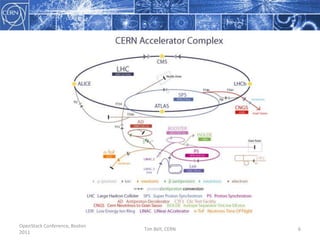

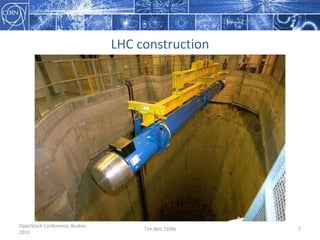

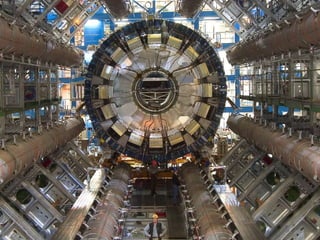

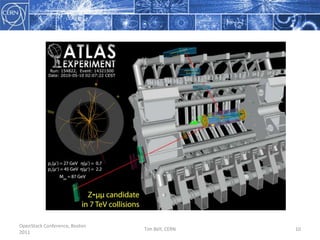

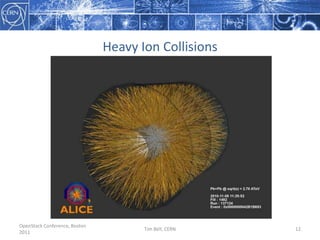

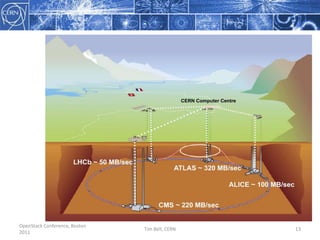

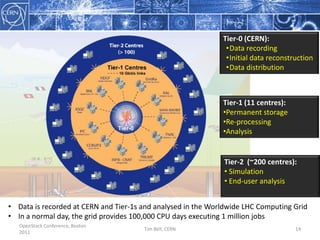

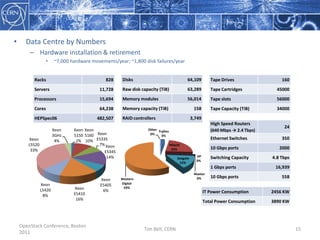

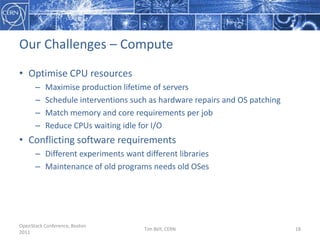

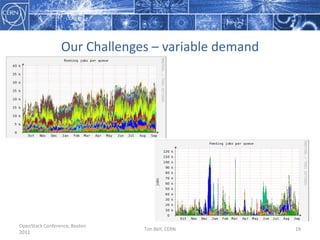

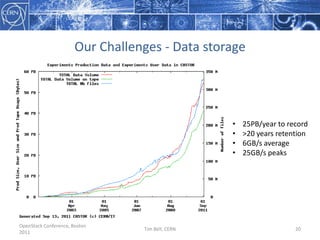

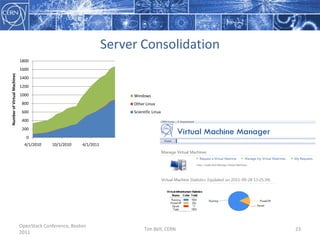

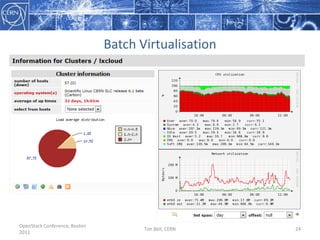

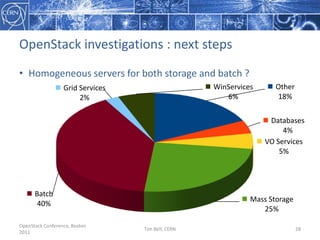

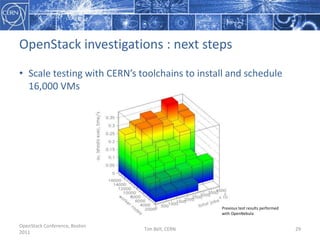

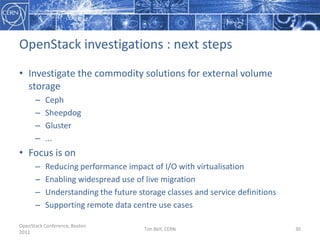

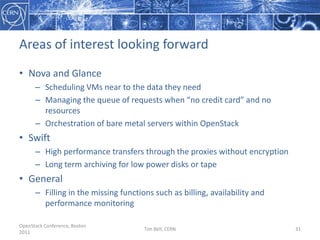

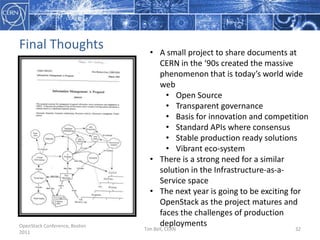

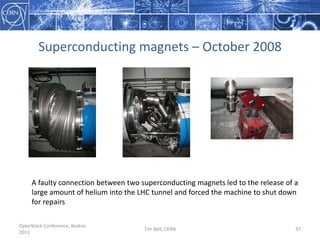

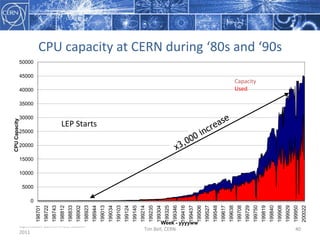

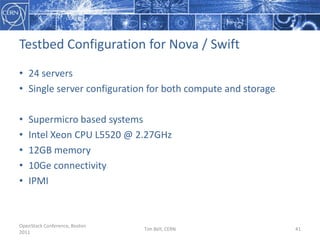

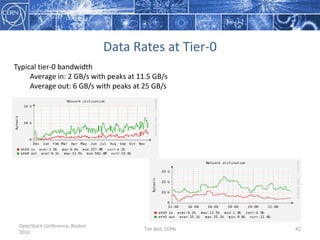

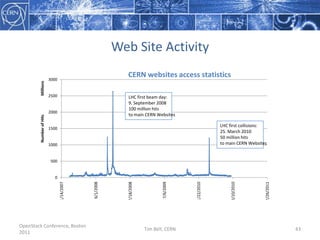

The document discusses CERN's transition towards an agile infrastructure built on OpenStack. It describes the organization's role in fundamental physics and the challenges of managing the vast amounts of data produced by the Large Hadron Collider (LHC). It outlines the current state of CERN's computing infrastructure, including its virtualization efforts, and explains why an Infrastructure as a Service (IaaS) model is needed to increase efficiency and better support global collaboration. The document also highlights ongoing challenges: data storage, optimizing compute resources, and the need for stable, scalable solutions in research environments.