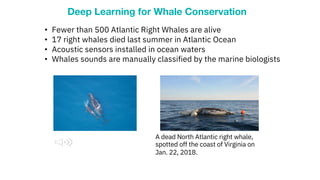

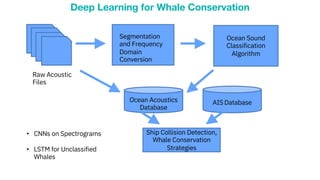

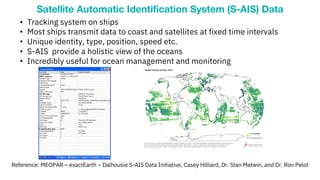

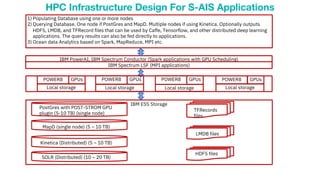

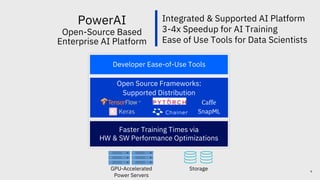

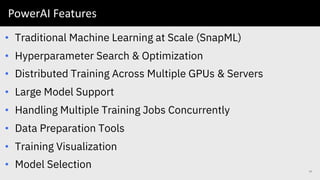

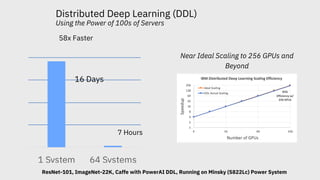

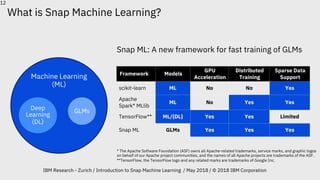

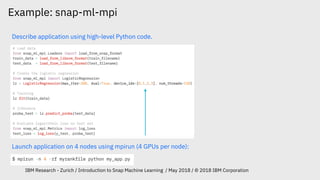

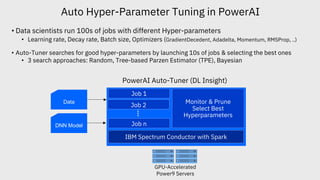

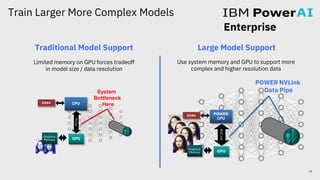

This document discusses several use cases for deep learning in ocean engineering, including whale conservation using acoustic data and AIS (Automatic Identification System) ship-tracking data, as well as cognitive applications of AIS data. It describes how acoustic sensors are used to monitor whale sounds, which are currently classified manually, and how deep learning could classify whale sounds automatically from raw audio files. It also discusses how AIS and satellite AIS data provide ship-tracking information that deep learning could use for applications such as ship collision detection and whale conservation strategies. Finally, it summarizes an HPC infrastructure design for AIS applications built on IBM Power Systems, GPUs, and AI software such as PowerAI.
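Deep-learning audio classifiers commonly operate on a time-frequency representation rather than on raw waveform samples. The sketch below shows one such front end: a log-power spectrogram computed with NumPy from a mono audio clip. It is an illustration under stated assumptions, not the method used in the slides. The function name `log_spectrogram`, the frame length, the hop size, and the synthetic test tone are all hypothetical choices, and a real pipeline would add a trained classifier (for example a CNN) on top of this output.

```python
import numpy as np


def log_spectrogram(samples, sample_rate, frame_len=1024, hop=512):
    """Hypothetical front end: log10 power spectrogram of a mono clip.

    Returns (freqs, log_power), where log_power has shape
    (n_frames, frame_len // 2 + 1) and freqs holds the bin centres in Hz.
    """
    samples = np.asarray(samples, dtype=np.float64)
    if samples.ndim != 1:
        raise ValueError("expected mono (1-D) audio")
    if len(samples) < frame_len:
        raise ValueError("clip is shorter than one frame")

    # Number of full frames that fit when stepping by `hop` samples.
    n_frames = 1 + (len(samples) - frame_len) // hop
    window = np.hanning(frame_len)

    # Slice the signal into overlapping frames and taper each with a Hann window.
    frames = np.stack(
        [samples[i * hop : i * hop + frame_len] * window for i in range(n_frames)]
    )

    # Power spectrum of each frame (real FFT keeps non-negative frequencies only).
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / sample_rate)

    # Small floor avoids log10(0) on silent frames.
    return freqs, np.log10(power + 1e-10)


if __name__ == "__main__":
    # Synthetic 2-second, 200 Hz tone sampled at 2 kHz (illustrative values only).
    sr = 2000
    t = np.arange(2 * sr) / sr
    clip = np.sin(2 * np.pi * 200 * t)
    freqs, spec = log_spectrogram(clip, sr)
    print(spec.shape, freqs[np.argmax(spec.mean(axis=0))])
```

Each row of the resulting matrix is one time frame, so the whole clip can be fed to an image-style classifier. Frame length trades frequency resolution (`sample_rate / frame_len` Hz per bin) against time resolution, and would need tuning to the frequency range of the calls being classified.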