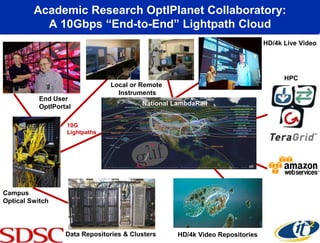

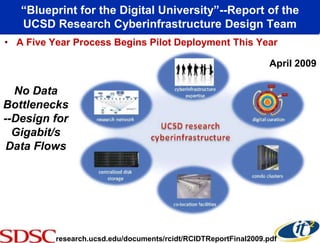

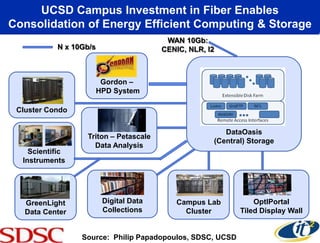

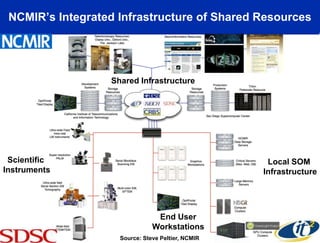

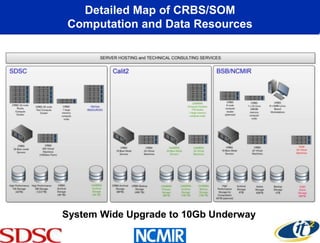

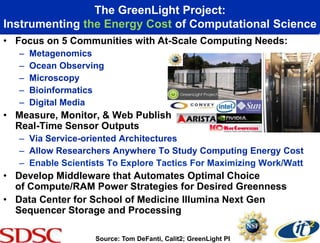

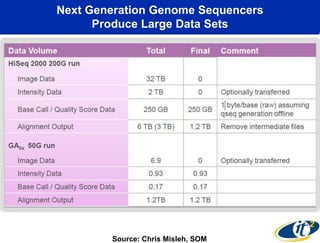

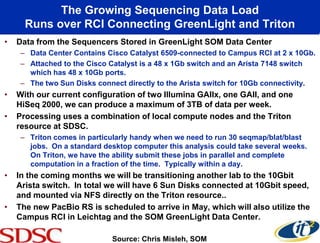

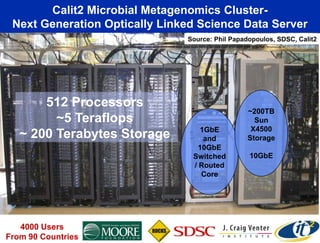

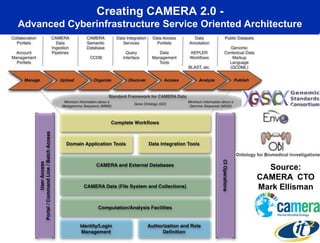

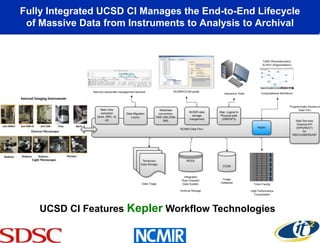

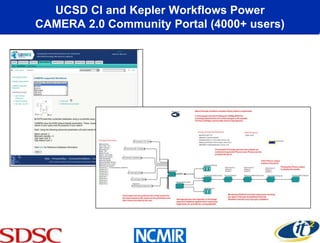

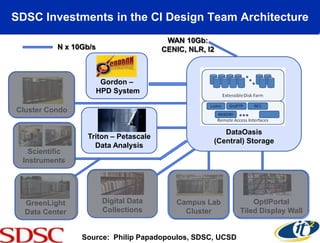

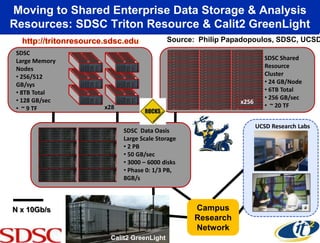

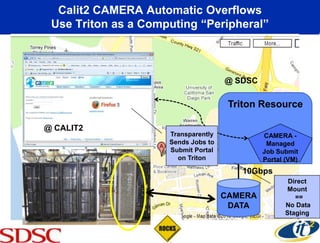

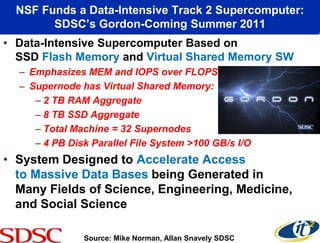

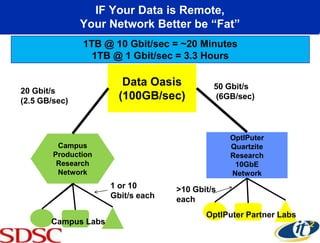

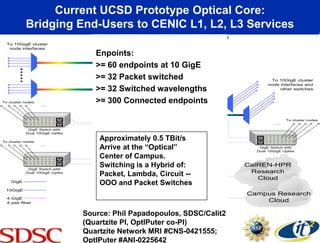

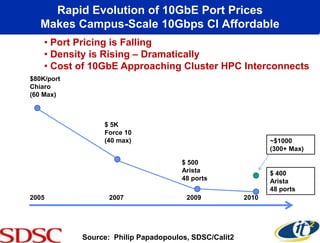

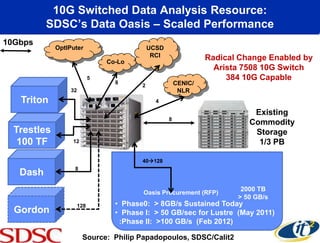

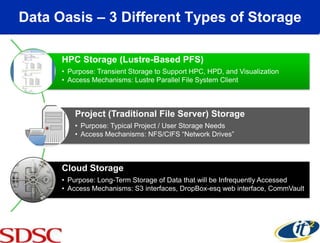

The document outlines the development of a high-performance cyberinfrastructure to support data-driven biomedical research at UCSD, including the deployment of a 10 Gbps network and substantial data storage capacity. It discusses the challenges of storing data at scale, the research applications the infrastructure is evolving to serve, and specific projects that use it, such as genomic sequencing and microbial metagenomics. Throughout, the initiative emphasizes integrating advanced computing resources so that data can be managed and analyzed efficiently across a range of scientific fields.