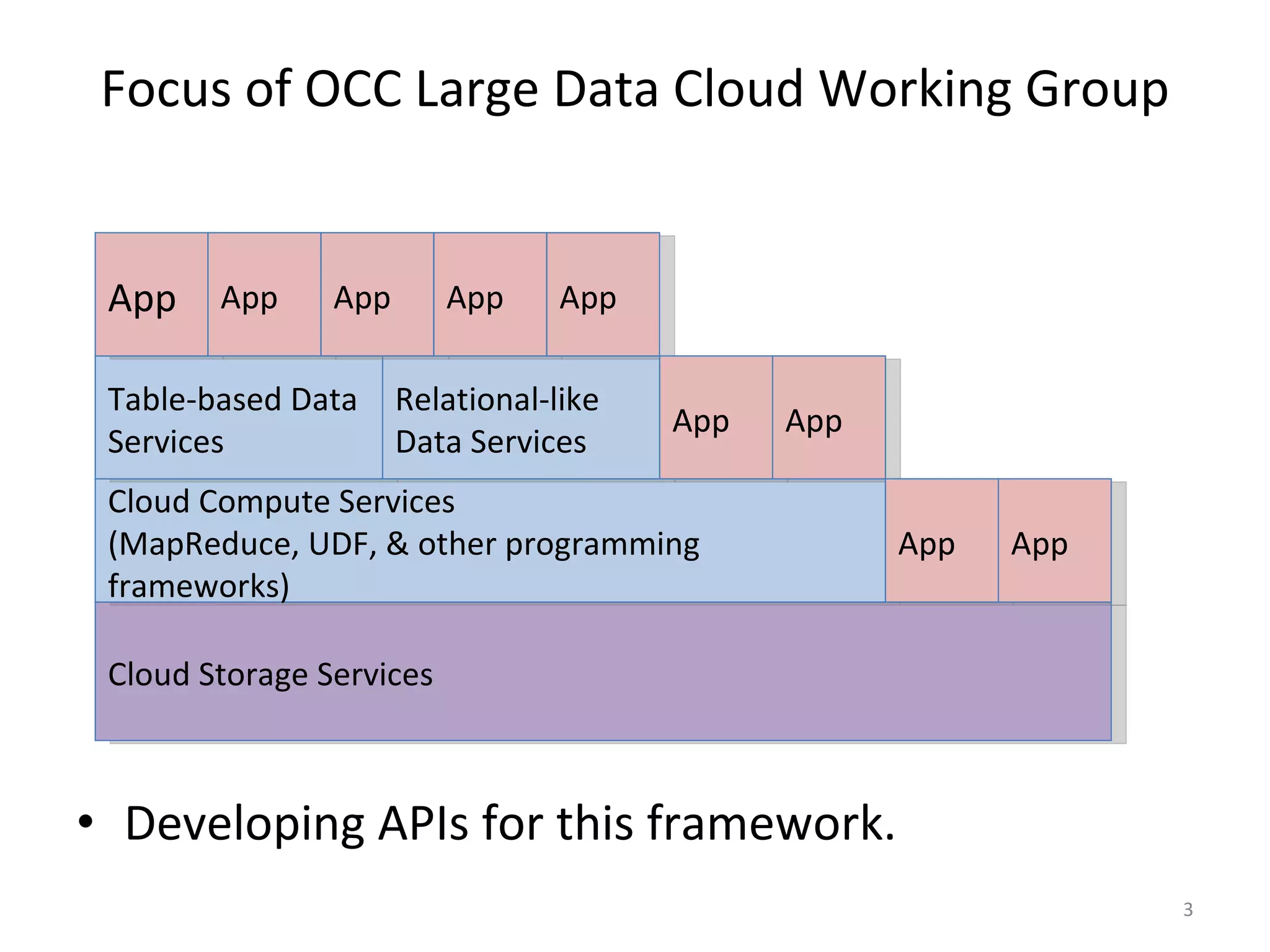

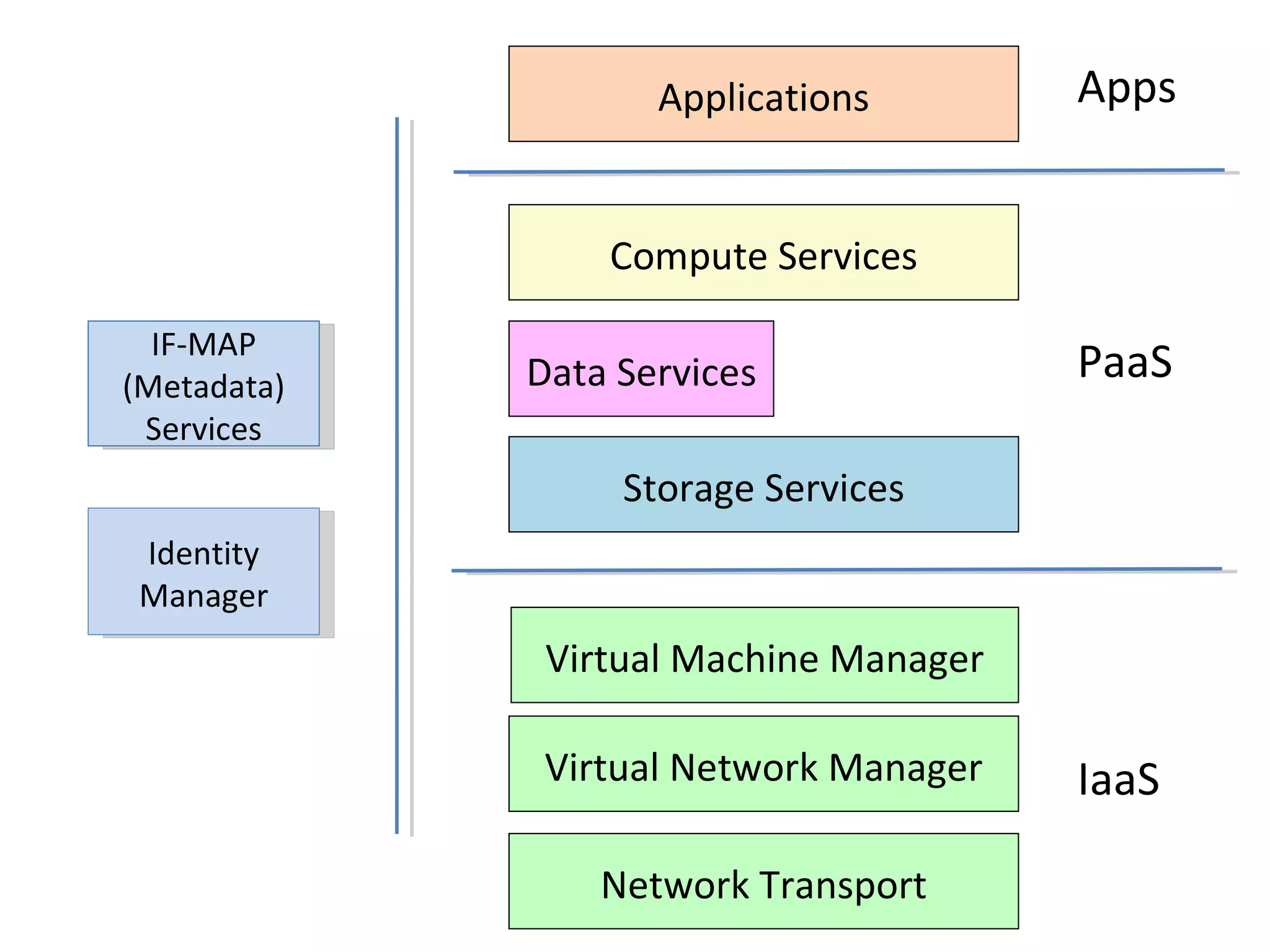

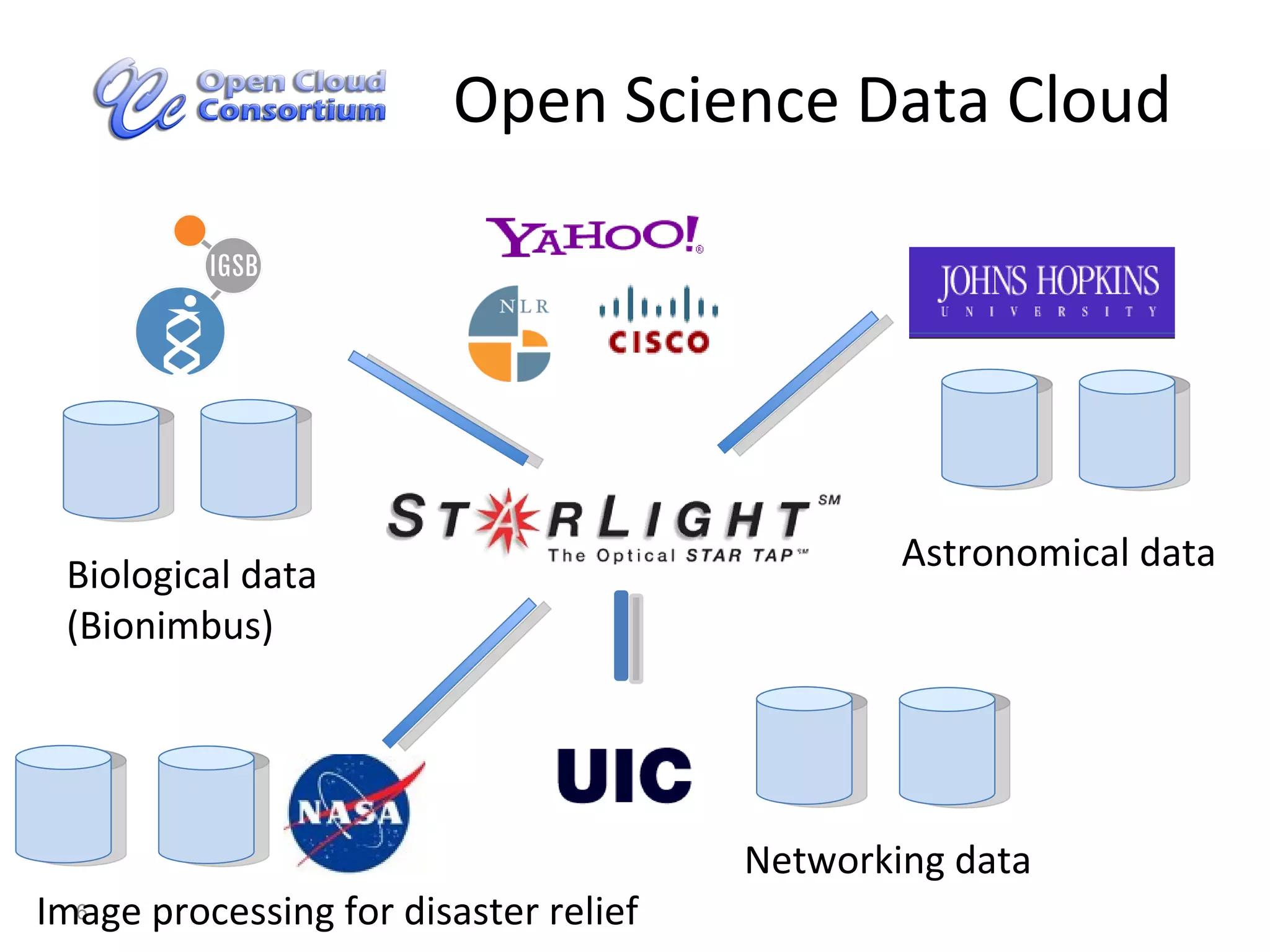

This document describes the Open Cloud Consortium's work on large-scale, on-demand image processing for disaster relief, with a focus on cloud technology frameworks and data interoperability. It outlines the capabilities of a testbed that supports high-performance image processing applications and takes a structured approach to managing large data sets. The next step is to establish a persistent cloud infrastructure for image processing by May 15, 2010, to support disaster relief efforts.

![For More Information [email_address] www.opencloudconsortium.org](https://image.slidesharecdn.com/cloud-based-image-processing-for-disaster-relief-10-v1-100228160652-phpapp02/75/Large-Scale-On-Demand-Image-Processing-For-Disaster-Relief-12-2048.jpg)