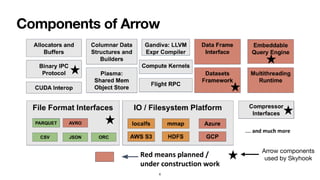

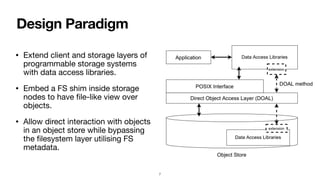

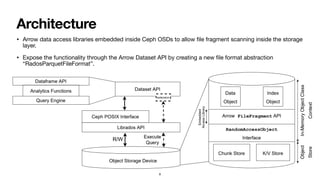

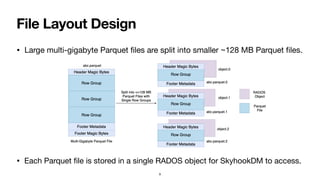

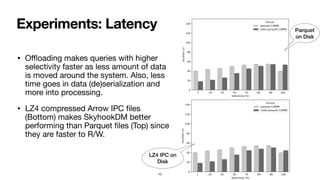

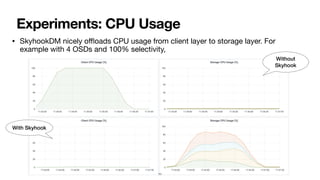

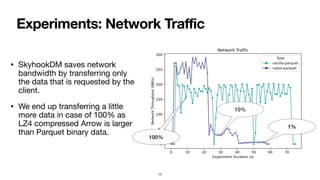

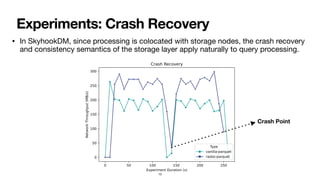

This document introduces SkyhookDM, a system that offloads computation from clients to storage nodes. It does this by embedding Apache Arrow data access libraries inside Ceph object storage devices (OSDs). This allows large Parquet files to be scanned and processed directly on the OSDs without needing to move all the data to clients. Experiments show SkyhookDM reduces latency, CPU usage, and network traffic compared to traditional approaches. It has also been integrated with the Coffea analysis framework. Ongoing work involves optimizing Arrow serialization for network transfers.

![41.5%

30.5%

24.6%

3

.

3

4

%

0.103%

0.0324%

0.00855%

0.00511%

[6] Serialize Result Table

[5] Scan Parquet Data

[7] Result Transfer

[4] Disk I/O

[3] Deserialize Scan Request

[1] Stat Fragment

[8] Deserialize Result Table

[2] Serialize Scan Request

Sending uncompressed IPC

Ongoing Work

• Arrow’s memory layout requires internal memory copies to serialize it to a

contiguous on the wire format and this has a very high overhead.

48.3%

29.5%

11.7%

5.37%

5.11%

0.0513%

0.0304%

0.00771%

[5] Scan Parquet Data

[6] Serialize Result Table

[7] Result Transfer

[8] Deserialize Result Table

[4] Disk I/O

[3] Deserialize Scan Request

[1] Stat Fragment

[2] Serialize Scan Request

Sending LZ4 compressed IPC

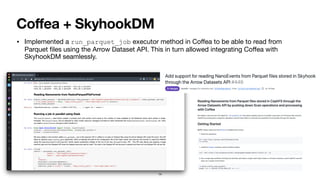

• Collaborating with ServiceX and Co

ff

ea team to integrate SkyhookDM into the

larger analysis facility ecosystem.

15](https://image.slidesharecdn.com/chakraborty30juneiris-hep-210630183224/85/SkyhookDM-Towards-an-Arrow-Native-Storage-System-15-320.jpg)
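The serialization cost described under Ongoing Work can be illustrated with a small, self-contained sketch. This is a toy stand-in, not Arrow's IPC format or SkyhookDM's code: the wire layout, the function names `serialize_columns` and `deserialize_columns`, and the use of `zlib` in place of LZ4 (which is not in the Python standard library) are all assumptions made for illustration. It shows why columns held in separate buffers must be copied to form one contiguous message, and how optional compression trades bytes on the wire for extra work on both ends.

```python
import struct
import zlib
from array import array


def serialize_columns(columns, compress=False):
    """Copy separately allocated column buffers into one contiguous message.

    Hypothetical wire layout (NOT Arrow IPC): a 1-byte compression flag,
    then a 4-byte column count, one 8-byte length per column, and the
    column bodies back to back.
    """
    bodies = [col.tobytes() for col in columns]        # copy 1: one bytes object per column
    header = struct.pack("<I", len(bodies))
    header += b"".join(struct.pack("<Q", len(b)) for b in bodies)
    payload = header + b"".join(bodies)                # copy 2: the contiguous message
    if compress:
        payload = zlib.compress(payload, 1)            # zlib stands in for LZ4 here
    return struct.pack("<B", int(compress)) + payload


def deserialize_columns(message, typecode="q"):
    """Rebuild the columns from a message produced by serialize_columns."""
    payload = message[1:]
    if message[0] == 1:
        payload = zlib.decompress(payload)             # extra receiver-side work
    view = memoryview(payload)
    (ncols,) = struct.unpack_from("<I", view, 0)
    lengths = struct.unpack_from(f"<{ncols}Q", view, 4)
    offset = 4 + 8 * ncols
    columns = []
    for length in lengths:
        col = array(typecode)
        col.frombytes(view[offset:offset + length])
        columns.append(col)
        offset += length
    return columns


# Two 64-bit integer columns held in separate buffers, as in a columnar table.
columns = [array("q", range(100_000)), array("q", [7] * 100_000)]
plain = serialize_columns(columns)
packed = serialize_columns(columns, compress=True)

assert deserialize_columns(plain) == columns
assert deserialize_columns(packed) == columns
print(len(plain), len(packed))
```

In this sketch the compressed message is smaller, so less data crosses the network, but the receiver has to decompress before it can read any column. That is at least consistent with the slide, where Result Transfer has a smaller share and Deserialize Result Table a larger one in the LZ4 case.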