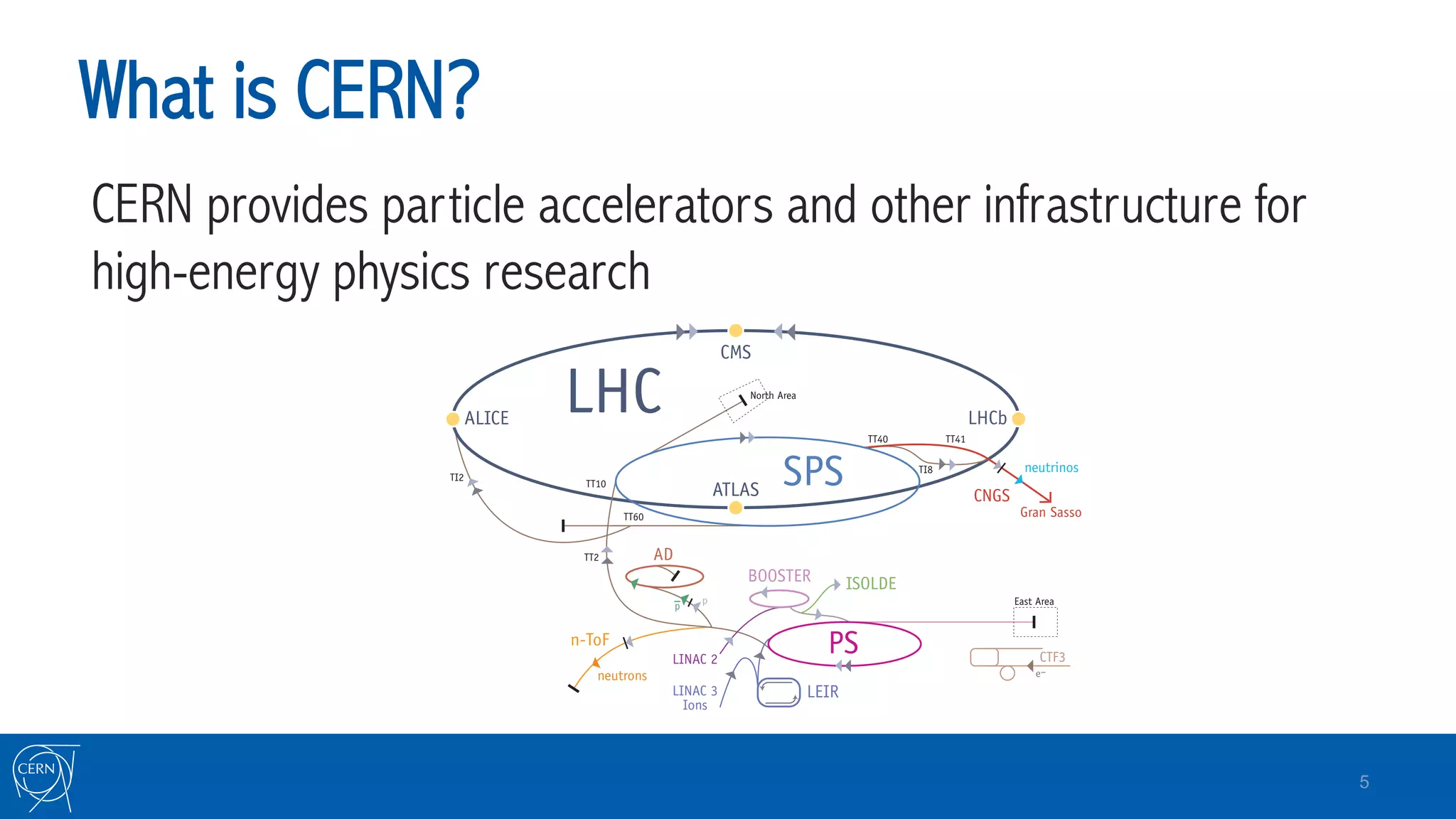

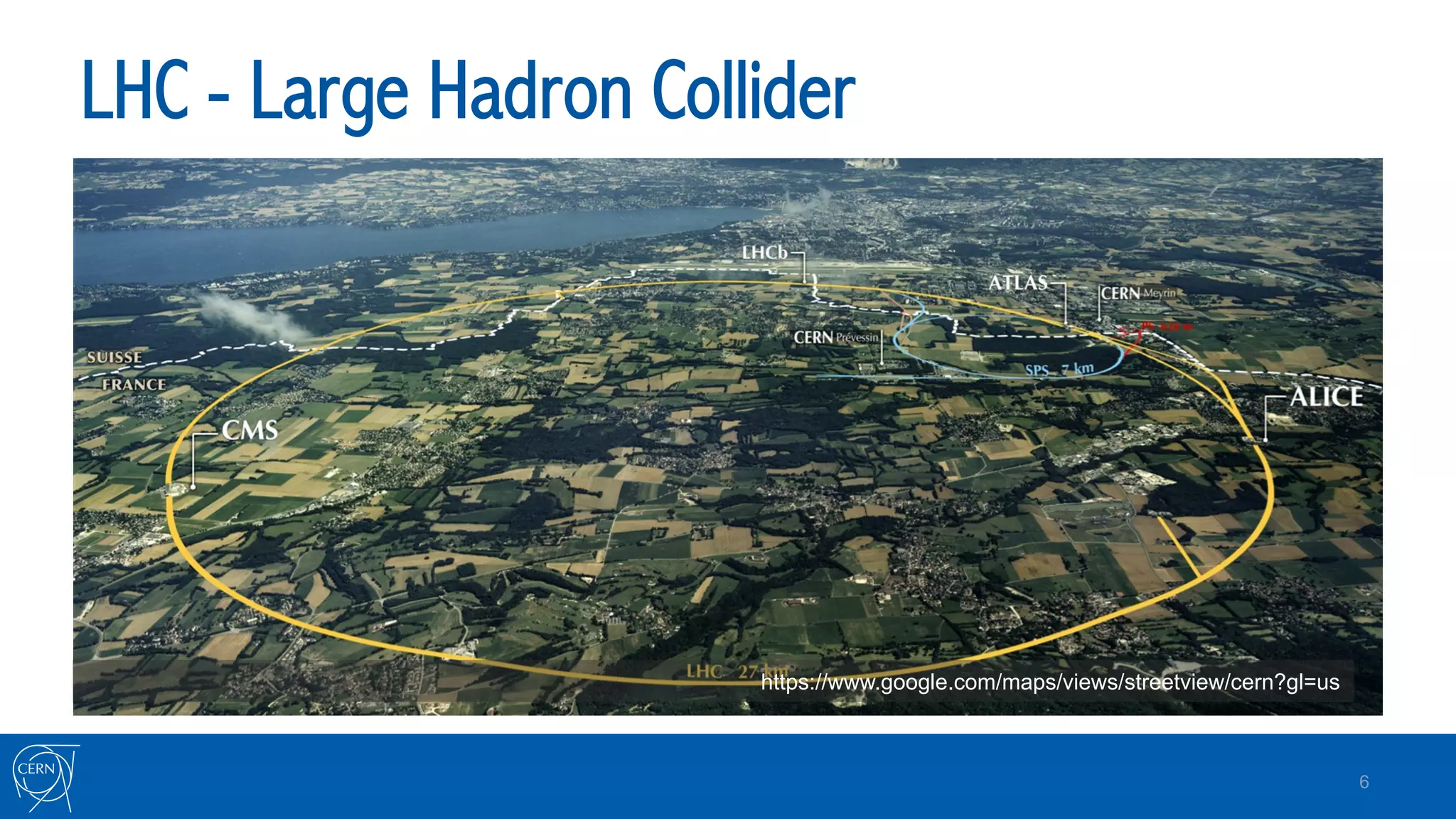

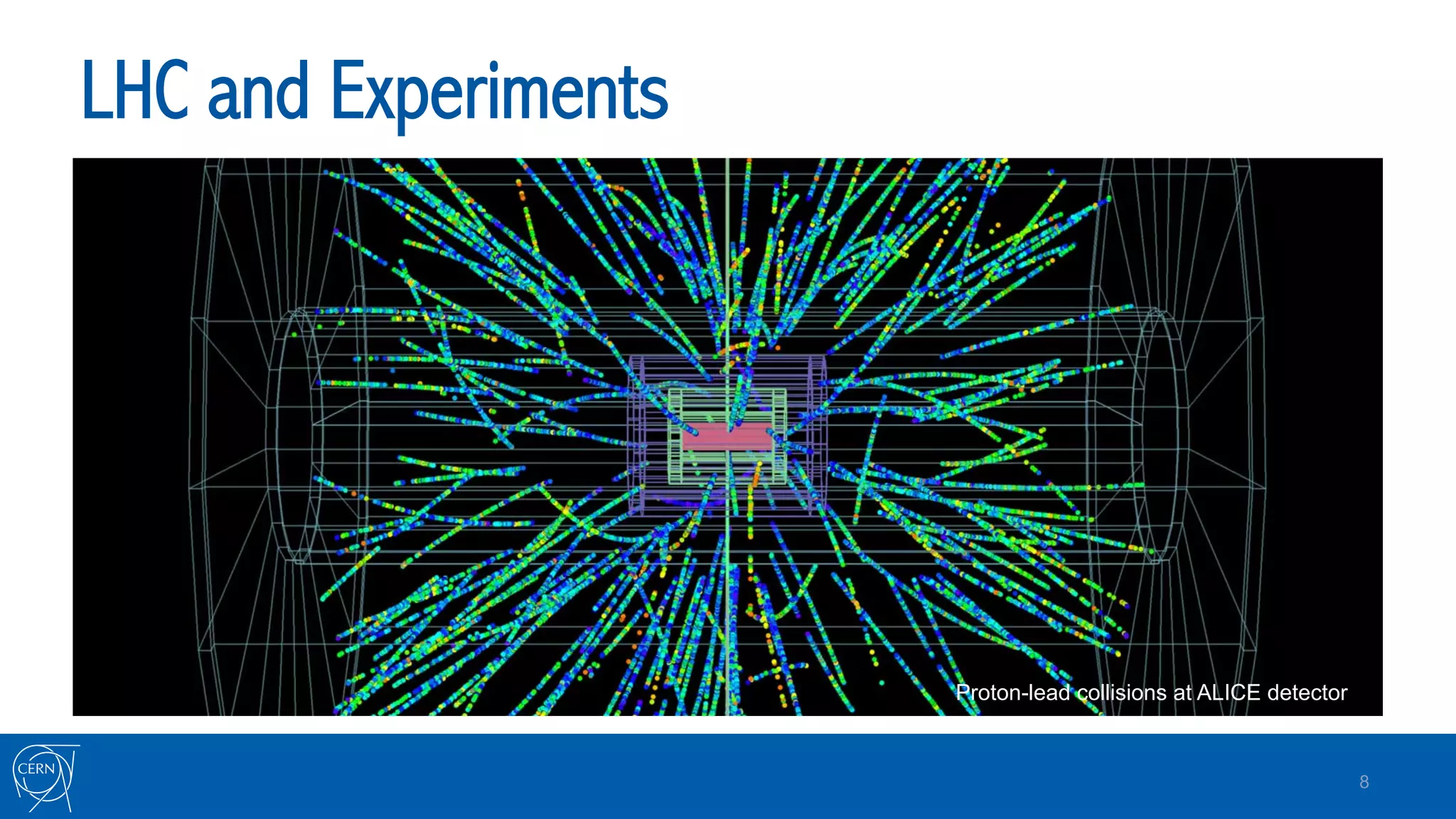

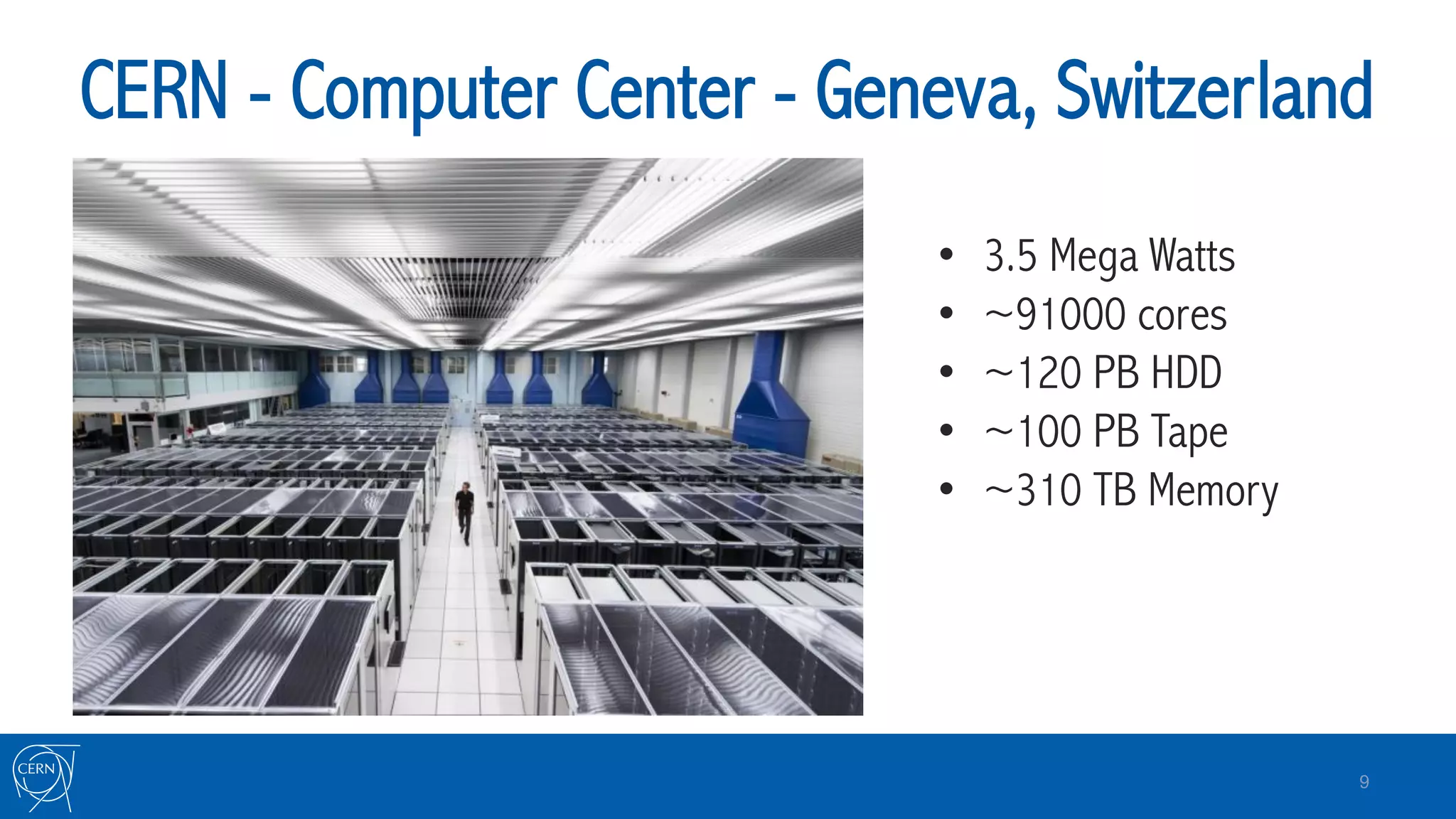

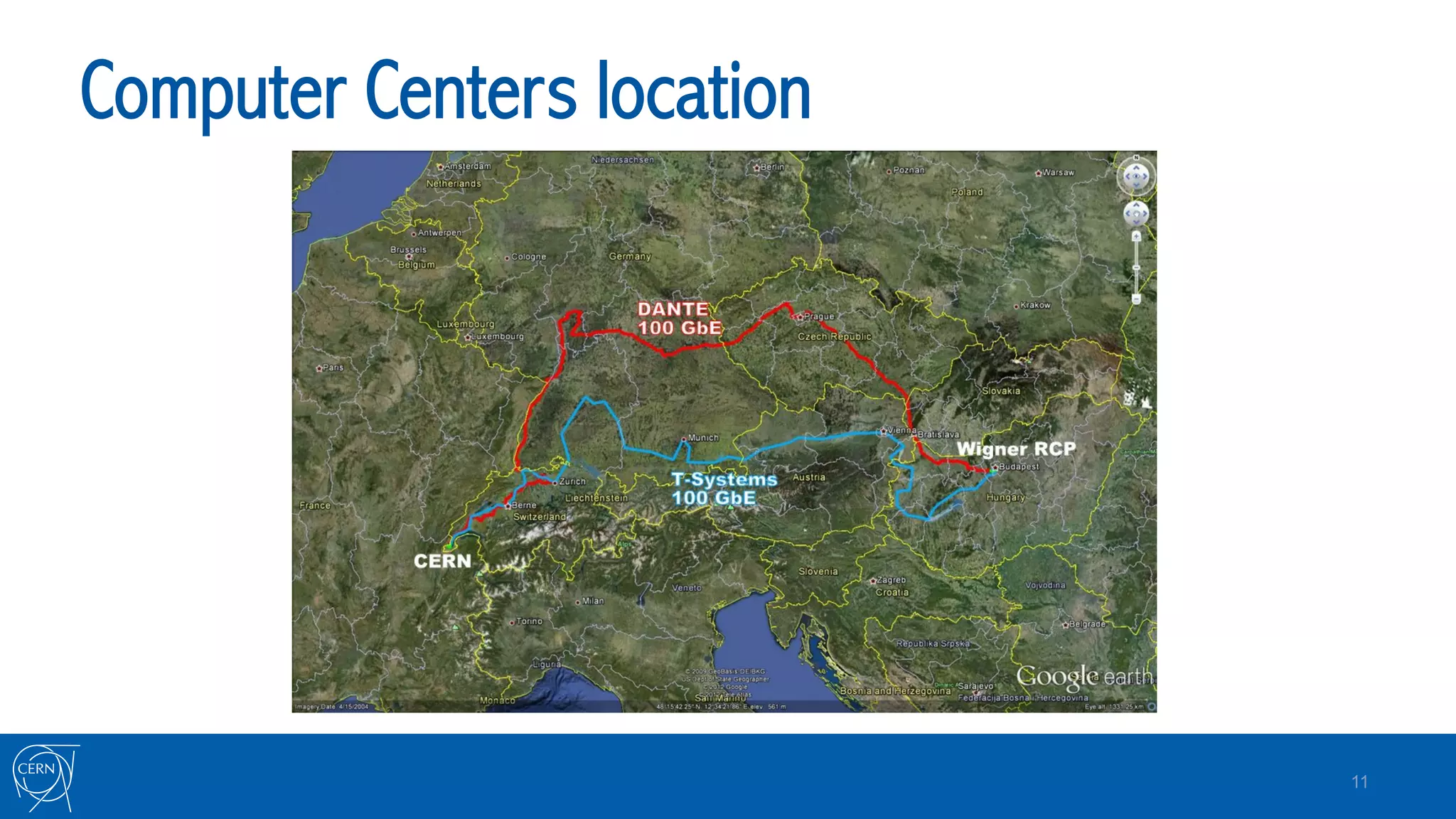

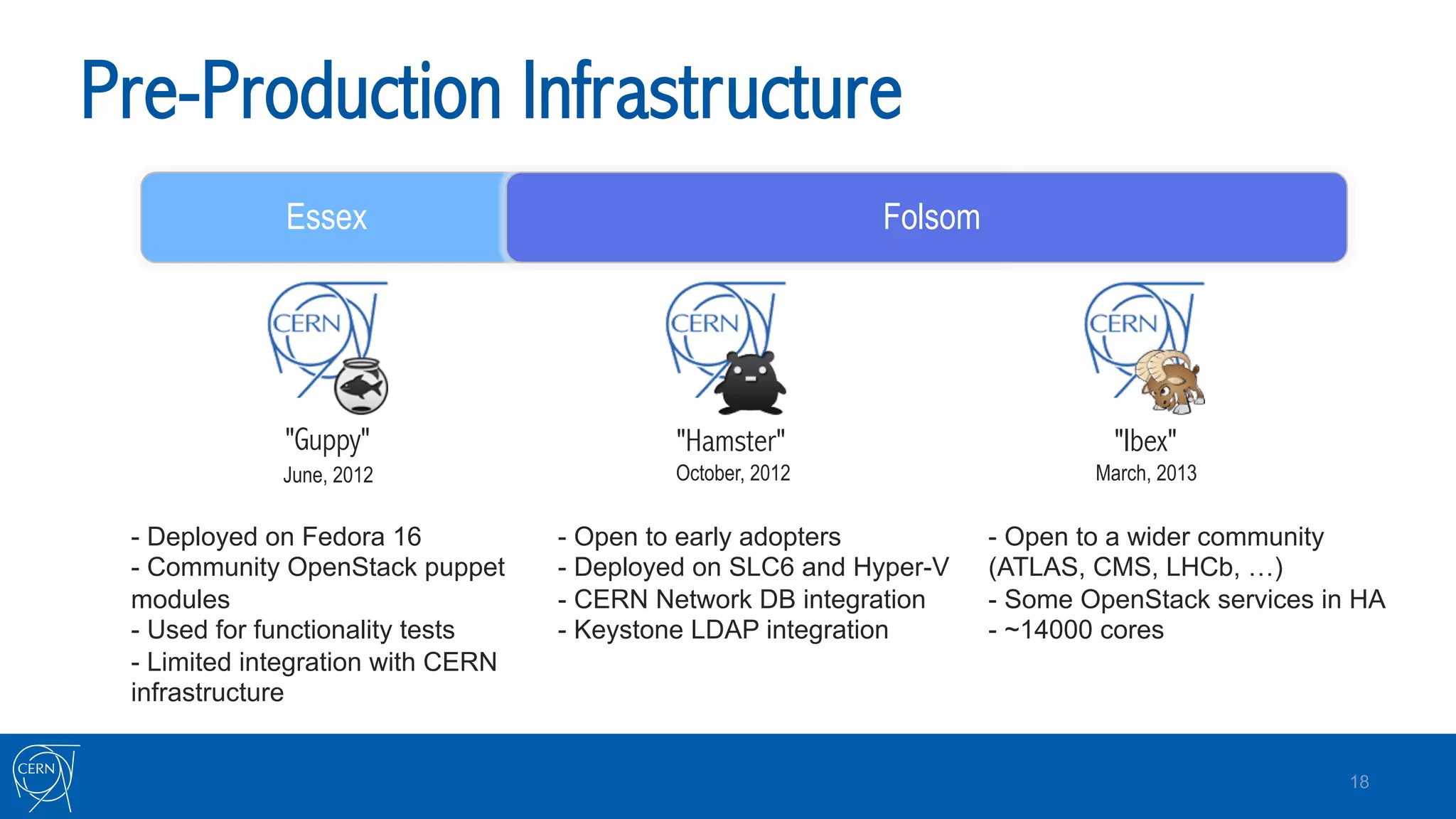

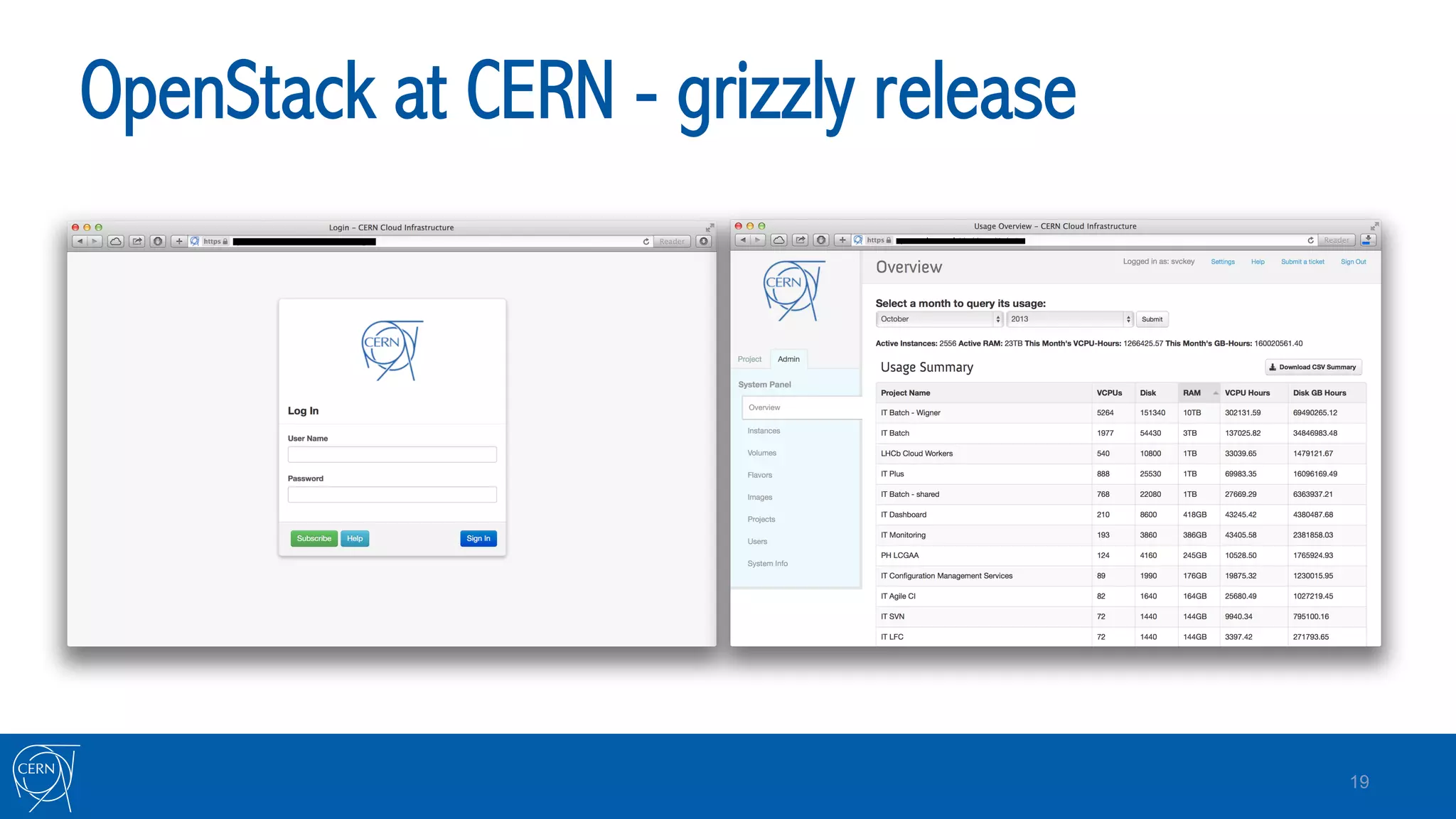

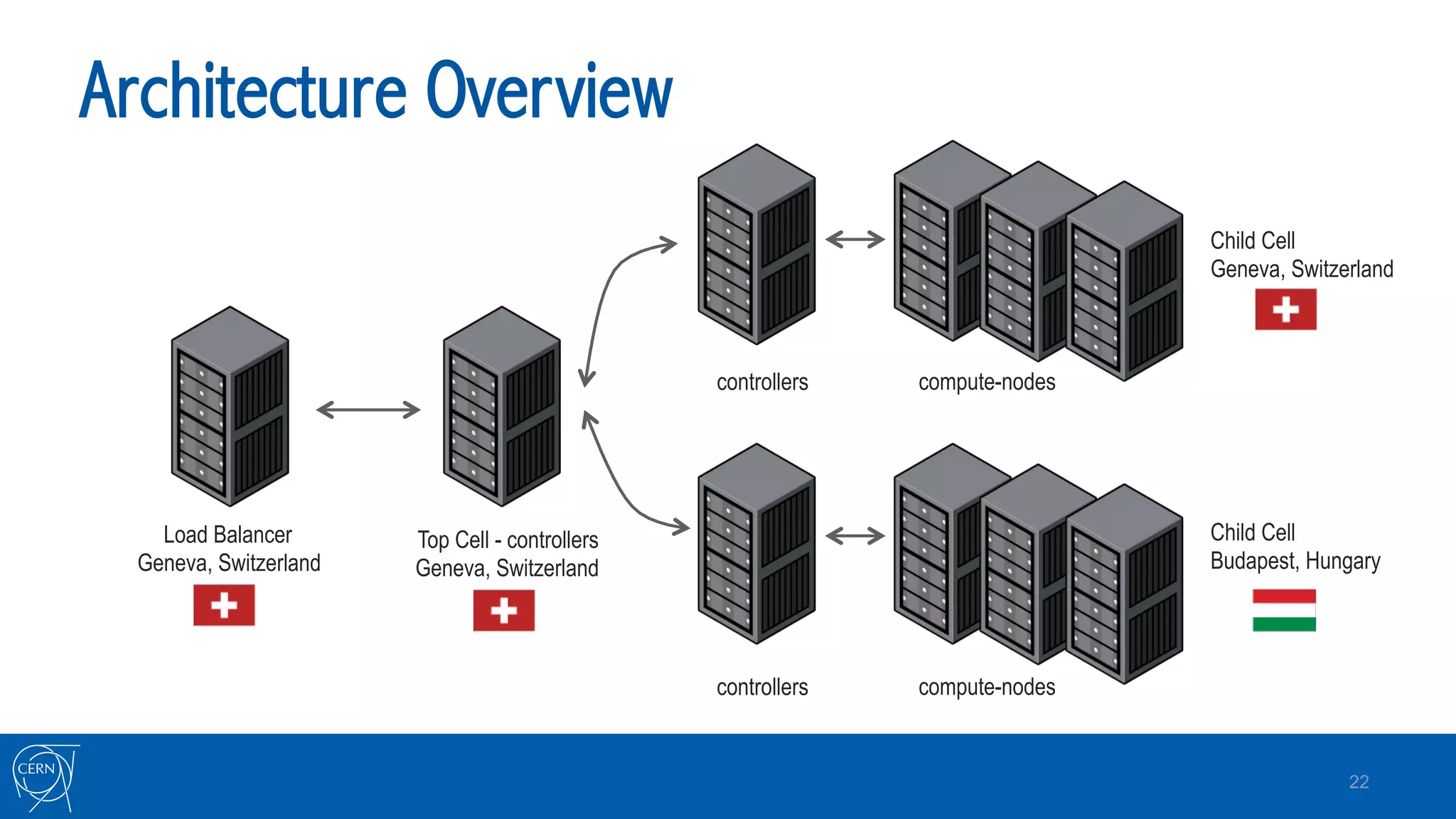

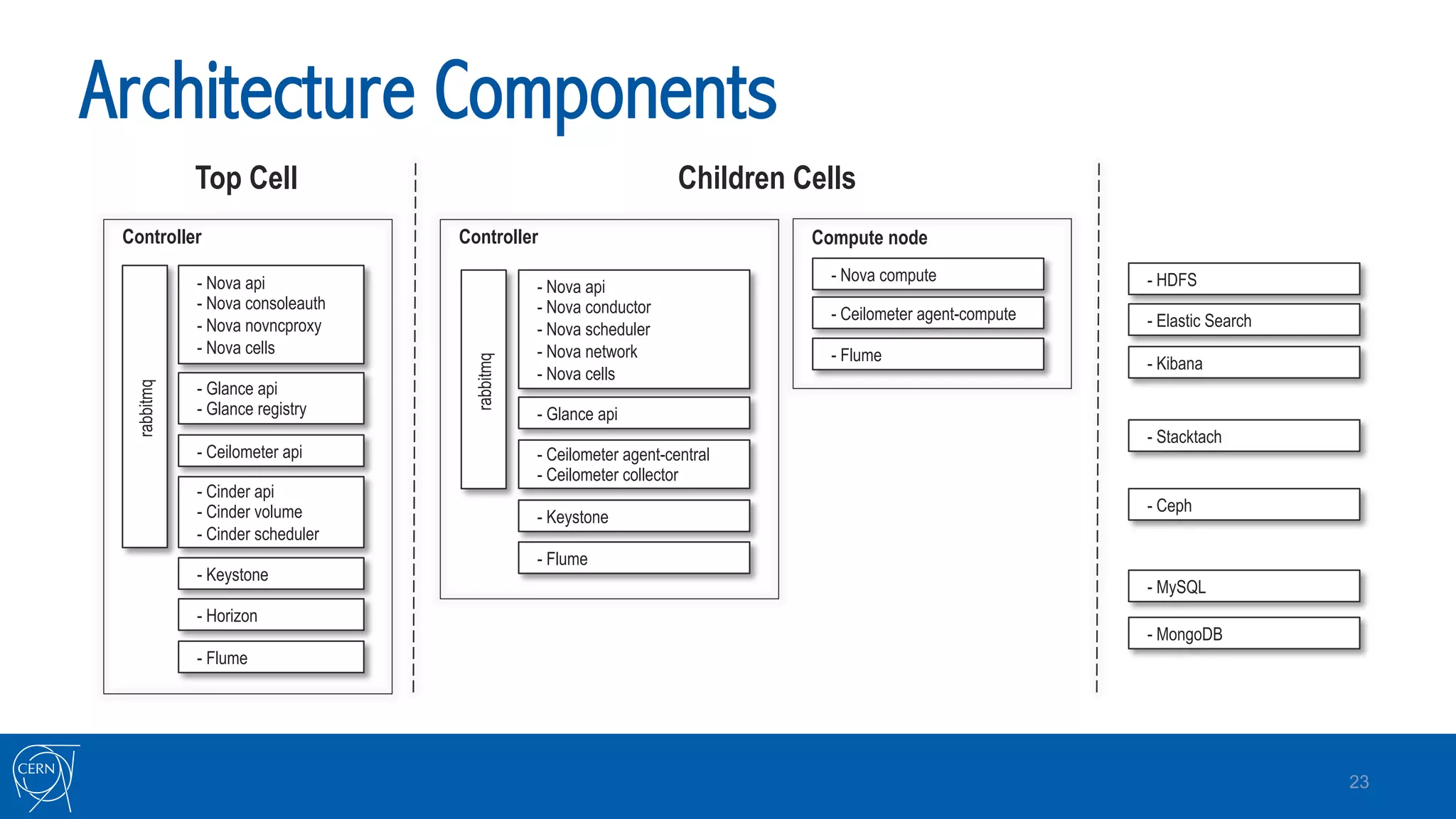

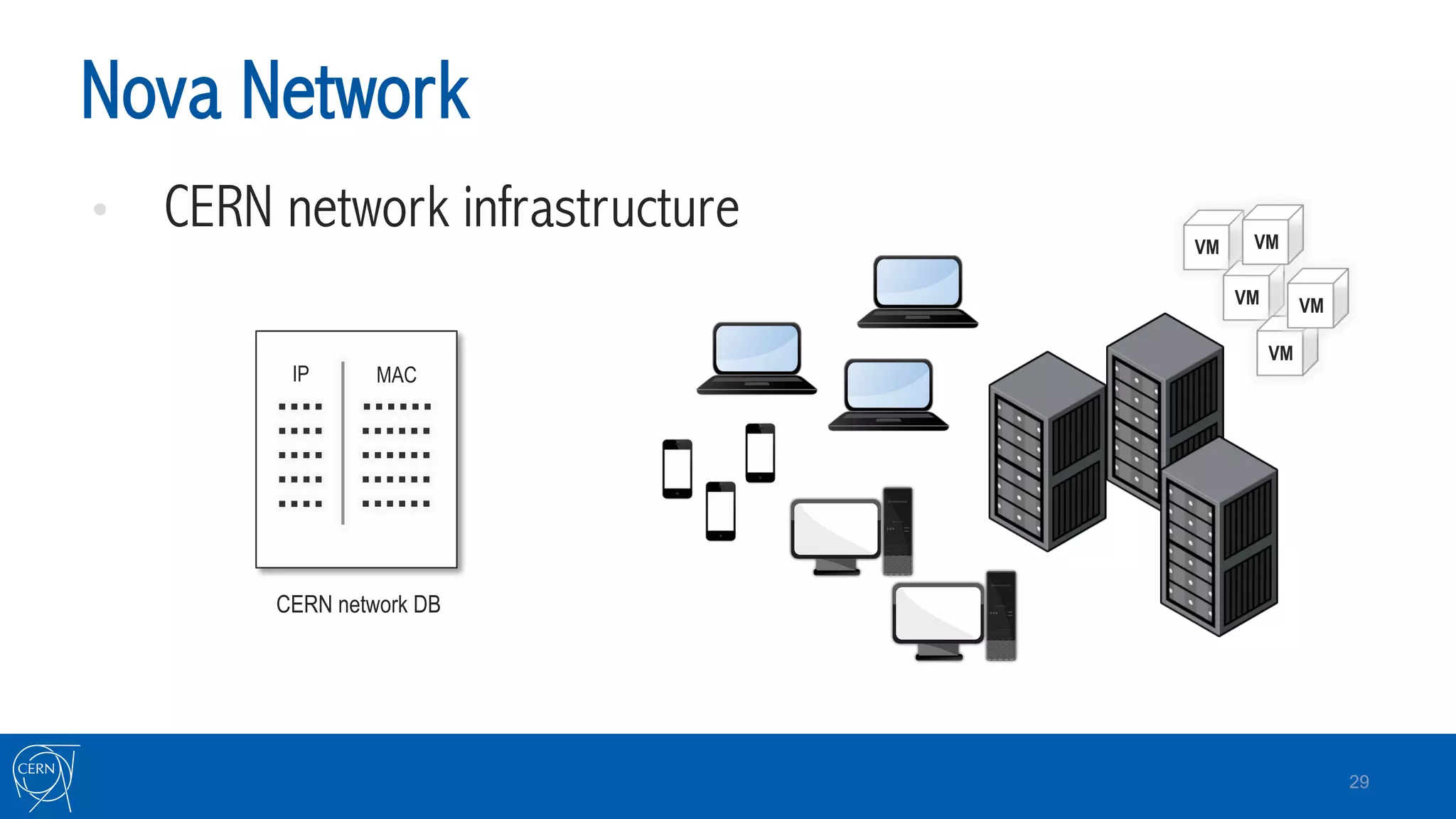

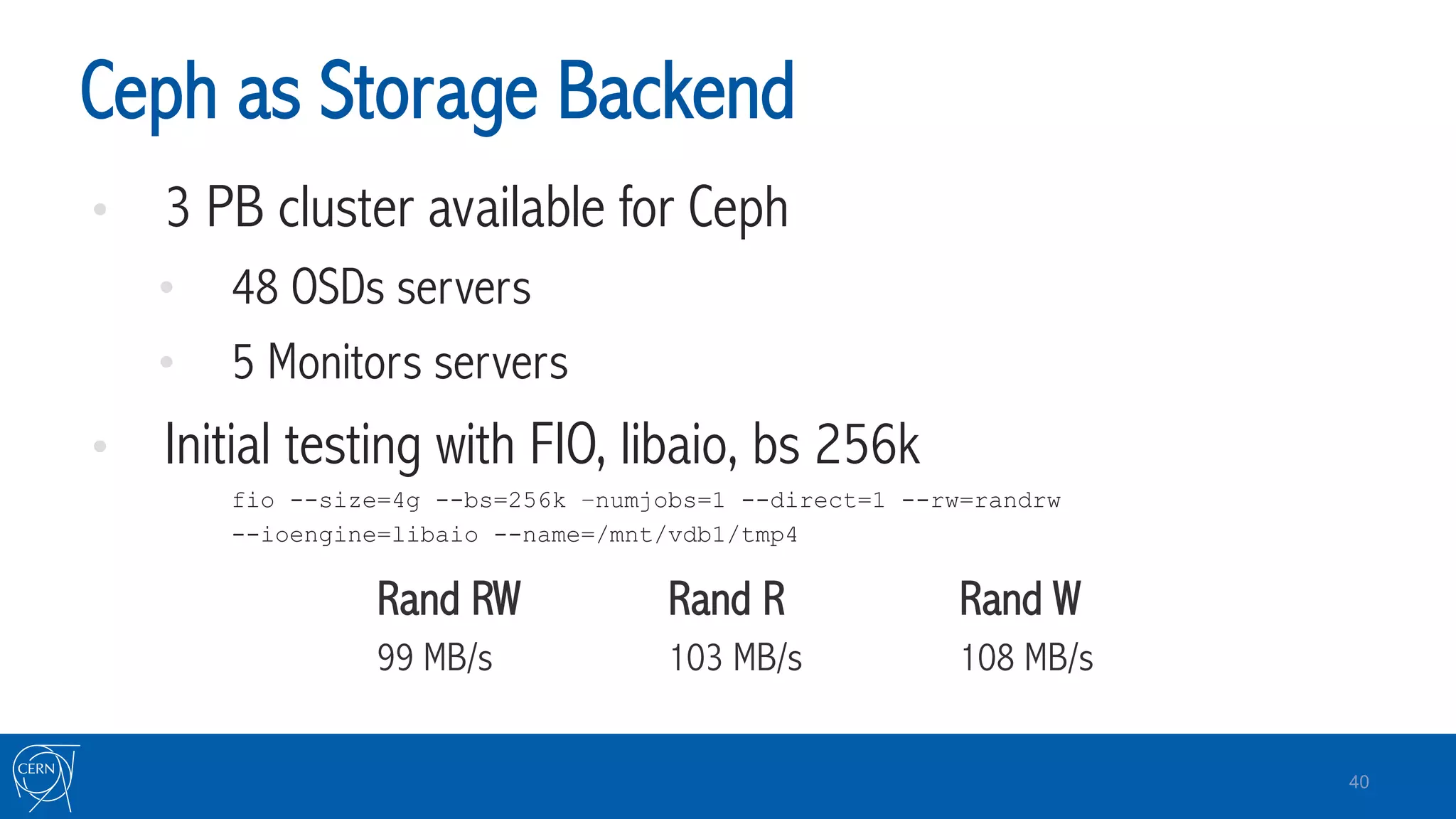

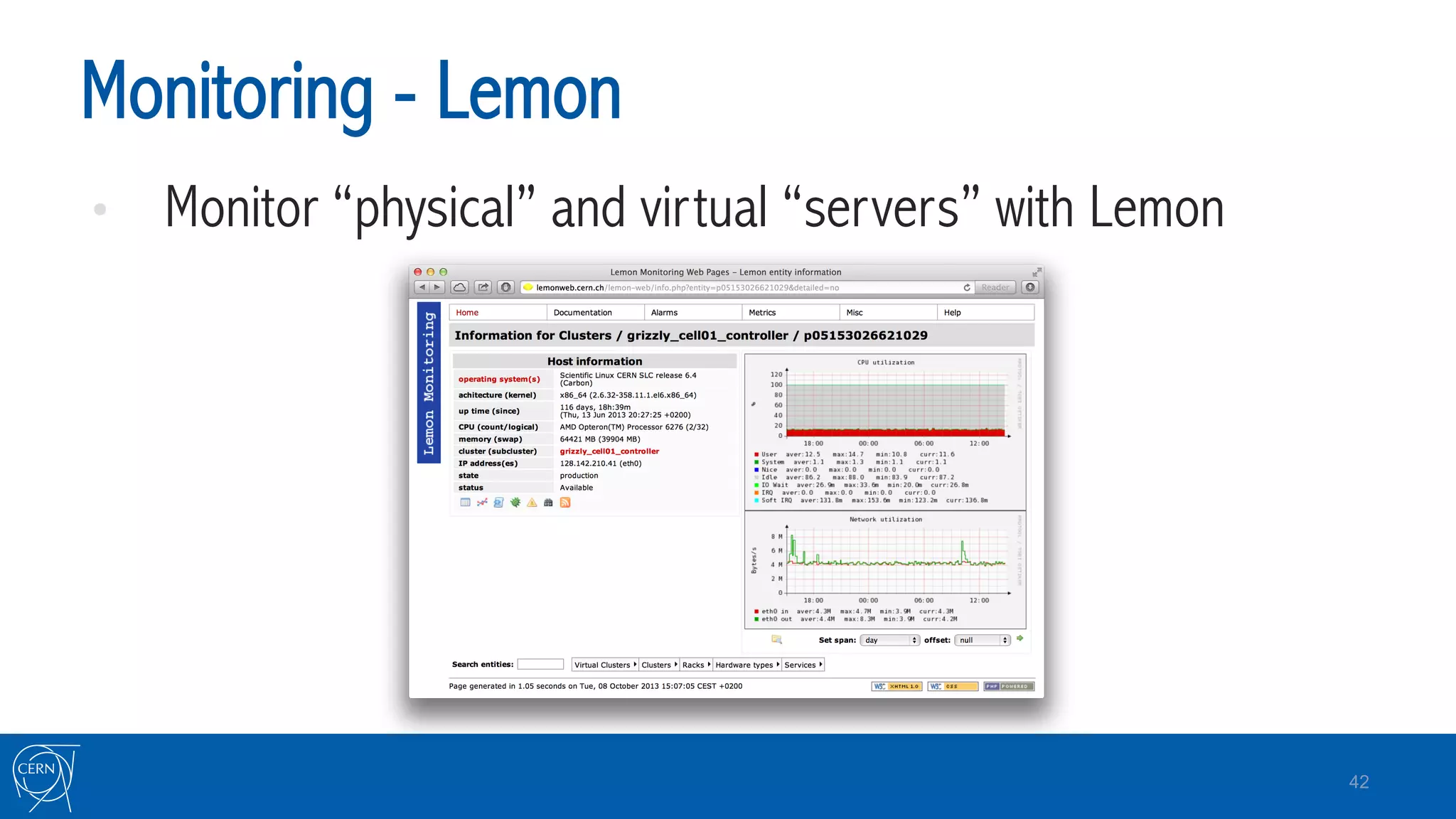

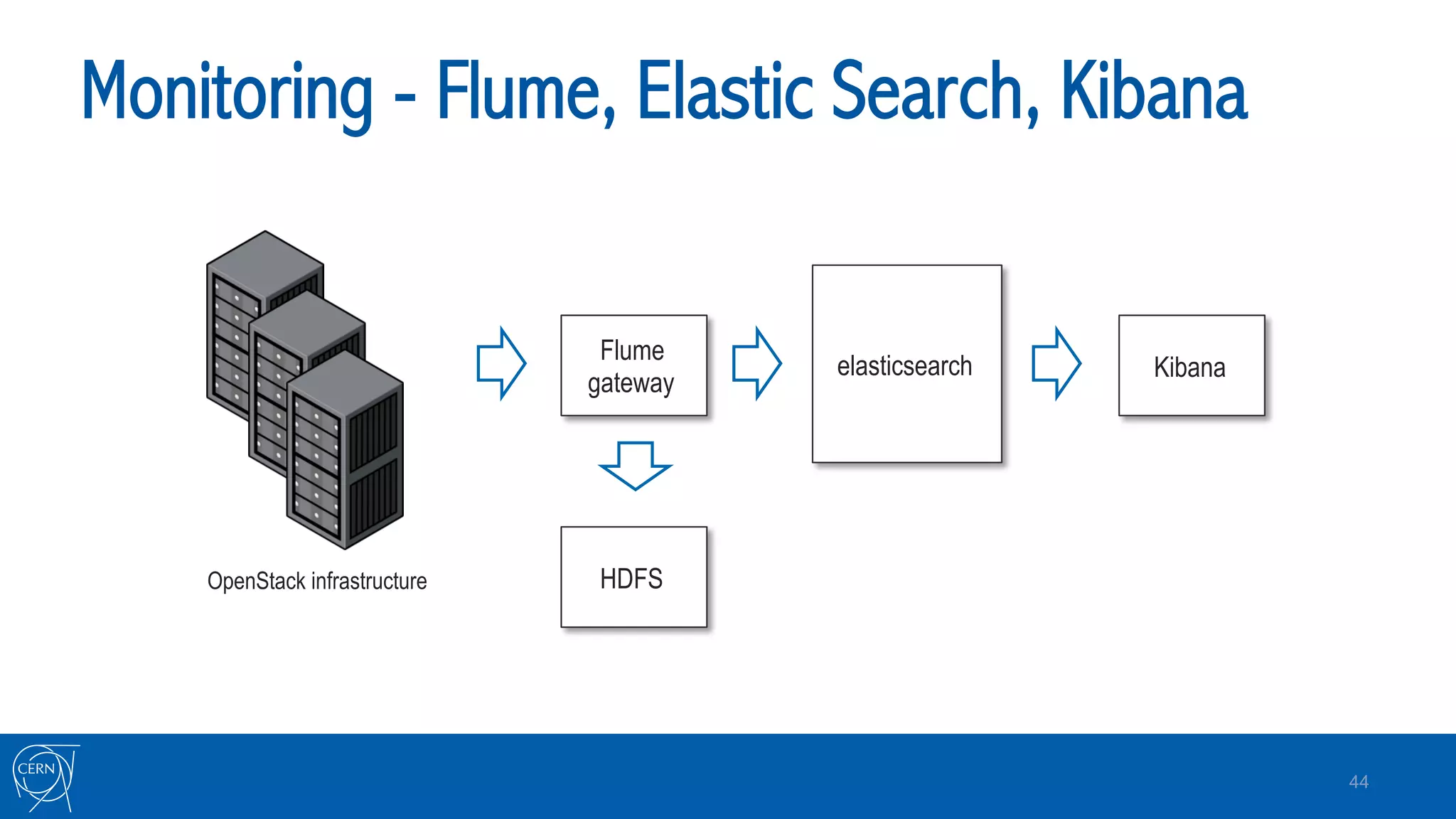

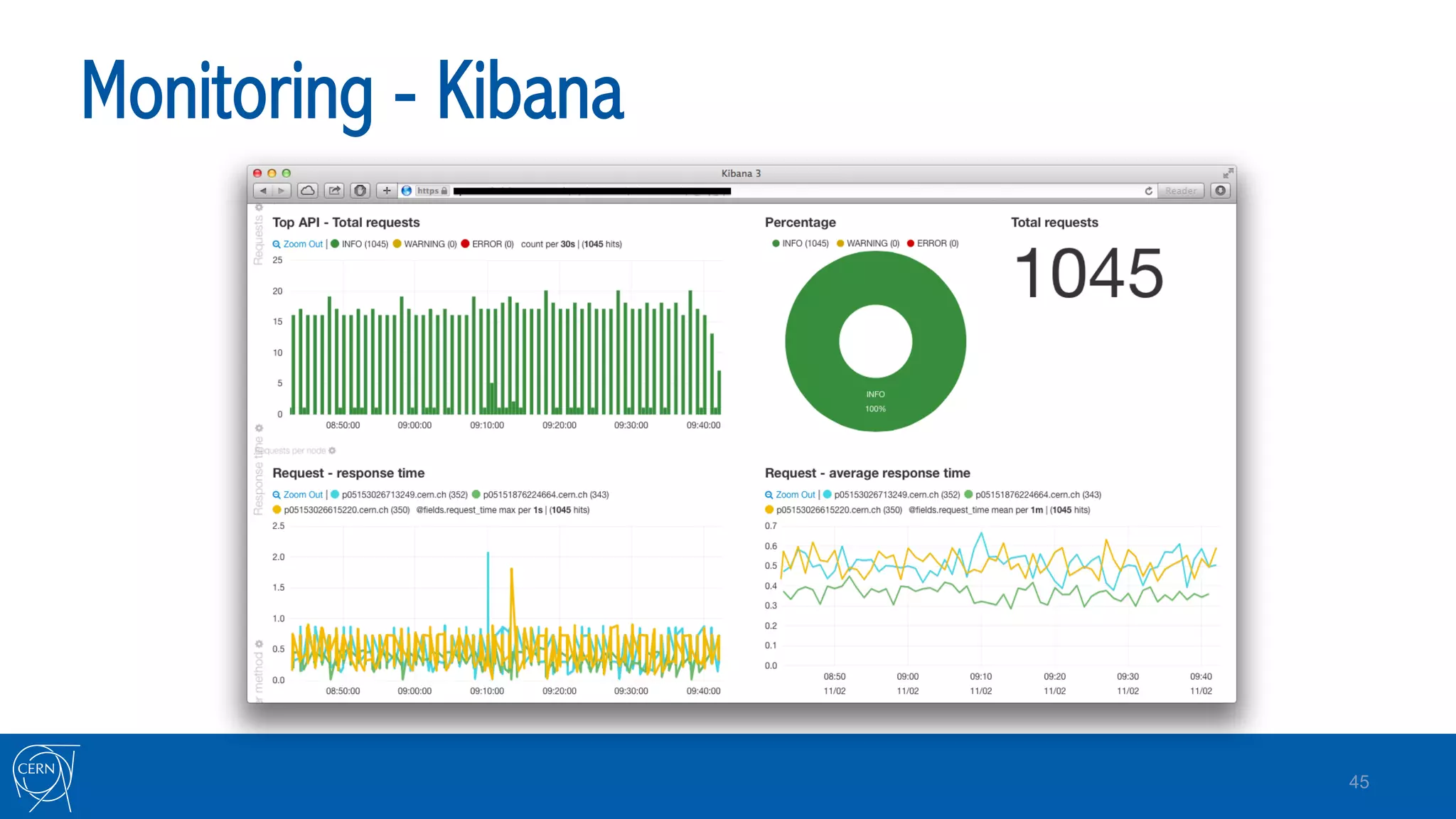

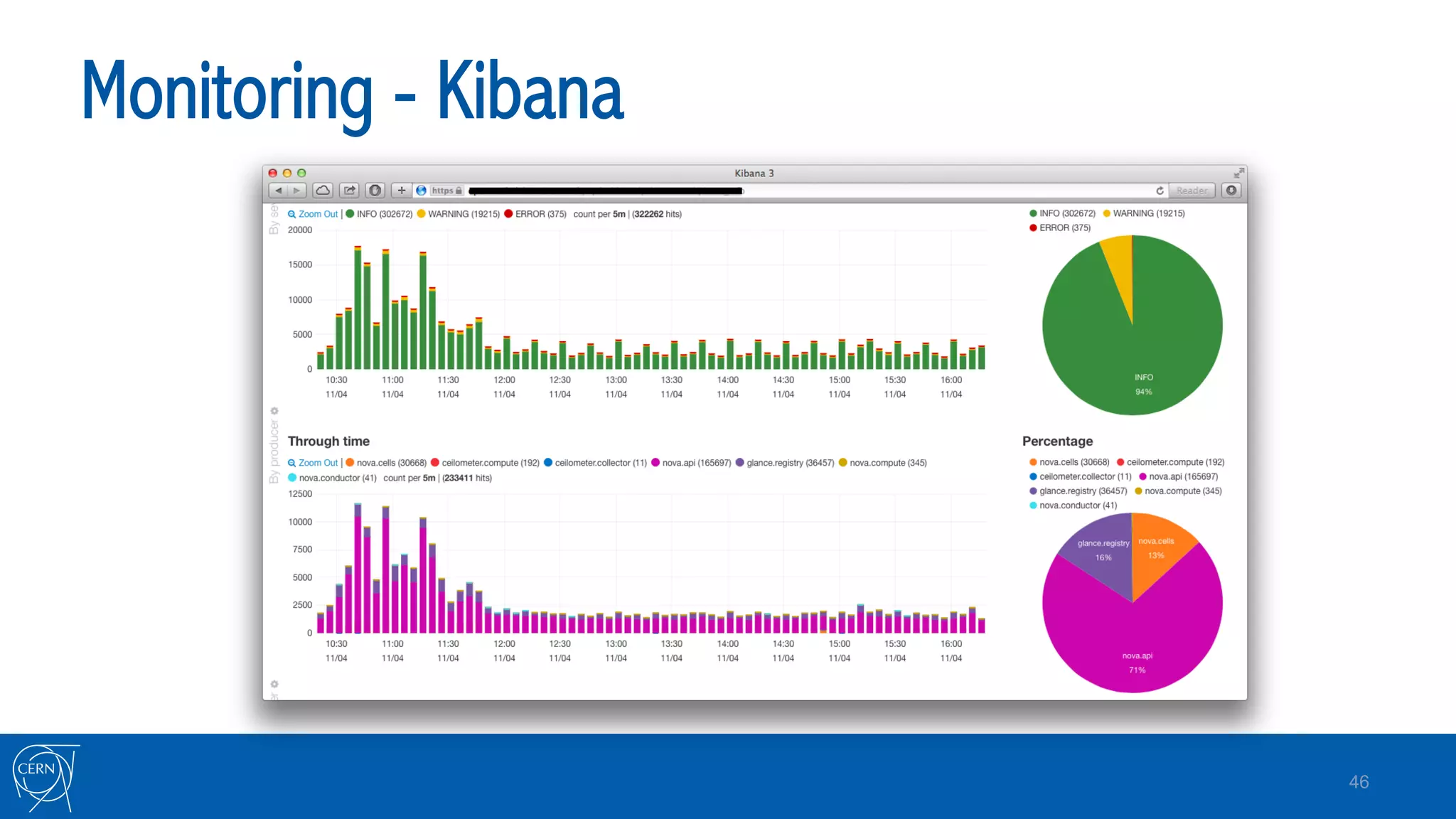

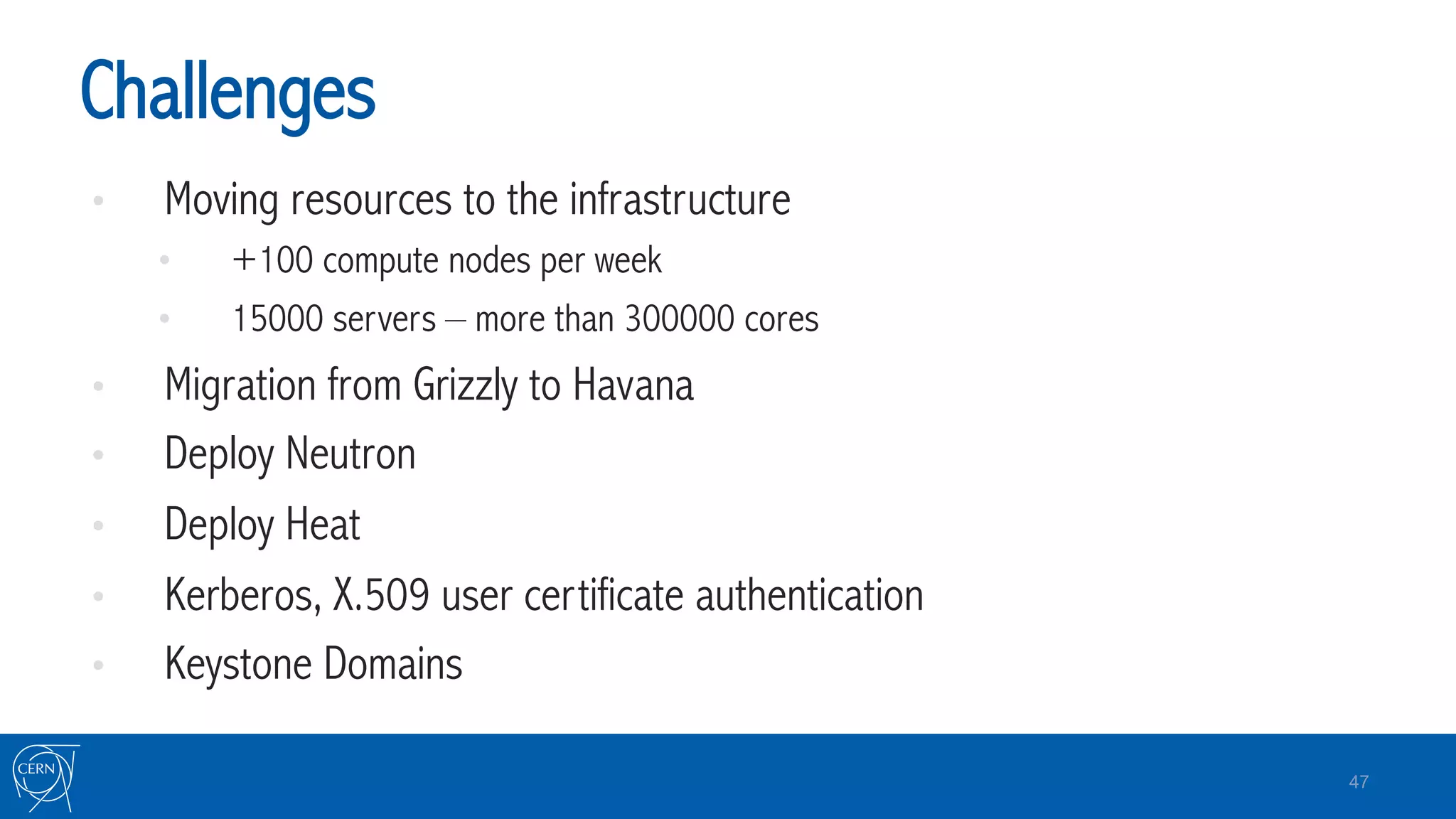

This document summarizes the OpenStack cloud infrastructure at CERN, the European Organization for Nuclear Research, whose experiments create large computing needs. CERN deployed OpenStack across two computer centers with more than 20,000 CPU cores to improve efficiency and responsiveness. Key aspects include Puppet for configuration management, a multi-cell architecture spanning both sites, and integration with CERN's identity management and network infrastructure. Monitoring tools such as Flume, Elasticsearch, and Kibana were also deployed to visualize the status of the cloud infrastructure.
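To make the multi-cell idea concrete, the following is a minimal sketch of two-level placement: a cell is chosen first, then a host inside that cell. It is an illustration only, not CERN's configuration or OpenStack's scheduler code. The names `Host`, `Cell`, and `schedule`, the cell and site labels, and the "most free cores" policy are all assumptions introduced here.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Host:
    name: str
    free_cores: int


@dataclass
class Cell:
    name: str
    site: str  # hypothetical site label, e.g. one per computer center
    hosts: List[Host] = field(default_factory=list)

    def free_cores(self) -> int:
        """Total unallocated cores across all hosts in this cell."""
        return sum(h.free_cores for h in self.hosts)


def schedule(cells: List[Cell], cores_needed: int) -> Optional[Tuple[str, str]]:
    """Place a request in two steps: pick a cell, then a host in that cell.

    Assumed policy (hypothetical): prefer the cell with the most free cores
    among cells that have at least one host able to fit the request, then
    the fitting host with the most free cores.
    """
    # Step 1: keep only cells that contain a host large enough.
    candidates = [
        c for c in cells if any(h.free_cores >= cores_needed for h in c.hosts)
    ]
    if not candidates:
        return None  # no capacity anywhere
    cell = max(candidates, key=lambda c: c.free_cores())

    # Step 2: choose a host inside the selected cell and reserve the cores.
    fitting = [h for h in cell.hosts if h.free_cores >= cores_needed]
    host = max(fitting, key=lambda h: h.free_cores)
    host.free_cores -= cores_needed
    return cell.name, host.name


# Example: two cells, one per (hypothetical) site.
cells = [
    Cell("cell-a", "site-1", [Host("a1", 8), Host("a2", 4)]),
    Cell("cell-b", "site-2", [Host("b1", 16)]),
]
first = schedule(cells, 4)
second = schedule(cells, 4)
too_big = schedule(cells, 32)
```

Splitting the decision this way keeps each cell's host list local to that cell, so the top level only needs a coarse capacity summary per cell. That is the general motivation for cell-based designs; the specific policy above is a placeholder.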