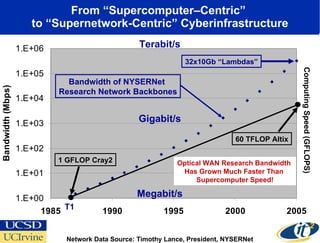

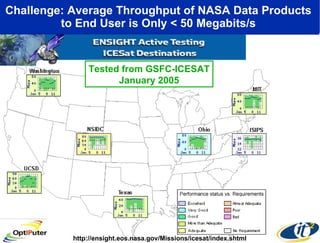

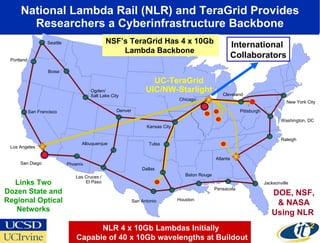

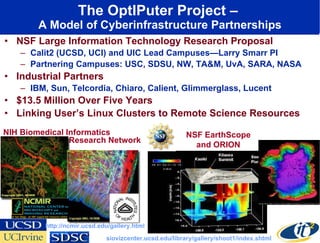

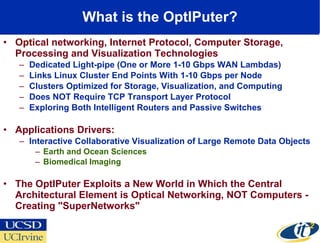

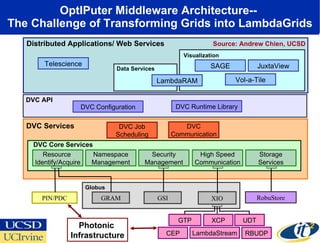

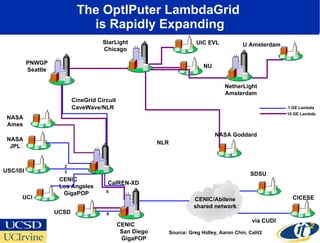

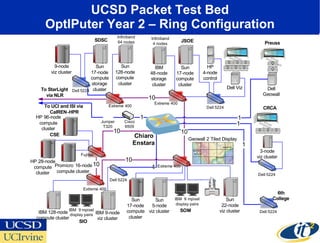

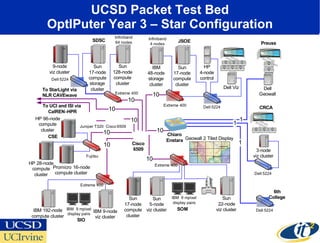

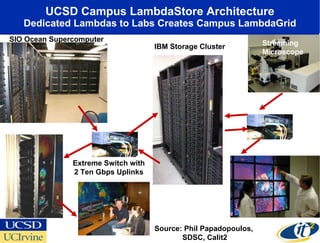

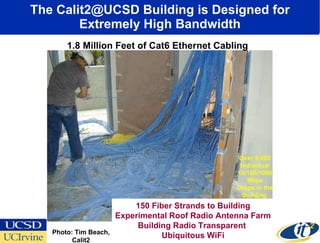

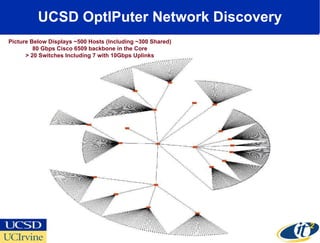

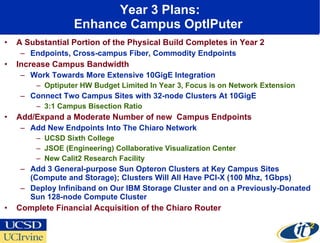

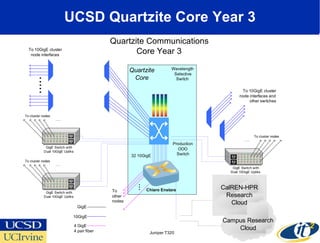

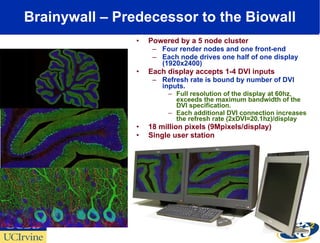

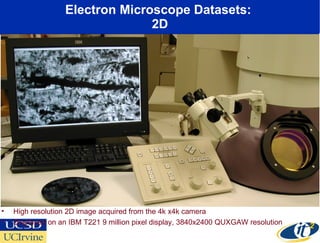

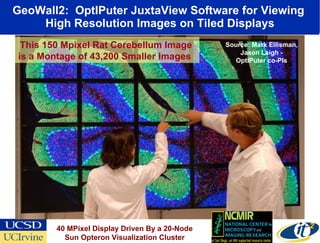

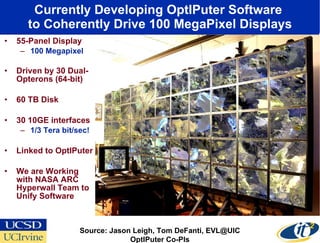

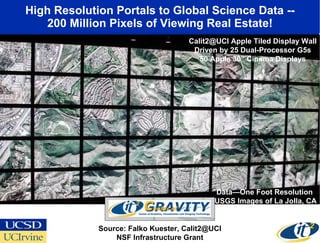

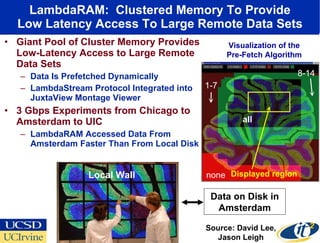

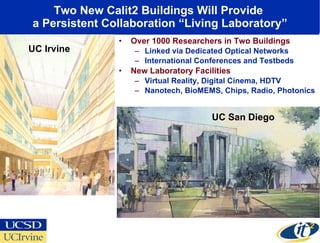

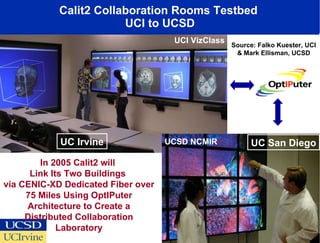

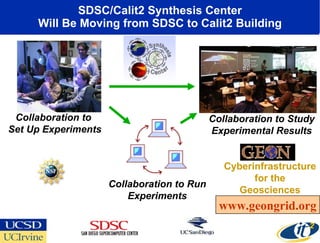

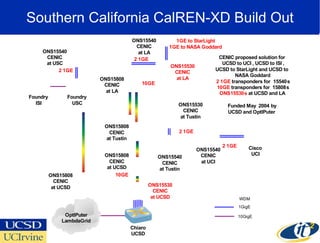

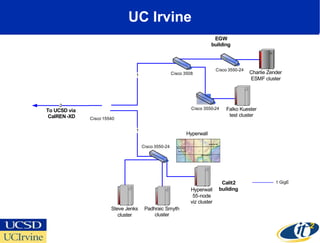

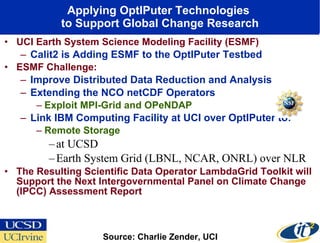

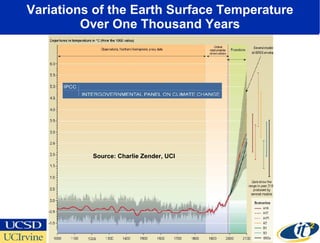

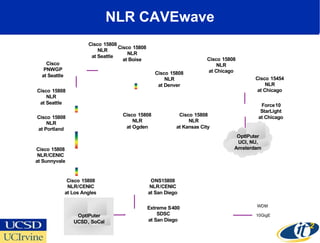

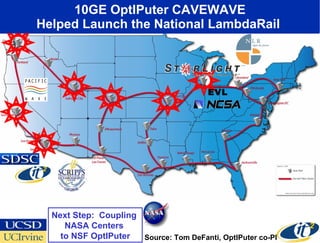

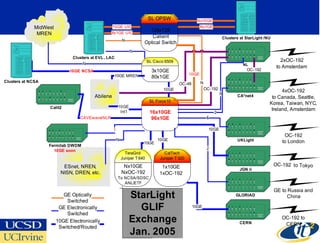

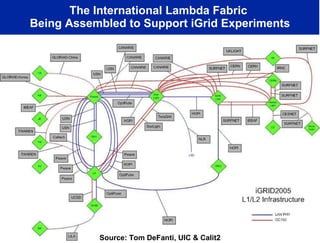

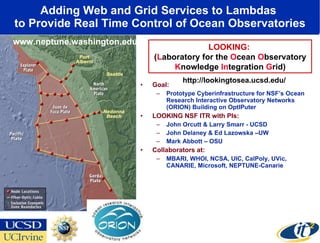

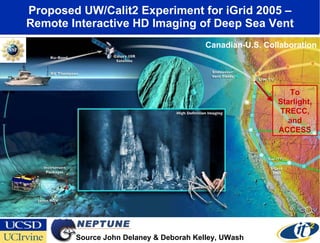

The document outlines the OptiPuter project, a cyberinfrastructure model that integrates optical networking with advanced computing resources so that researchers in different fields can collaborate on large datasets. It emphasizes the shift from supercomputer-centric to supernetwork-centric systems, driven by the growth of network bandwidth, and highlights collaborative visualization capabilities. The project's partners include universities and industry leaders, and its driving applications are biomedical imaging, earth sciences, and high-resolution data visualization.