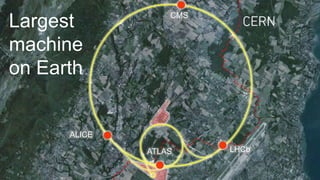

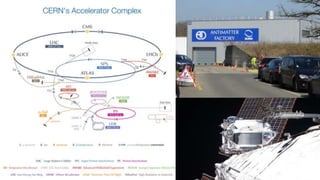

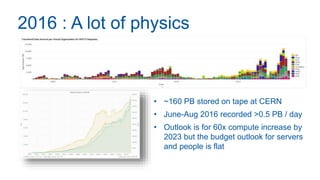

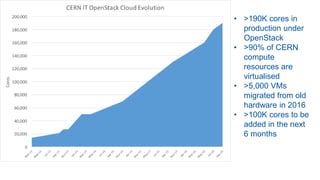

CERN operates the Large Hadron Collider (LHC), the largest machine on Earth, which produces over 1 billion collisions per second and records over 0.5 petabytes of data per day. CERN relies heavily on OpenStack: more than 190,000 CPU cores and 5,000 VMs are under OpenStack management, accounting for over 90% of CERN's computing resources. CERN plans to add more than 100,000 CPU cores in the next 6 months and is exploring public clouds and containers to help process the massive volume of data the LHC generates.