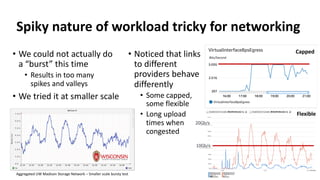

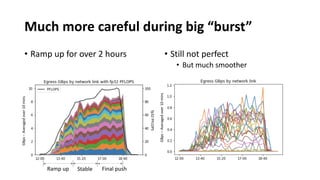

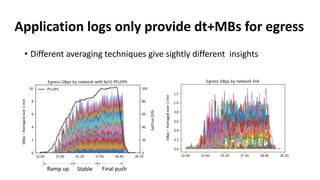

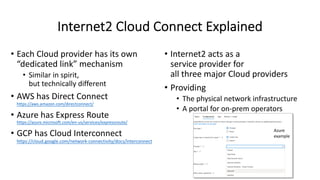

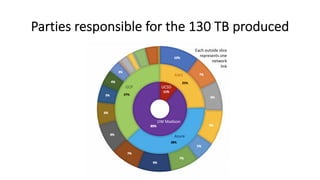

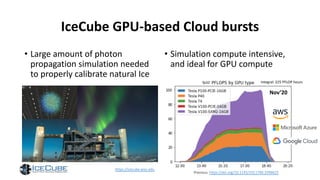

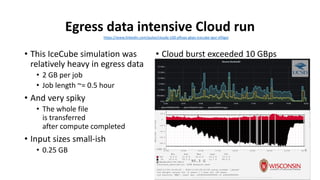

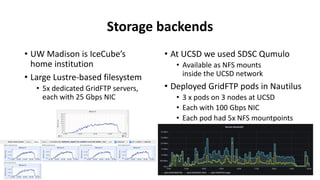

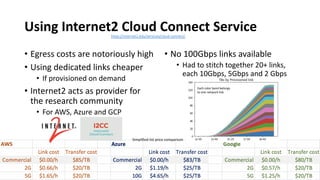

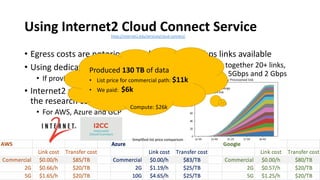

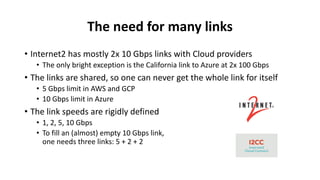

This document discusses a large-scale, GPU-based cloud burst simulation run by the IceCube collaboration to calibrate simulations of natural ice. The simulation was data-intensive, producing over 130 TB of data and exceeding 10 Gbps of egress bandwidth. The Internet2 Cloud Connect service was used to provision over 20 dedicated network links between the collaborators' institutions and the cloud providers, enabling high-throughput data transfer at a lower cost than commercial routes. Careful planning was needed to ramp up the burst smoothly without overloading any individual network link.
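As a rough sanity check on the figures above, moving 130 TB at a sustained 10 Gbps takes about 29 hours (130 × 10¹² × 8 bits ÷ 10¹⁰ bit/s = 104,000 s). The sketch below does that arithmetic and then illustrates one possible way to stage a ramp-up so that no single link is pushed past a utilization cap. The link names and capacities, the 250 Mbps per-instance egress rate, the 80% cap and the step size are hypothetical values chosen for illustration; they are not numbers from the IceCube run, and this is not the collaboration's actual planning method.

```python
"""Back-of-the-envelope transfer time and a capped ramp-up plan.

Link capacities, the per-instance egress rate, the step size and the
utilization cap are hypothetical illustration values, not IceCube figures.
"""


def transfer_hours(terabytes: float, gbps: float) -> float:
    """Hours to move `terabytes` (decimal TB) at a sustained `gbps`."""
    bits = terabytes * 1e12 * 8
    return bits / (gbps * 1e9) / 3600


def max_instances_per_link(link_mbps: dict[str, int], per_instance_mbps: int,
                           cap_pct: int = 80) -> dict[str, int]:
    """Largest instance count each link carries without exceeding cap_pct."""
    # Integer arithmetic avoids float floor-division surprises.
    return {name: capacity * cap_pct // (100 * per_instance_mbps)
            for name, capacity in link_mbps.items()}


def ramp_schedule(link_mbps: dict[str, int], per_instance_mbps: int,
                  step: int, cap_pct: int = 80) -> list[dict[str, int]]:
    """Add `step` instances per stage, always to the least-utilized link that
    still has headroom, until every link reaches its capped limit."""
    if step < 1:
        raise ValueError("step must be at least 1")
    limits = max_instances_per_link(link_mbps, per_instance_mbps, cap_pct)
    assigned = {name: 0 for name in link_mbps}
    stages = []
    while any(assigned[n] < limits[n] for n in assigned):
        for _ in range(step):
            open_links = [n for n in assigned if assigned[n] < limits[n]]
            if not open_links:
                break
            # Fractional utilization decides which link gets the next instance.
            target = min(open_links,
                         key=lambda n: assigned[n] * per_instance_mbps / link_mbps[n])
            assigned[target] += 1
        stages.append(dict(assigned))  # snapshot of this ramp stage
    return stages


if __name__ == "__main__":
    print(f"{transfer_hours(130, 10):.1f} h")  # prints 28.9 h
    links = {"link-a": 10_000, "link-b": 10_000, "link-c": 20_000}  # Mbps
    for i, stage in enumerate(ramp_schedule(links, 250, 16), start=1):
        print(i, stage)
```

Filling the least-utilized link first spreads load in proportion to capacity, so the hypothetical 20 Gbps link ends up carrying twice as many instances as each 10 Gbps link. A real plan would also have to account for per-site limits and measured, rather than assumed, egress rates.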

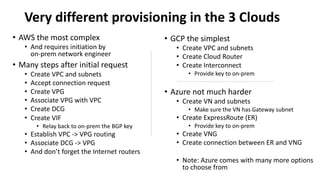

![Additional on-prem networking setup needed](https://image.slidesharecdn.com/exa3tnrprc2-201112170843/85/Data-intensive-IceCube-Cloud-Burst-10-320.jpg)

Additional on-prem networking setup needed:

- Quote from Michael Hare, UW Madison network engineer:

  > In addition to network configuration [at] UW Madison (AS59), we provisioned BGP based Layer 3 MPLS VPNs (L3VPNs) towards Internet2 via our regional aggregator, BTAA OmniPop. This work involved reaching out to the BTAA NOC to coordinate on VLAN numbers and paths and to [the] Internet2 NOC to make sure the newly provisioned VLANs were configurable inside OESS. Due to limitations in programmability or knowledge at the time regarding duplicate IP address towards the cloud (GCP, Azure, AWS) endpoints, we built several discrete L3VPNs inside the Internet2 network to accomplish the desired topology.

- Tom Hutton did the UCSD part.
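The quote above attributes the need for several discrete L3VPNs to duplicate IP addresses on the cloud endpoints. A minimal sketch of why that forces more than one routing instance: two attachments whose peering subnets are identical or overlapping cannot share one routing table, so they must be split across VPNs. The code below uses a first-fit heuristic with Python's standard `ipaddress` module to pack attachments into as few VPNs as it can (it is not guaranteed to find the minimum). The attachment names and subnets are invented for illustration, with the overlapping ones drawn from the link-local 169.254.0.0/16 range; this is not the actual UW Madison or Internet2 configuration, which is done in router and OESS configuration rather than in a script.

```python
"""Why overlapping cloud peering subnets force separate L3VPNs (sketch).

Attachment names and subnets are invented; this is not the UW Madison or
Internet2 configuration, which lives in router/OESS config, not Python.
"""
import ipaddress


def pack_into_vpns(attachments: dict[str, str]) -> list[dict[str, str]]:
    """First-fit: put each attachment in the first VPN where its subnet does
    not overlap any subnet already there; otherwise open a new VPN.

    A heuristic, not guaranteed to use the minimum number of VPNs.
    """
    vpns = []  # each entry maps attachment name -> ip_network object
    for name, cidr in attachments.items():
        net = ipaddress.ip_network(cidr)
        for vpn in vpns:
            if not any(net.overlaps(other) for other in vpn.values()):
                vpn[name] = net
                break
        else:  # no existing VPN could take it without an address clash
            vpns.append({name: net})
    return [{n: str(net) for n, net in vpn.items()} for vpn in vpns]


if __name__ == "__main__":
    attachments = {  # hypothetical cloud attachments
        "gcp-a": "169.254.10.0/29",
        "aws-a": "169.254.10.0/30",     # overlaps gcp-a
        "azure-a": "192.168.100.0/30",  # no clash
        "gcp-b": "169.254.10.0/29",     # exact duplicate of gcp-a
    }
    for i, vpn in enumerate(pack_into_vpns(attachments), start=1):
        print(f"L3VPN {i}: {vpn}")
```

With this input the sketch needs three VPNs: `gcp-a` and `azure-a` can share one, while `aws-a` and `gcp-b` each clash with `gcp-a` and with each other, so each gets its own.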