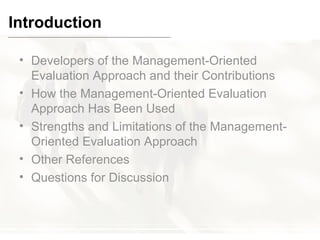

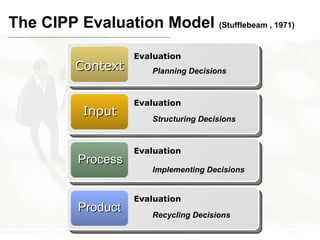

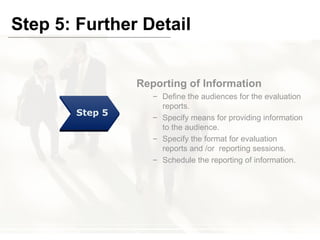

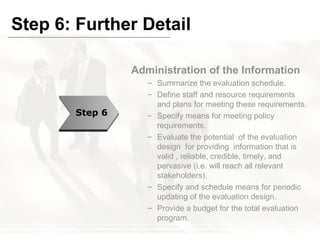

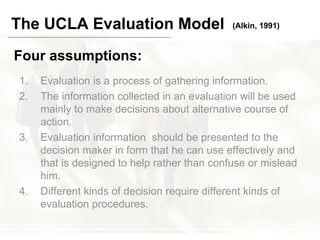

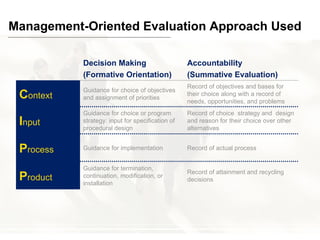

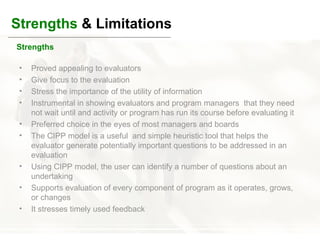

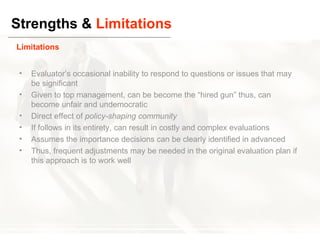

The document discusses management-oriented evaluation approaches, focusing primarily on the CIPP (Context, Input, Process, Product) evaluation model and its applications. It outlines the steps for conducting an evaluation, the strengths and limitations of these models, and the importance of gathering information that helps decision-makers act effectively. It also compares the CIPP model with the UCLA evaluation model, highlighting how both structure program evaluation around the information needs of decision-makers.