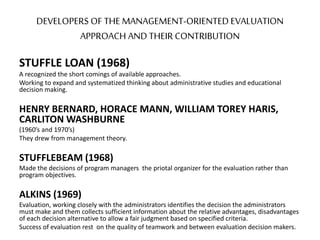

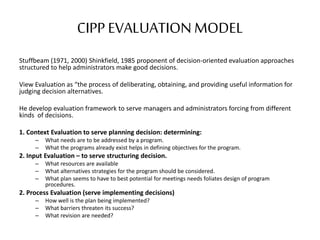

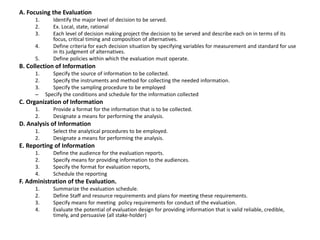

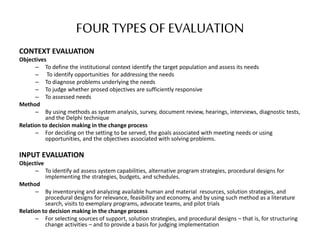

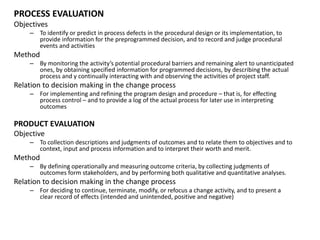

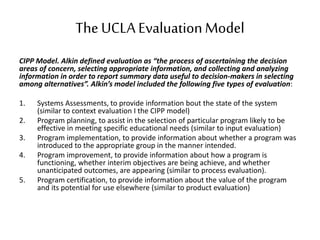

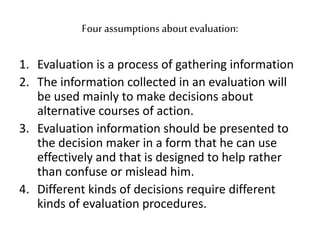

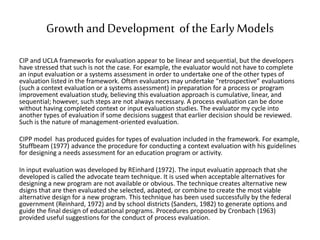

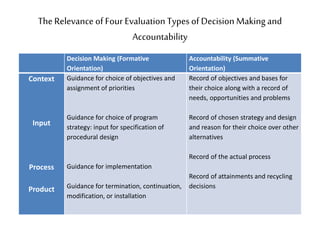

This document discusses management-oriented evaluation approaches. It begins by stating that these approaches aim to serve decision makers by supplying the evaluation information they need to make sound decisions. It describes the CIPP model, created by Stufflebeam, which evaluates programs along four dimensions: Context, Input, Process, and Product. The document also discusses other early evaluation models, such as the UCLA model. It notes that the strengths of the management-oriented approach include giving evaluations a clear focus and linking them to decision making. Potential limitations include the evaluator becoming too closely aligned with management and evaluations becoming too complex.