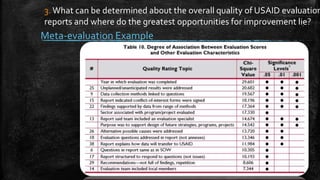

1. The quality of USAID evaluation reports generally improved between 2009 and 2012. Gains appeared in factors such as the use of multiple data collection methods and the identification of study limitations. However, not every quality factor improved.

2. USAID evaluation reports excelled on basic characteristics. They fell short on factors such as clearly distinguishing findings from conclusions and recommendations, and including an evaluation specialist on the evaluation team.

3. The overall quality of USAID evaluations was moderately high. It could be improved by involving evaluation specialists more often and by providing more guidance on the new standards.