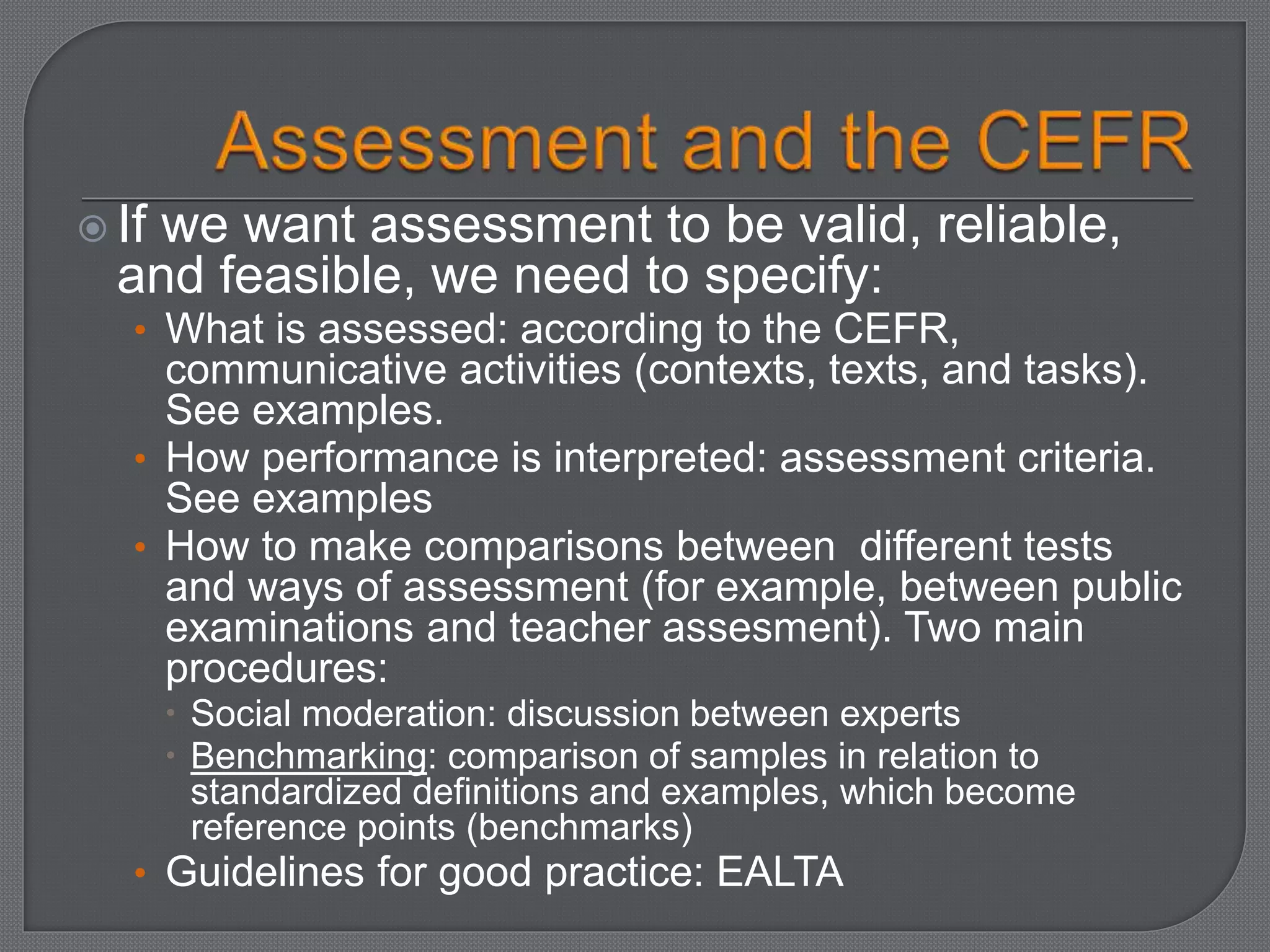

This document discusses key concepts in language assessment including validity, reliability, and feasibility. It provides definitions and examples of different types of validity including construct, content, criterion-related, and face validity. Reliability is discussed in terms of test-retest, alternate forms, and split-half methods. The document also covers types of language assessment such as proficiency tests, achievement tests, and diagnostic tests. Specific techniques for assessing writing, speaking, reading, listening, grammar, and vocabulary are outlined. Guidelines are provided for developing valid and reliable language tests.
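As a rough illustration of the split-half method, the sketch below does three things. It splits a test's items into odd- and even-numbered halves, correlates the two half scores with a Pearson correlation, and then applies the standard Spearman-Brown correction, $r_{\text{full}} = \frac{2r_{\text{half}}}{1 + r_{\text{half}}}$, to estimate reliability for the full-length test. The odd/even split, the function names, and the sample scores are hypothetical choices for illustration, not taken from the document. Other ways of dividing items into halves are equally valid.

```python
import statistics


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("a half-test has zero variance; correlation is undefined")
    return cov / (sx * sy)


def spearman_brown(r_half):
    """Step a half-test correlation up to an estimate for the full-length test."""
    return 2 * r_half / (1 + r_half)


def split_half_reliability(item_scores):
    """Split-half reliability estimate (hypothetical odd/even item split).

    item_scores: one list of item scores (e.g. 0/1) per test taker.
    """
    odd_half = [sum(row[0::2]) for row in item_scores]   # items 1, 3, 5, ...
    even_half = [sum(row[1::2]) for row in item_scores]  # items 2, 4, 6, ...
    return spearman_brown(pearson_r(odd_half, even_half))


# Hypothetical responses: 5 test takers x 6 dichotomously scored items.
scores = [
    [1, 1, 1, 1, 1, 1],
    [1, 1, 1, 0, 1, 1],
    [1, 0, 1, 1, 0, 0],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 0, 0, 0, 0],
]
print(round(split_half_reliability(scores), 3))
```

The correction is needed because each half is only half as long as the real test, and shorter tests tend to be less reliable. Correlating the halves alone would therefore underestimate the reliability of the full test.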