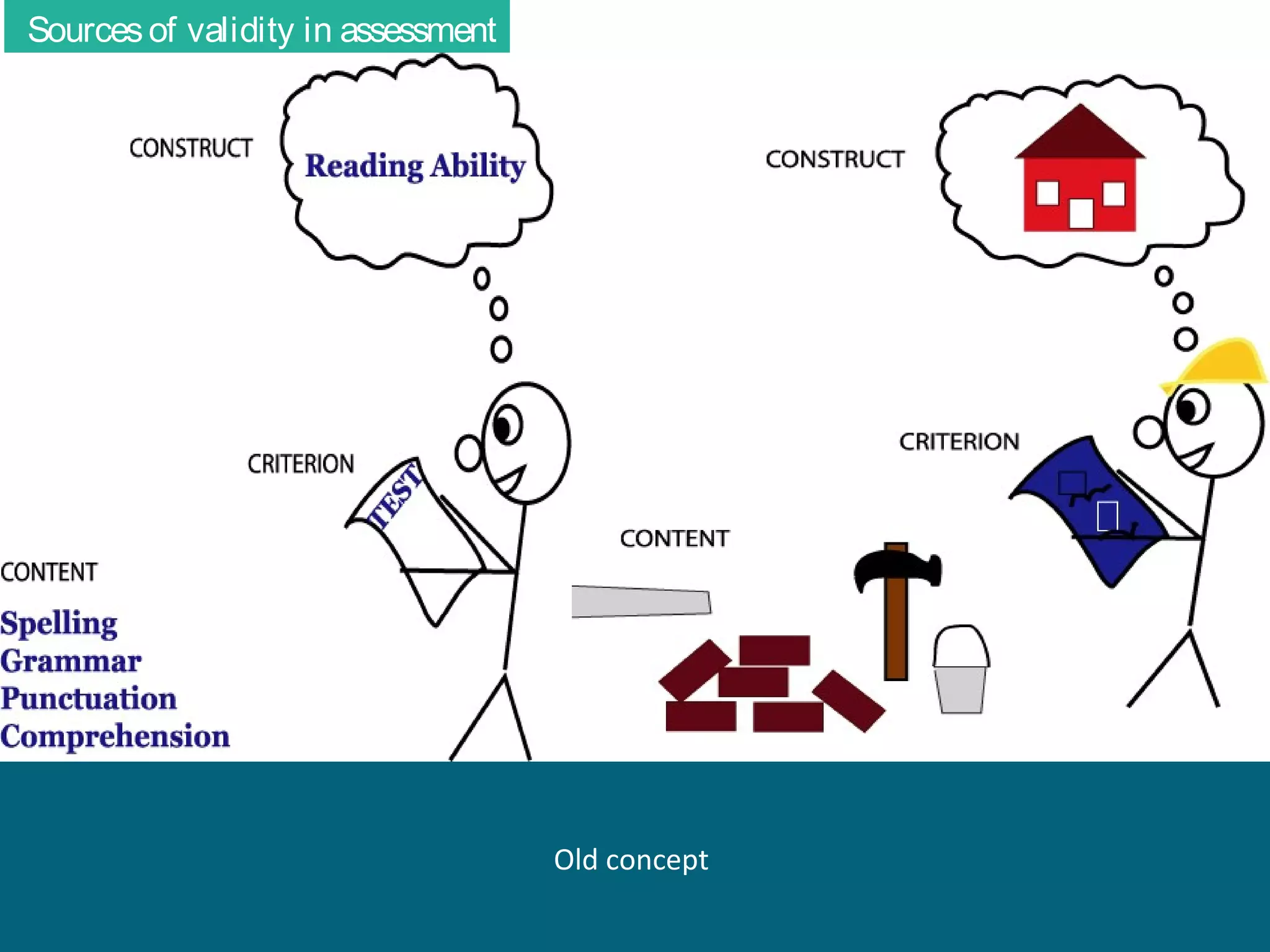

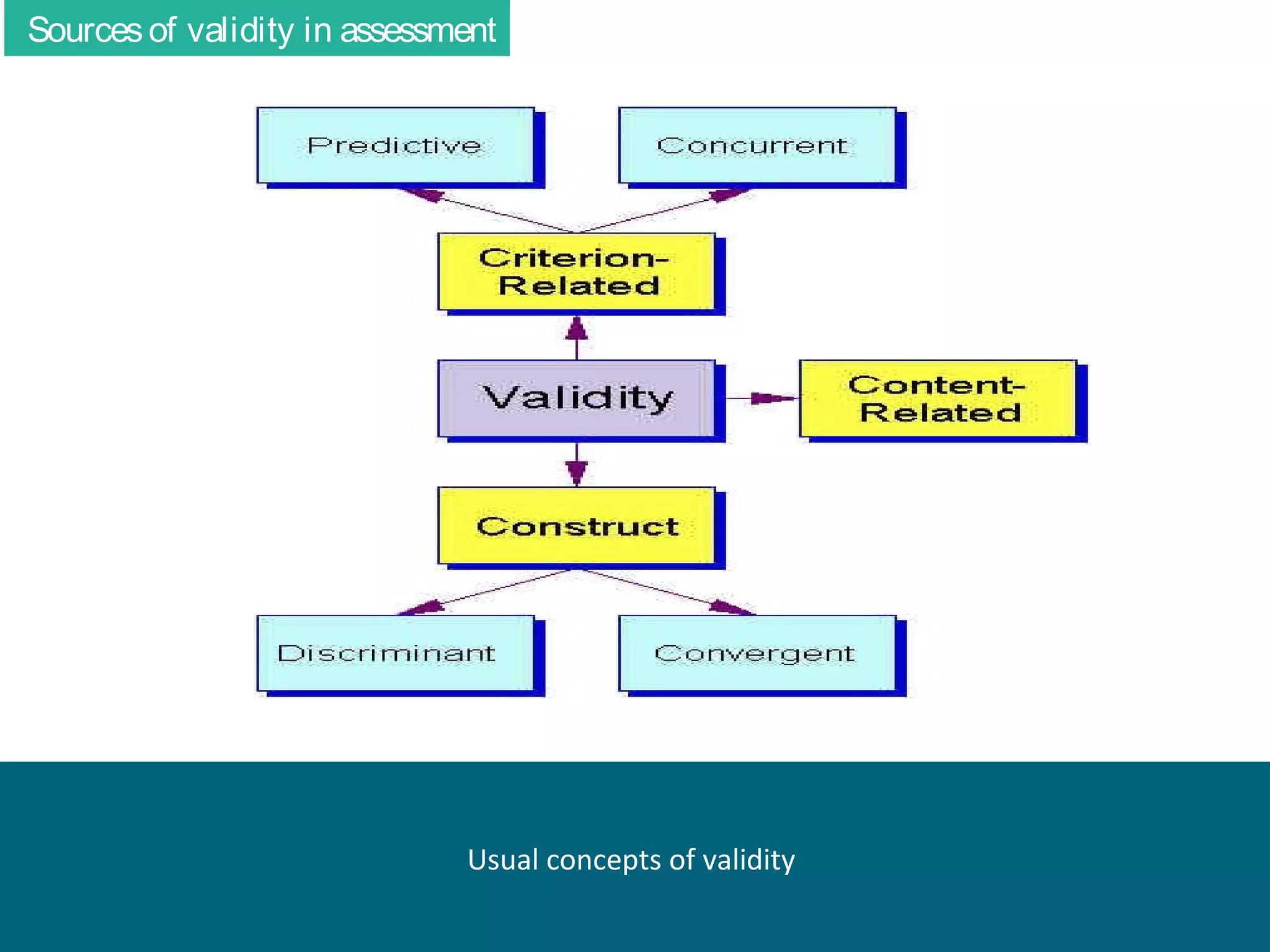

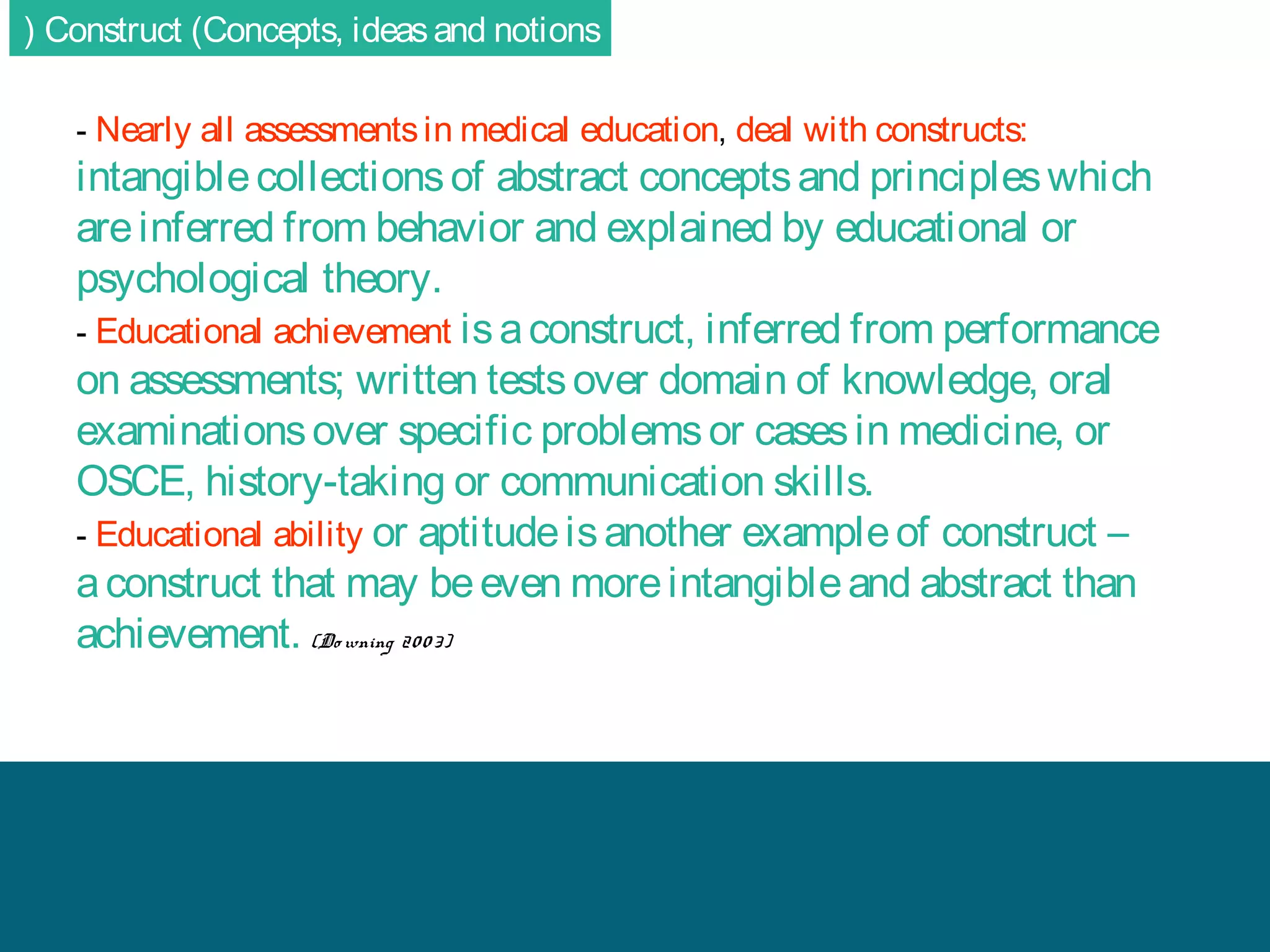

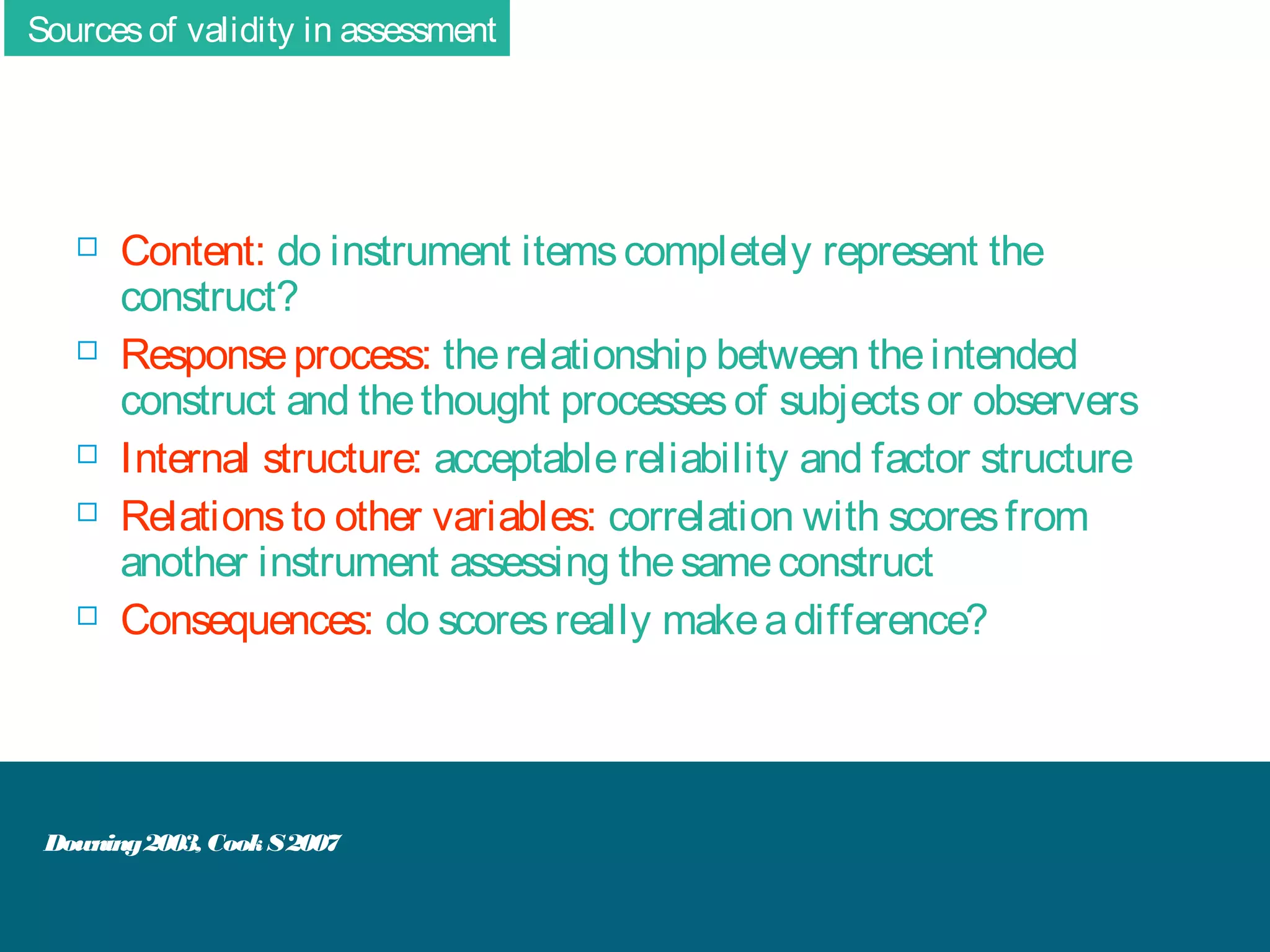

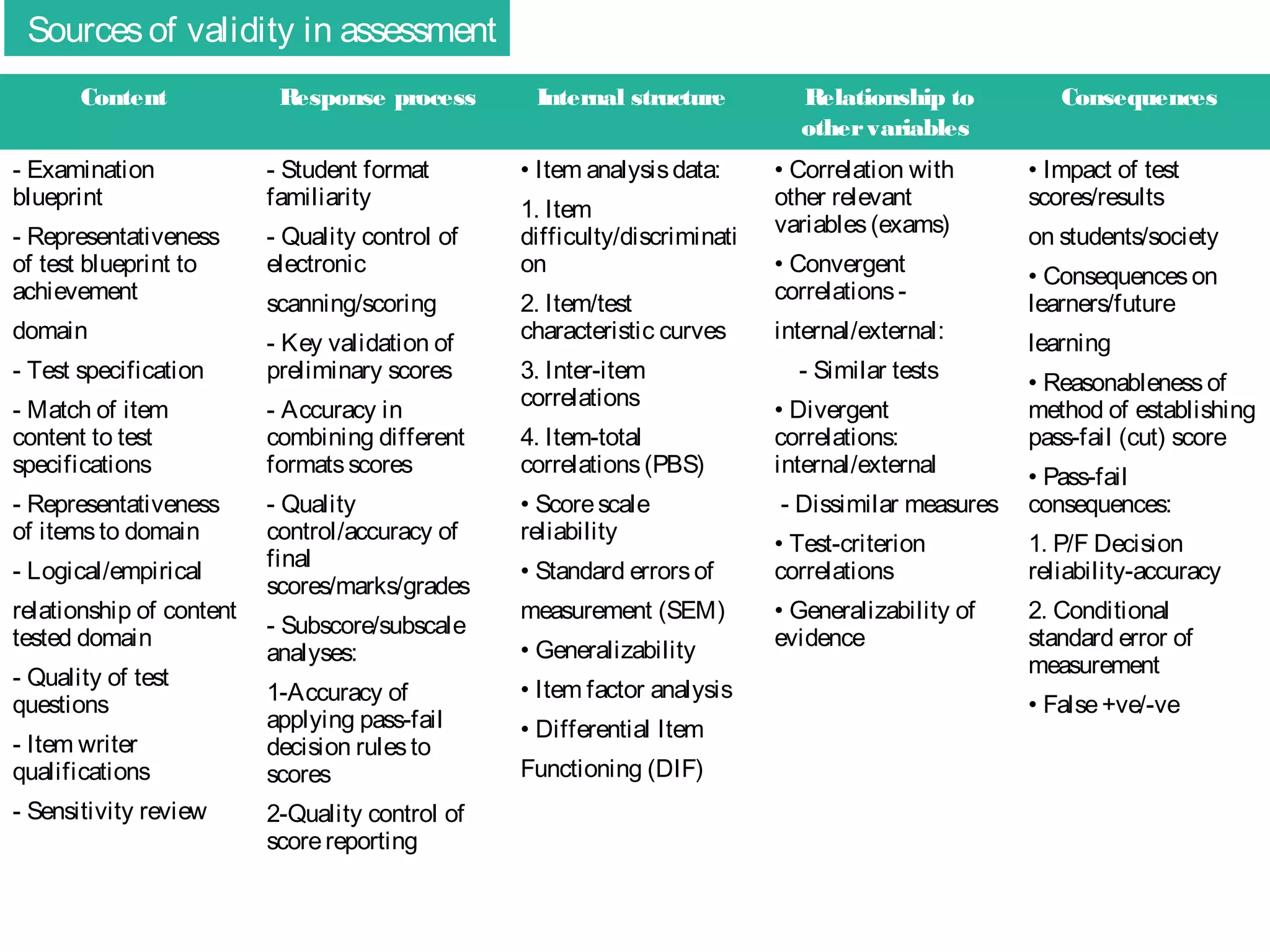

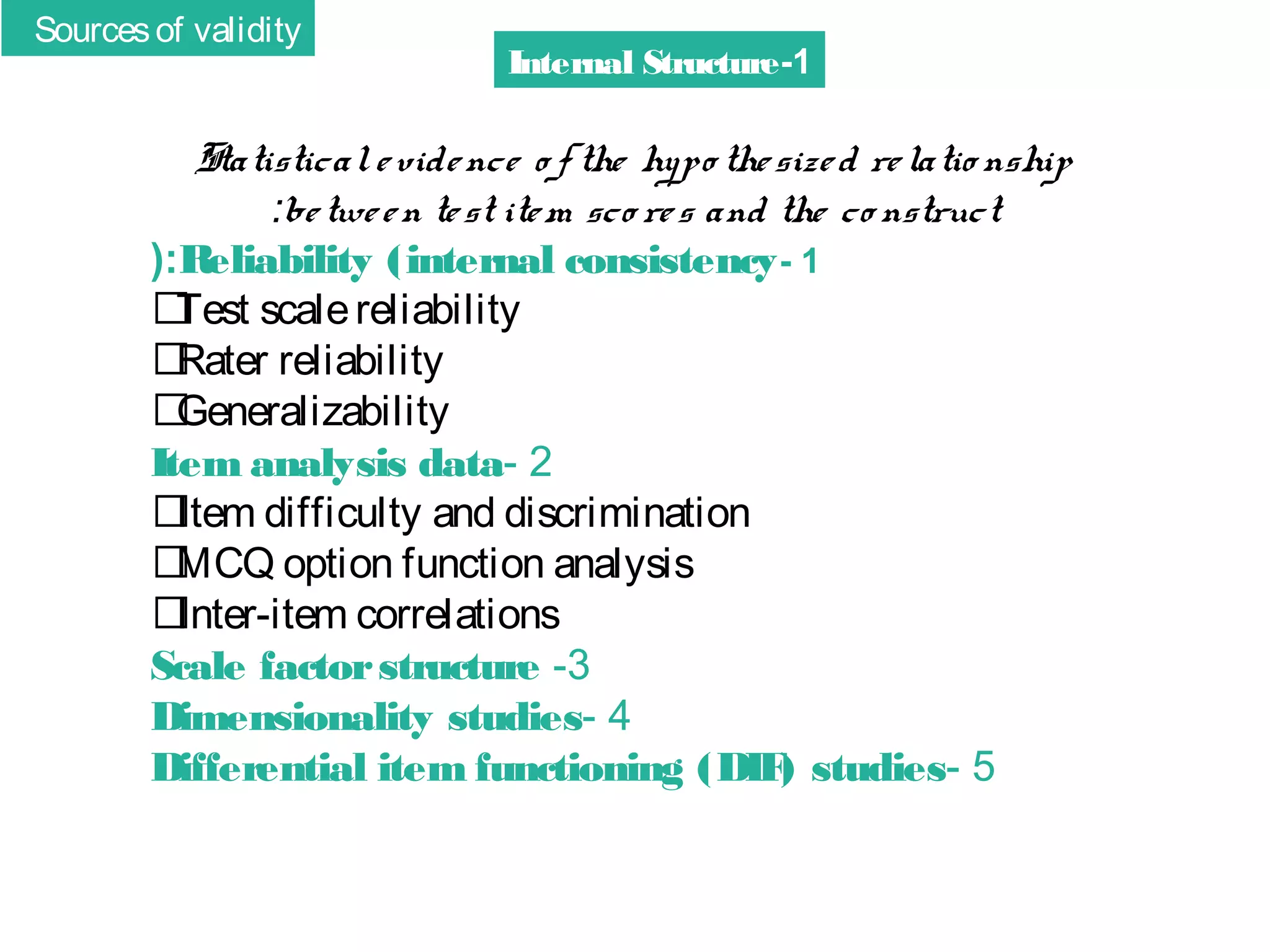

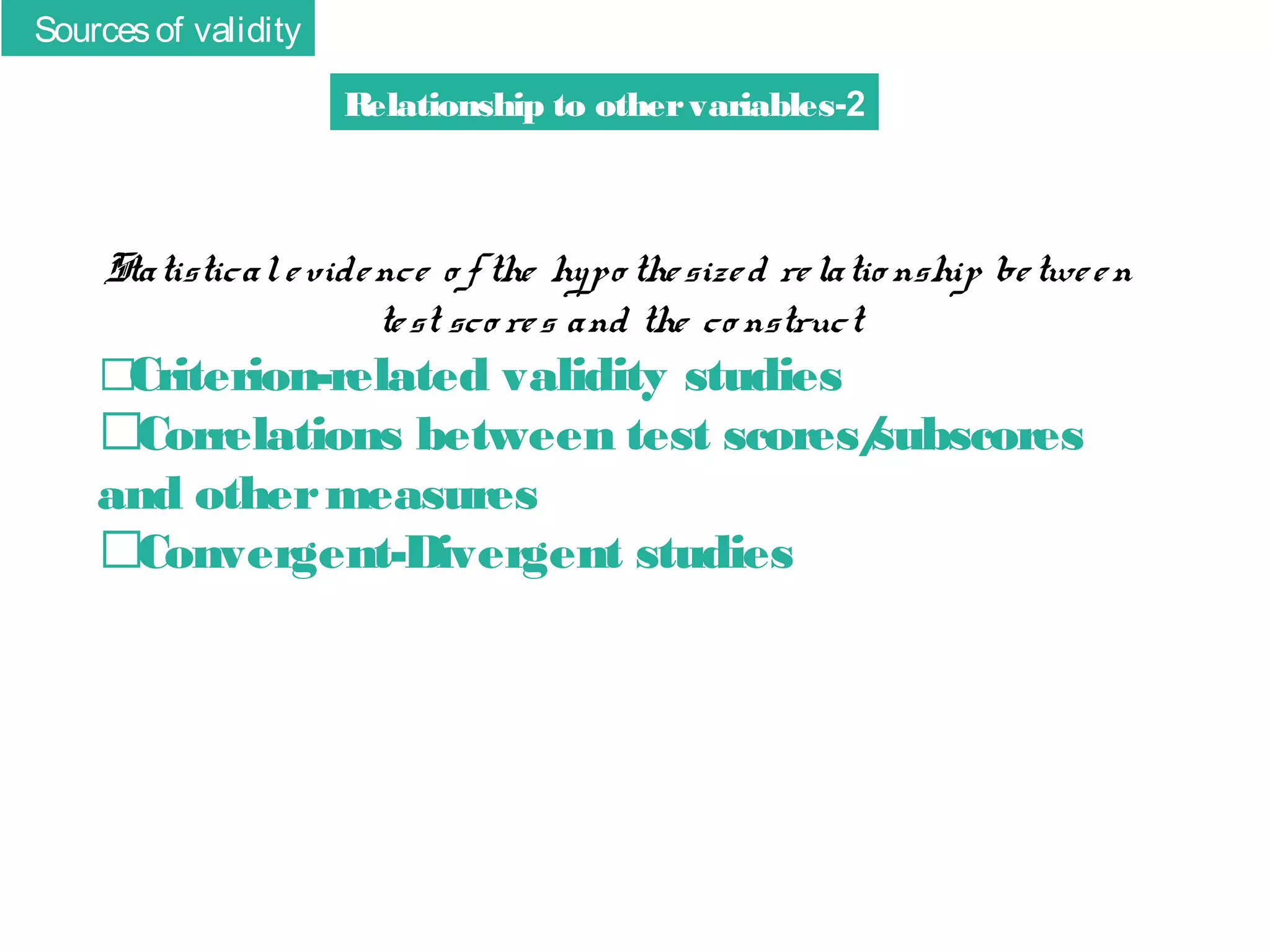

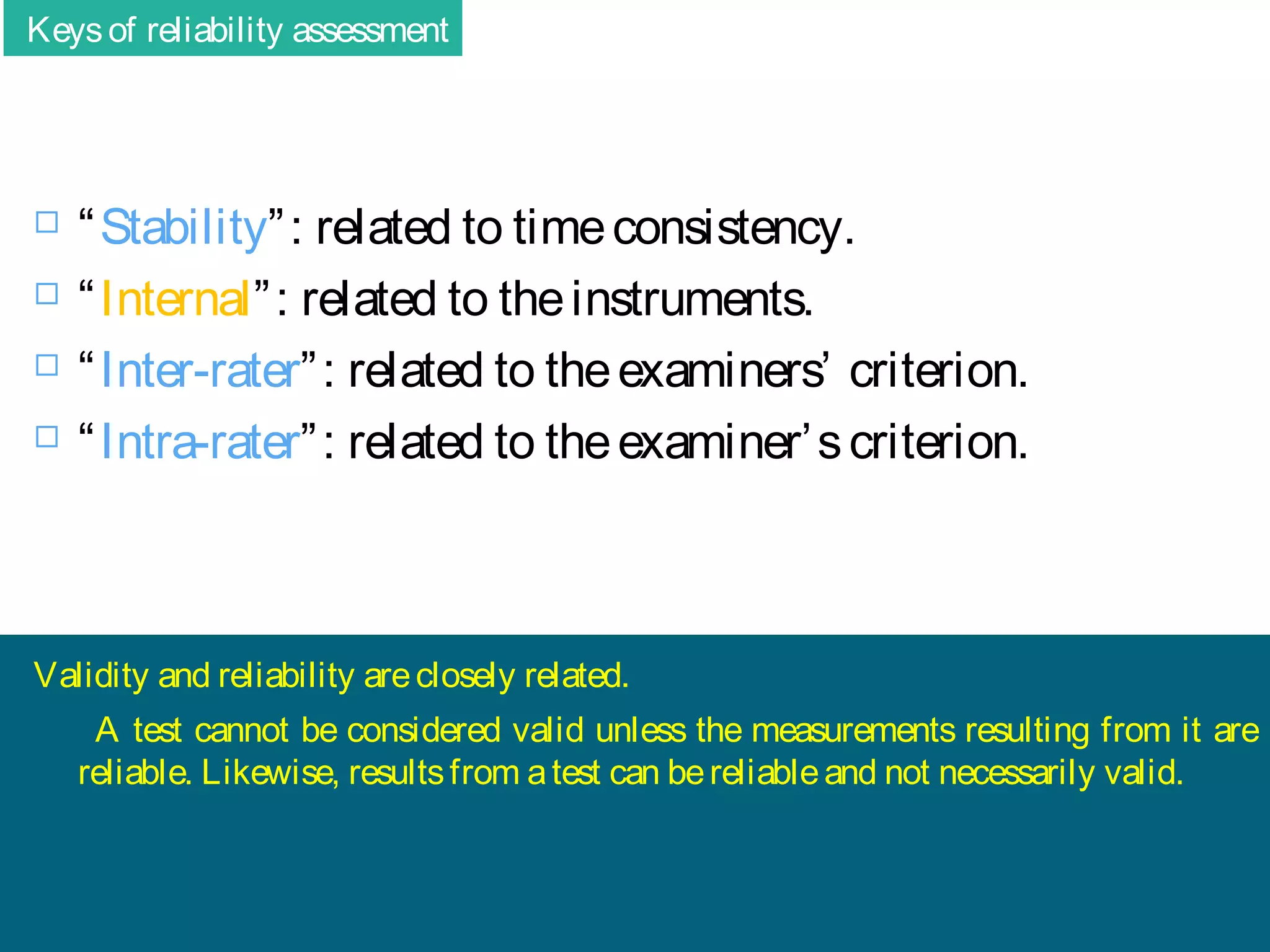

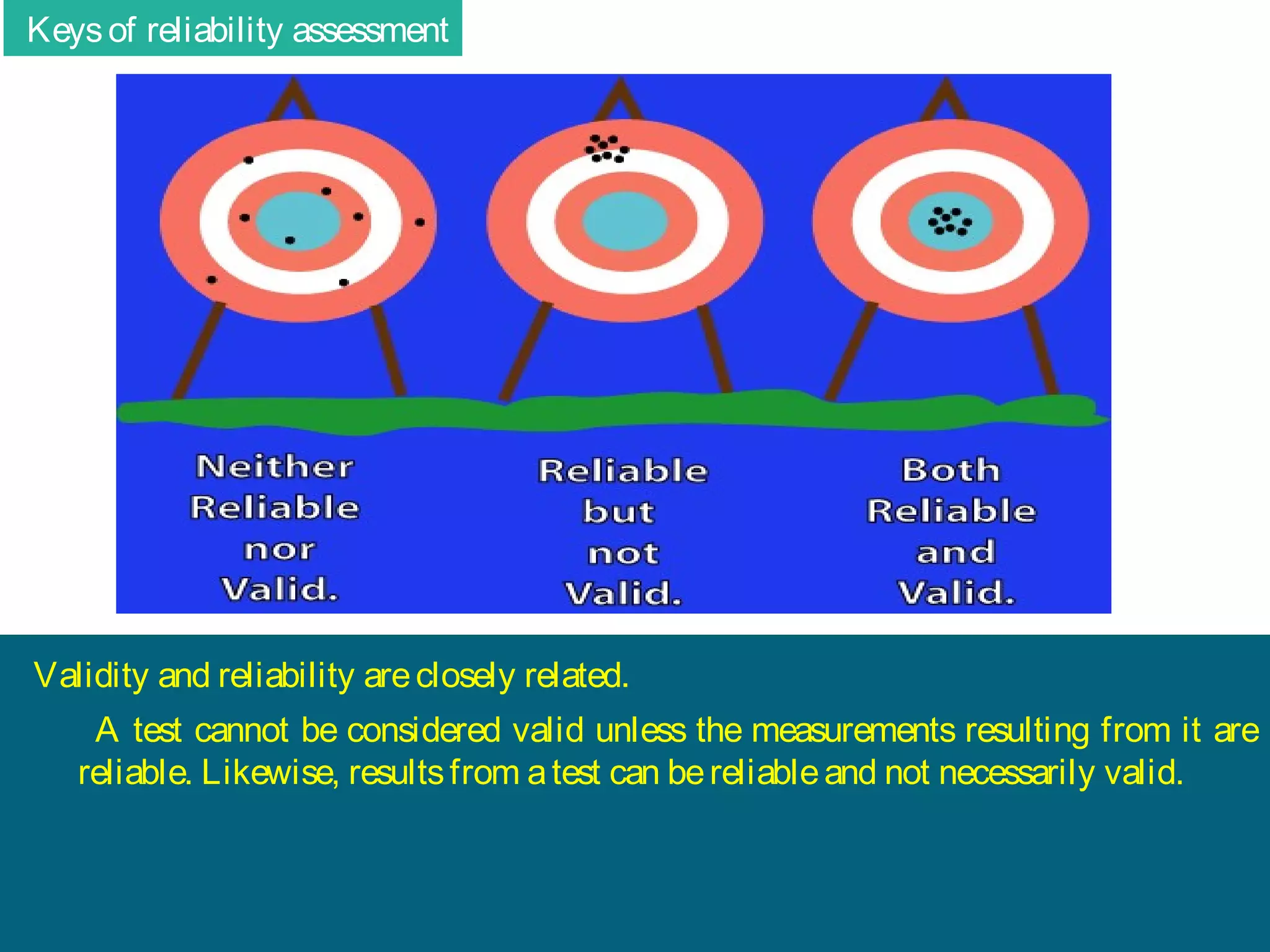

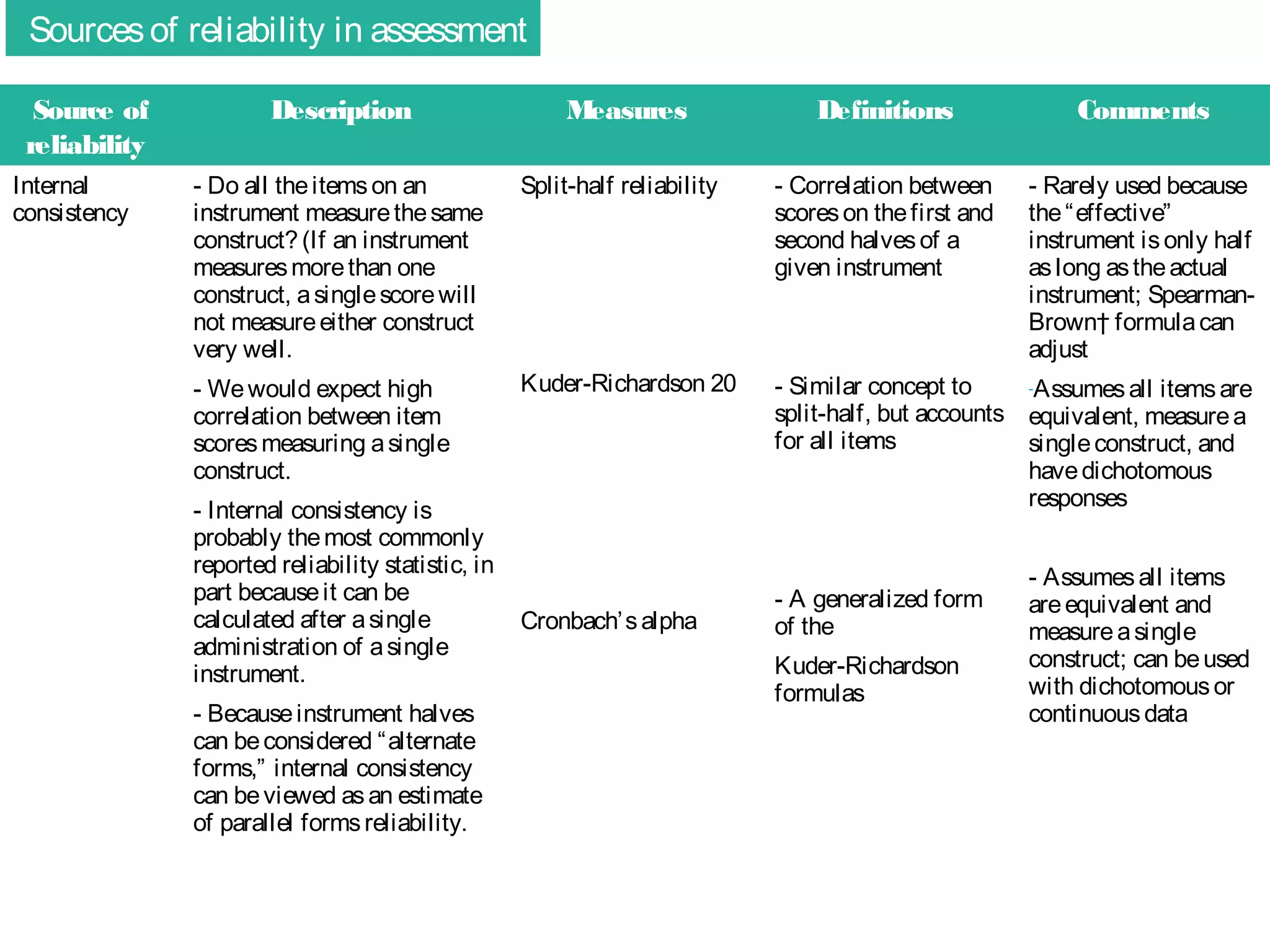

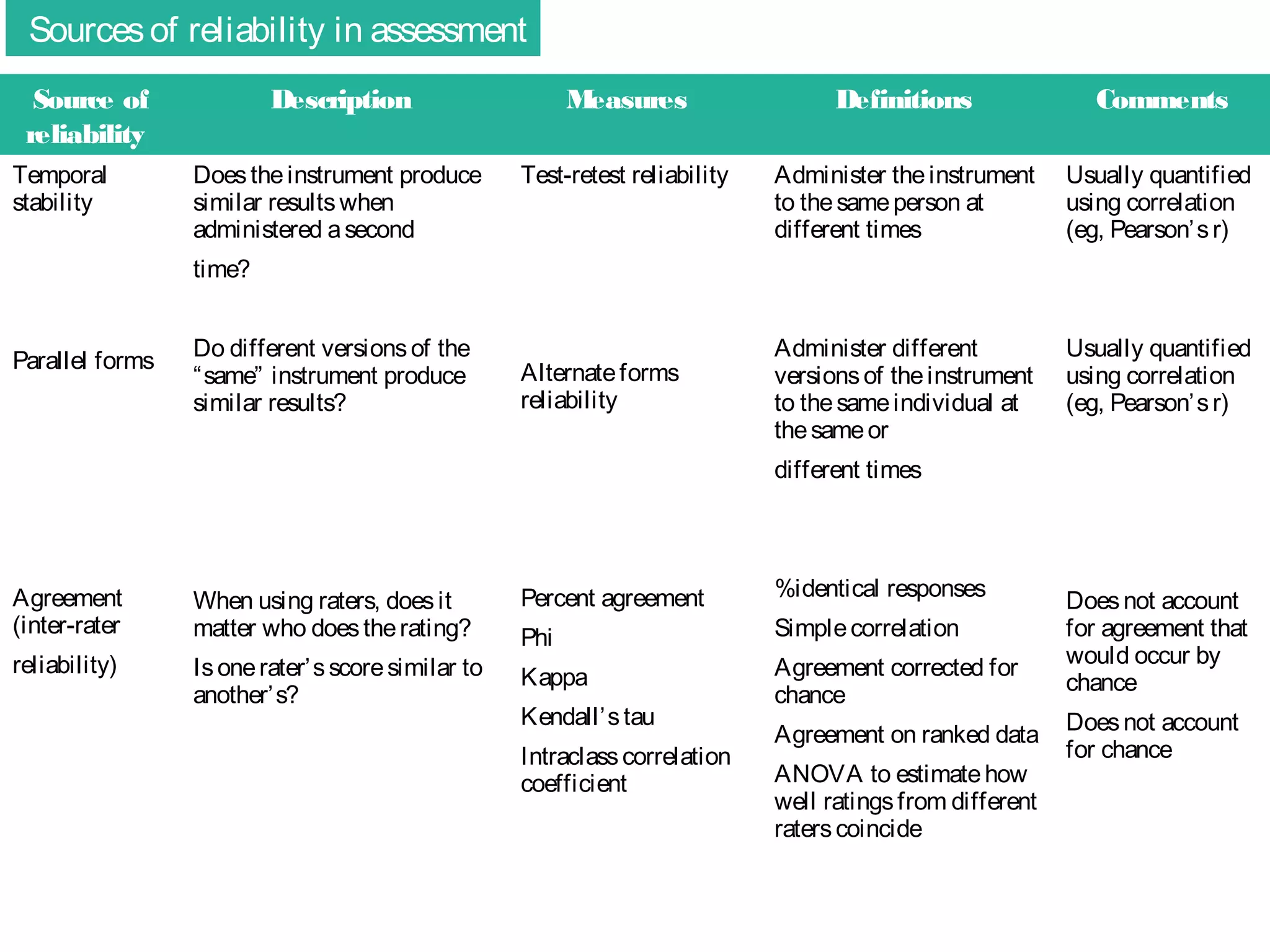

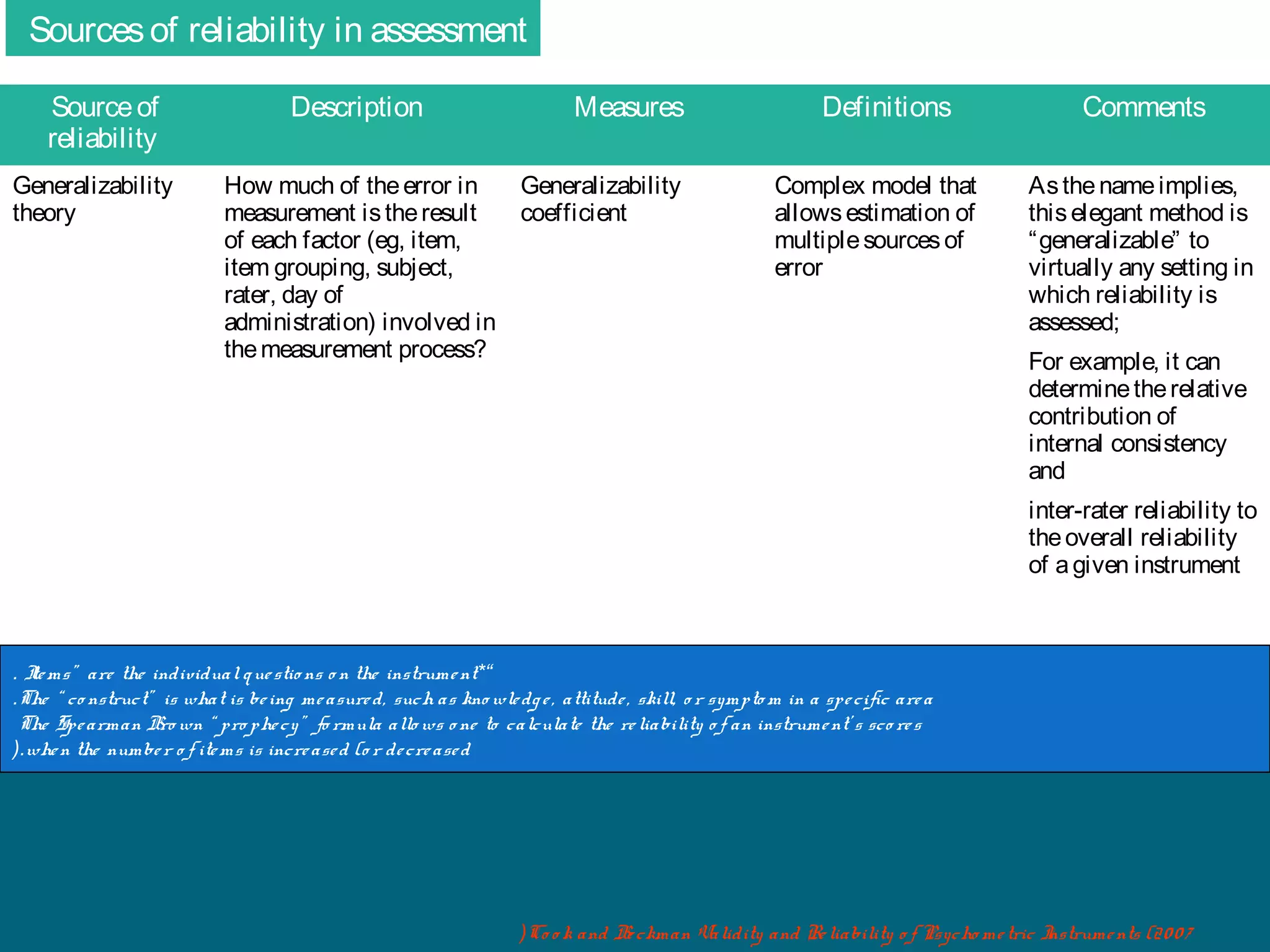

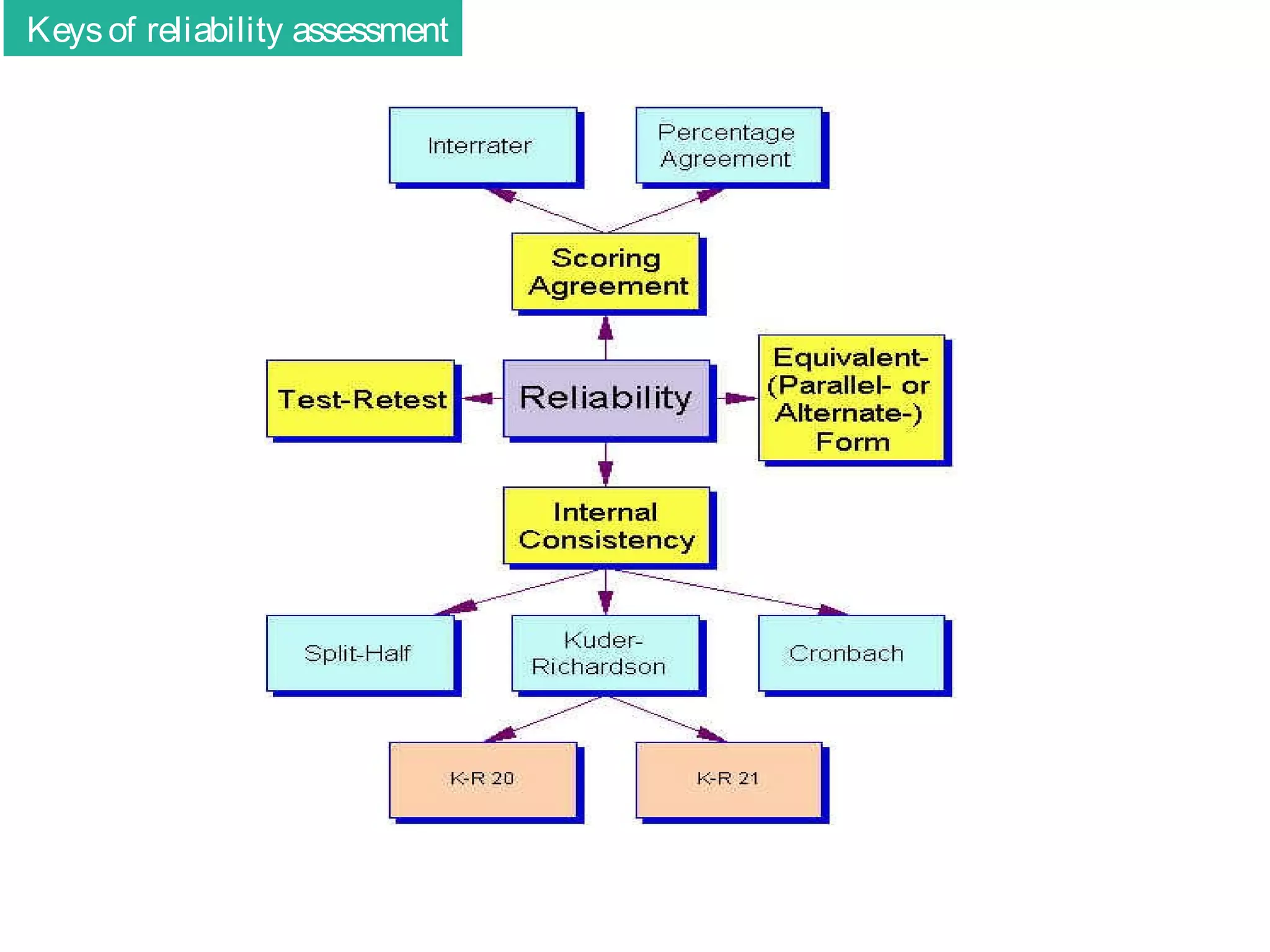

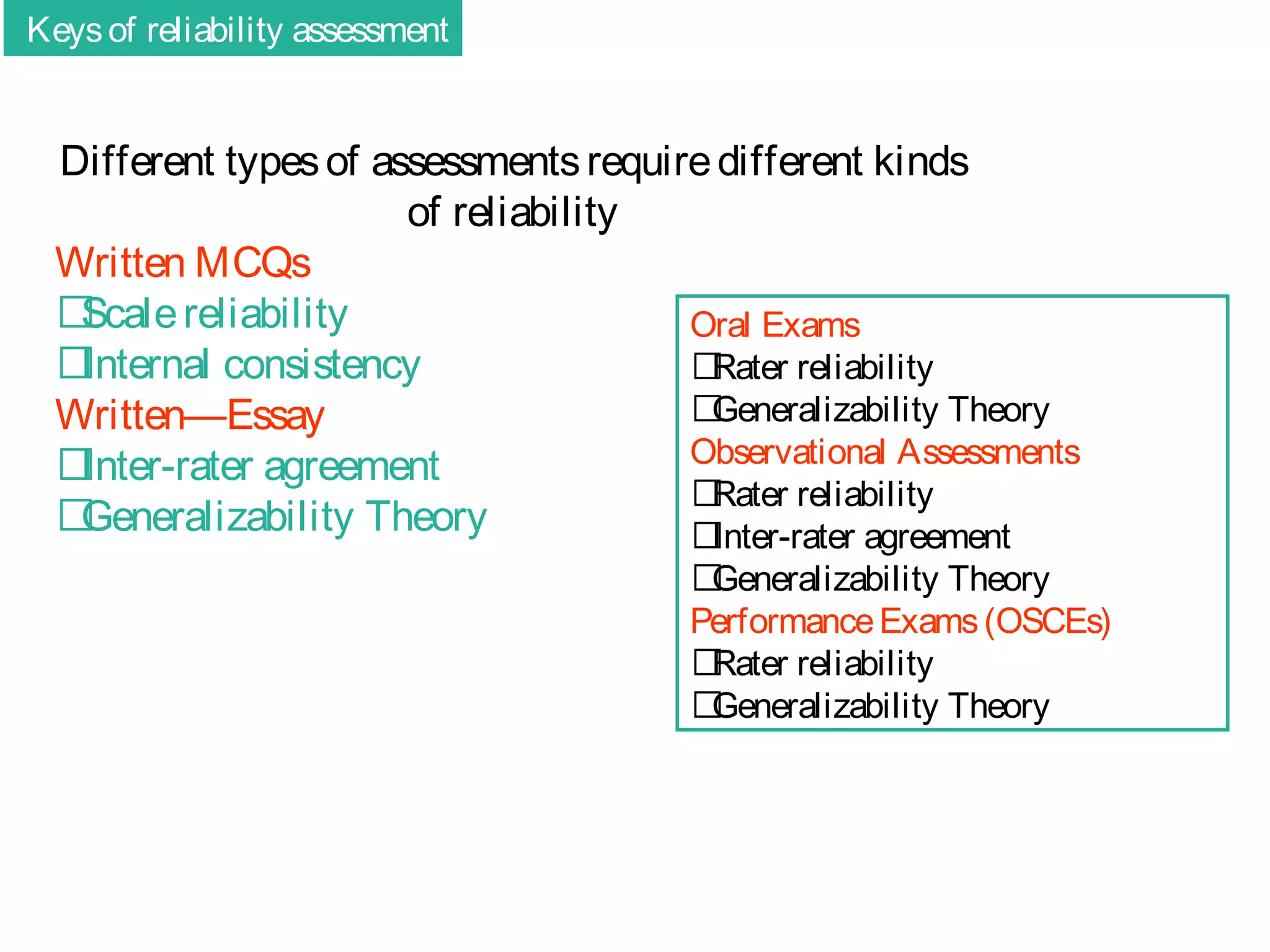

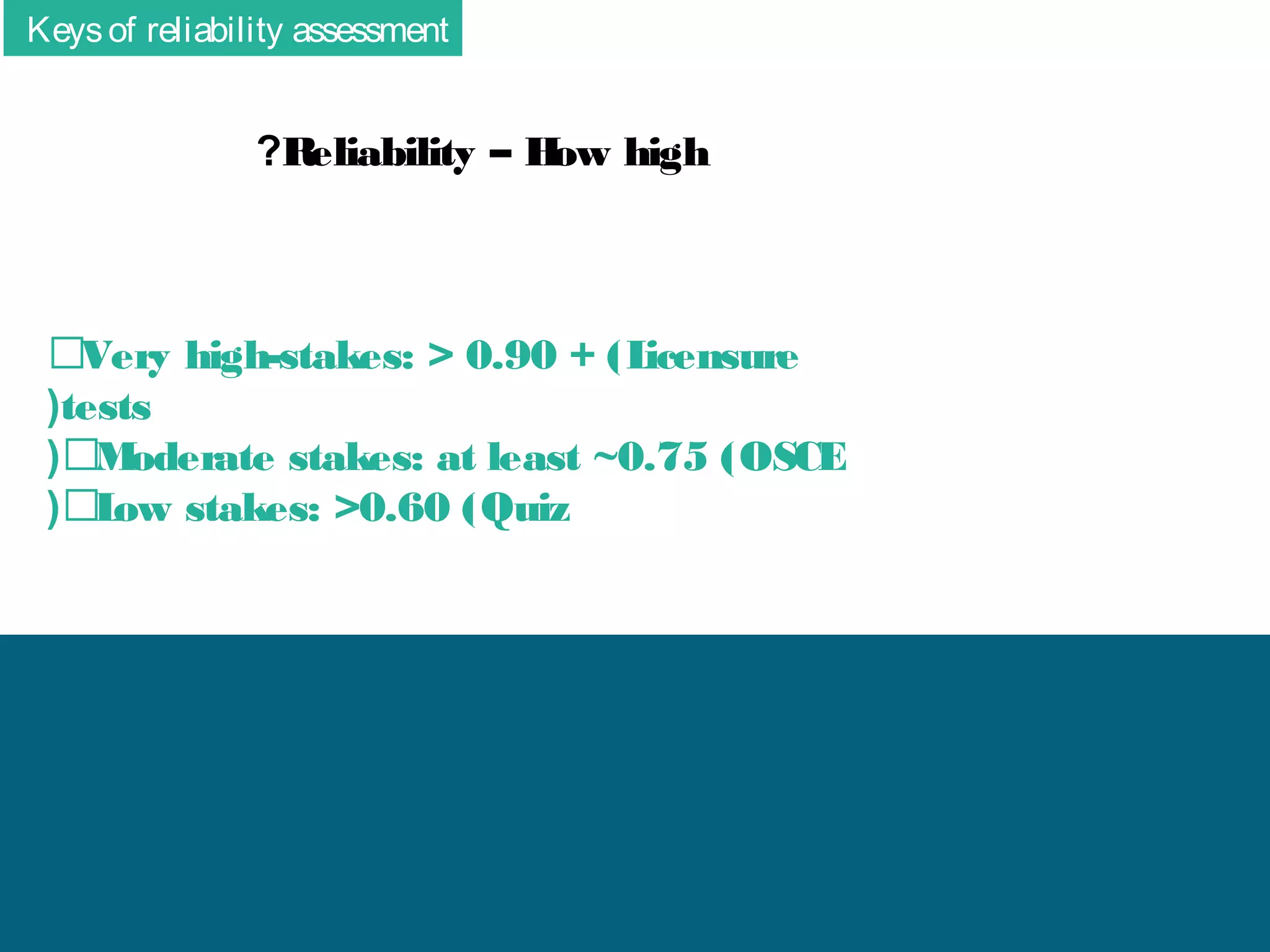

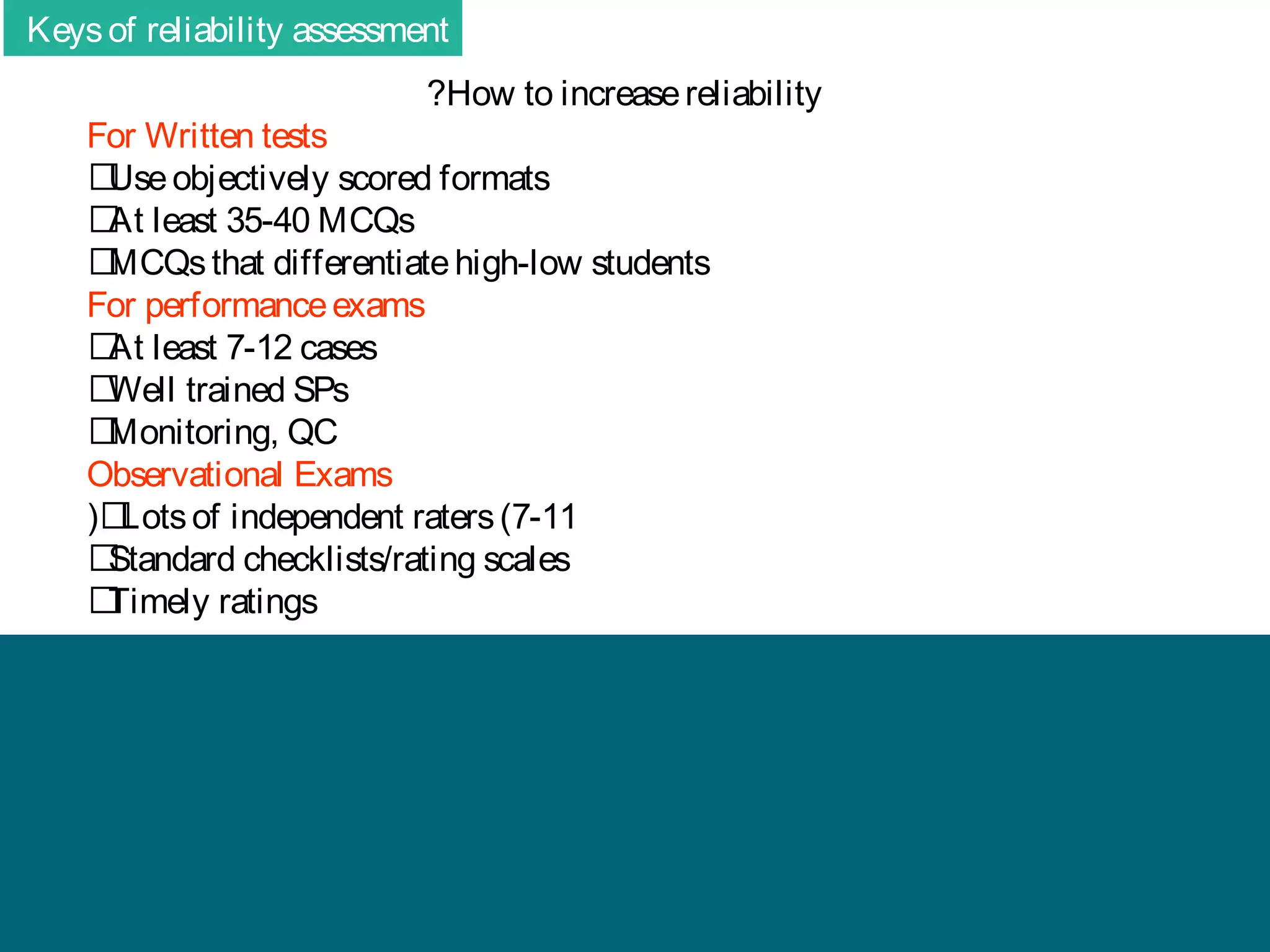

The document discusses the fundamental concepts of validity and reliability in educational assessment, emphasizing that constructs must be measured accurately and consistently. It details methodologies and criteria for assessing validity, including content, construct, and criterion-related validity, and describes the multiple sources of evidence needed for meaningful interpretation. It also highlights the importance of reliability, both internal and external, noting that a valid test must also produce reliable results.