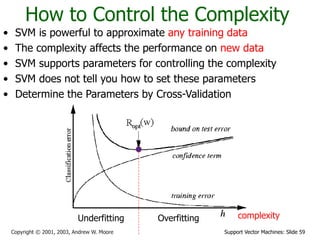

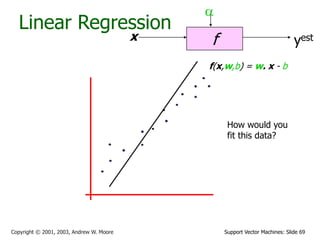

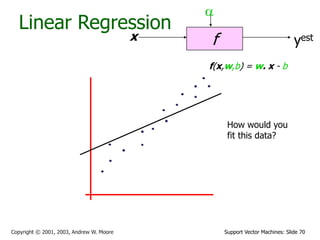

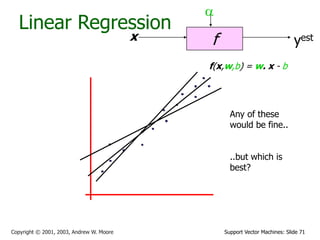

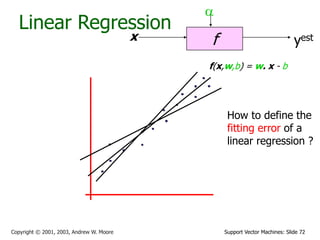

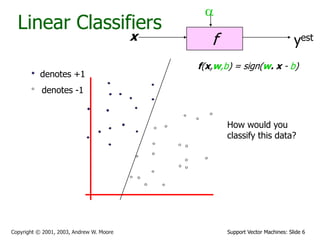

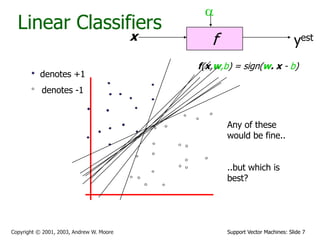

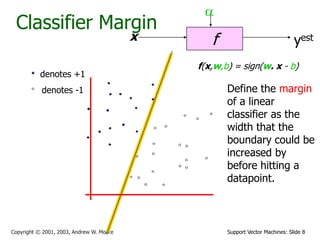

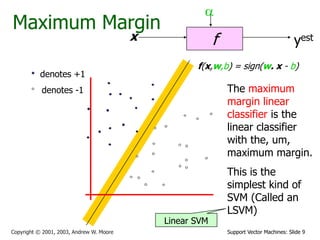

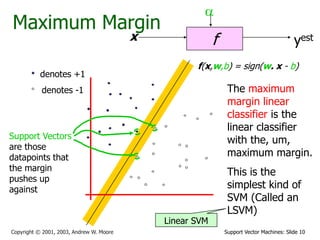

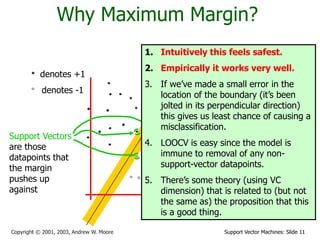

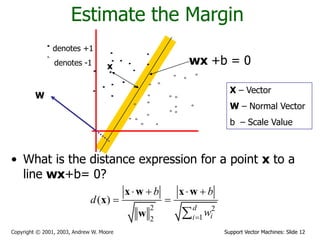

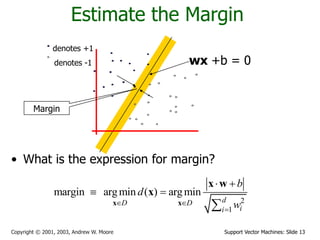

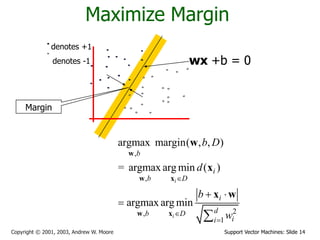

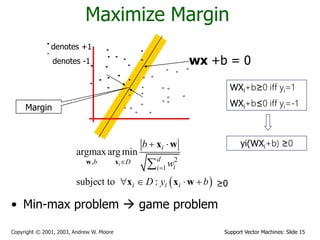

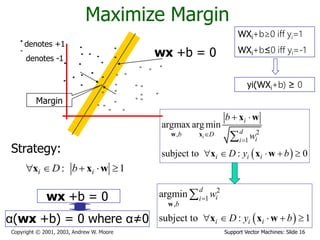

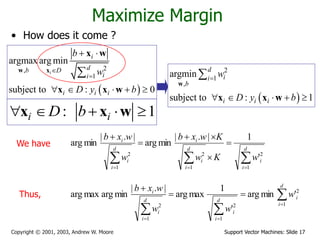

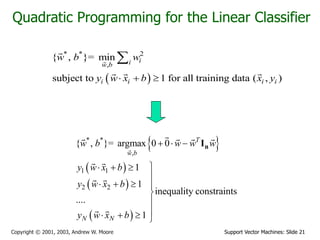

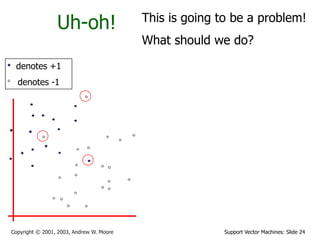

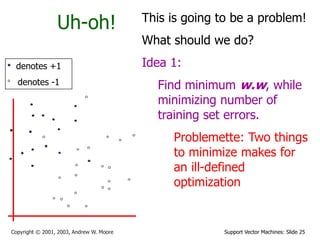

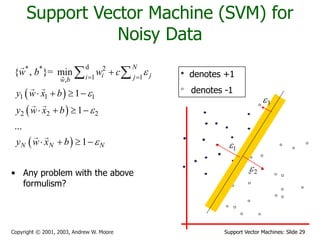

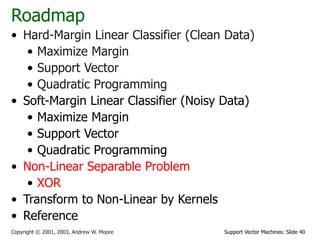

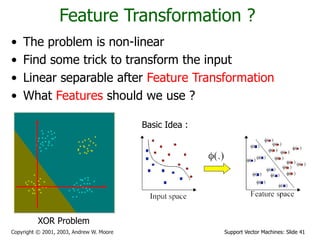

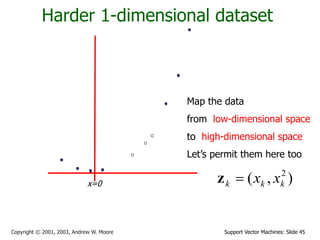

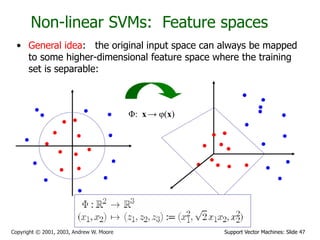

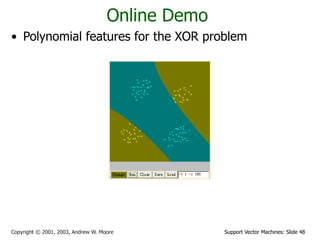

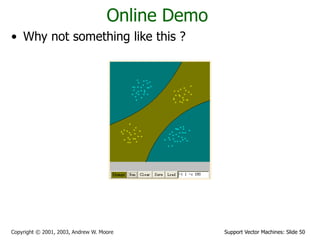

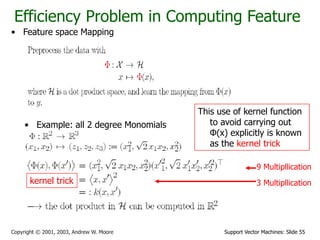

This document provides an overview of support vector machines (SVMs). It begins with hard-margin linear classifiers and how to maximize the margin between two classes, noting that the support vectors are the data points lying on the margin boundaries. It then explains that the maximum-margin linear classifier, or linear SVM, finds the linear decision boundary with the largest margin by solving a quadratic program, and discusses why maximizing the margin is preferable. Next, it introduces soft-margin classifiers to handle data that are not linearly separable and notes that these can still be solved with quadratic programming. Finally, it gives an overview of how kernels turn linear SVMs into non-linear classifiers.
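As a rough illustration of the soft-margin objective described above, the sketch below fits a linear SVM without a QP solver. It swaps quadratic programming for plain full-batch subgradient descent on the regularized hinge loss, so it only approximates the QP solution. The function names `train_linear_svm` and `predict` and the hyperparameters `lam`, `lr`, and `epochs` are hypothetical choices for this example, not taken from the slides.

```python
from typing import List, Tuple

Vector = List[float]


def train_linear_svm(X: List[Vector], y: List[int], lam: float = 0.01,
                     lr: float = 0.1, epochs: int = 500) -> Tuple[Vector, float]:
    """Fit a soft-margin linear SVM by full-batch subgradient descent.

    Minimises  lam/2 * ||w||^2 + (1/n) * sum_i max(0, 1 - y_i (w . x_i + b)).
    Labels must be -1 or +1. The bias b is not regularised.
    """
    n, d = len(X), len(X[0])
    w = [0.0] * d
    b = 0.0
    for _ in range(epochs):
        # Start from the gradient of the regulariser lam/2 * ||w||^2.
        grad_w = [lam * wj for wj in w]
        grad_b = 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:  # inside the margin or misclassified: hinge is active
                grad_w = [g - yi * xj / n for g, xj in zip(grad_w, xi)]
                grad_b -= yi / n
        # Take one subgradient step on w and b.
        w = [wj - lr * g for wj, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w: Vector, b: float, x: Vector) -> int:
    """Return the predicted class (+1 or -1) for a single point."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1
```

On separable data the learned `w` points across the gap between the classes. Only points with margin below 1 contribute to the update, which mirrors the role of the support vectors in the QP formulation.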

![Support Vector Machines: Slide 53

Copyright © 2001, 2003, Andrew W. Moore

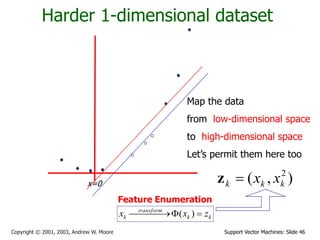

Towards Infinite Dimensions of Features

.......

!

4

1

!

3

1

!

2

1

!

1

1

!

1 4

3

2

1

0

x

x

x

x

x

i

e

i

i

x

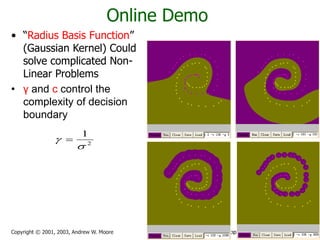

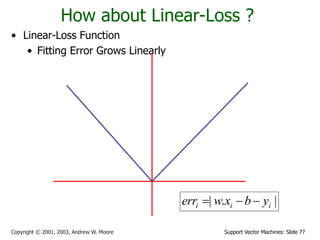

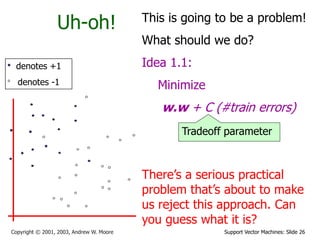

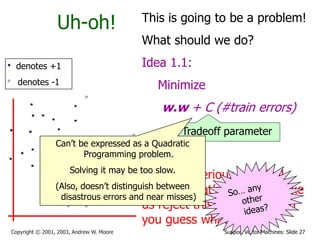

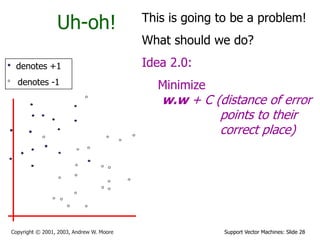

• Enuermate polynomial features of all degrees ?

• Taylor Expension of exponential function

zk = ( radial basis functions of xk )

2

2

|

|

exp

)

(

]

[

j

k

k

j

k φ

j

c

x

x

z](https://image.slidesharecdn.com/unit4svmandavr-230820025744-6450747c/85/Unit-4-SVM-and-AVR-ppt-53-320.jpg)
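The slide links the Taylor series of $e^{x}$ to "polynomial features of all degrees". A minimal sketch of that idea, assuming a one-dimensional input and a unit-width Gaussian kernel $k(x, y) = e^{-(x-y)^2}$: since $e^{-(x-y)^2} = e^{-x^2} e^{-y^2} \sum_{i \ge 0} (2xy)^i / i!$, the kernel is the inner product of an infinite feature vector with coordinates $e^{-x^2}\sqrt{2^i / i!}\,x^i$. Truncating that vector at degree $q$ gives an approximation that improves as $q$ grows. The names `rbf_kernel`, `truncated_features`, and `approx_kernel` are hypothetical.

```python
import math
from typing import List


def rbf_kernel(x: float, y: float) -> float:
    """Gaussian RBF kernel with unit width: exp(-(x - y)^2)."""
    return math.exp(-(x - y) ** 2)


def truncated_features(x: float, degree: int) -> List[float]:
    """First degree+1 coordinates of an explicit feature map for rbf_kernel.

    Uses exp(-(x-y)^2) = exp(-x^2) * exp(-y^2) * sum_i (2xy)^i / i!,
    so coordinate i is exp(-x^2) * sqrt(2^i / i!) * x^i.
    """
    scale = math.exp(-x * x)
    return [scale * math.sqrt(2 ** i / math.factorial(i)) * x ** i
            for i in range(degree + 1)]


def approx_kernel(x: float, y: float, degree: int) -> float:
    """Inner product of the two truncated feature vectors."""
    fx = truncated_features(x, degree)
    fy = truncated_features(y, degree)
    return sum(a * b for a, b in zip(fx, fy))
```

For example, comparing `approx_kernel(1.0, 0.5, q)` with `rbf_kernel(1.0, 0.5)` for `q = 1, 6, 12` shows the gap shrinking quickly. An RBF kernel therefore behaves like a dot product over polynomial features of every degree, without ever building that infinite vector.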

![Support Vector Machines: Slide 56

Copyright © 2001, 2003, Andrew W. Moore

Common SVM basis functions

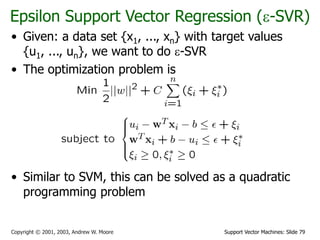

zk = ( polynomial terms of xk of degree 1 to q )

zk = ( radial basis functions of xk )

zk = ( sigmoid functions of xk )

2

2

|

|

exp

)

(

]

[

j

k

k

j

k φ

j

c

x

x

z](https://image.slidesharecdn.com/unit4svmandavr-230820025744-6450747c/85/Unit-4-SVM-and-AVR-ppt-56-320.jpg)
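The three basis-function families on the slide can be sketched as explicit feature constructors. This is an illustration under stated assumptions, not the slides' own code. The RBF terms use the unit-width form $\exp(-\lVert \mathbf{x}_k - \mathbf{c}_j \rVert_2^2)$. The sigmoid terms are assumed to be $\tanh(\kappa\, \mathbf{x}_k \cdot \mathbf{c}_j + \theta)$, because the slide does not give a formula. The centres $\mathbf{c}_j$, the parameters `kappa` and `theta`, and all function names are hypothetical.

```python
import math
from itertools import combinations_with_replacement
from typing import List, Sequence

Vector = Sequence[float]


def polynomial_terms(x: Vector, q: int) -> List[float]:
    """All monomials of the entries of x with total degree 1 to q."""
    terms = []
    for degree in range(1, q + 1):
        # Each multiset of indices of size `degree` is one monomial.
        for idx in combinations_with_replacement(range(len(x)), degree):
            terms.append(math.prod(x[i] for i in idx))
    return terms


def rbf_terms(x: Vector, centres: List[Vector]) -> List[float]:
    """z[j] = exp(-||x - c_j||^2) for each centre c_j (unit width assumed)."""
    return [math.exp(-sum((xi - ci) ** 2 for xi, ci in zip(x, c)))
            for c in centres]


def sigmoid_terms(x: Vector, centres: List[Vector],
                  kappa: float = 1.0, theta: float = 0.0) -> List[float]:
    """z[j] = tanh(kappa * (x . c_j) + theta); kappa and theta are assumed."""
    return [math.tanh(kappa * sum(xi * ci for xi, ci in zip(x, c)) + theta)
            for c in centres]
```

For a $d$-dimensional input, `polynomial_terms` returns $\binom{d+q}{q} - 1$ values, so $d = 3$, $q = 3$ already gives 19 features. This rapid growth is why SVMs use kernels instead of building $\mathbf{z}_k$ explicitly.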