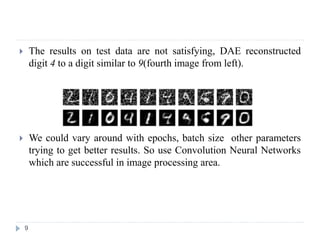

1) The document uses a denoising autoencoder to extract features from MNIST handwritten-digit data, with the goal of improving classification accuracy. It loads and preprocesses the noisy data, trains a denoising autoencoder on it, takes the hidden-layer activations as new features, and trains a classifier on those features.
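The steps in point 1 can be sketched in plain NumPy. This is only an illustration under stated assumptions, not the document's actual code: the data is a small synthetic stand-in for flattened MNIST images, the corruption is Gaussian noise, and the layer size, learning rate, and names such as `add_gaussian_noise` and `encode` are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def add_gaussian_noise(x, std=0.3):
    """Corrupt inputs with Gaussian noise (an assumed noise model), clipped to [0, 1]."""
    return np.clip(x + rng.normal(0.0, std, size=x.shape), 0.0, 1.0)

# Synthetic stand-in for MNIST: 10 binary "prototype" patterns of 64 pixels each.
prototypes = (rng.random((10, 64)) > 0.5).astype(float)
labels = rng.integers(0, 10, size=256)
x_clean = prototypes[labels]
x_noisy = add_gaussian_noise(x_clean)

# One hidden layer; the sizes are arbitrary choices for this sketch.
n_hidden = 16
W1 = rng.normal(0.0, 0.1, (64, n_hidden))
b1 = np.zeros(n_hidden)
W2 = rng.normal(0.0, 0.1, (n_hidden, 64))
b2 = np.zeros(64)

def encode(x):
    """Hidden-layer activations, used later as the new features."""
    return sigmoid(x @ W1 + b1)

def reconstruct(x):
    return sigmoid(encode(x) @ W2 + b2)

def mse(x_in, x_target):
    return float(np.mean((reconstruct(x_in) - x_target) ** 2))

loss_before = mse(x_noisy, x_clean)

# Full-batch gradient descent: the input is noisy, the target is clean.
lr = 0.5
for _ in range(300):
    h = encode(x_noisy)
    out = sigmoid(h @ W2 + b2)
    d_out = (out - x_clean) * out * (1.0 - out) / len(x_clean)
    d_h = (d_out @ W2.T) * h * (1.0 - h)
    W2 -= lr * (h.T @ d_out)
    b2 -= lr * d_out.sum(axis=0)
    W1 -= lr * (x_noisy.T @ d_h)
    b1 -= lr * d_h.sum(axis=0)

loss_after = mse(x_noisy, x_clean)

# The hidden activations become the feature matrix for a downstream classifier.
features = encode(x_noisy)
```

The key design point is that the network receives `x_noisy` but is scored against `x_clean`, so the hidden layer has to capture structure that survives the corruption. Any classifier can then be trained on `features` together with `labels`.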

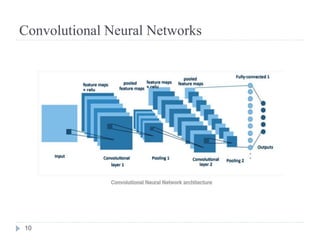

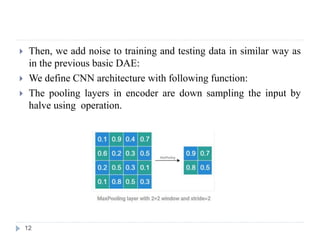

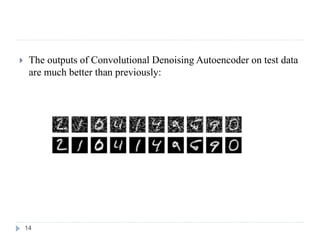

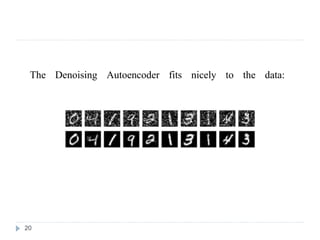

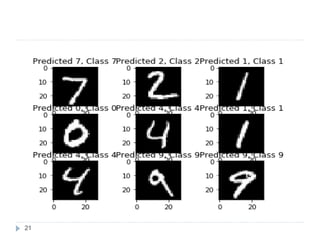

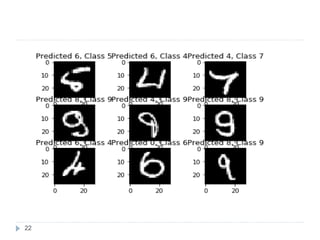

2) A convolutional denoising autoencoder is constructed and trained on noisy MNIST data, producing better reconstructions than a basic denoising autoencoder.
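A convolutional denoising autoencoder of the kind described in point 2 might look like the following Keras sketch. The layer counts, filter sizes, loss, and the function name `build_conv_denoising_autoencoder` are assumptions chosen for illustration; the document's actual architecture is not given here.

```python
from tensorflow import keras
from tensorflow.keras import layers

def build_conv_denoising_autoencoder():
    """Build a small convolutional autoencoder for 28x28 grayscale images."""
    inputs = keras.Input(shape=(28, 28, 1))

    # Encoder: two conv + pooling stages reduce 28x28 to 7x7.
    x = layers.Conv2D(32, 3, activation="relu", padding="same")(inputs)
    x = layers.MaxPooling2D(2, padding="same")(x)
    x = layers.Conv2D(32, 3, activation="relu", padding="same")(x)
    encoded = layers.MaxPooling2D(2, padding="same")(x)

    # Decoder: two conv + upsampling stages restore 7x7 to 28x28.
    x = layers.Conv2D(32, 3, activation="relu", padding="same")(encoded)
    x = layers.UpSampling2D(2)(x)
    x = layers.Conv2D(32, 3, activation="relu", padding="same")(x)
    x = layers.UpSampling2D(2)(x)
    outputs = layers.Conv2D(1, 3, activation="sigmoid", padding="same")(x)

    model = keras.Model(inputs, outputs)
    # Pixel-wise binary cross-entropy assumes inputs scaled to [0, 1].
    model.compile(optimizer="adam", loss="binary_crossentropy")
    return model

model = build_conv_denoising_autoencoder()
```

Training would then pass the noisy images as inputs and the clean images as targets, for example `model.fit(x_noisy, x_clean, epochs=..., batch_size=...)`, where `x_noisy` and `x_clean` are hypothetical arrays of shape `(n, 28, 28, 1)`.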

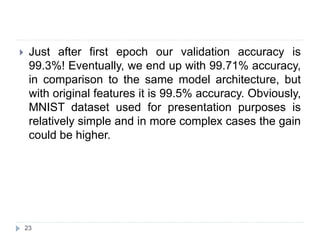

3) The pipeline extracts features from a denoising autoencoder trained on noisy MNIST data, uses those features to train a classifier, and achieves a validation accuracy of 99.71%, higher than the accuracy obtained by training the same classifier on the original features.
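The comparison in point 3 amounts to training one classifier twice, once on the original features and once on the encoded ones, and scoring both on the same validation split. The sketch below shows that procedure only. The nearest-centroid classifier, the synthetic data, and the random-projection `toy_encode` (a placeholder for a trained encoder) are all assumptions, so the numbers it produces say nothing about the reported 99.71%.

```python
import numpy as np

def nearest_centroid_accuracy(x_train, y_train, x_val, y_val):
    """Fit a nearest-centroid classifier and return its validation accuracy."""
    classes = np.unique(y_train)
    centroids = np.stack([x_train[y_train == c].mean(axis=0) for c in classes])
    # Squared Euclidean distance from every validation row to every centroid.
    dists = ((x_val[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    predictions = classes[dists.argmin(axis=1)]
    return float((predictions == y_val).mean())

def compare_feature_sets(encode, x_train, y_train, x_val, y_val):
    """Score the same classifier on original features and on encoded features."""
    return {
        "original": nearest_centroid_accuracy(x_train, y_train, x_val, y_val),
        "autoencoder": nearest_centroid_accuracy(
            encode(x_train), y_train, encode(x_val), y_val
        ),
    }

# Synthetic stand-in data: noisy copies of 10 random prototype vectors.
rng = np.random.default_rng(0)
prototypes = rng.random((10, 64))
y = rng.integers(0, 10, size=500)
x = np.clip(prototypes[y] + rng.normal(0.0, 0.3, (500, 64)), 0.0, 1.0)

# Placeholder encoder: a fixed random projection, NOT a trained autoencoder.
projection = rng.normal(0.0, 0.3, (64, 16))

def toy_encode(batch):
    return 1.0 / (1.0 + np.exp(-(batch @ projection)))

scores = compare_feature_sets(toy_encode, x[:400], y[:400], x[400:], y[400:])
```

Keeping the classifier and the train/validation split identical across both runs is what makes the two accuracies comparable; only the feature representation changes.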