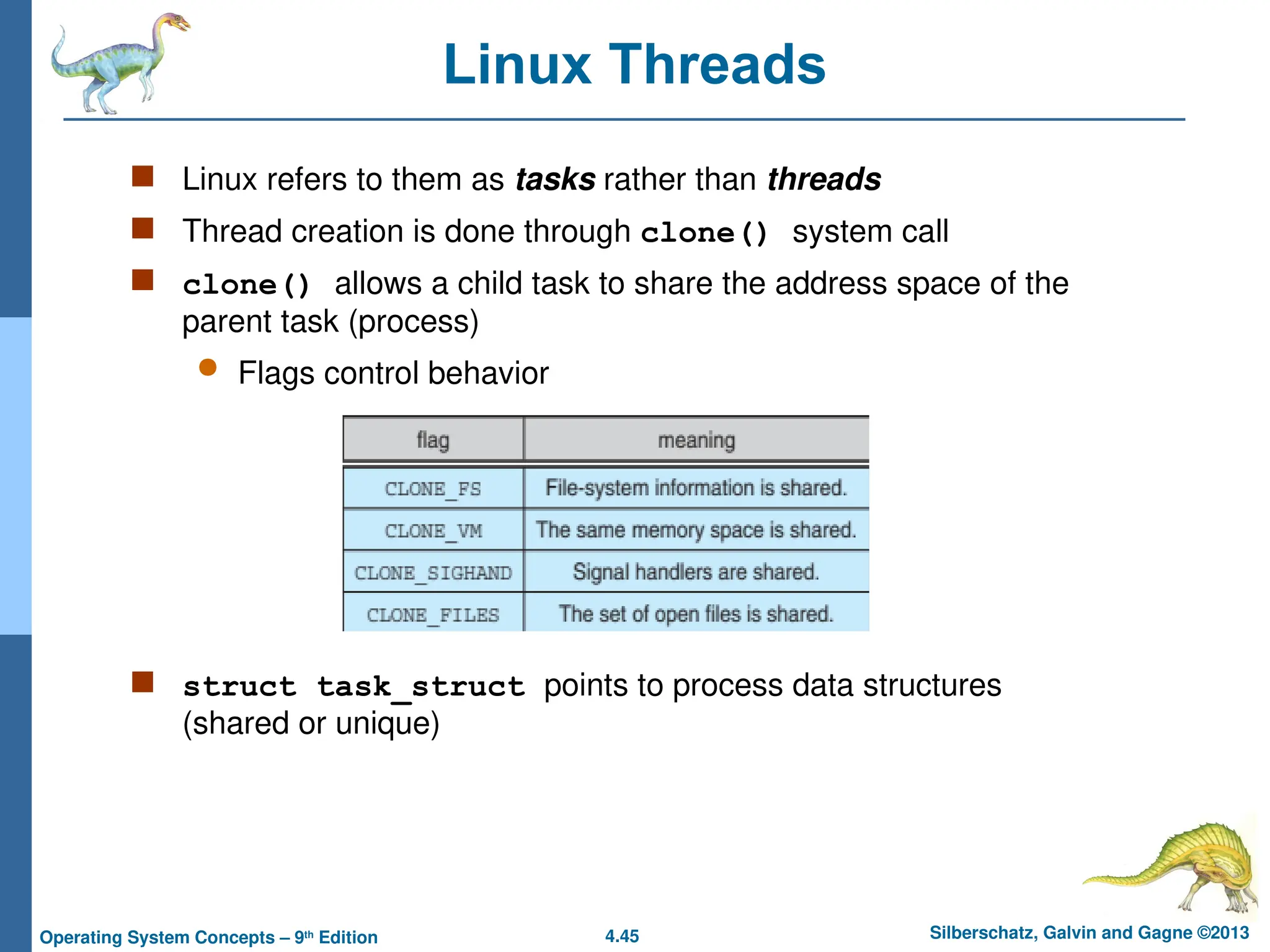

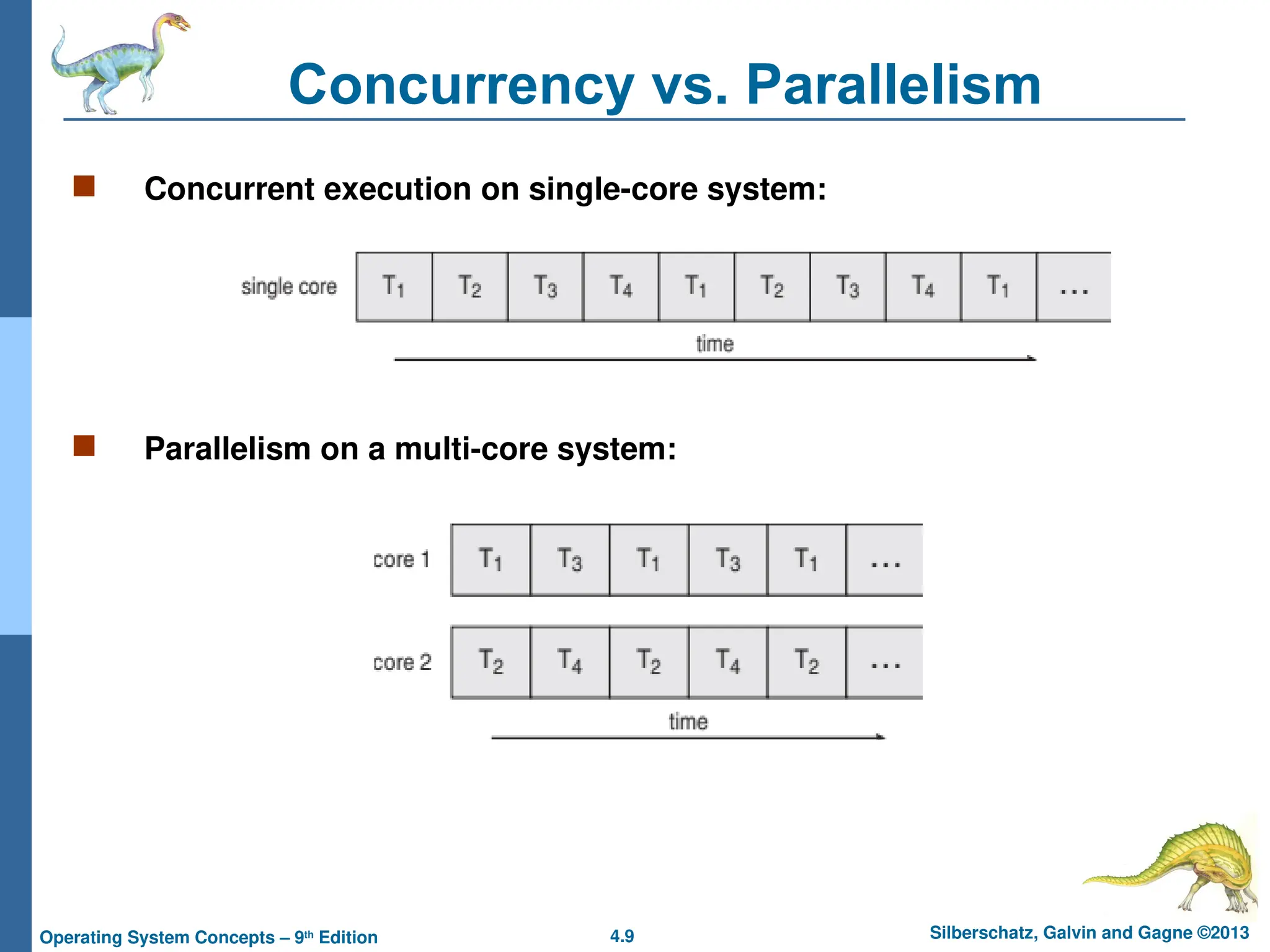

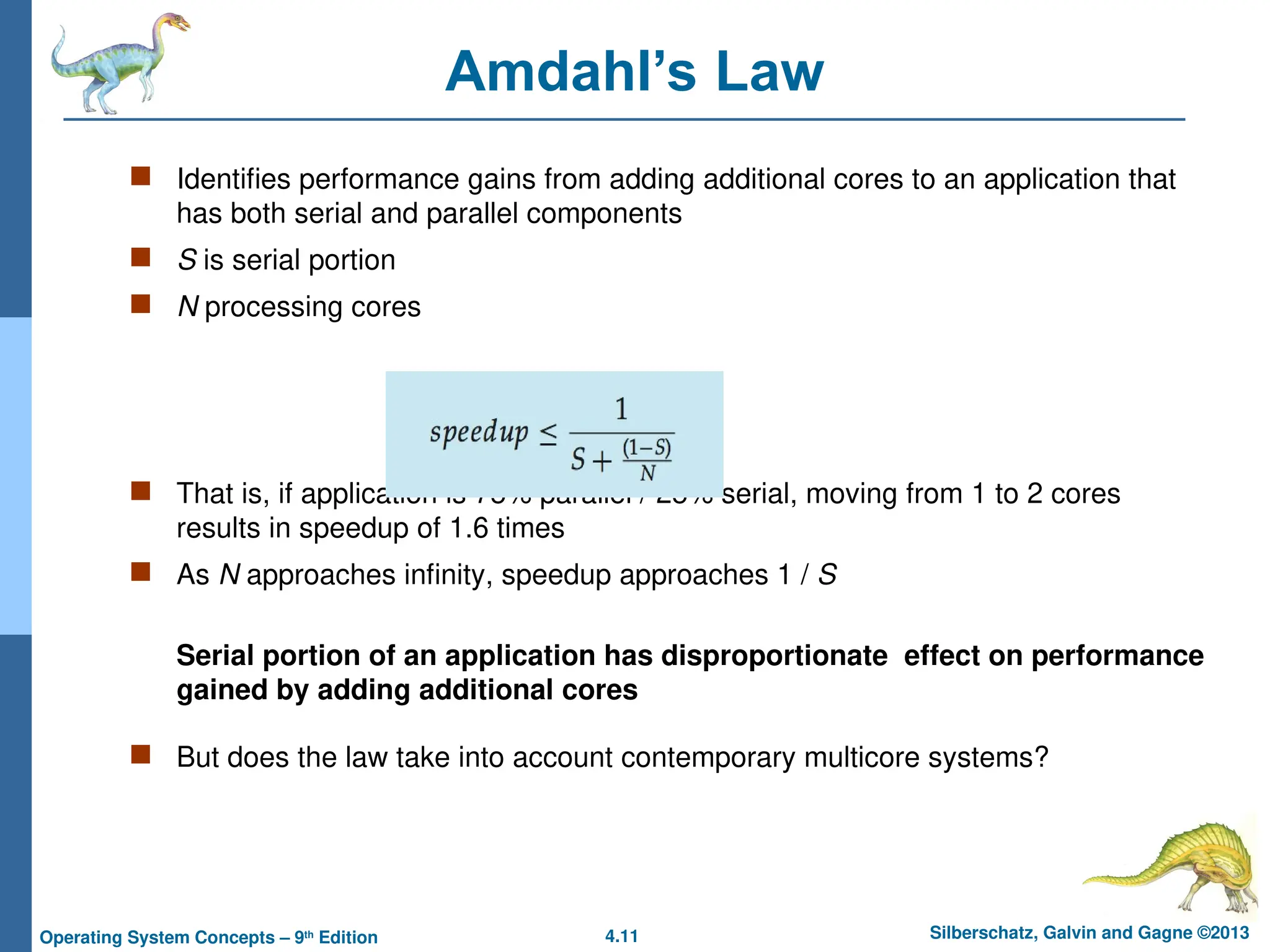

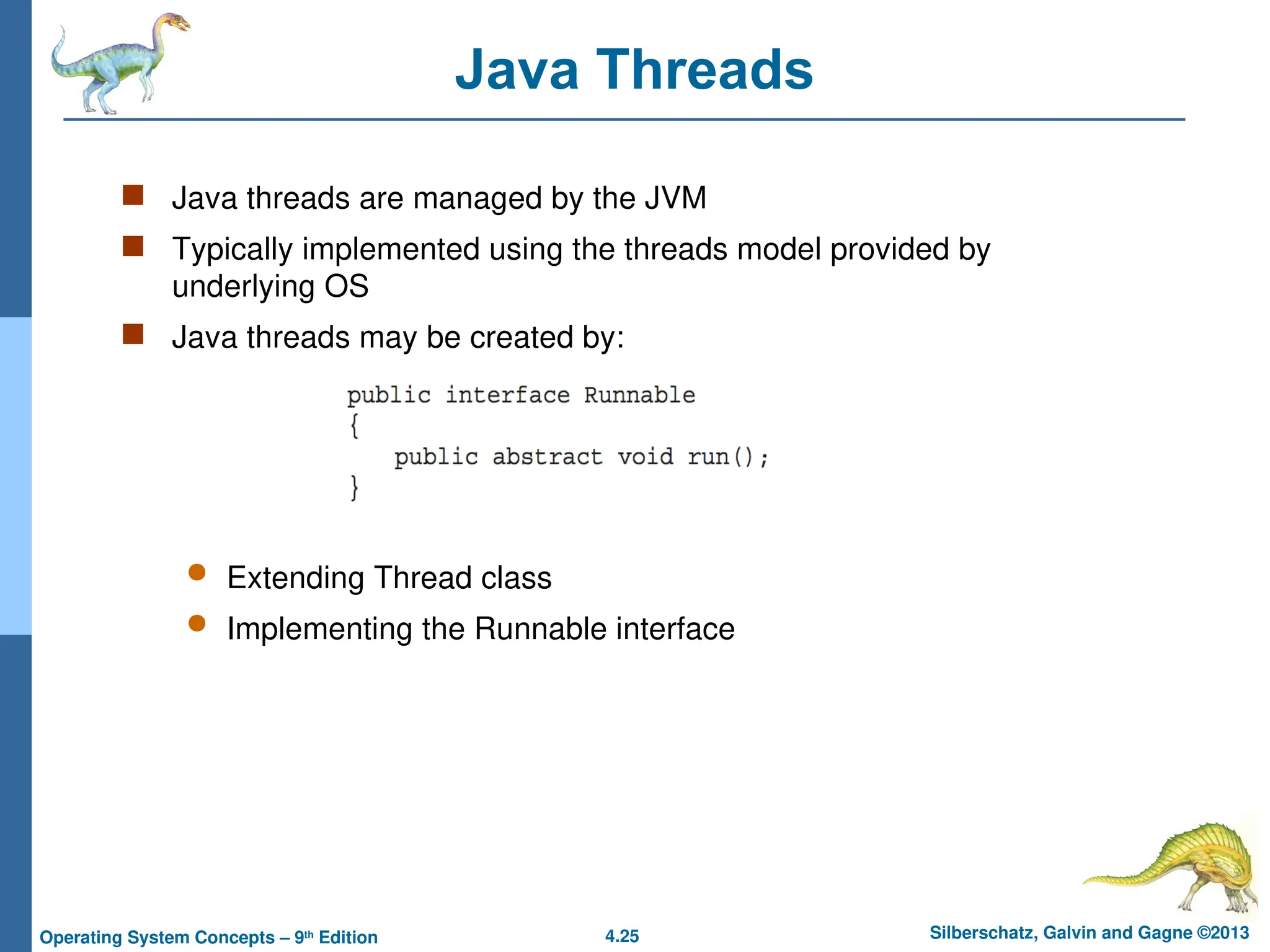

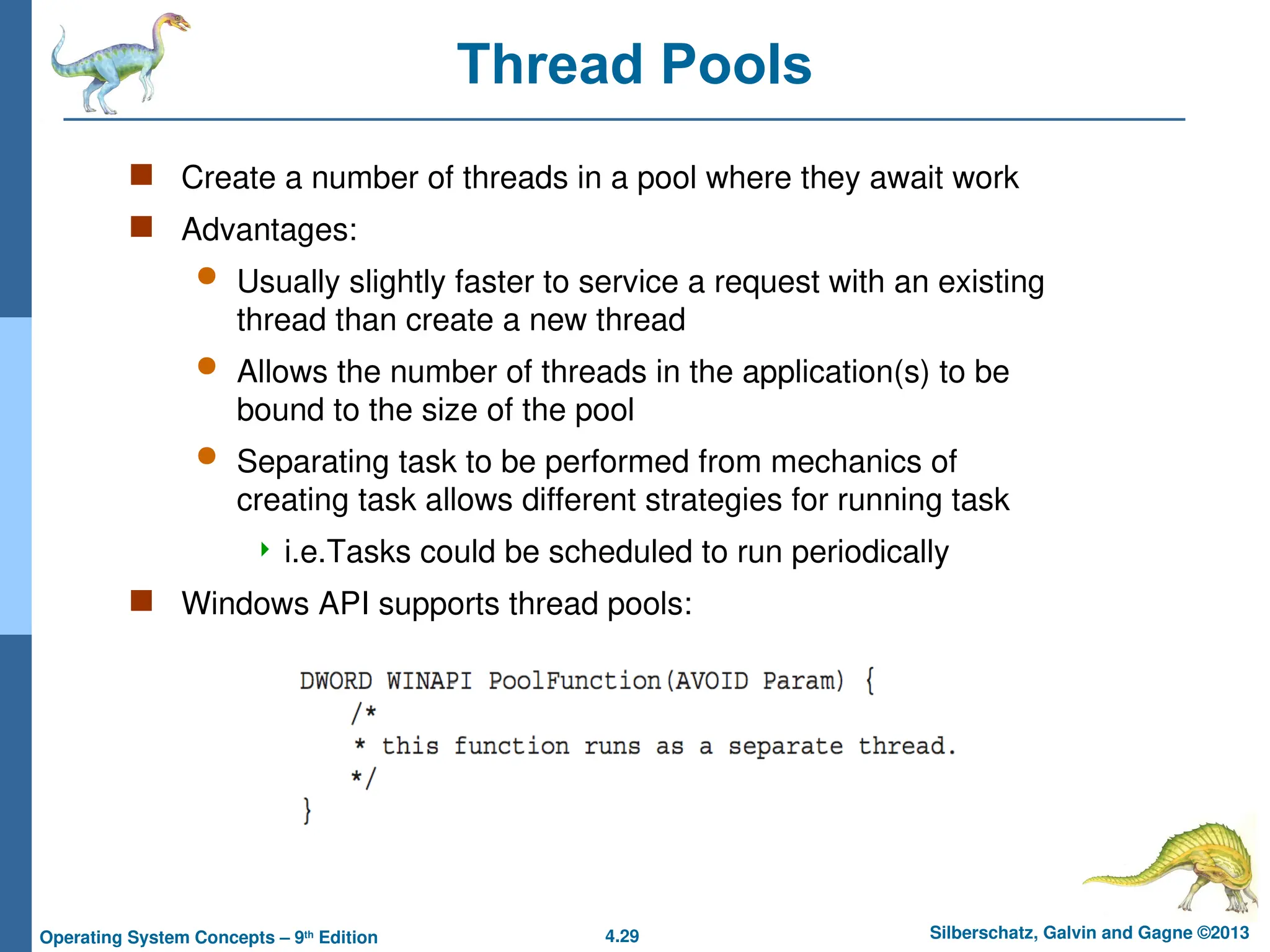

Chapter 4 of 'Operating System Concepts' discusses threads as fundamental units of CPU utilization, focusing on multithreading models, APIs for various thread libraries, and operating system support. It covers the benefits of multithreading, issues related to thread management, and methods for implicit threading. Additionally, it highlights different threading models, such as one-to-one and many-to-many, and explores user and kernel thread implementations across operating systems like Windows and Linux.

![4.30 Silberschatz, Galvin and Gagne ©2013

Operating System Concepts – 9th

Edition

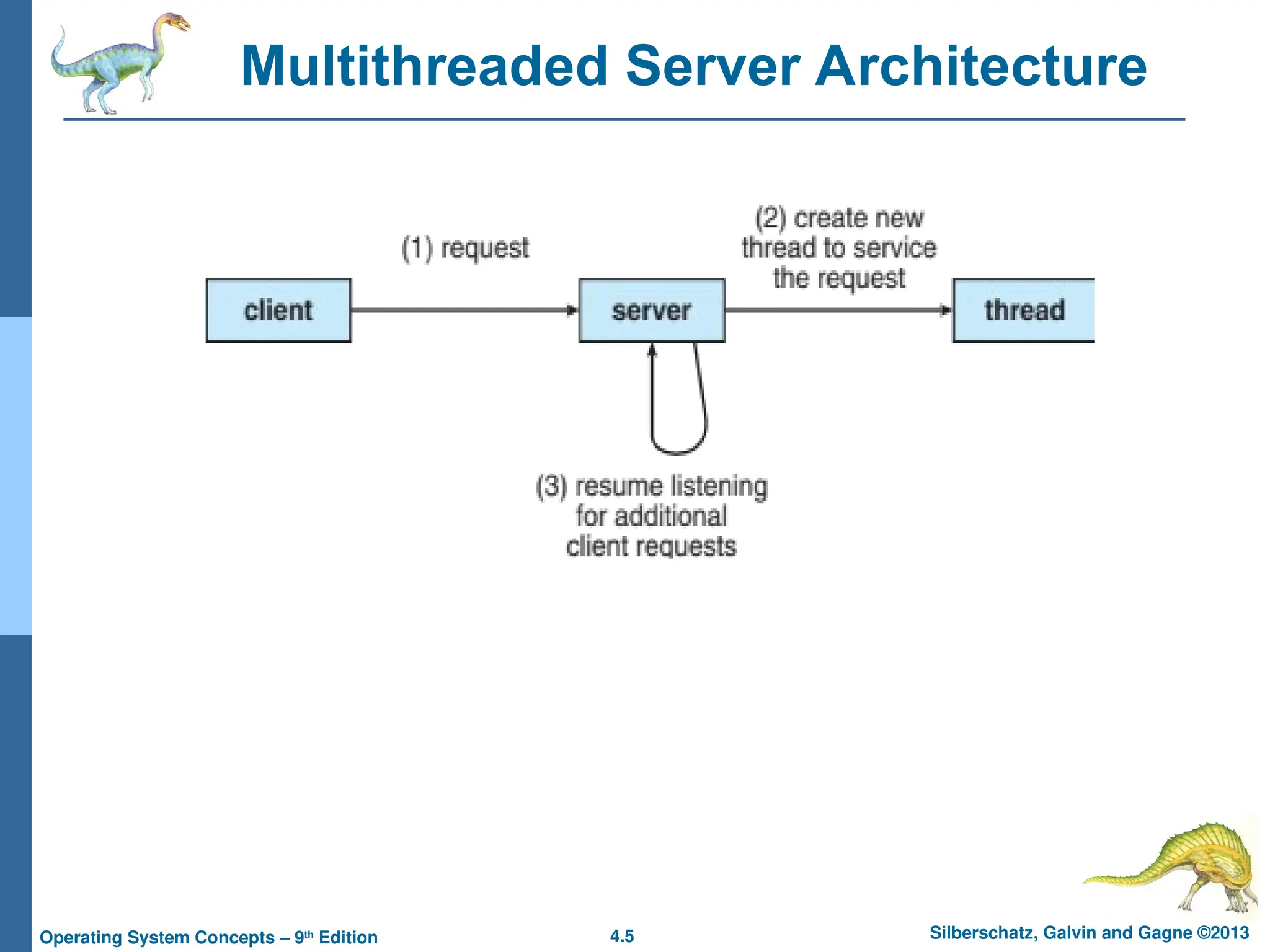

OpenMP

Set of compiler directives and an

API for C, C++, FORTRAN

Provides support for parallel

programming in shared-memory

environments

Identifies parallel regions – blocks

of code that can run in parallel

#pragma omp parallel

Create as many threads as there are

cores

#pragma omp parallel for

for(i=0;i<N;i++) {

c[i] = a[i] + b[i];

}

Run for loop in parallel](https://image.slidesharecdn.com/ch4-241216103928-027a6748/75/chapter-4-introduction-to-powerpoint-ppt-30-2048.jpg)