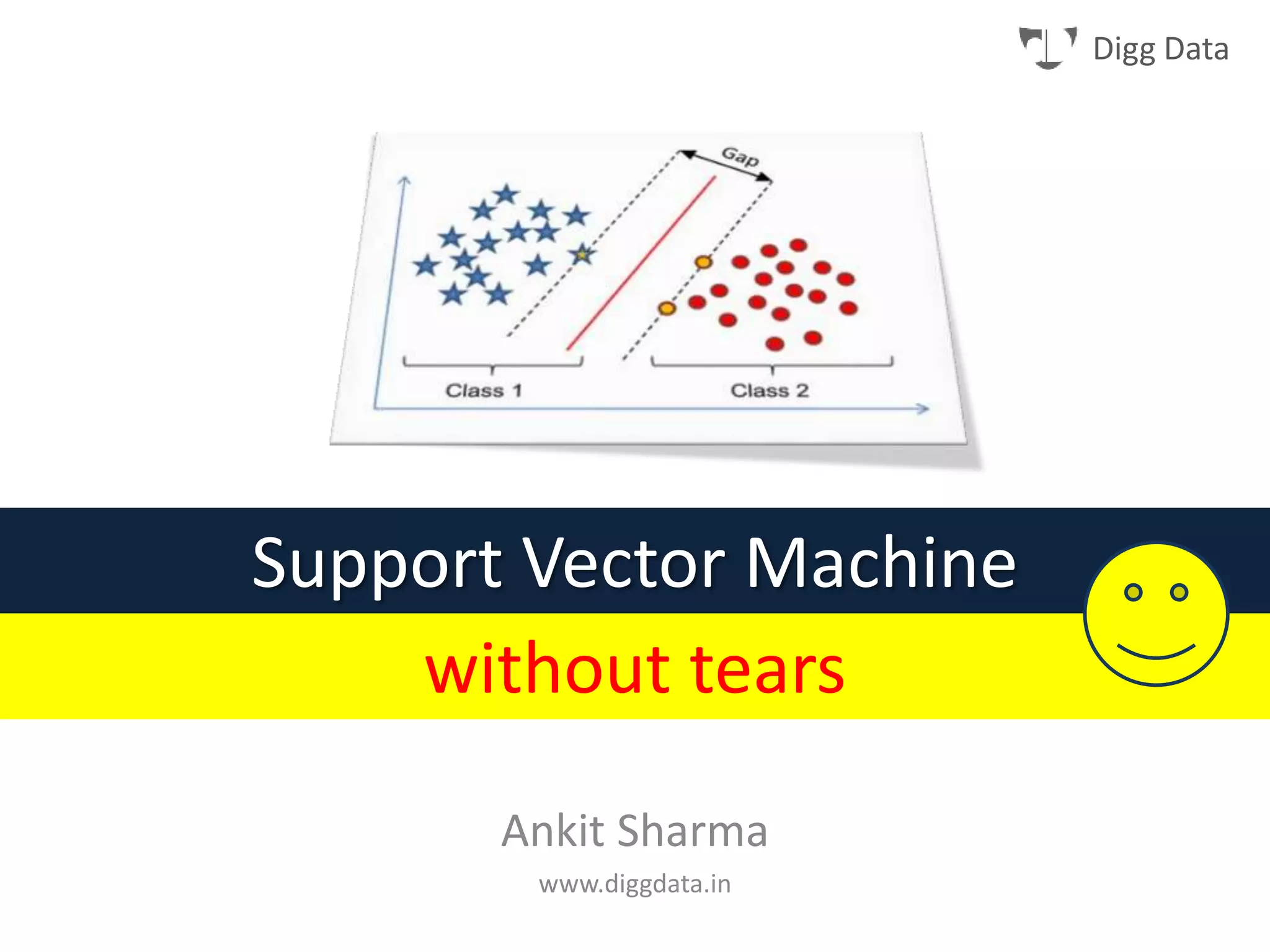

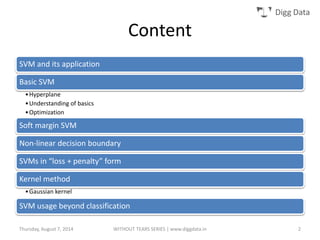

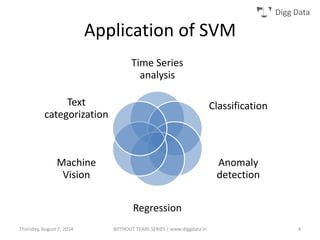

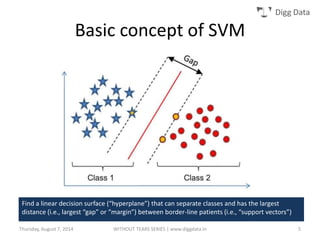

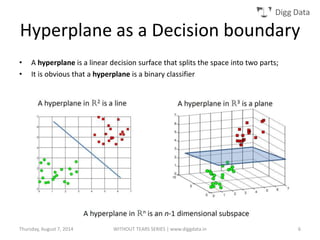

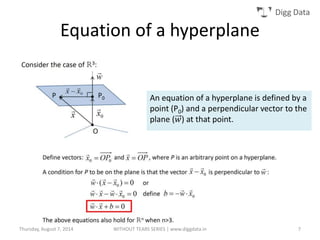

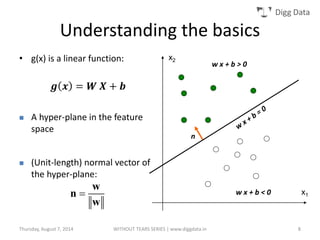

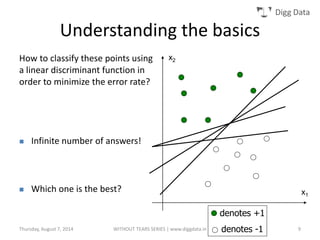

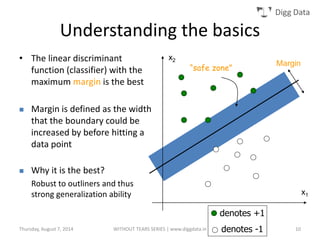

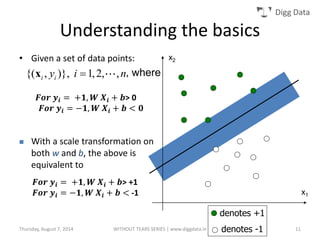

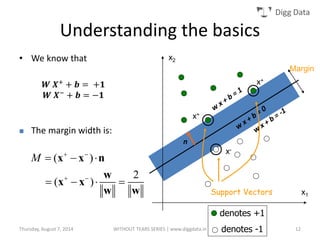

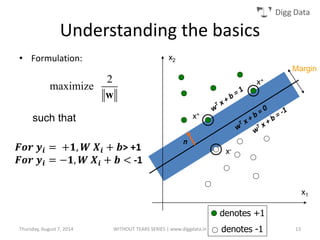

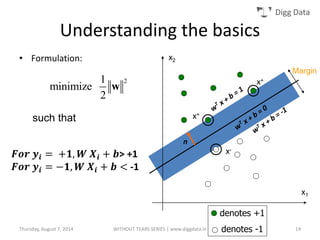

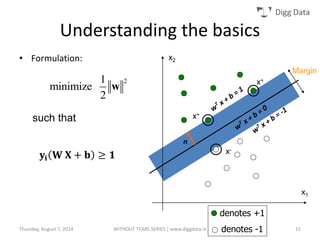

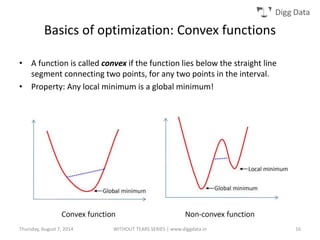

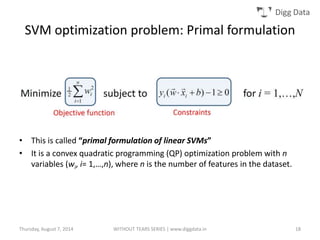

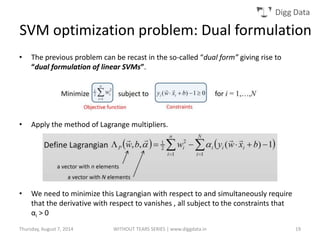

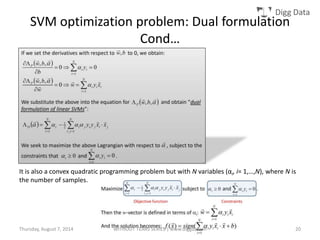

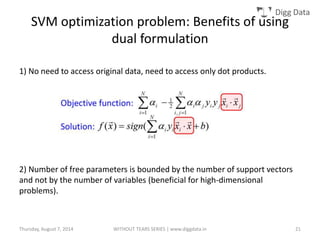

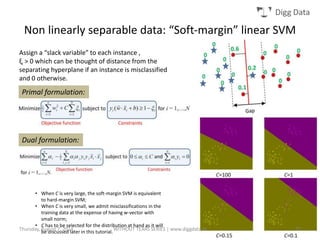

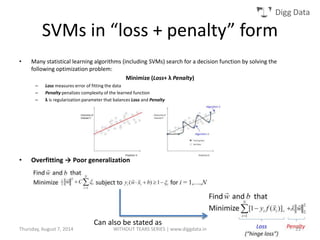

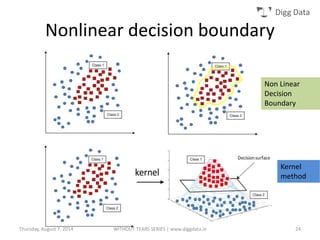

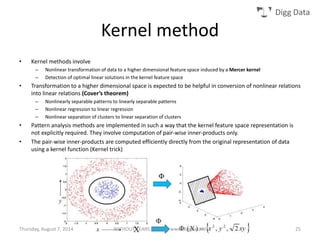

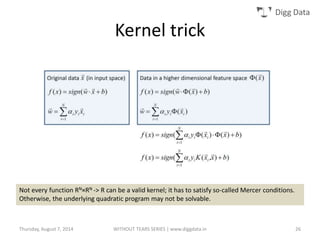

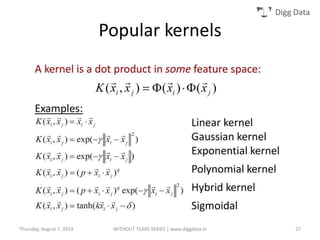

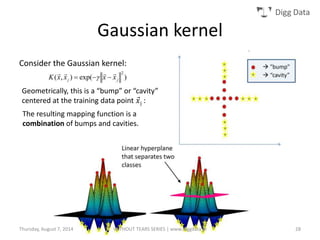

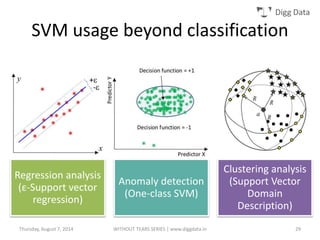

This document gives an overview of support vector machines (SVMs): their basic concepts, formulations, and applications. SVMs are supervised learning models used for classification and regression. The document covers key SVM properties, the search for an optimal (maximum-margin) separating hyperplane, soft-margin SVMs, dual formulations, kernel methods, and uses of SVMs beyond binary classification, such as regression, anomaly detection, and clustering.
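
To make the soft-margin idea concrete, here is a minimal sketch of a linear soft-margin SVM in plain Python. It is not taken from the slides. The names `train_linear_svm` and `predict`, the toy data, and the hyperparameter values are all assumptions made for illustration. The sketch minimizes the primal objective 0.5·‖w‖² + C·Σ max(0, 1 − yᵢ(w·xᵢ + b)) by plain subgradient descent, a simple stand-in for the quadratic-programming or dual solvers usually used in practice.

```python
def train_linear_svm(points, labels, C=1.0, lr=0.01, epochs=500):
    """Subgradient descent on 0.5*||w||^2 + C * sum(max(0, 1 - y*(w.x + b))).

    Illustrative only: hyperparameter defaults are arbitrary assumptions.
    """
    dim = len(points[0])
    w = [0.0] * dim
    b = 0.0
    for _ in range(epochs):
        # The gradient of the regulariser 0.5*||w||^2 is w itself.
        grad_w = list(w)
        grad_b = 0.0
        for x, y in zip(points, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:
                # Point lies inside the margin or is misclassified:
                # the hinge loss contributes -C * y * x (and -C * y for b).
                for j in range(dim):
                    grad_w[j] -= C * y * x[j]
                grad_b -= C * y
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Classify x by the side of the hyperplane w.x + b = 0 it falls on."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


# Toy, linearly separable data invented for this example.
points = [(2.0, 2.0), (3.0, 3.0), (2.5, 3.5),
          (-2.0, -2.0), (-3.0, -1.5), (-2.5, -3.0)]
labels = [1, 1, 1, -1, -1, -1]

w, b = train_linear_svm(points, labels)
predictions = [predict(w, b, x) for x in points]
print(predictions == labels)
```

The parameter `C` controls the trade-off the soft margin introduces: a larger `C` penalizes margin violations more heavily, while a smaller `C` favors a wider margin at the cost of more violations. A kernel SVM would instead work with the dual formulation, where the data appear only through inner products.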