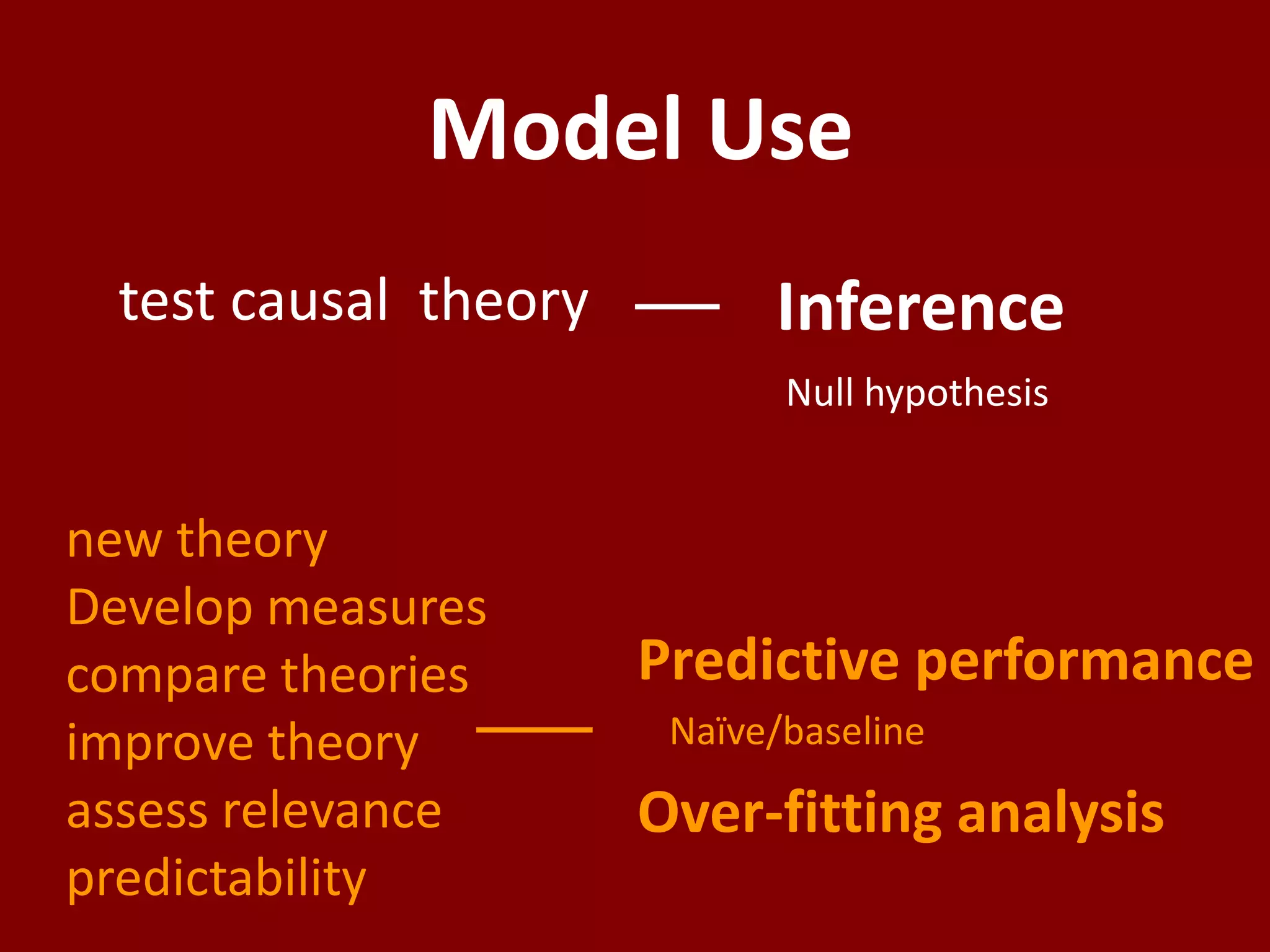

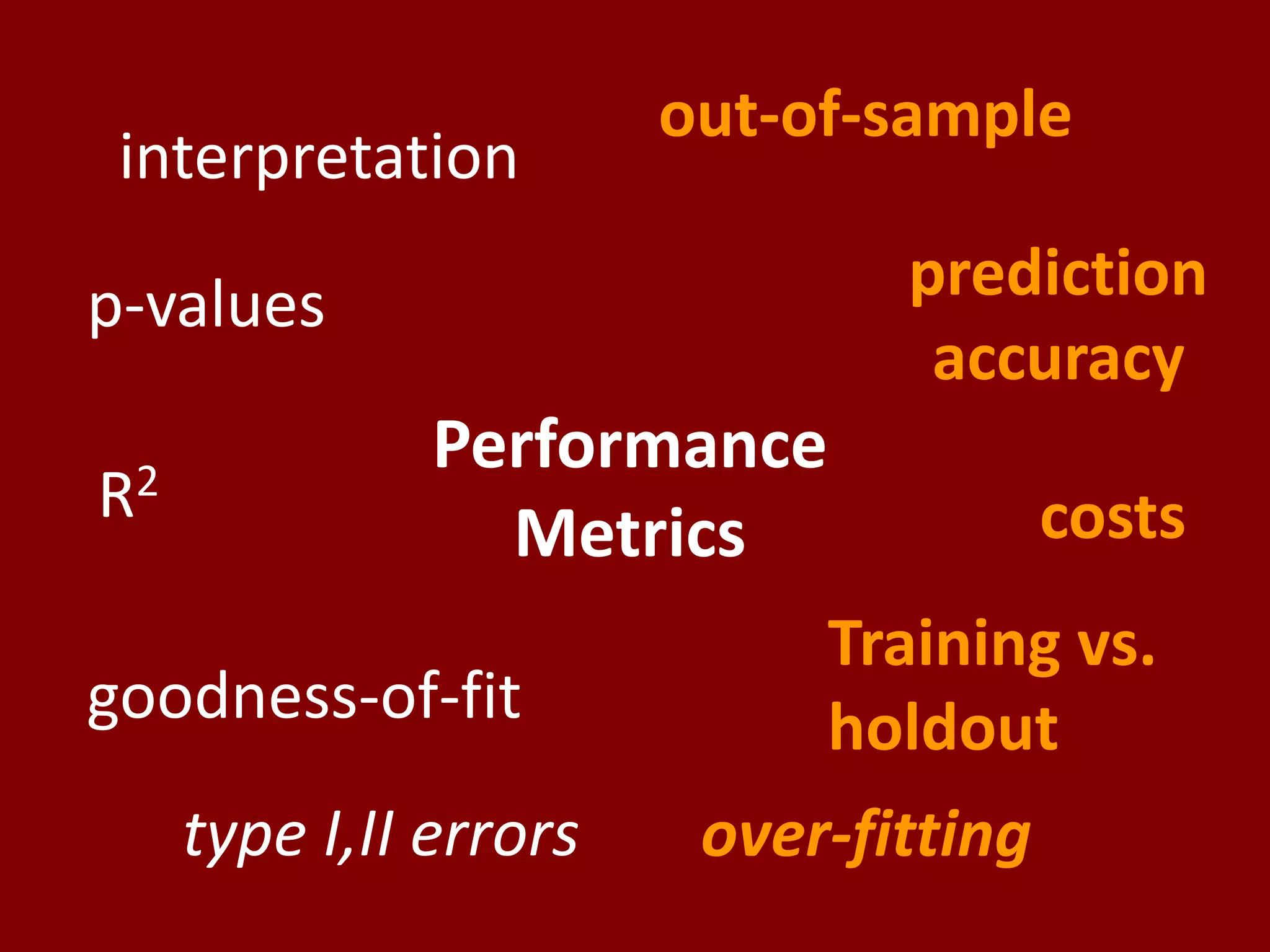

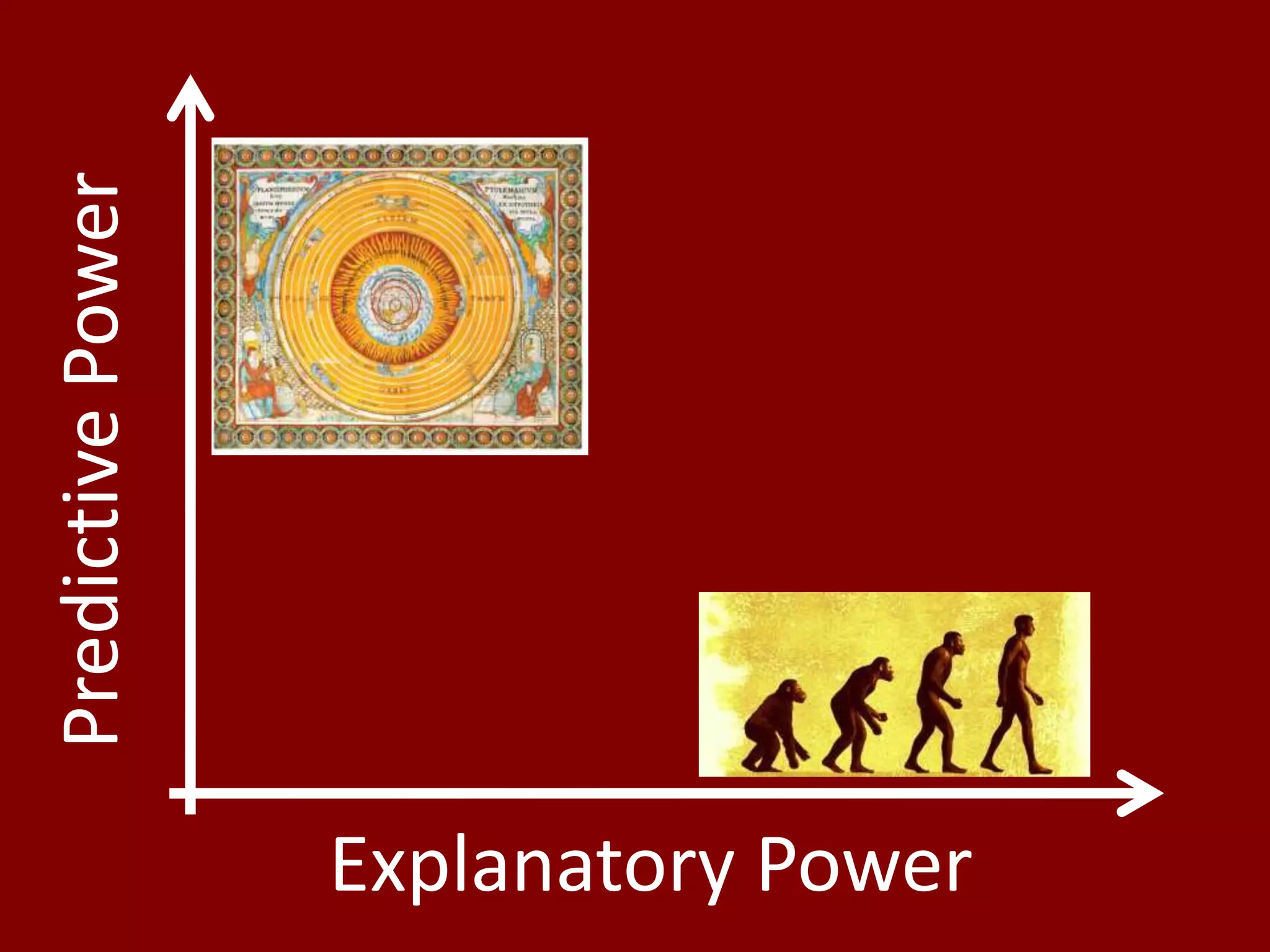

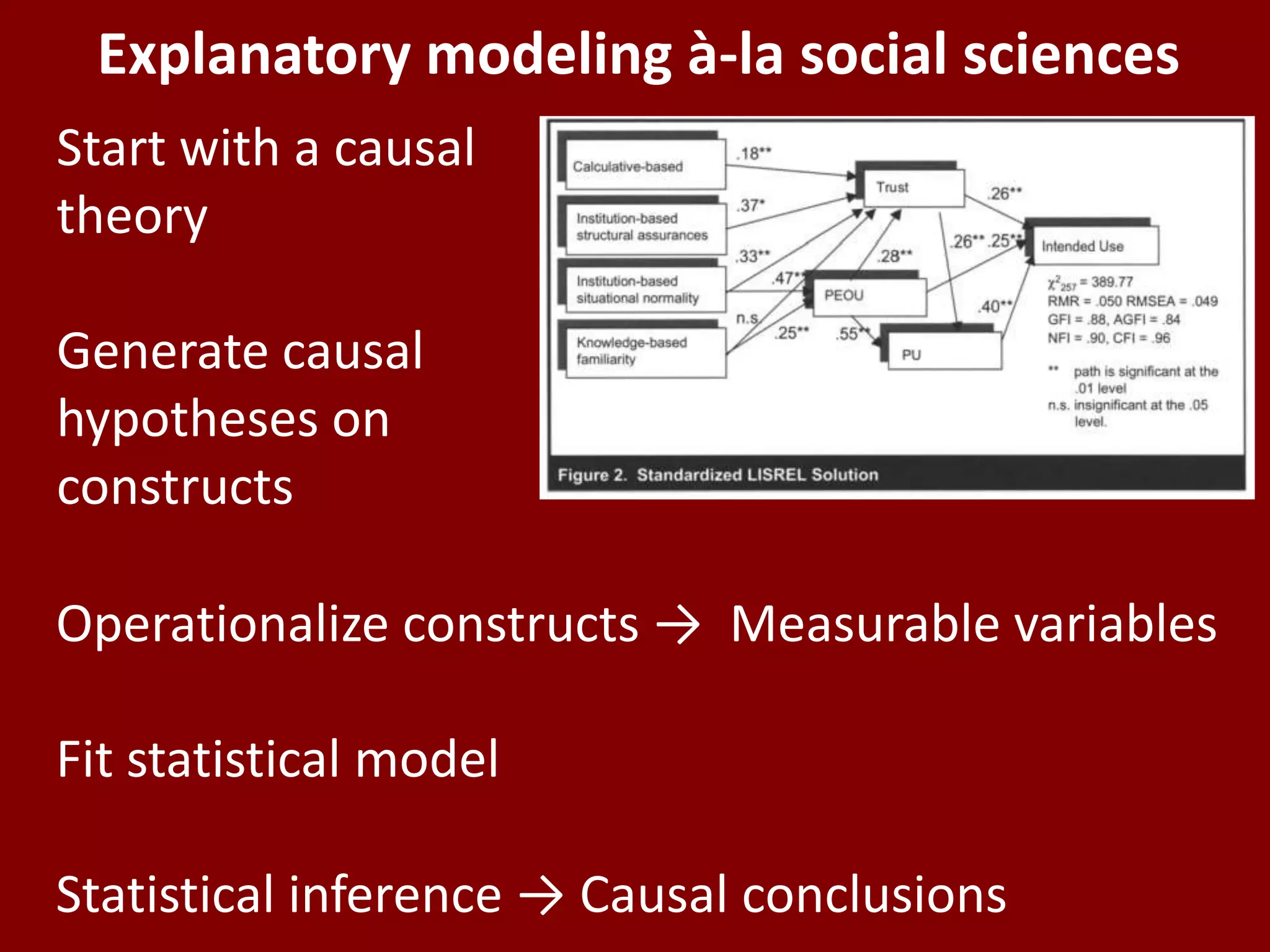

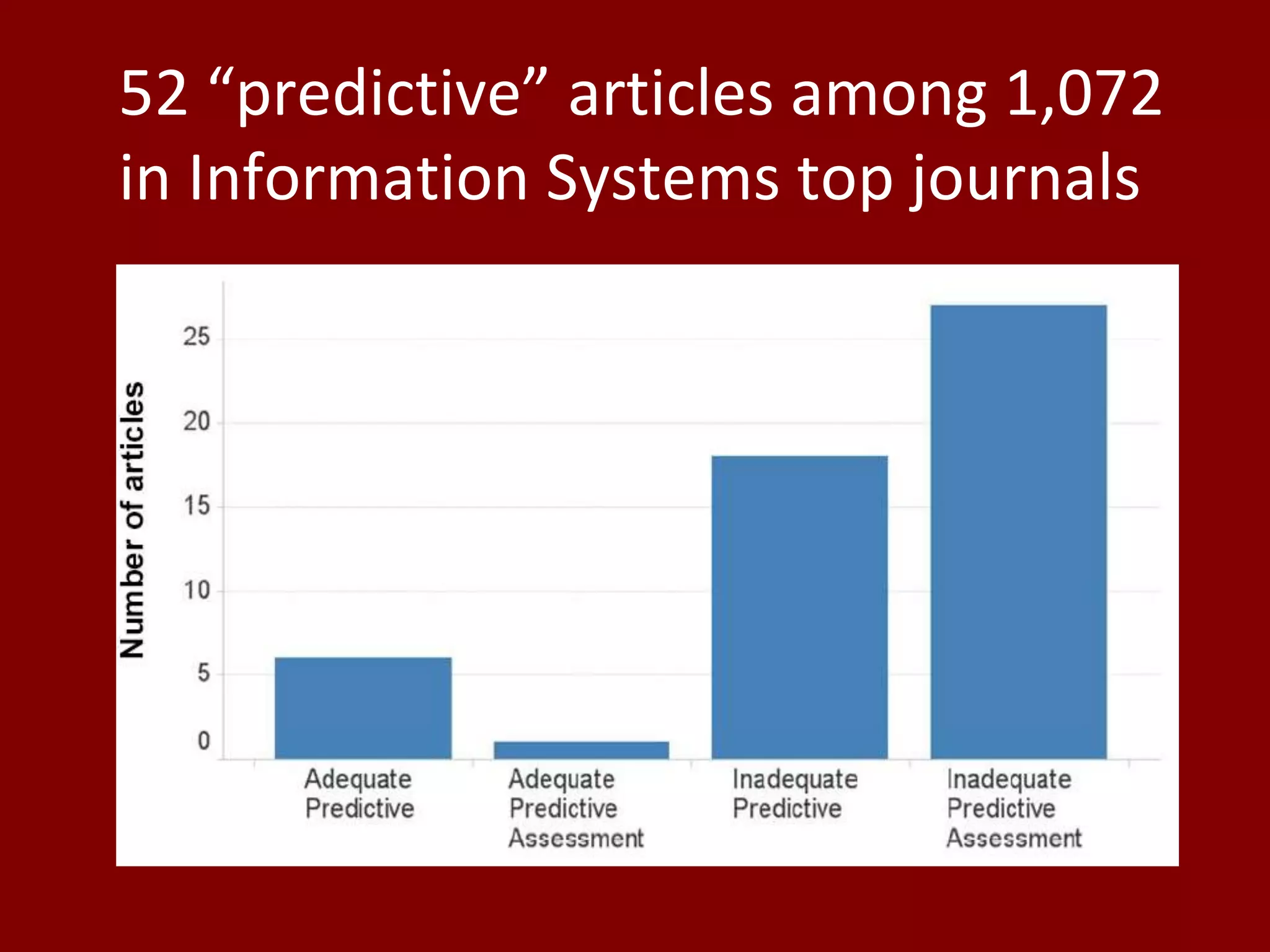

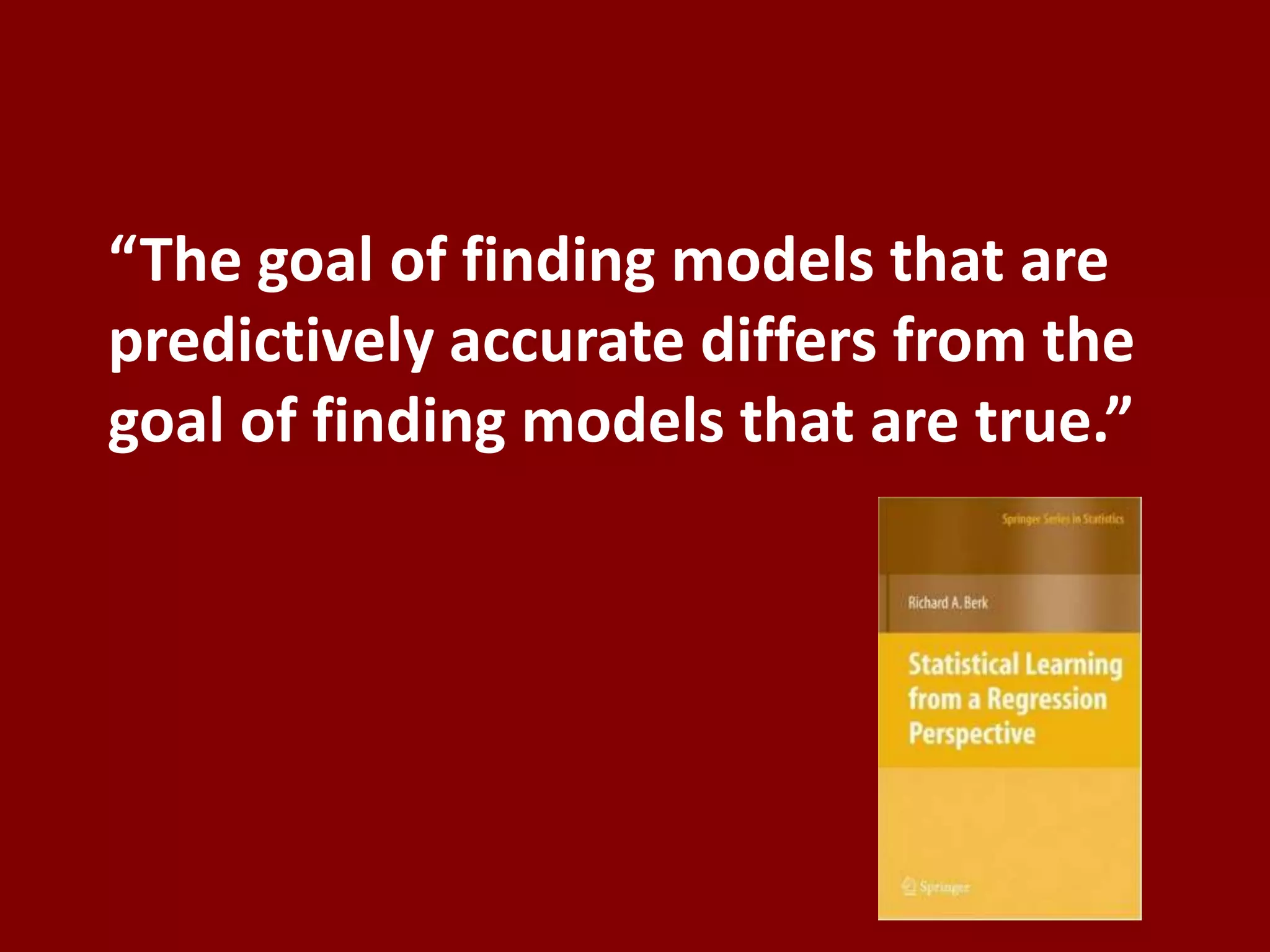

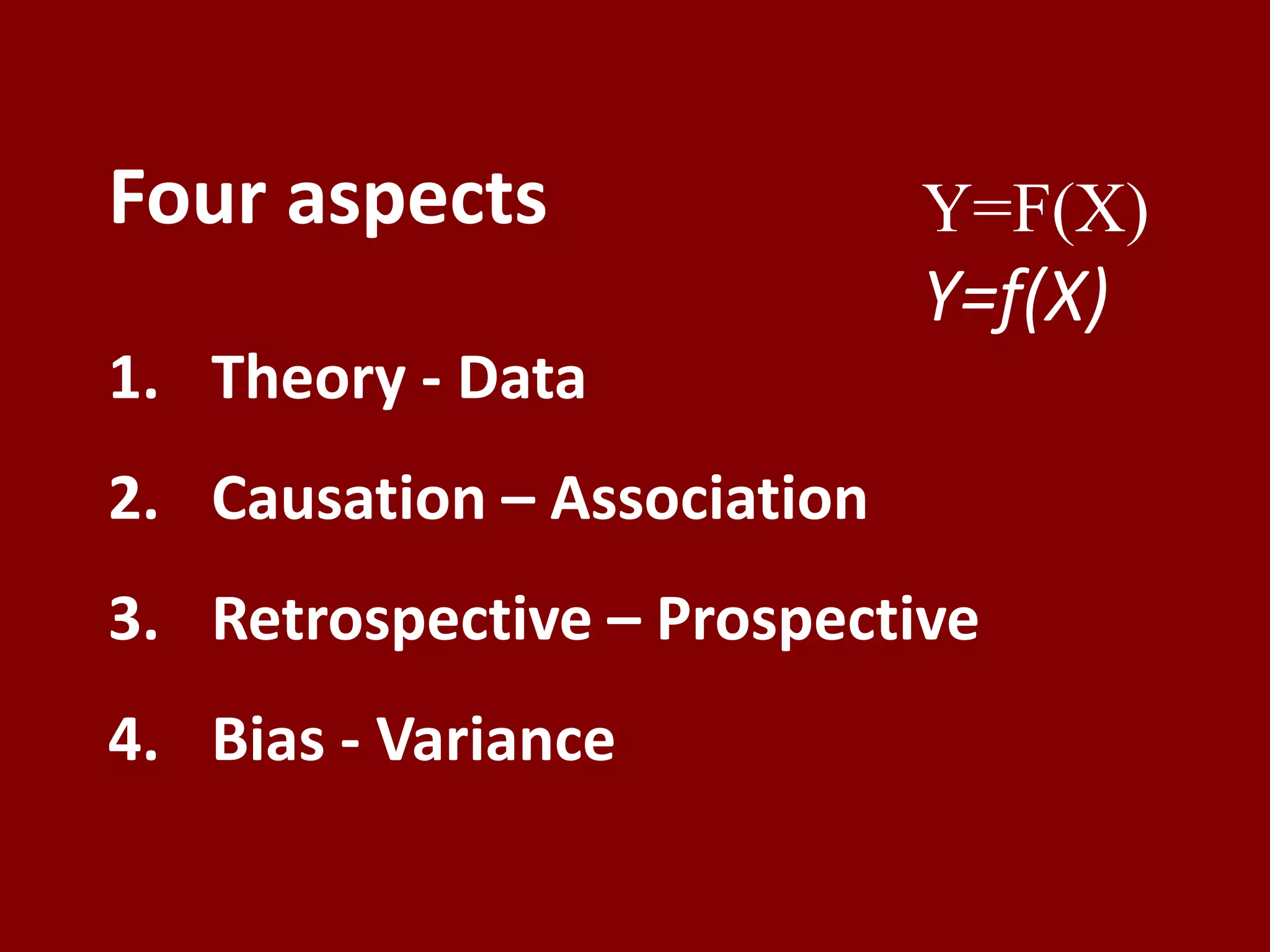

The document distinguishes explanatory from predictive modeling in the social sciences: explanatory modeling is theory-driven and aims at understanding, while predictive modeling is data-driven and aims at forecasting. Because these goals differ, they call for different approaches and methodologies, and the document argues that predictive techniques should be better integrated into scientific research. It also treats predictive power as an important criterion for evaluating scientific models, suggesting that a good explanatory model should also predict outcomes accurately.
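As a rough illustration of the distinction, the Python sketch below fits a single linear model and evaluates it in two ways: by in-sample fit (R², a measure often reported when a model is used for explanation) and by error on held-out observations (one common way to gauge predictive power). The synthetic data, the 70/30 split, and all function names are assumptions made for this example; none of them come from the document.

```python
import random


def fit_ols(xs, ys):
    """Closed-form simple linear regression; returns (intercept, slope)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def r_squared(xs, ys, intercept, slope):
    """Share of variance in ys accounted for by the fitted line."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


def rmse(xs, ys, intercept, slope):
    """Root mean squared error of the line's predictions on (xs, ys)."""
    sq_err = [(y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys)]
    return (sum(sq_err) / len(sq_err)) ** 0.5


# Synthetic data (assumption): y = 2 + 0.5 * x + Gaussian noise.
random.seed(0)
x_all = [random.uniform(0, 10) for _ in range(200)]
y_all = [2.0 + 0.5 * x + random.gauss(0, 1) for x in x_all]

# Hold out the last 30% of observations; the model never sees them.
split = int(0.7 * len(x_all))
x_train, y_train = x_all[:split], y_all[:split]
x_test, y_test = x_all[split:], y_all[split:]

intercept, slope = fit_ols(x_train, y_train)

# Explanatory-style assessment: how well the model fits the data it was built on.
r2_train = r_squared(x_train, y_train, intercept, slope)

# Predictive-style assessment: how far off the model is on unseen observations.
rmse_test = rmse(x_test, y_test, intercept, slope)

print(f"slope estimate:       {slope:.3f}")
print(f"in-sample R^2:        {r2_train:.3f}")
print(f"held-out RMSE:        {rmse_test:.3f}")
```

The two numbers answer different questions, so a model can score well on one and poorly on the other. That is why the sketch reports them separately rather than folding them into a single score.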

![Predict ≠ Explain](https://image.slidesharecdn.com/toexplainortopredicttaujuly2012-120709151249-phpapp01/75/To-explain-or-to-predict-21-2048.jpg)

> “we tried to benefit from an extensive set of attributes describing each of the movies in the dataset. Those attributes certainly carry a significant signal and can explain some of the user behavior. However… they could not help at all for improving the [predictive] accuracy.”
>
> Bell et al., 2008