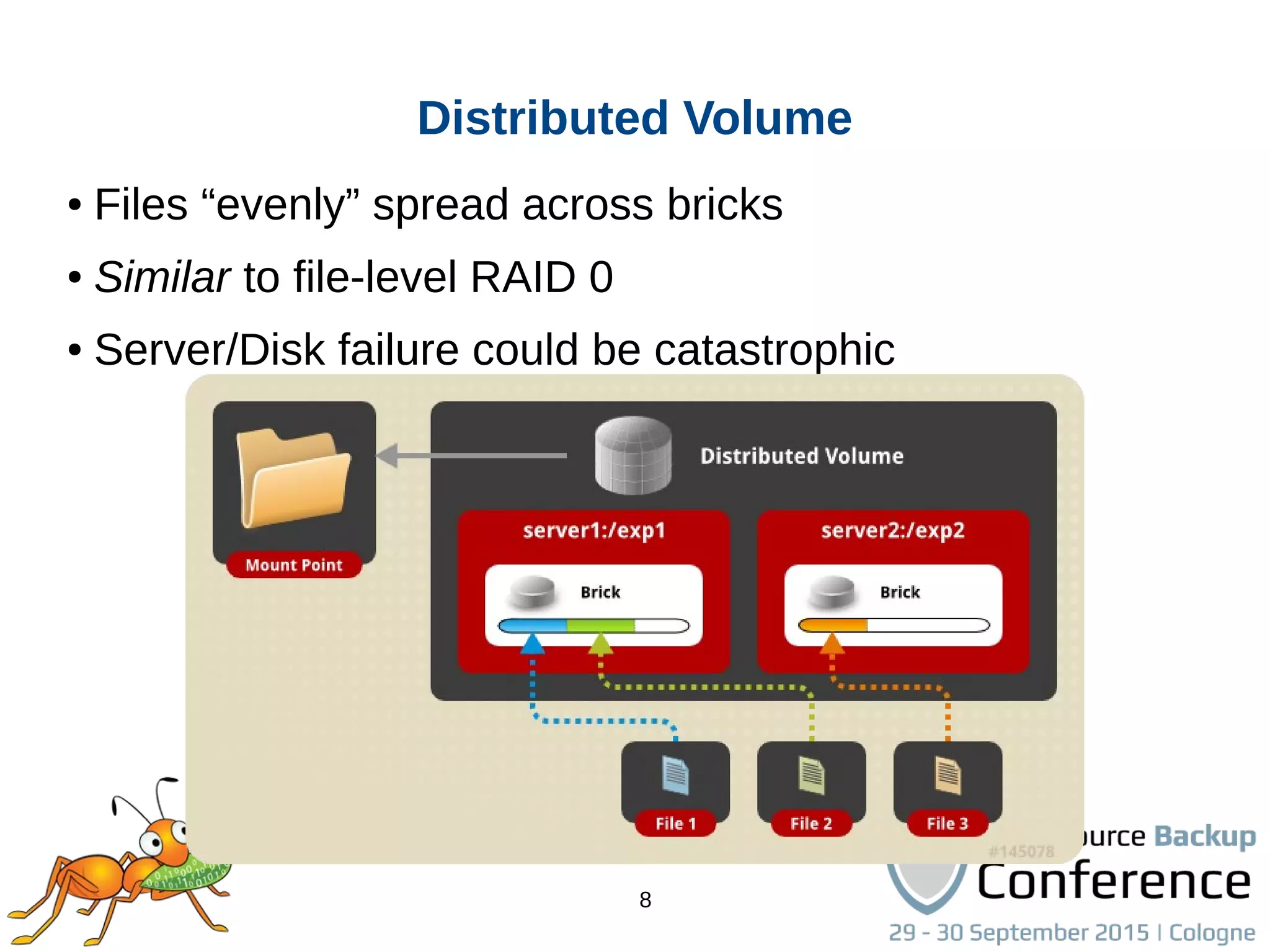

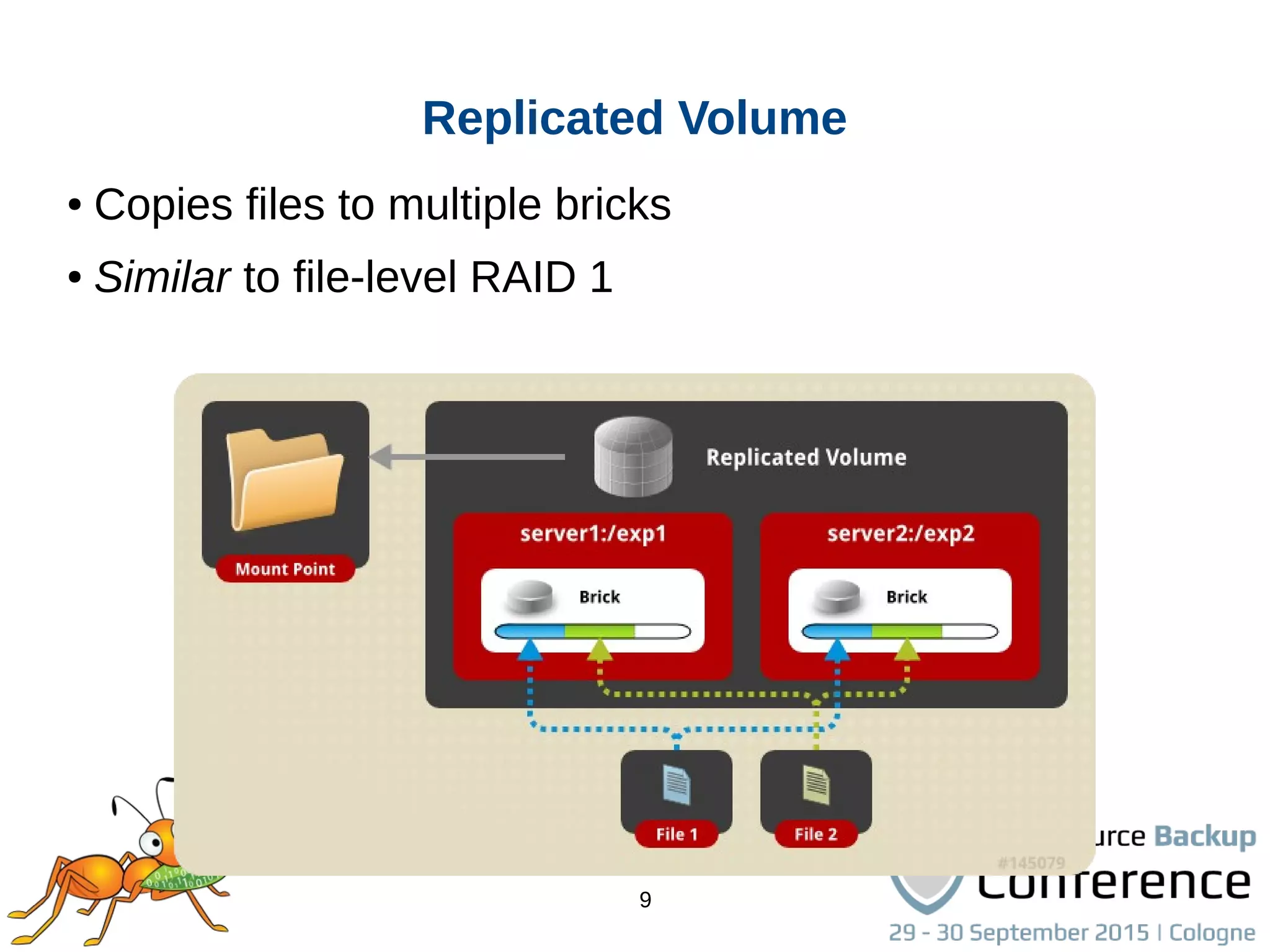

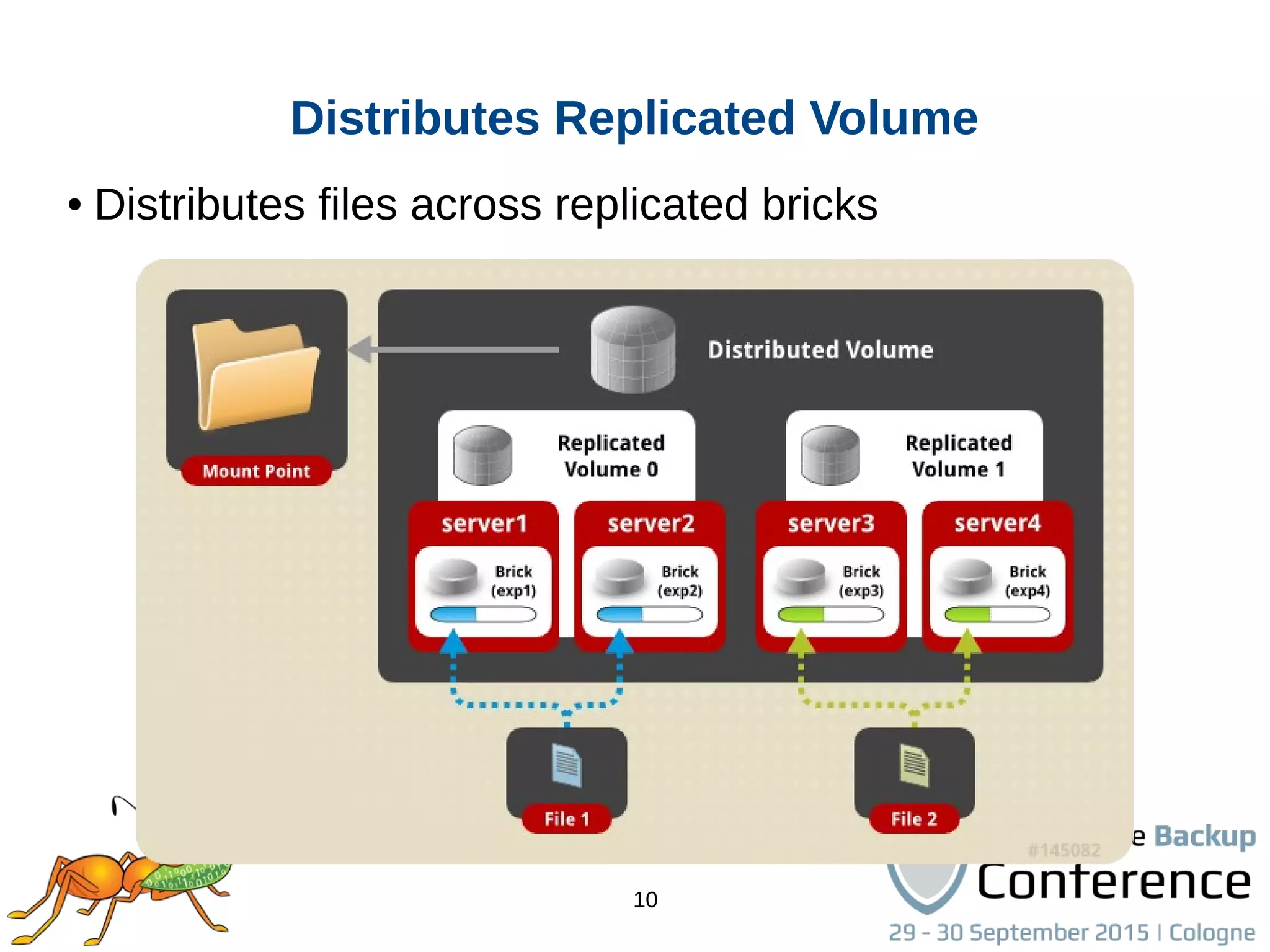

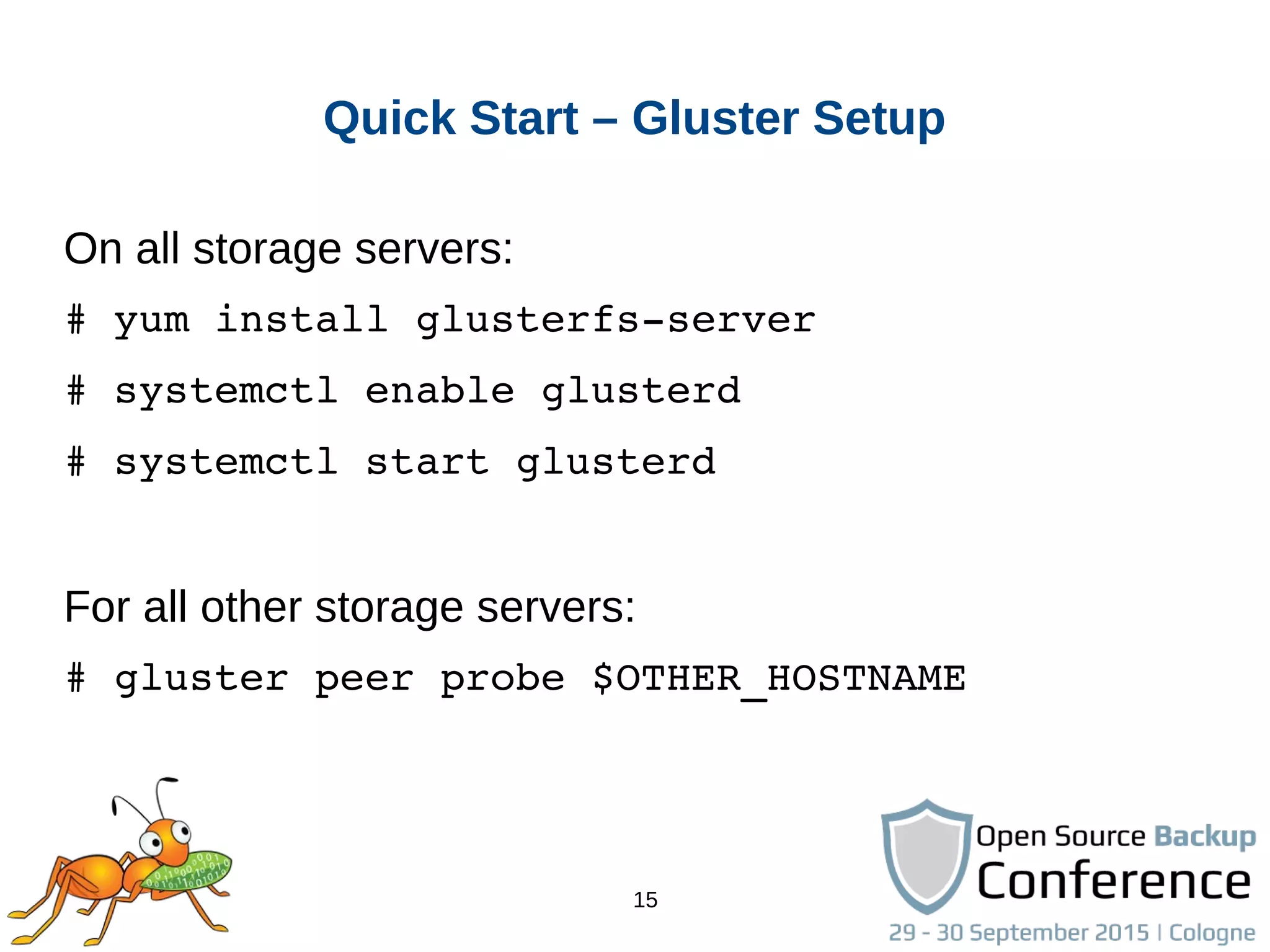

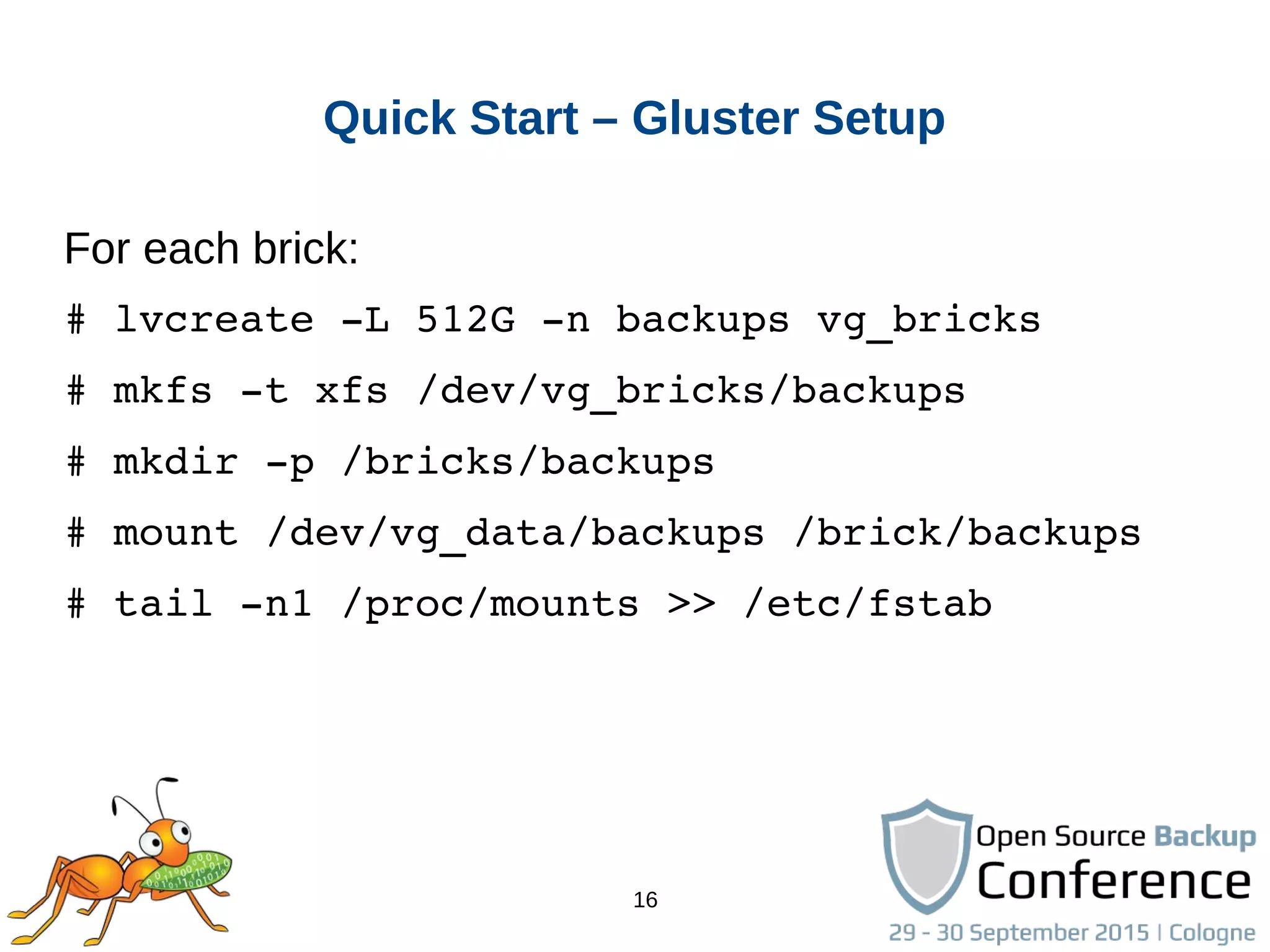

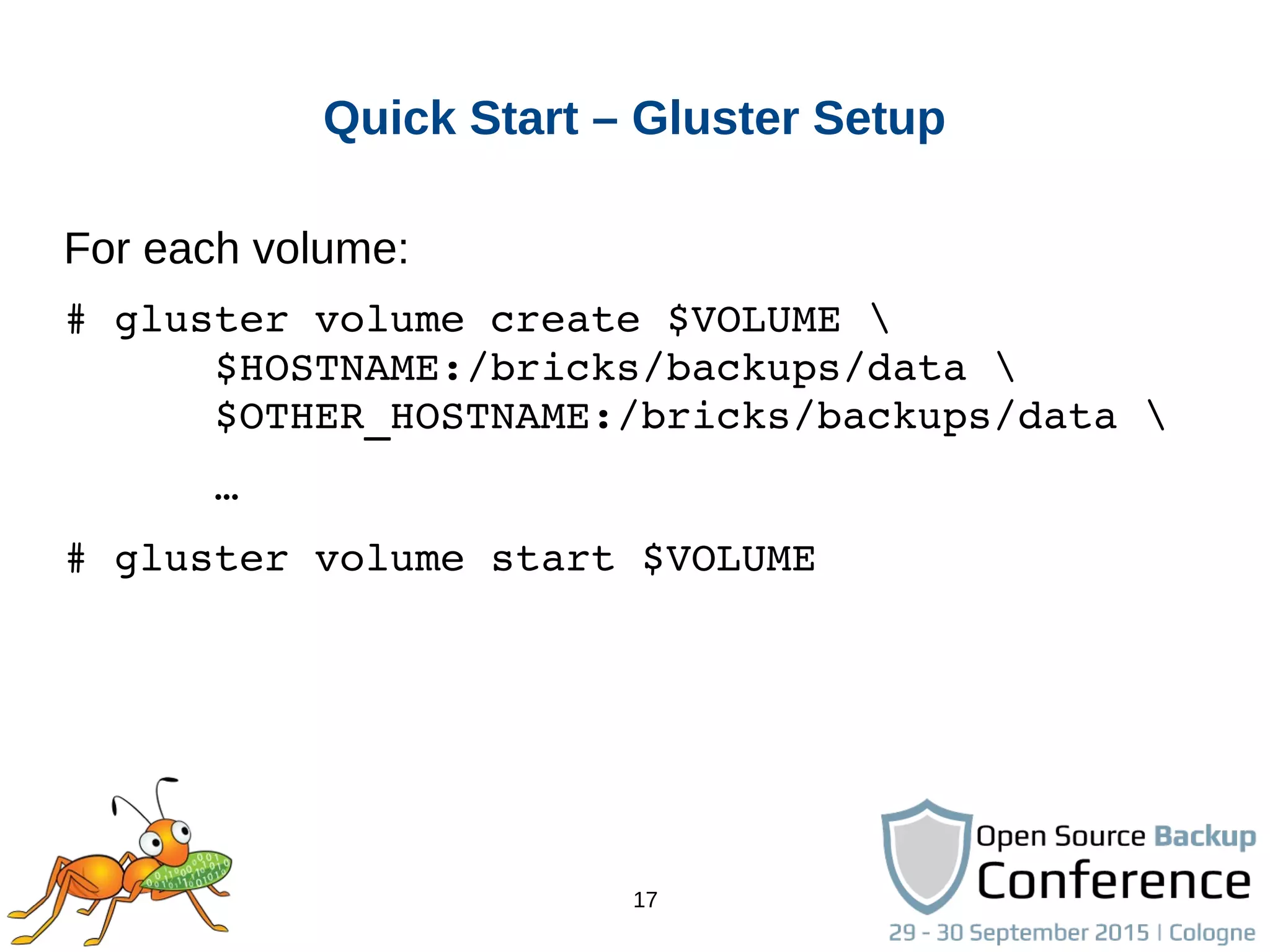

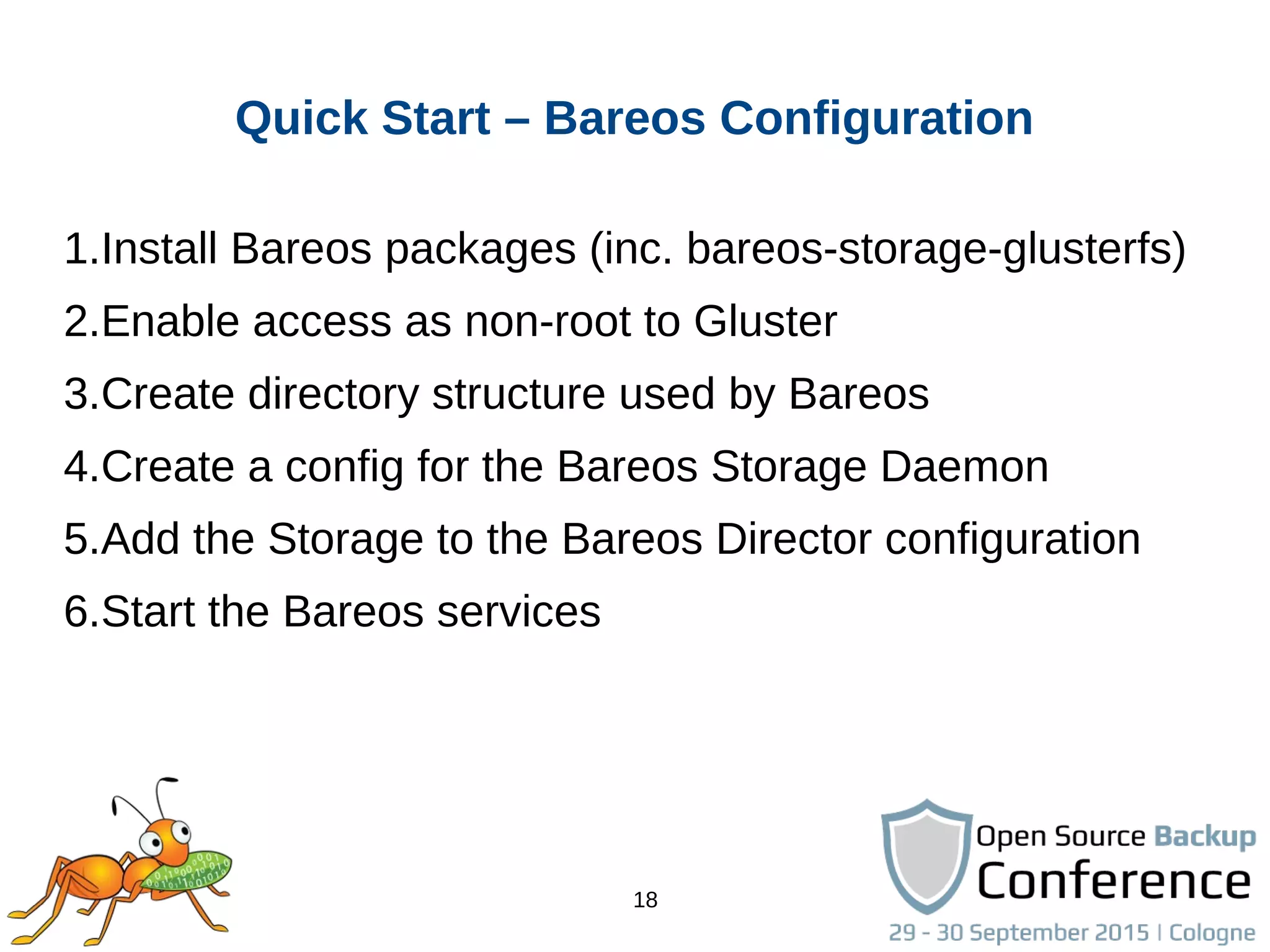

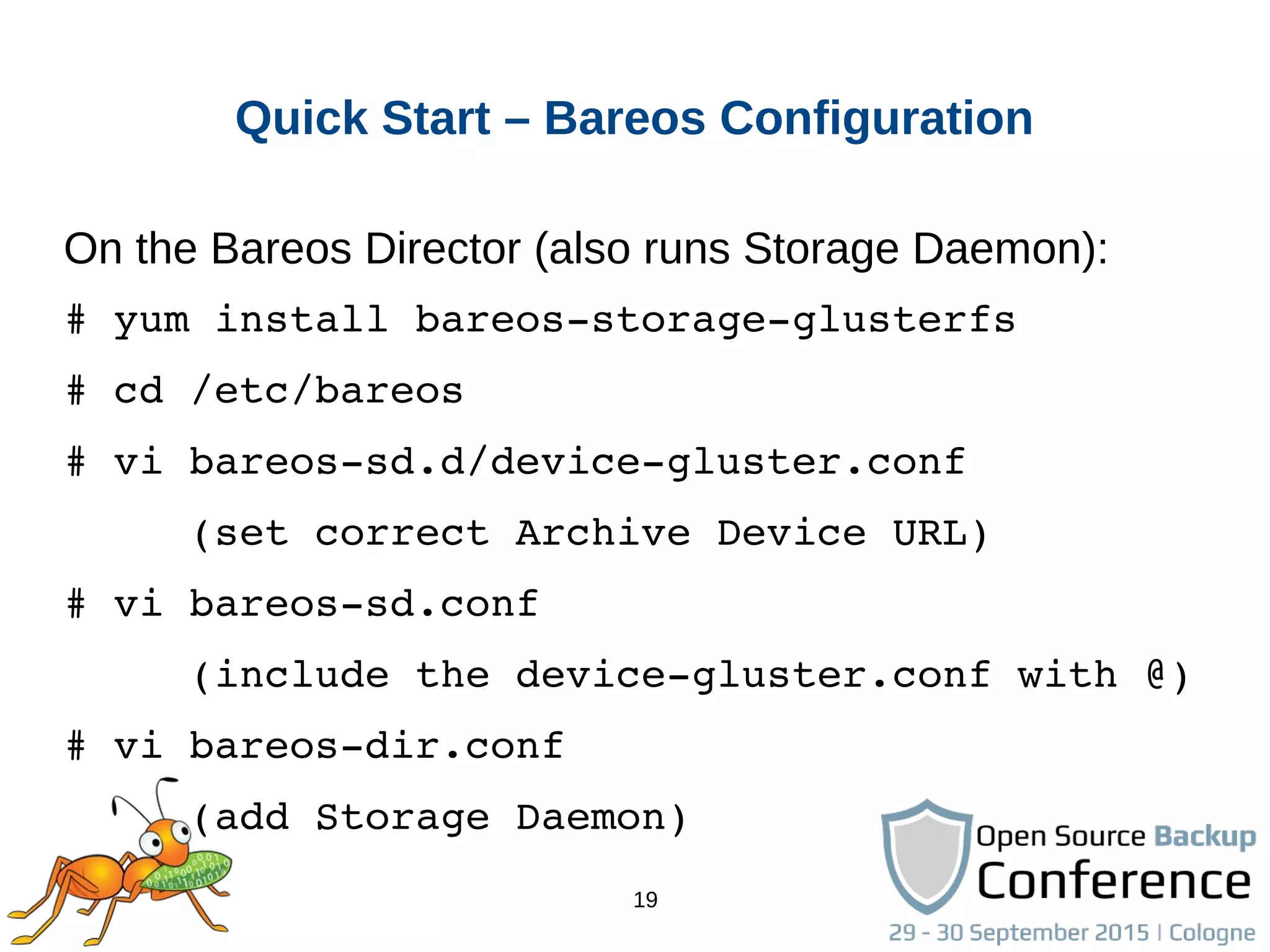

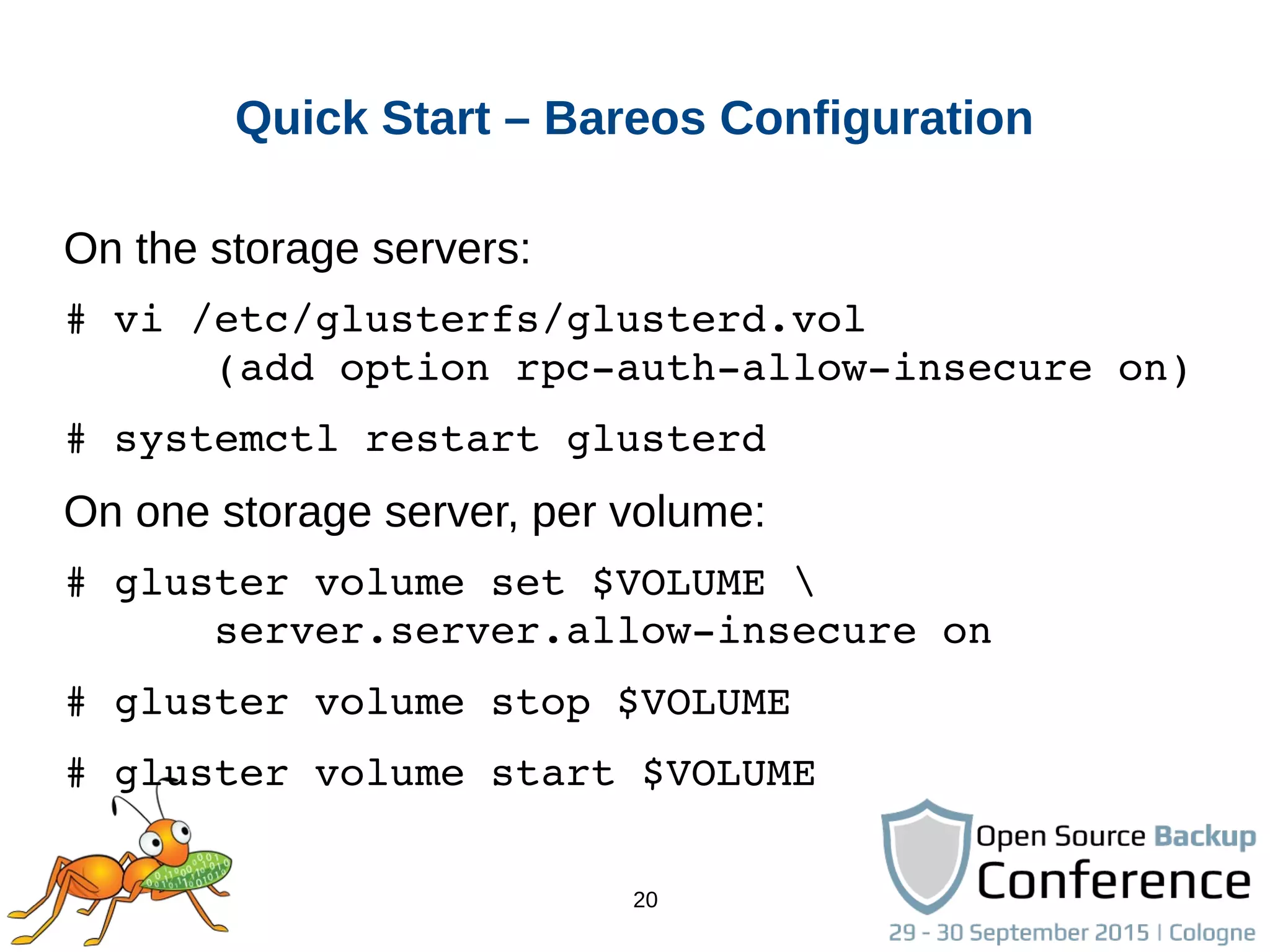

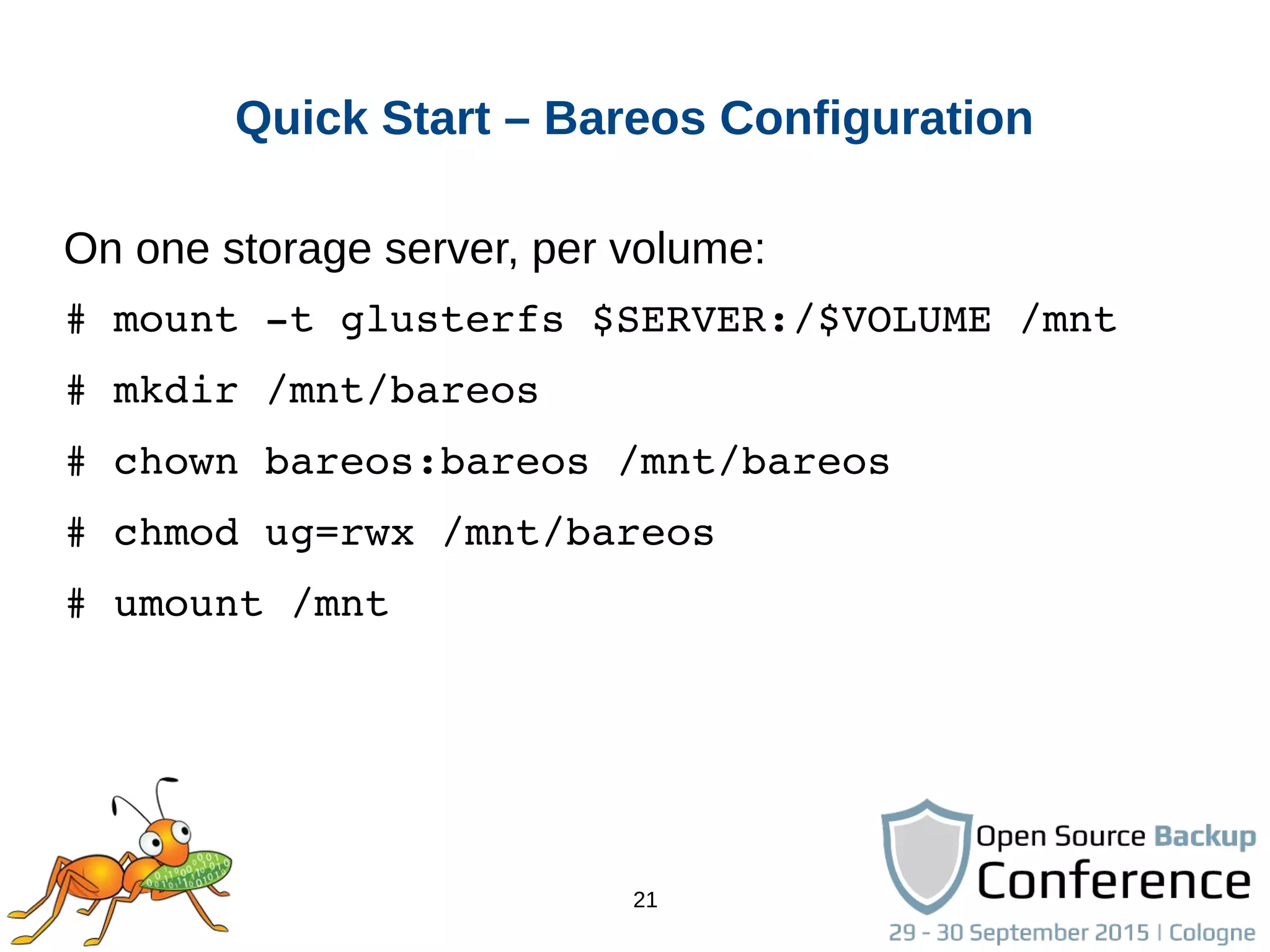

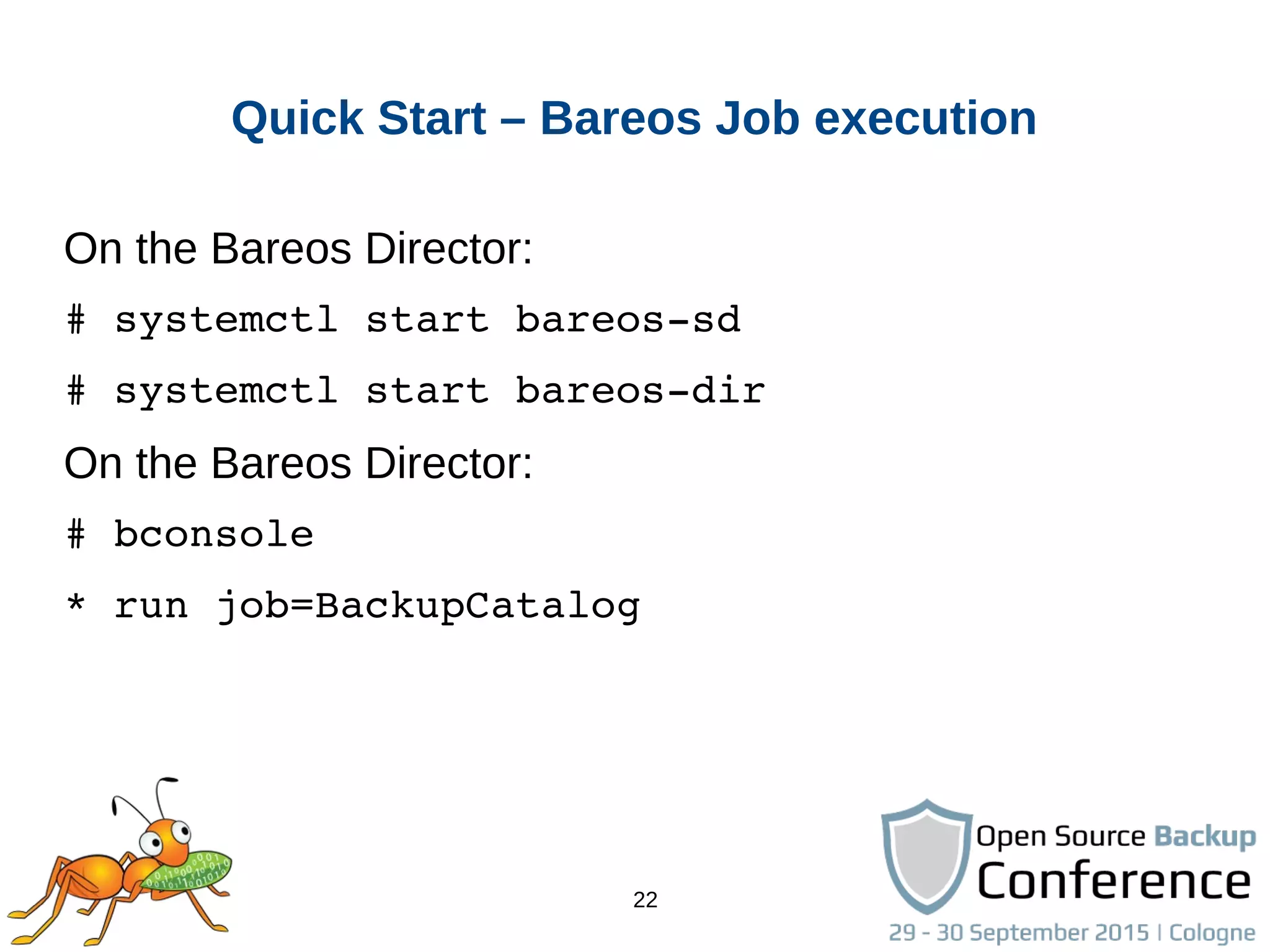

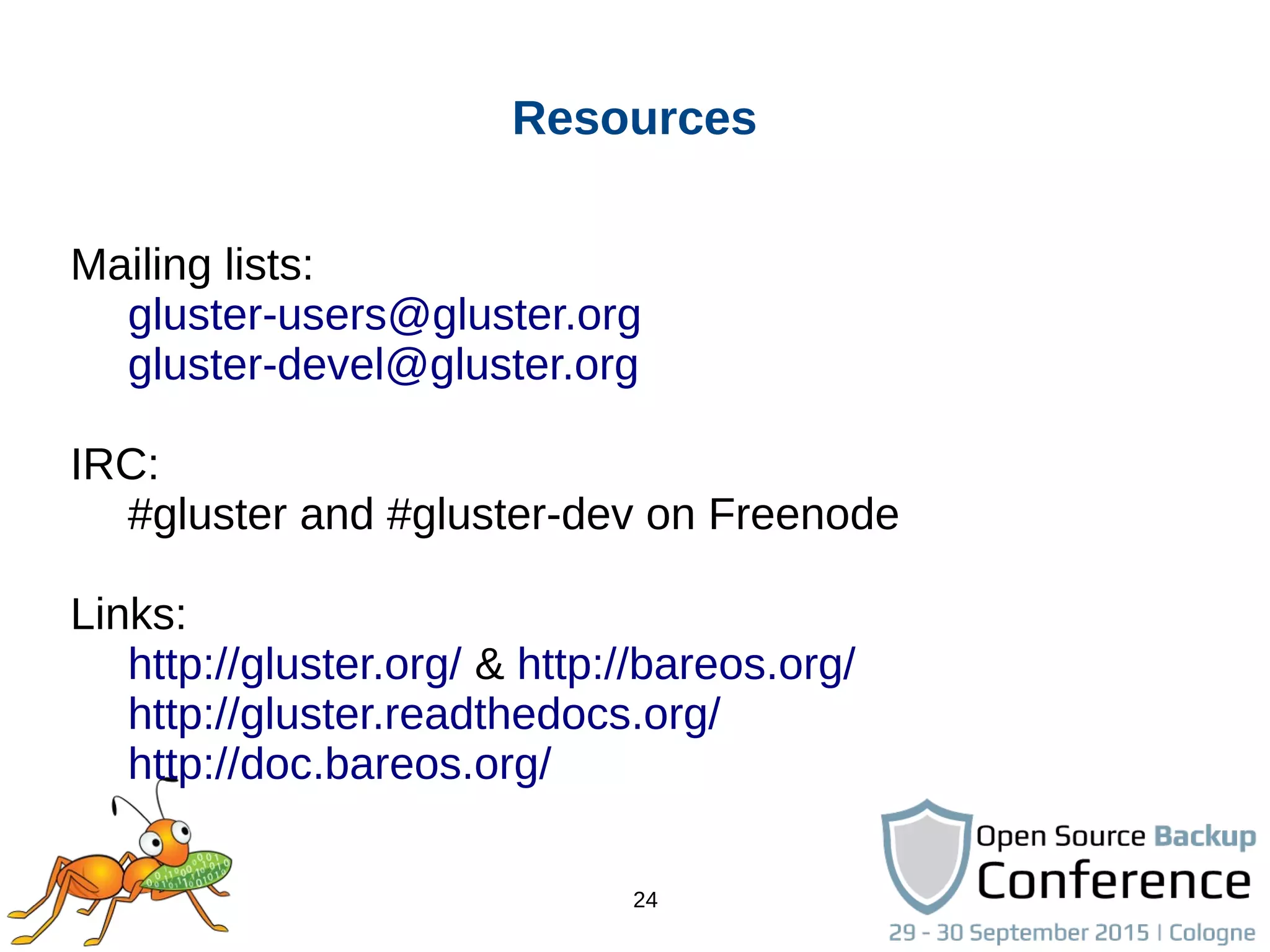

This document describes how to integrate Bareos with the Gluster distributed file system to provide scalable backup storage. The agenda covers the Gluster integration in Bareos, an introduction to GlusterFS, a quick start guide, an example configuration with a demo, and future plans. The GlusterFS introduction explains the architecture and its core concepts: bricks, volumes, peers, and site replication. The remainder of the document gives quick start instructions for setting up Gluster and configuring Bareos to use the Gluster backend, so that backups can scale across multiple servers.