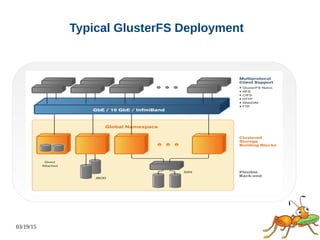

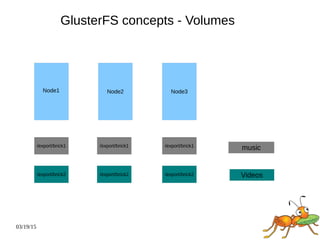

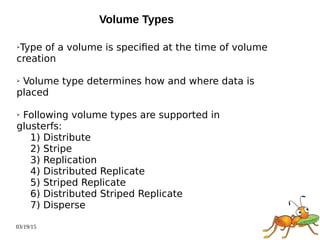

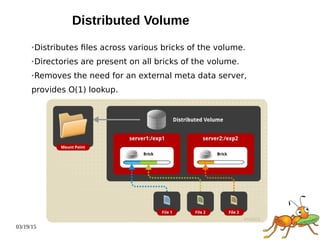

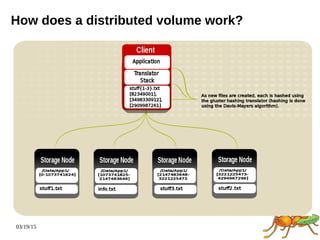

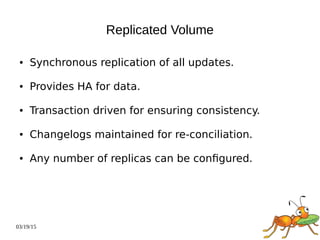

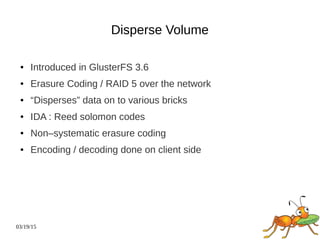

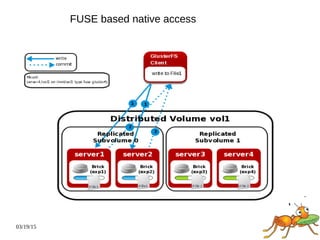

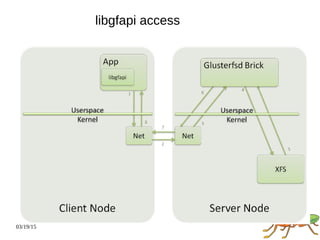

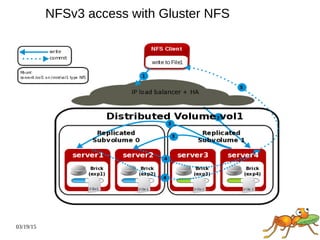

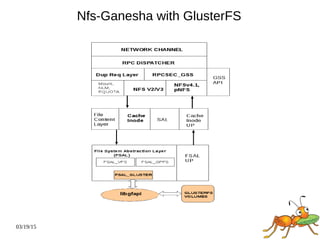

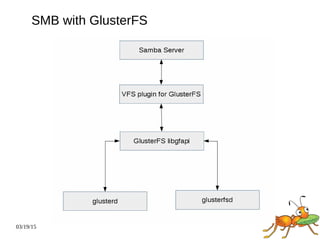

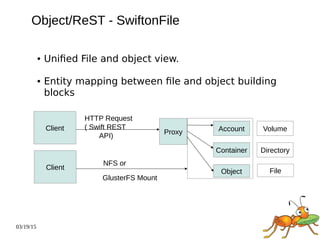

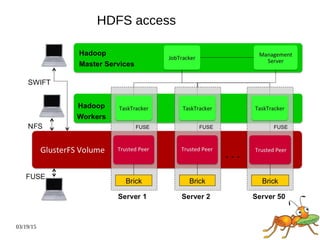

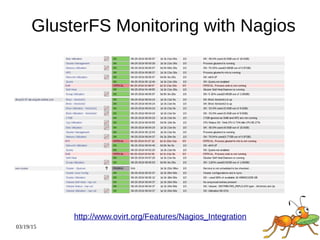

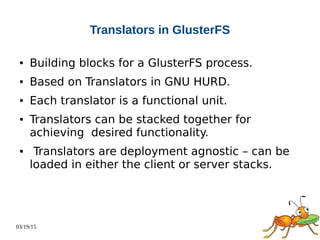

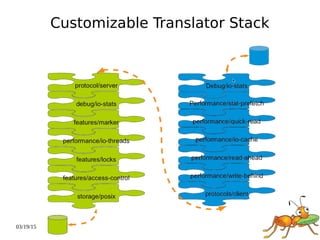

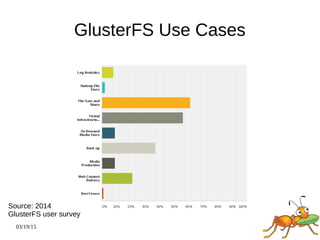

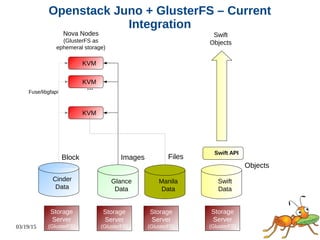

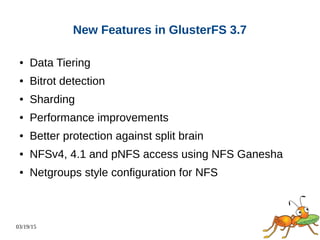

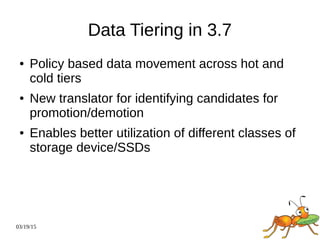

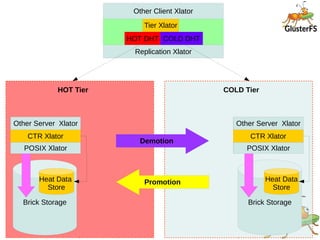

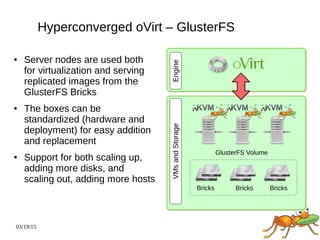

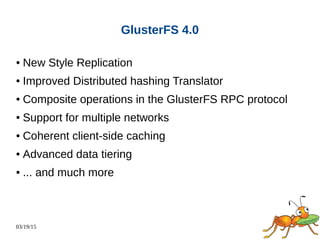

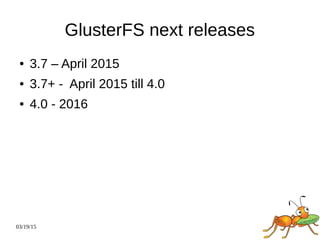

This presentation gives an overview of GlusterFS: why it was created, what it is, how it works, where it is used, and which new features are planned. GlusterFS is a scale-out distributed file system that aggregates storage over a network into a unified namespace. The presentation covers the available volume types, access mechanisms, features, and integrations with projects such as OpenStack. It also outlines features coming in GlusterFS 3.7, such as data tiering, bitrot detection, and NFSv4 support, as well as the goals for a major new version, GlusterFS 4.0.