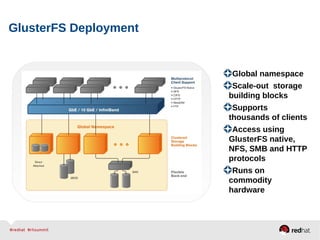

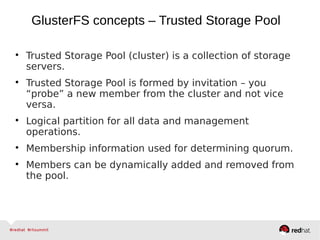

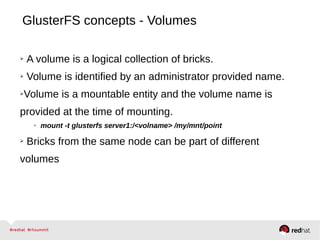

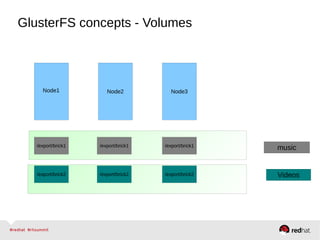

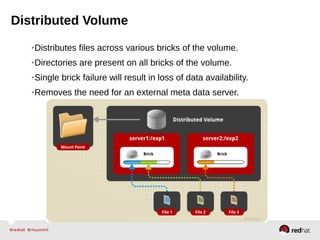

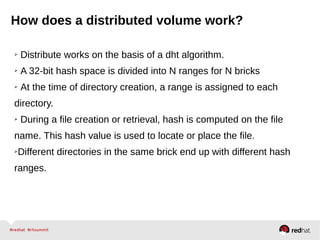

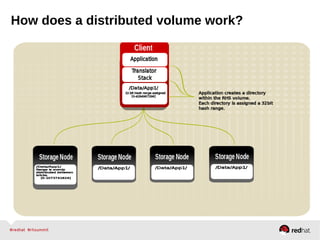

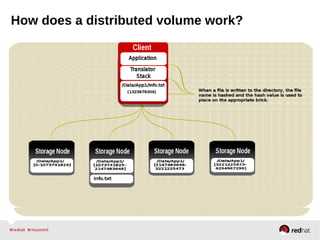

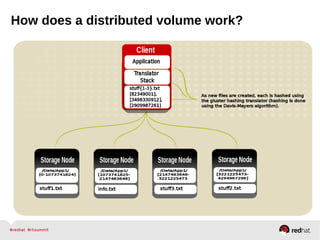

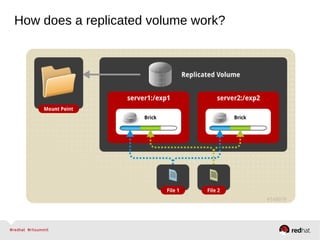

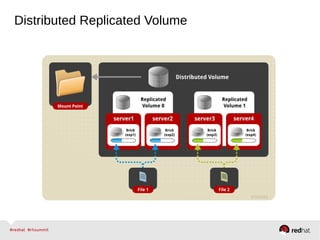

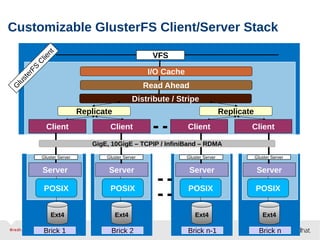

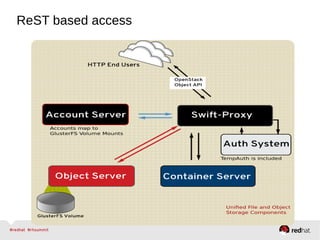

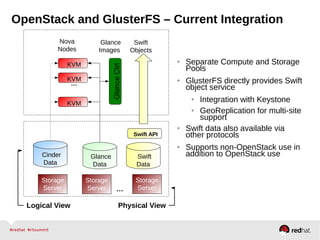

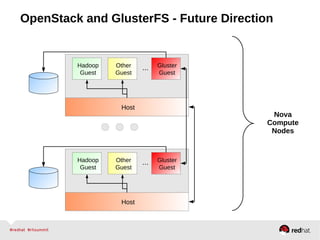

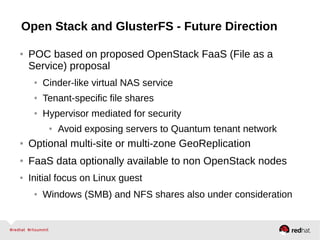

GlusterFS is an open-source distributed file system that provides scalable storage for OpenStack. It aggregates disk storage from multiple servers into a single global namespace that clients can mount over the network. GlusterFS uses a hashing algorithm, rather than a central metadata server, to place files across servers, and volumes can be configured with replication or erasure coding for redundancy. It integrates with OpenStack components such as Swift, Cinder, and Glance to provide block, object, and file-level storage services for virtual machines. Future integration plans include a Cinder-like file service (FaaS) and support for Windows and NFS shares.
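
The hash-based placement described above can be illustrated with a small sketch. This is not GlusterFS's actual implementation: the brick names, the helper functions (`build_layout`, `brick_for_hash`, `locate`), and the use of CRC32 as the hash are all assumptions chosen for illustration. The idea it shows is only that a 32-bit hash space is split into one range per storage target, so any client can compute where a file lives from its name without asking a metadata server.

```python
import zlib
from bisect import bisect_right

HASH_SPACE = 2 ** 32  # size of the 32-bit hash space


def build_layout(bricks):
    """Return the start offset of each brick's contiguous hash range.

    The hash space is split evenly; the last brick also absorbs any
    remainder left over by the integer division.
    """
    step = HASH_SPACE // len(bricks)
    return [i * step for i in range(len(bricks))]


def brick_for_hash(h, bricks, layout):
    """Pick the brick whose range contains hash value h."""
    # bisect_right finds the first start offset greater than h,
    # so the range that owns h is the one just before it.
    return bricks[bisect_right(layout, h) - 1]


def locate(filename, bricks, layout):
    """Map a file name to a brick using CRC32 (an illustrative stand-in hash)."""
    h = zlib.crc32(filename.encode("utf-8"))  # value in 0 .. 2**32 - 1
    return brick_for_hash(h, bricks, layout)


if __name__ == "__main__":
    # Hypothetical bricks; each entry could equally be a replica set,
    # e.g. a tuple of two servers that hold identical copies.
    bricks = ["server1:/export/brick", "server2:/export/brick", "server3:/export/brick"]
    layout = build_layout(bricks)
    for name in ["vm-disk-01.img", "glance-image.qcow2", "notes.txt"]:
        print(name, "->", locate(name, bricks, layout))
```

Because placement depends only on the file name and the layout, every client computes the same answer independently. Treating each entry in `bricks` as a group of servers rather than a single server is one simple way to picture how replication combines with distribution.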