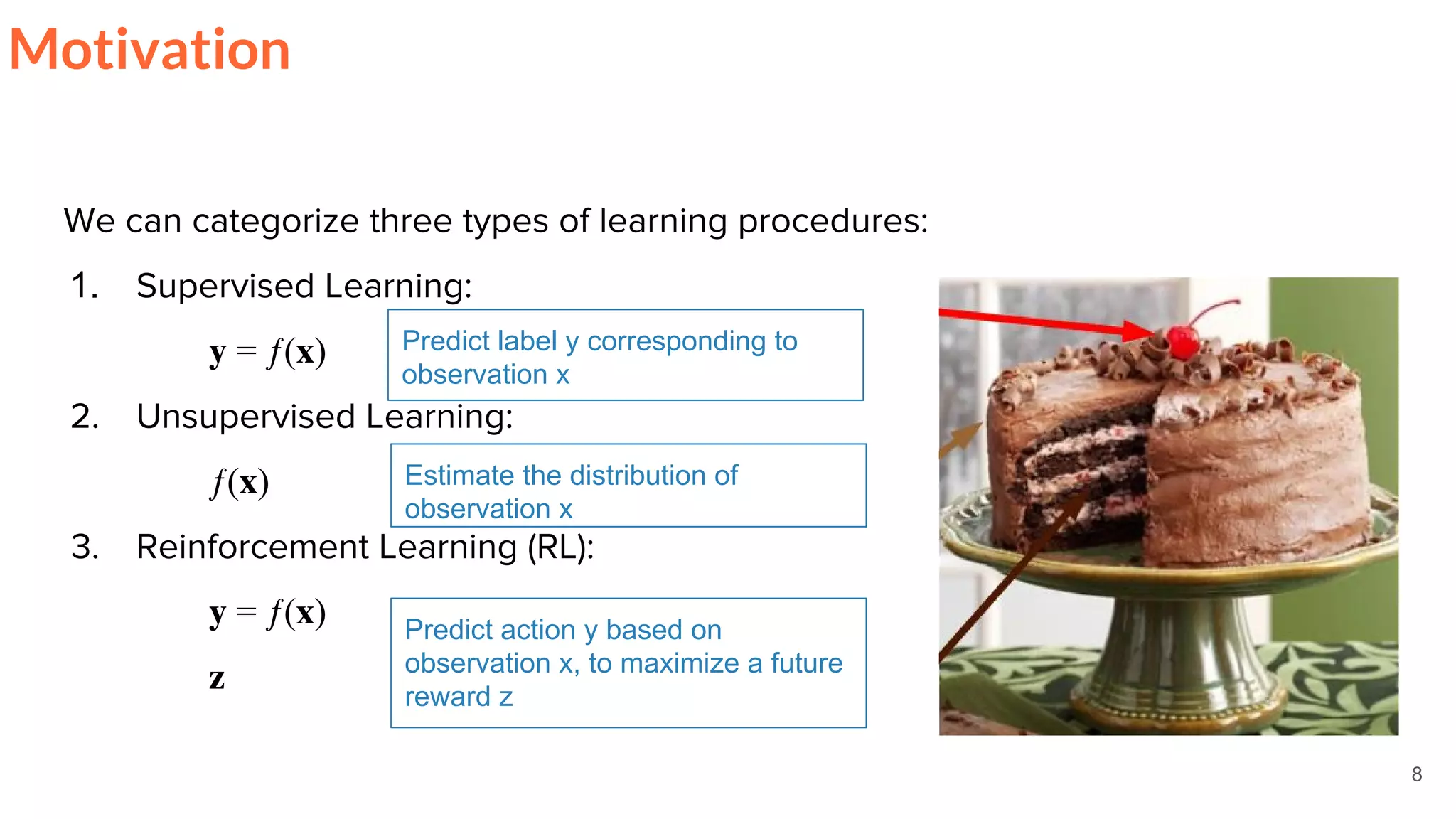

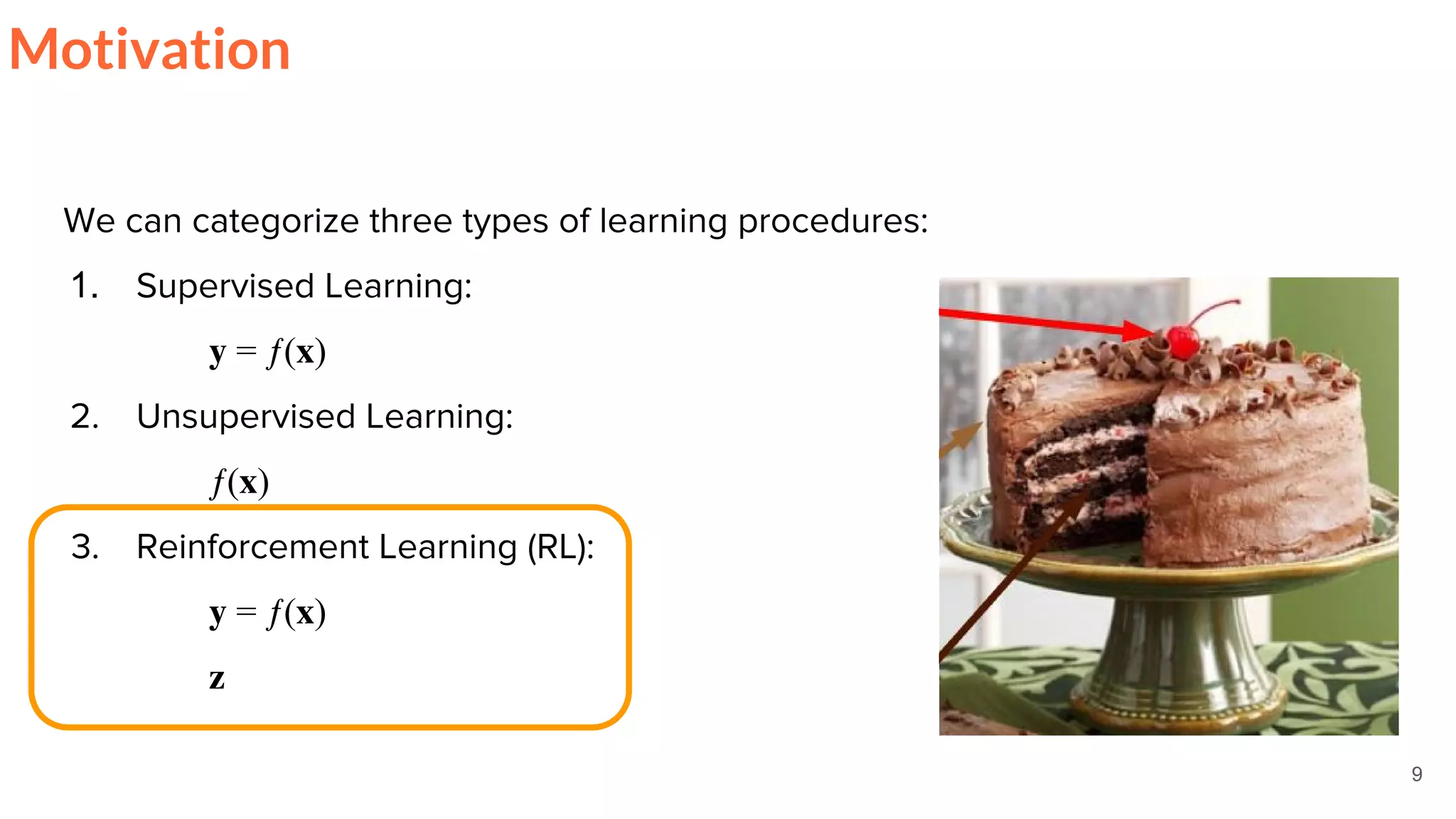

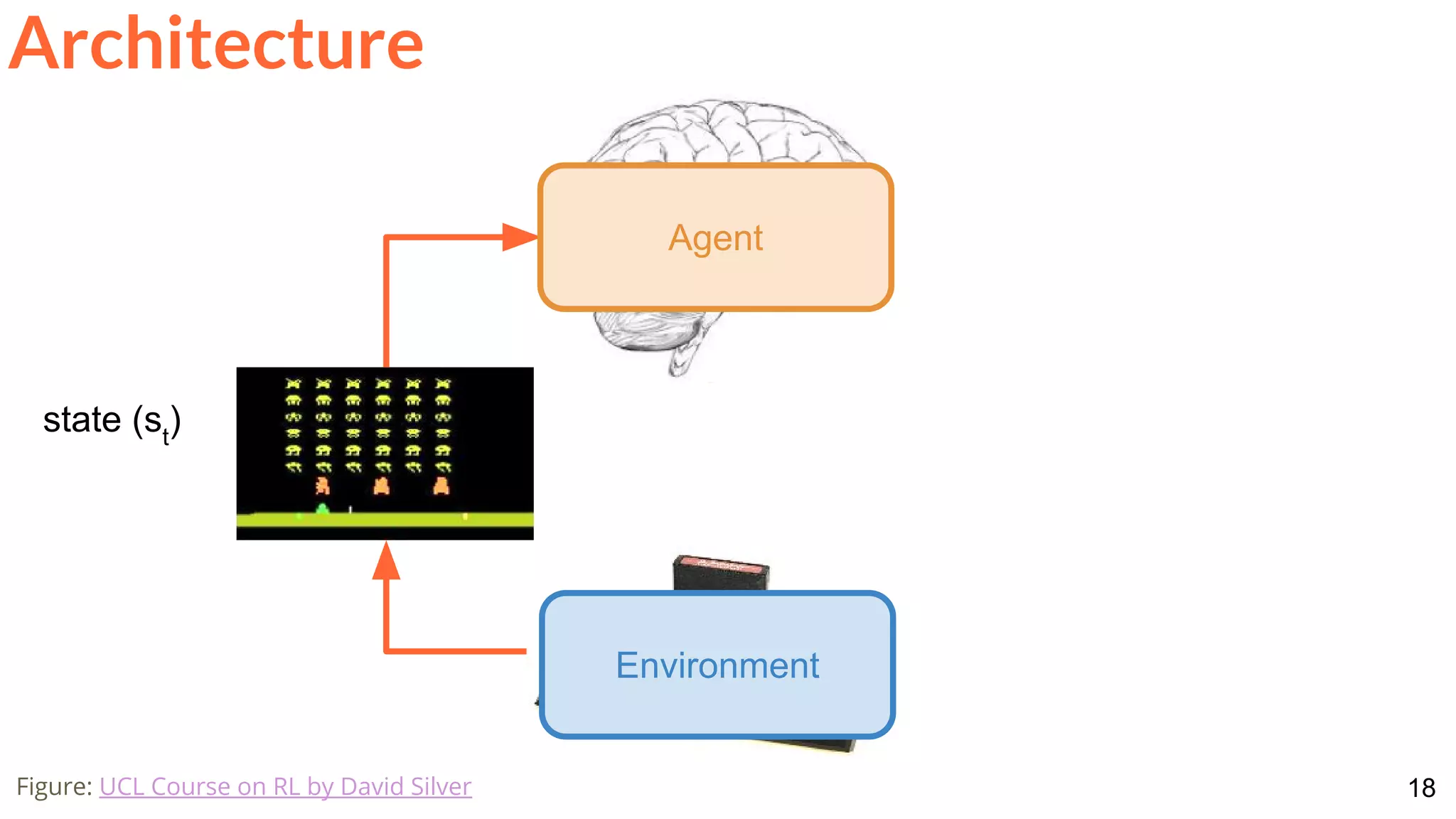

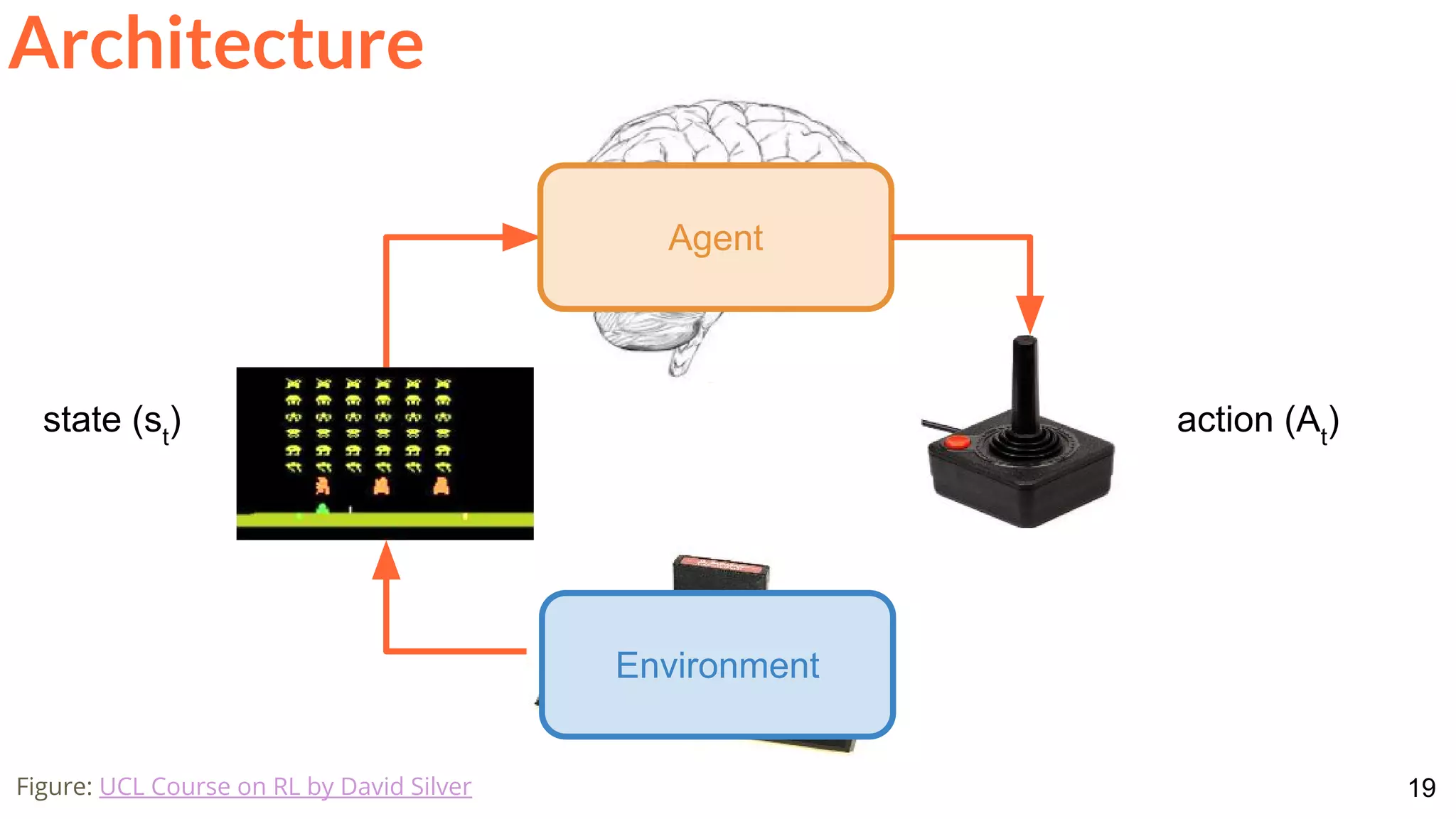

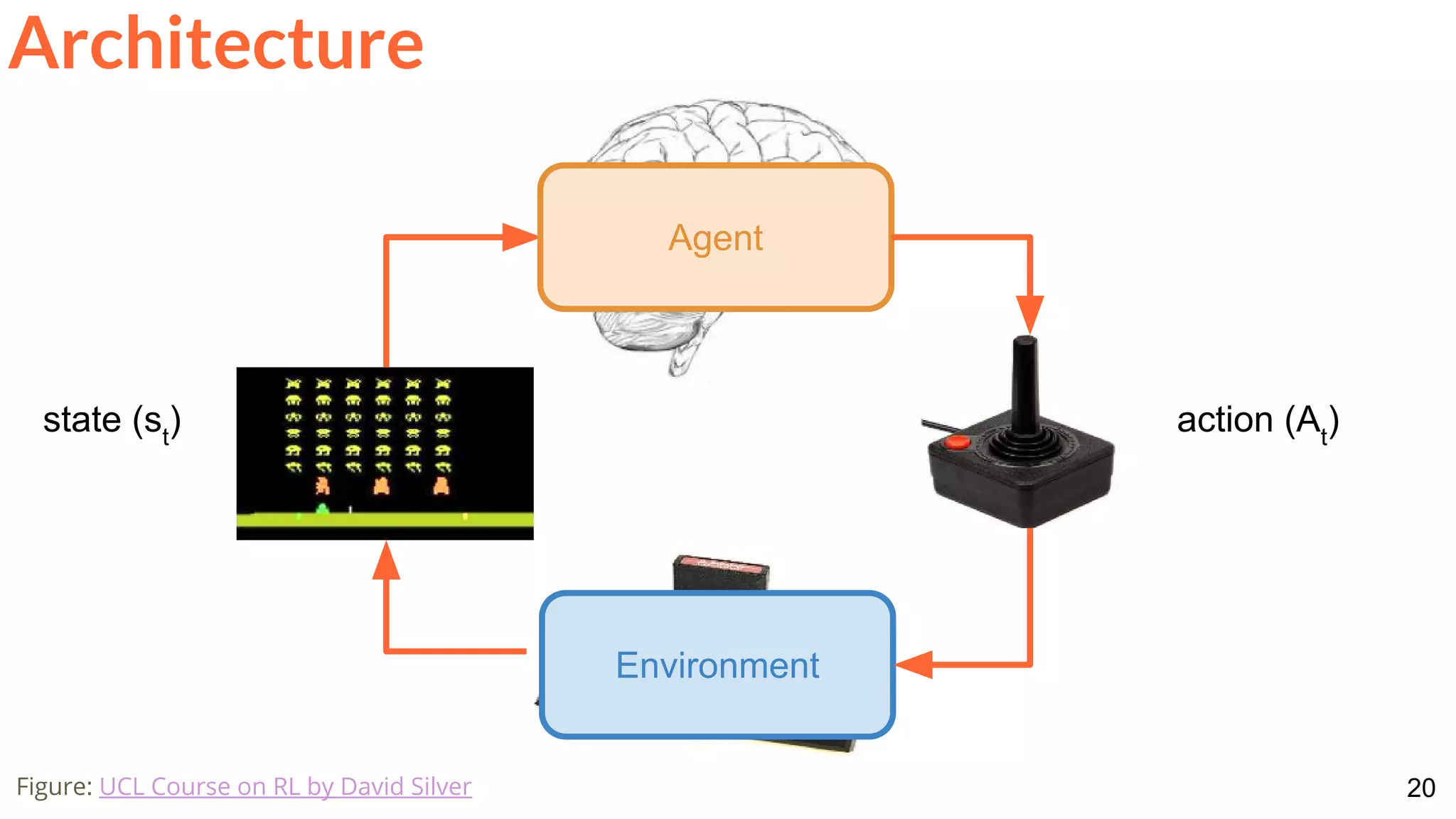

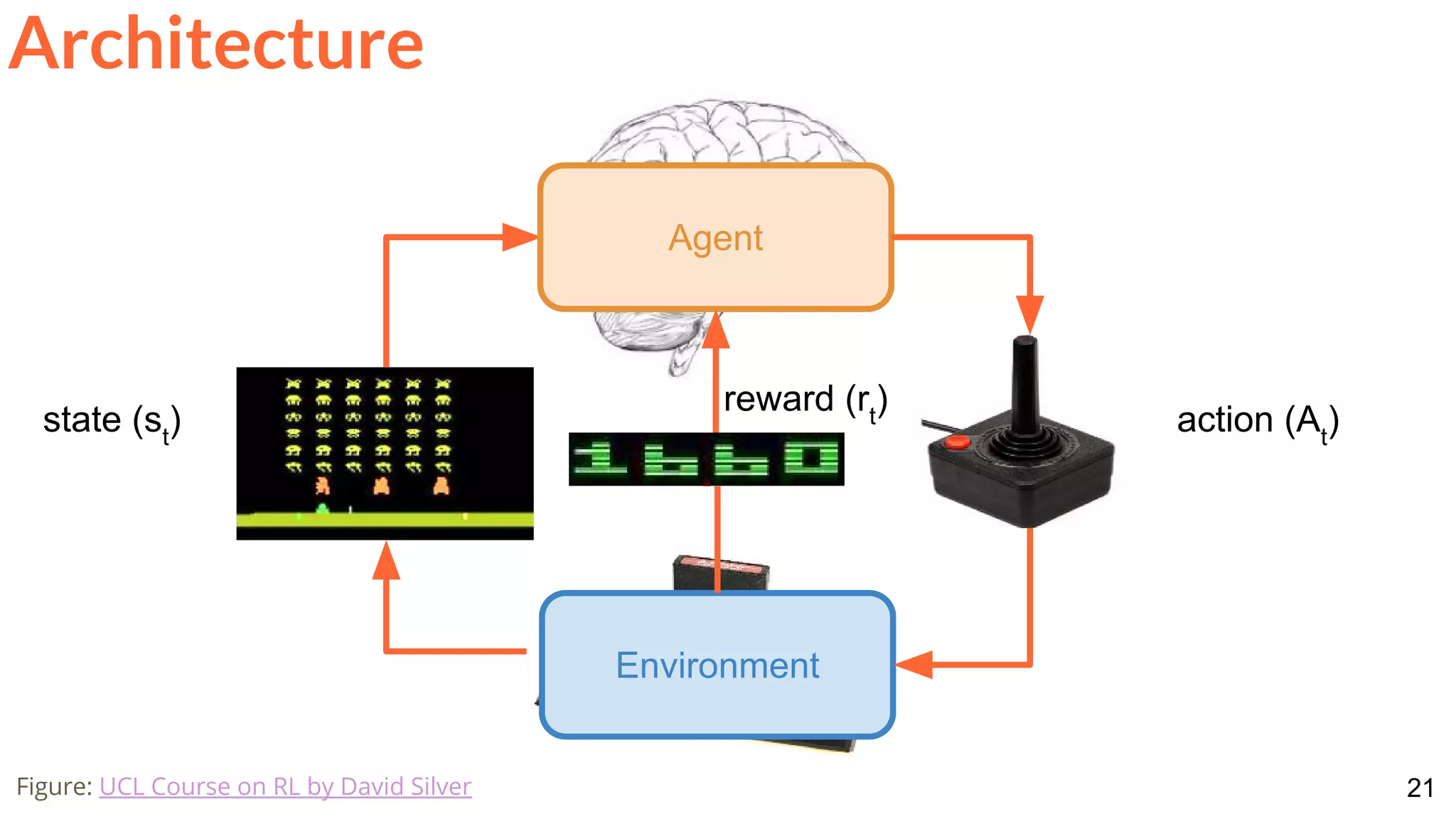

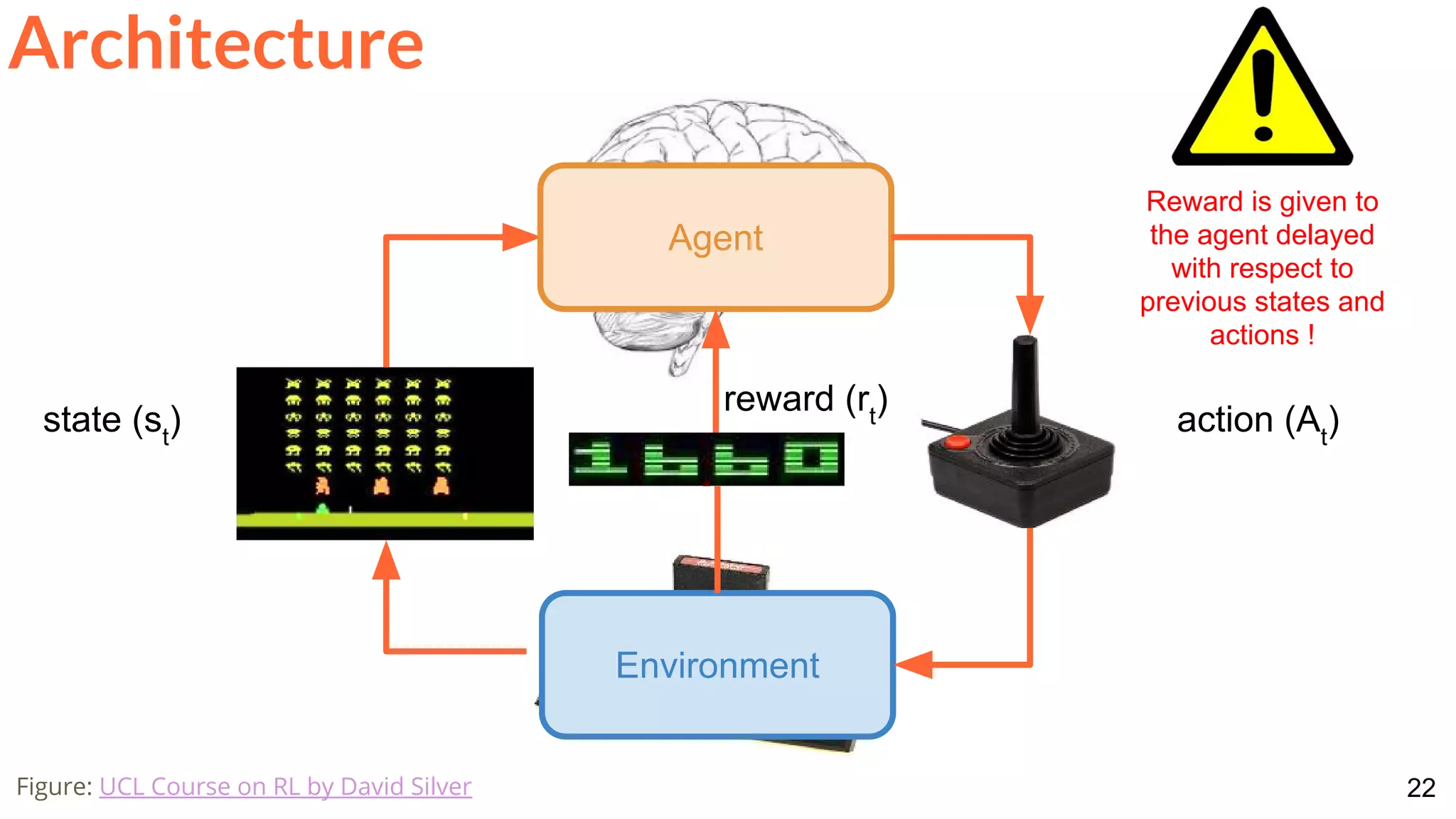

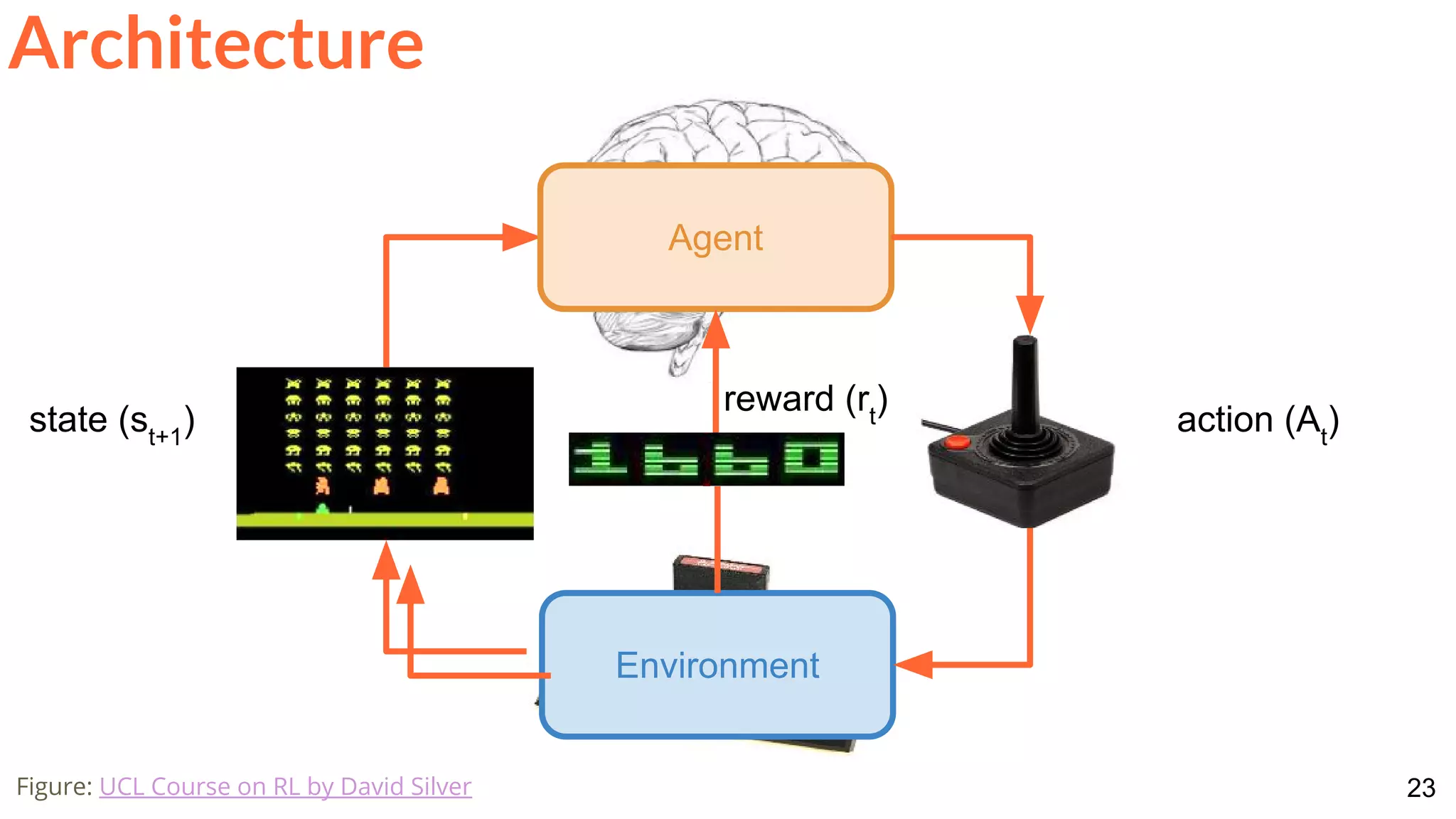

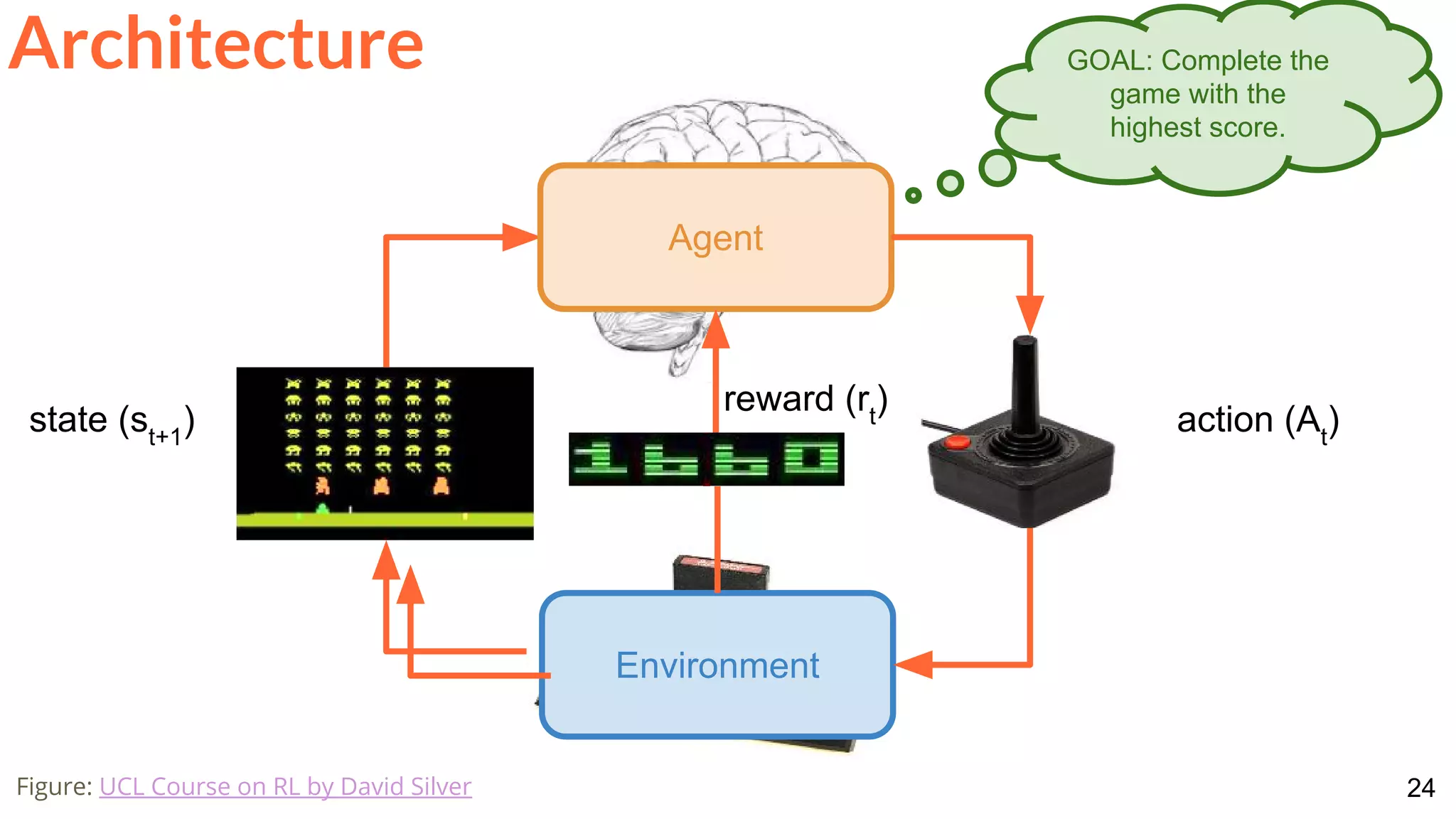

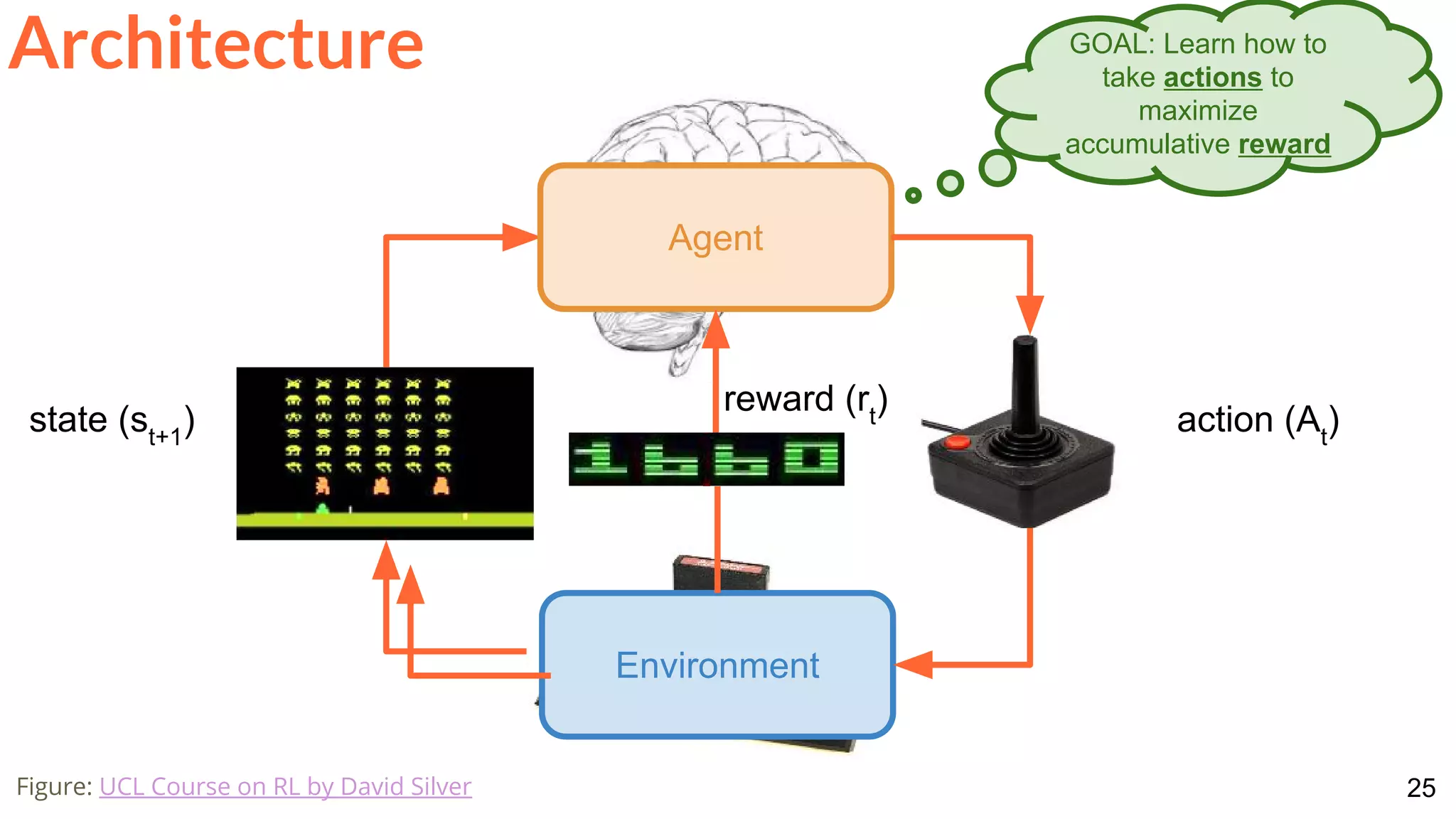

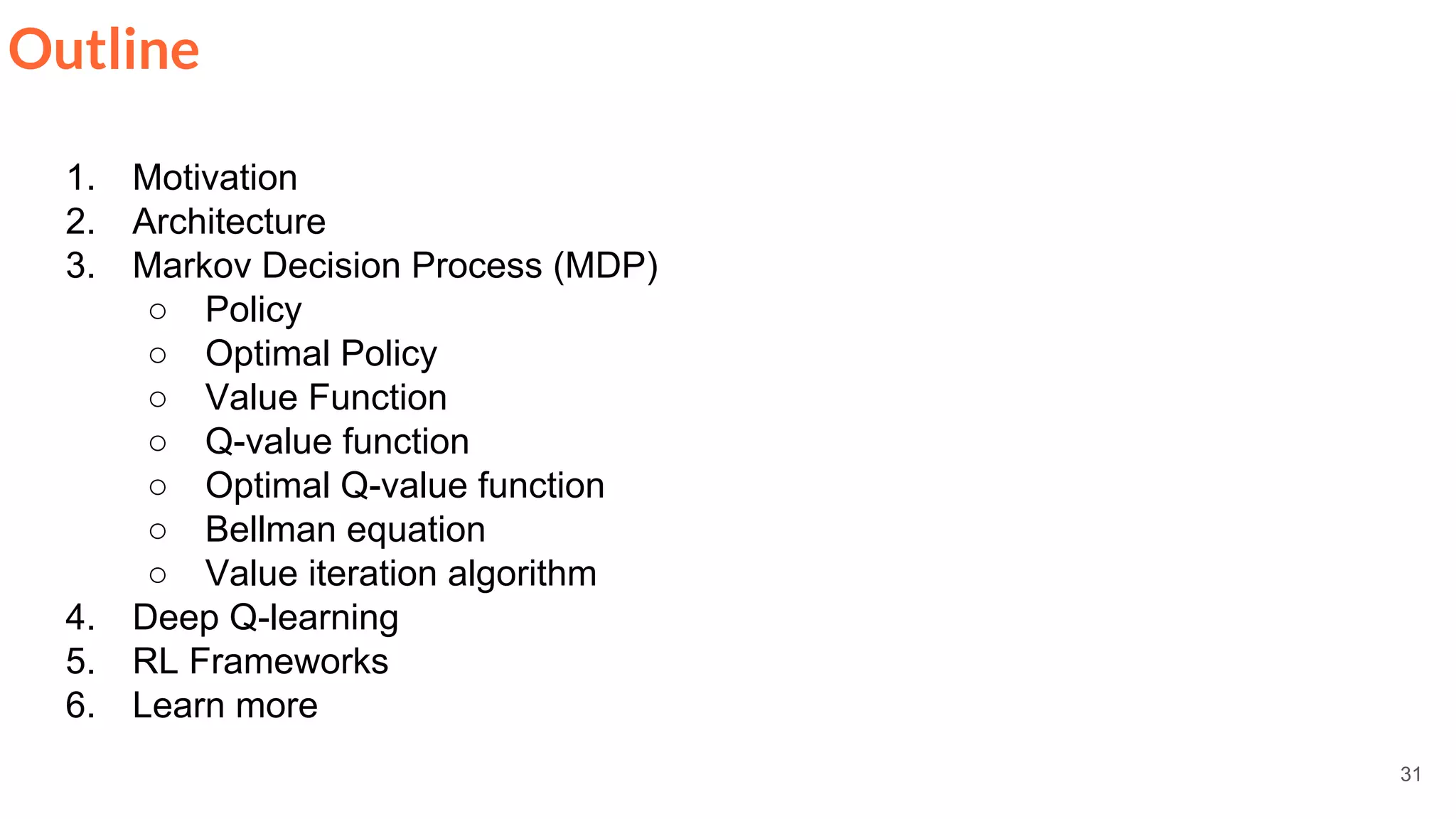

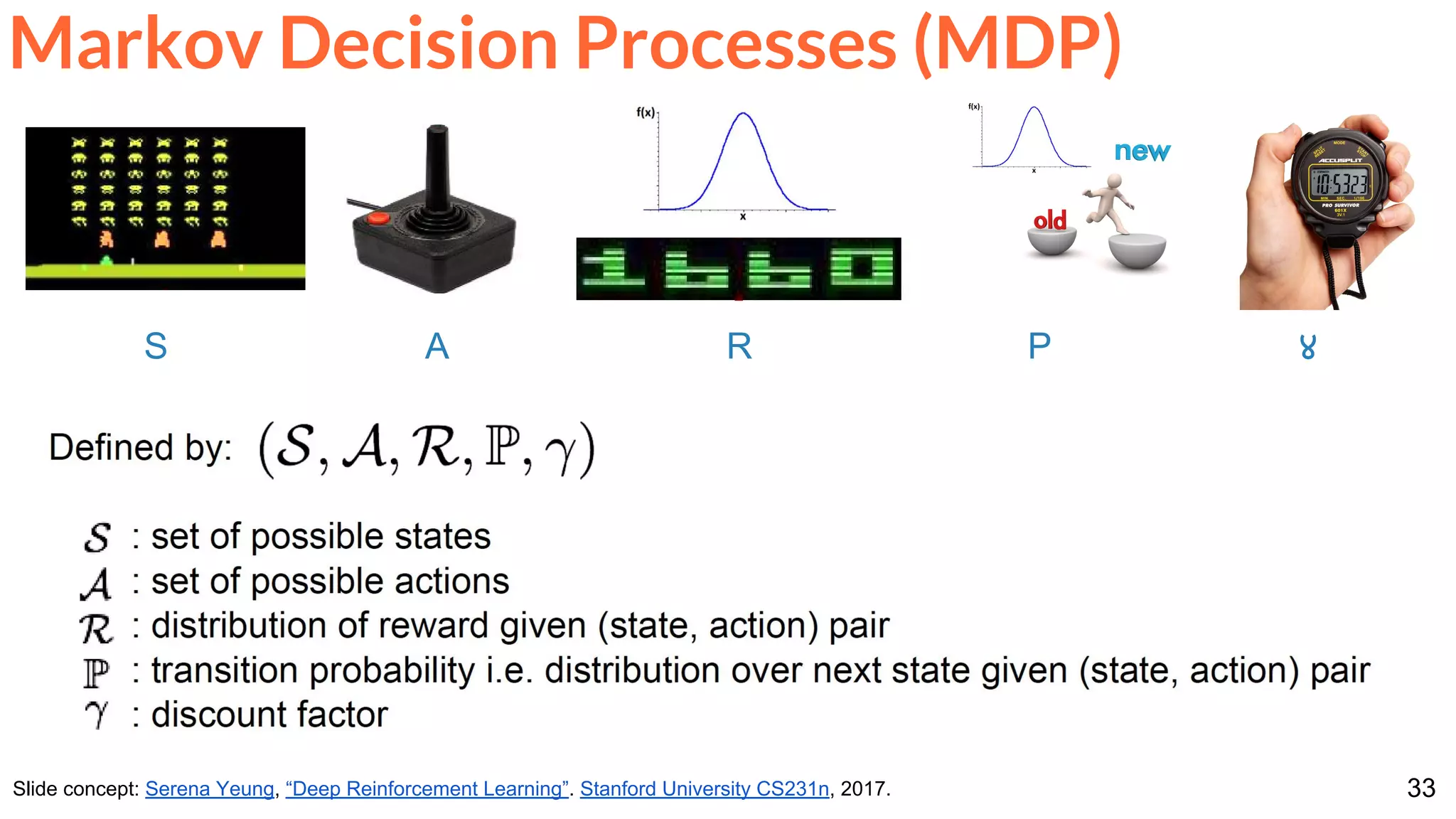

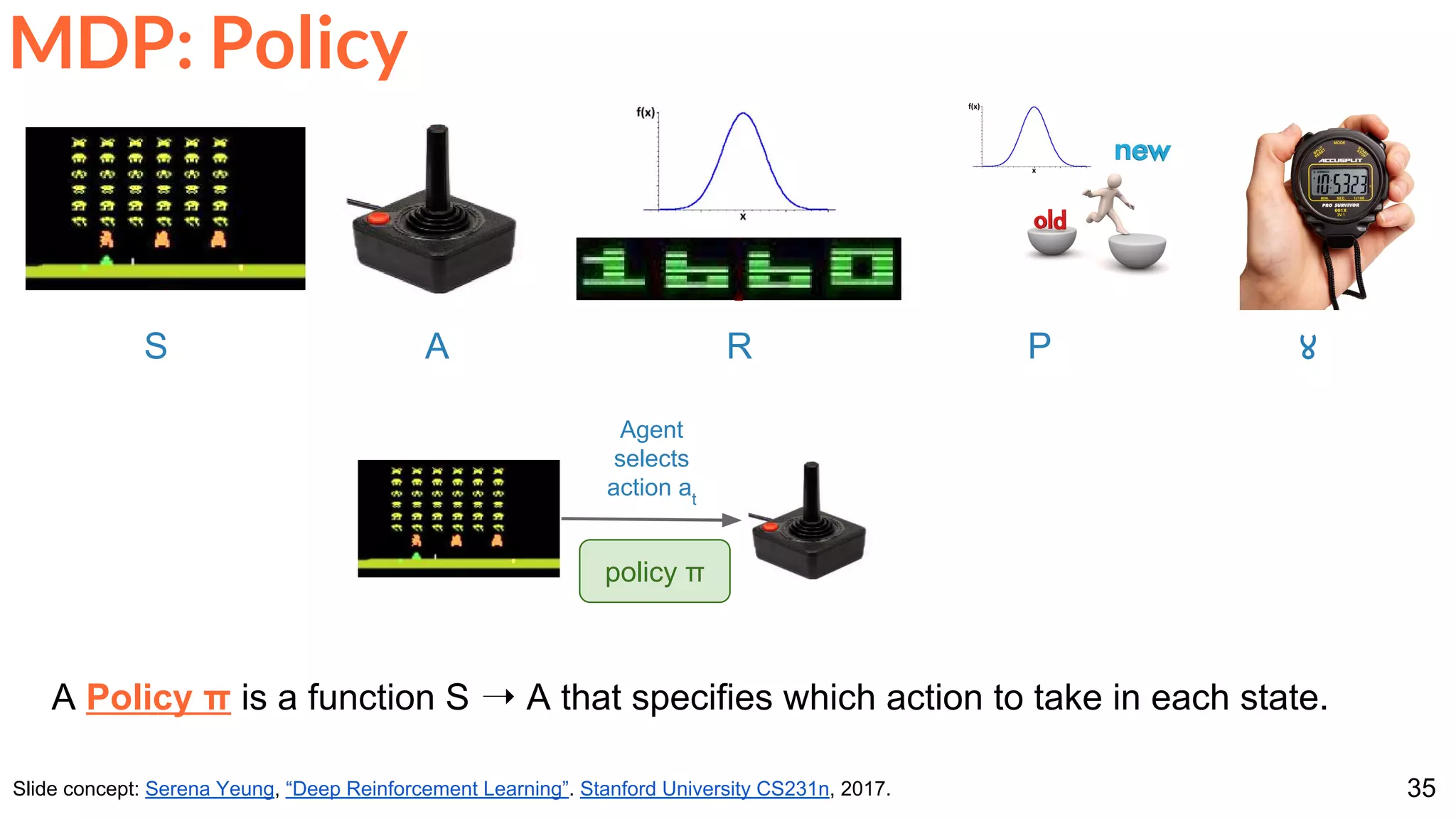

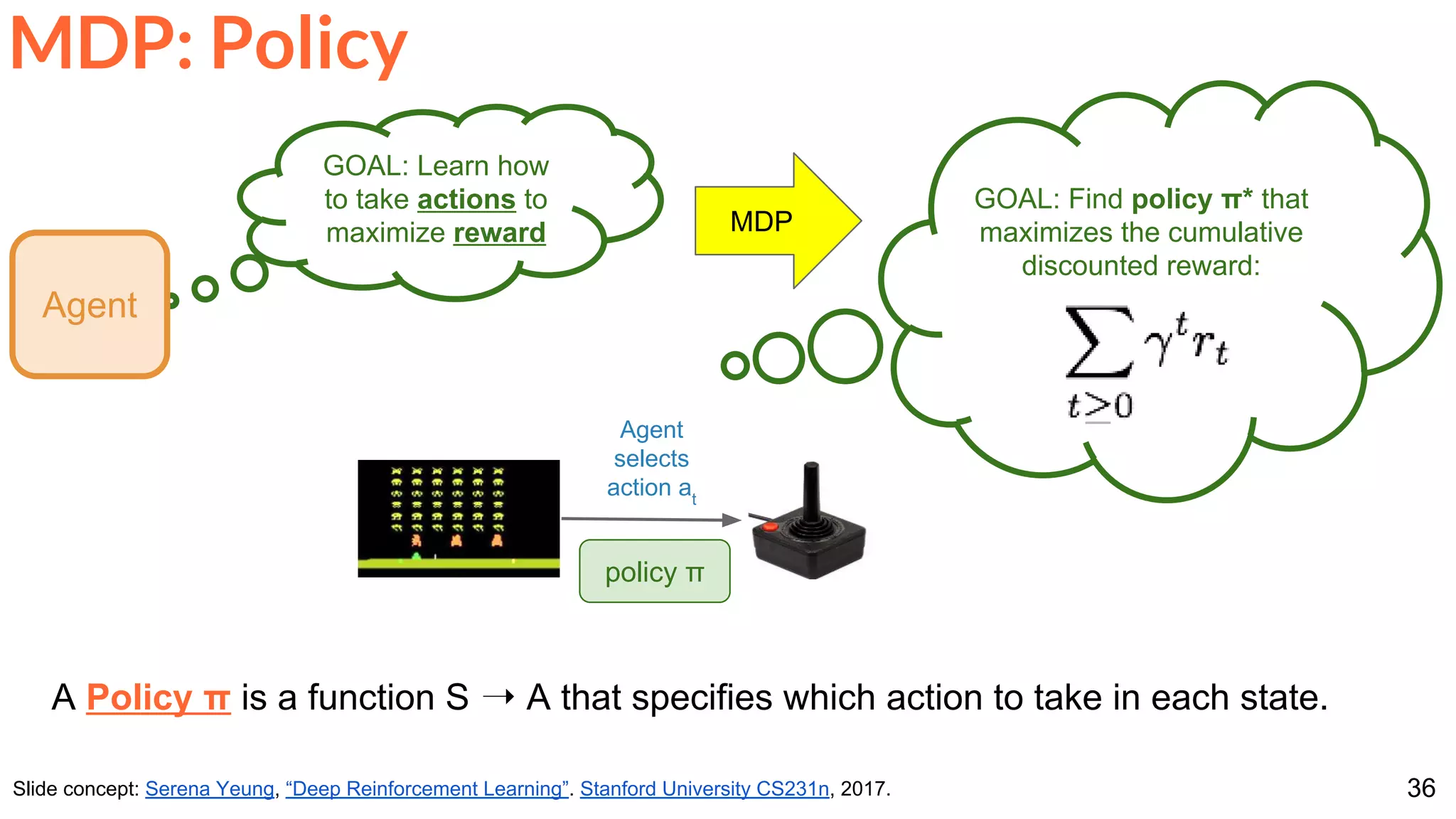

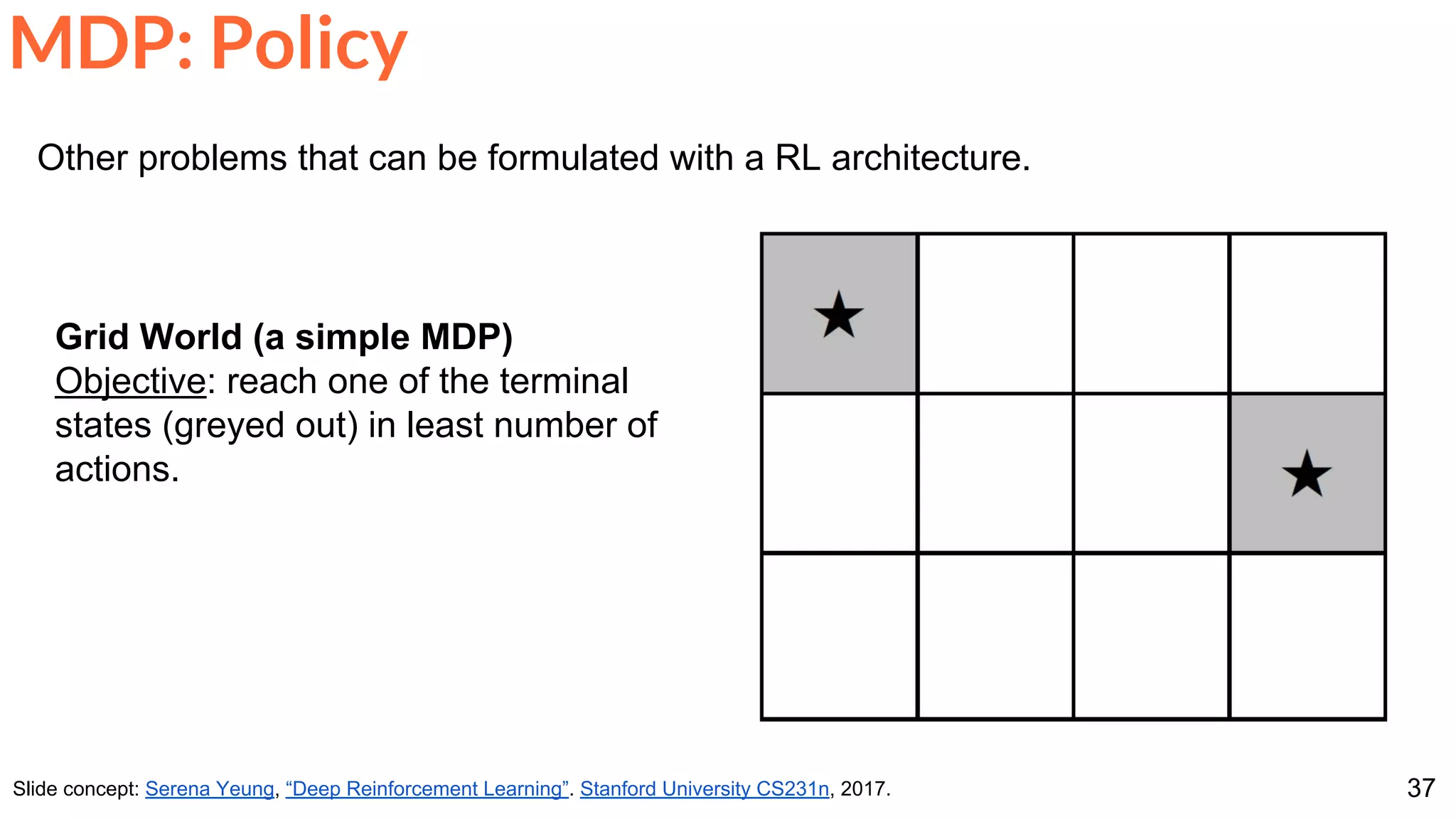

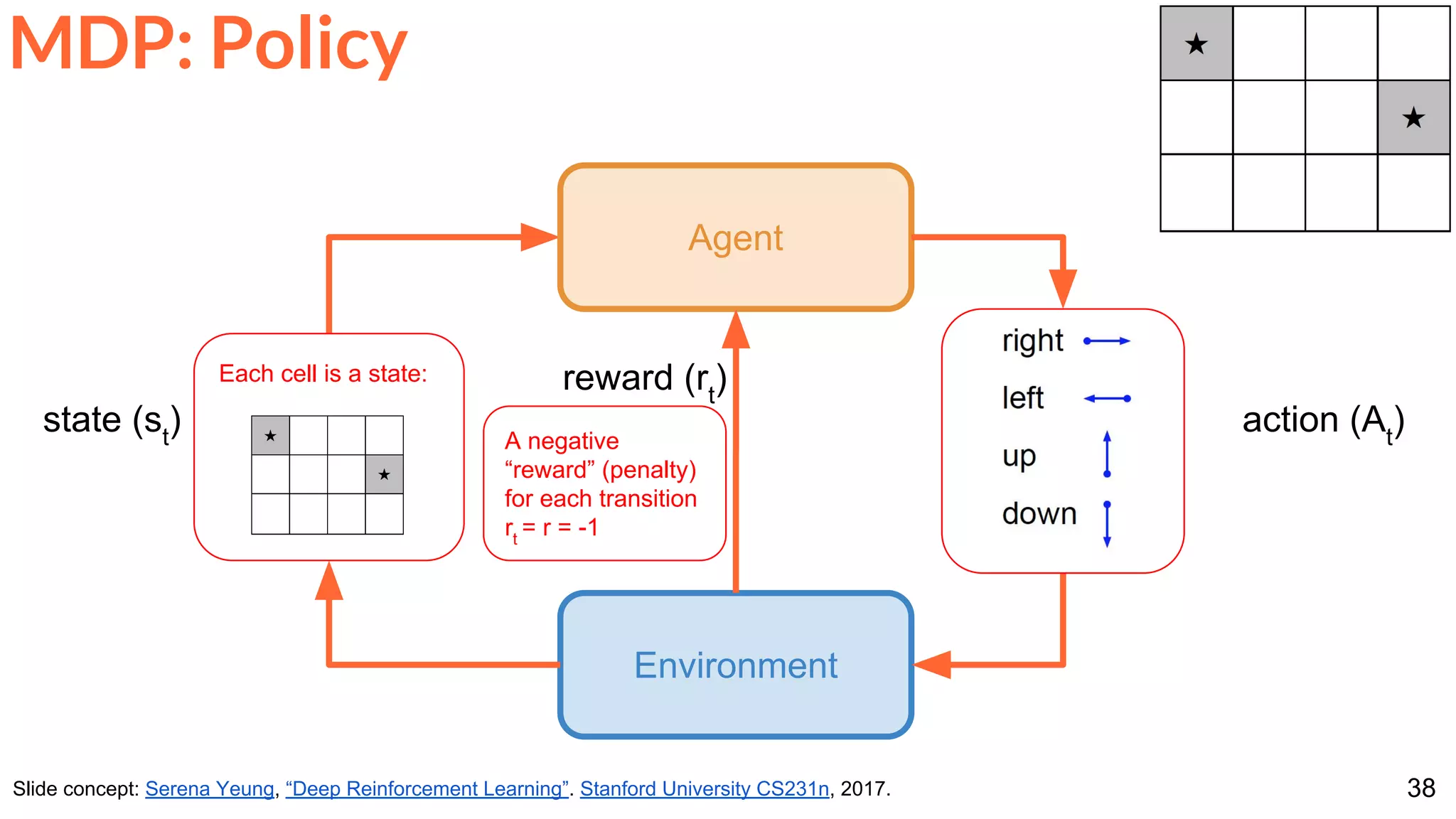

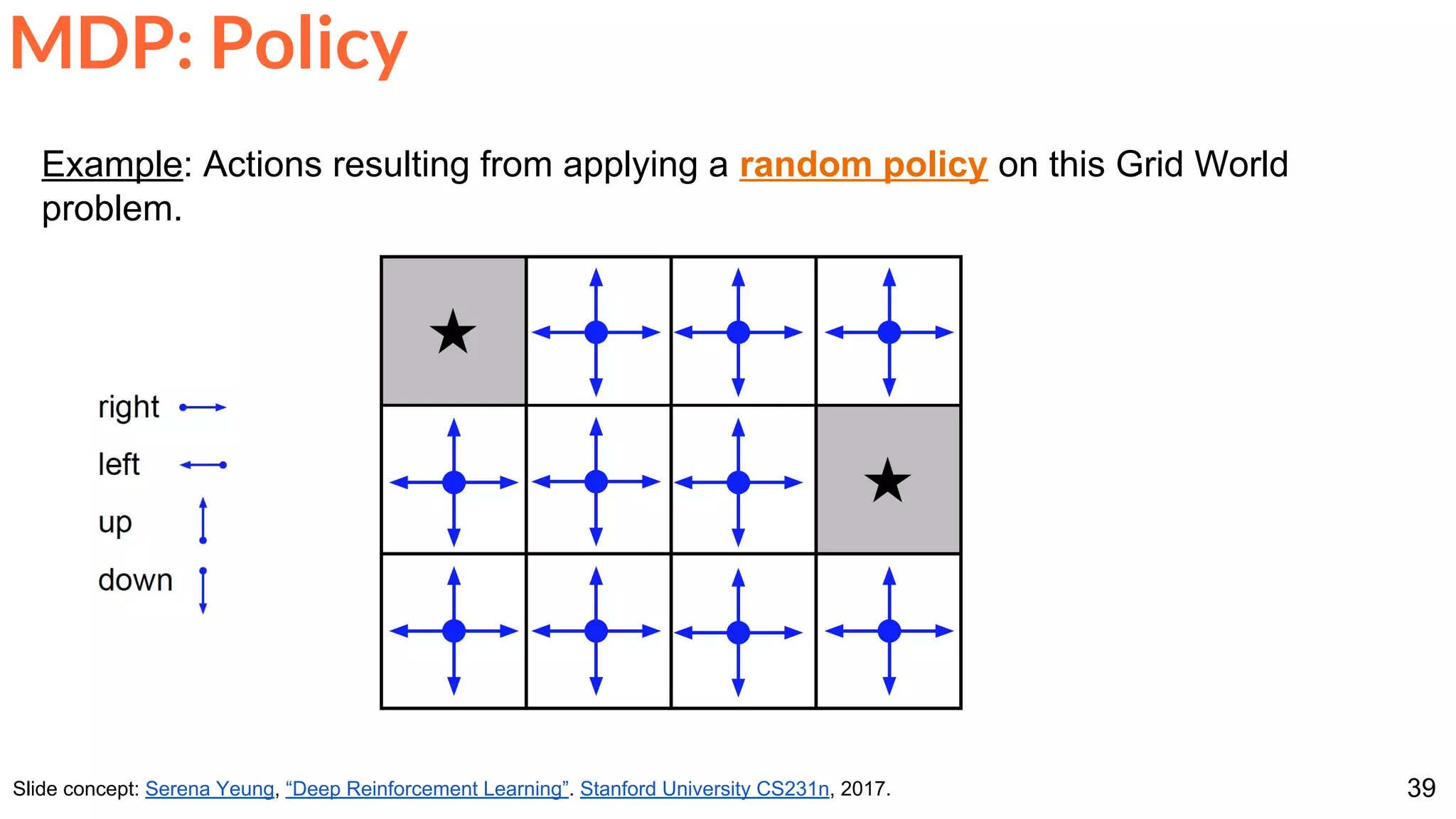

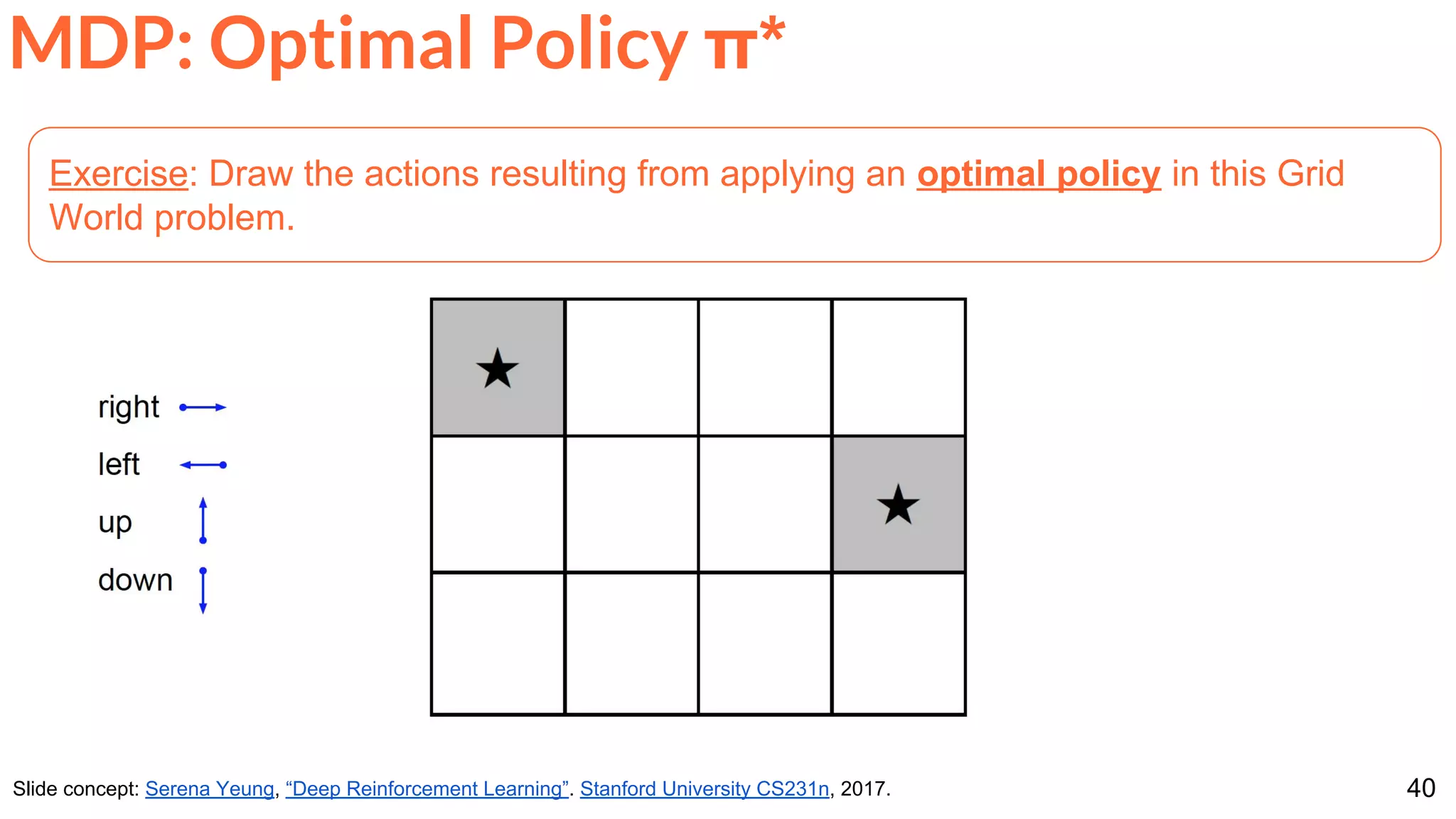

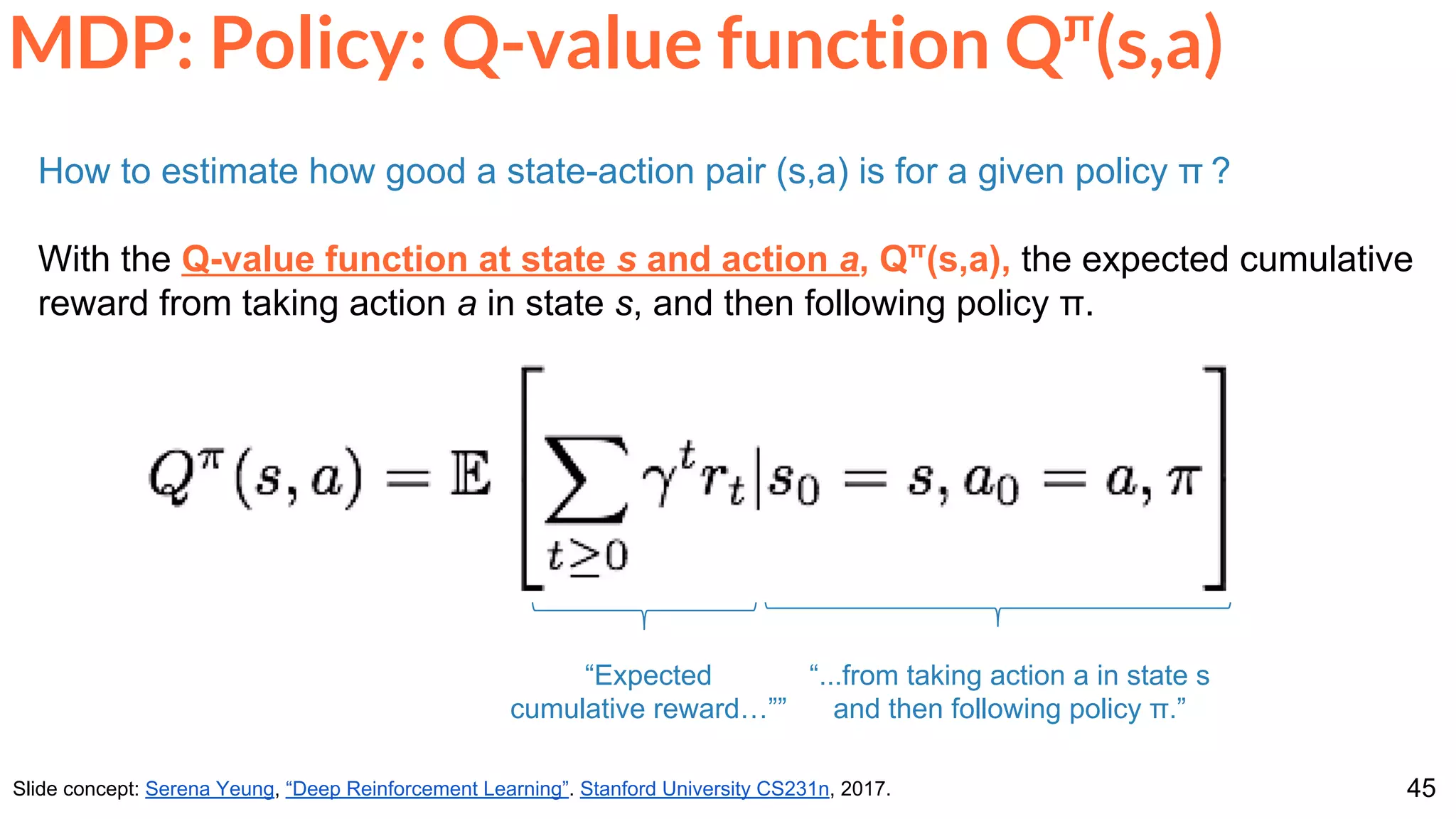

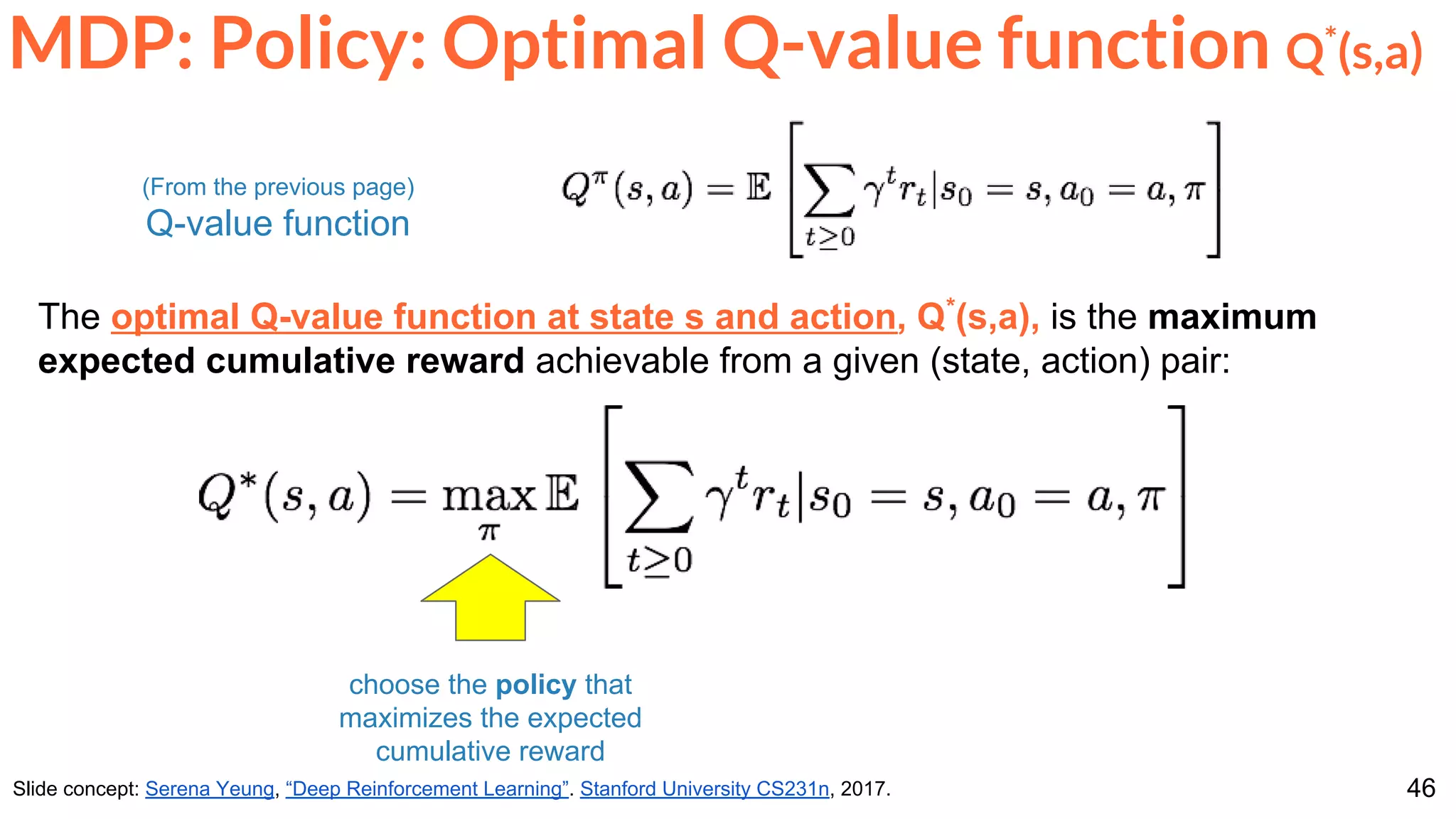

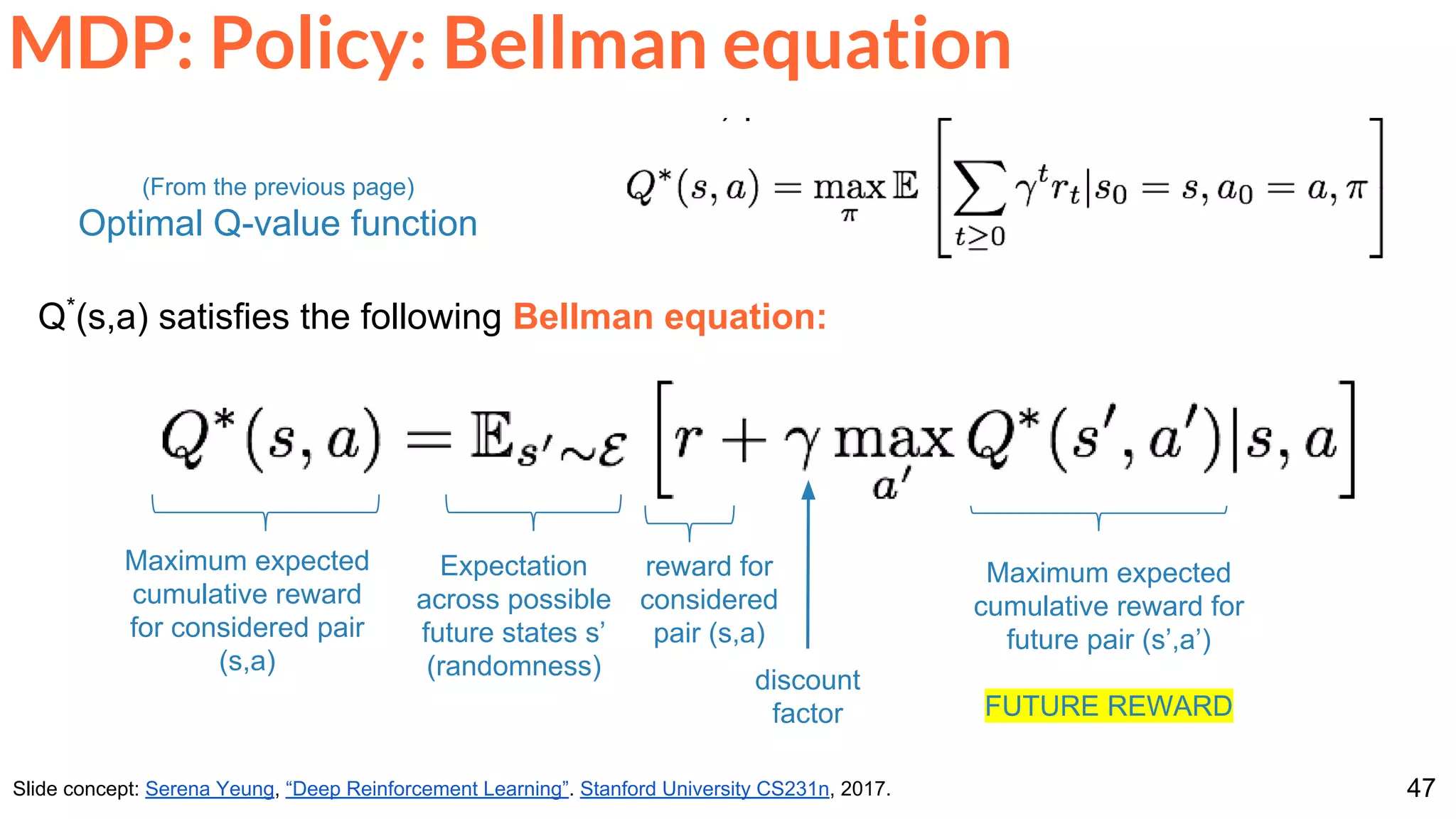

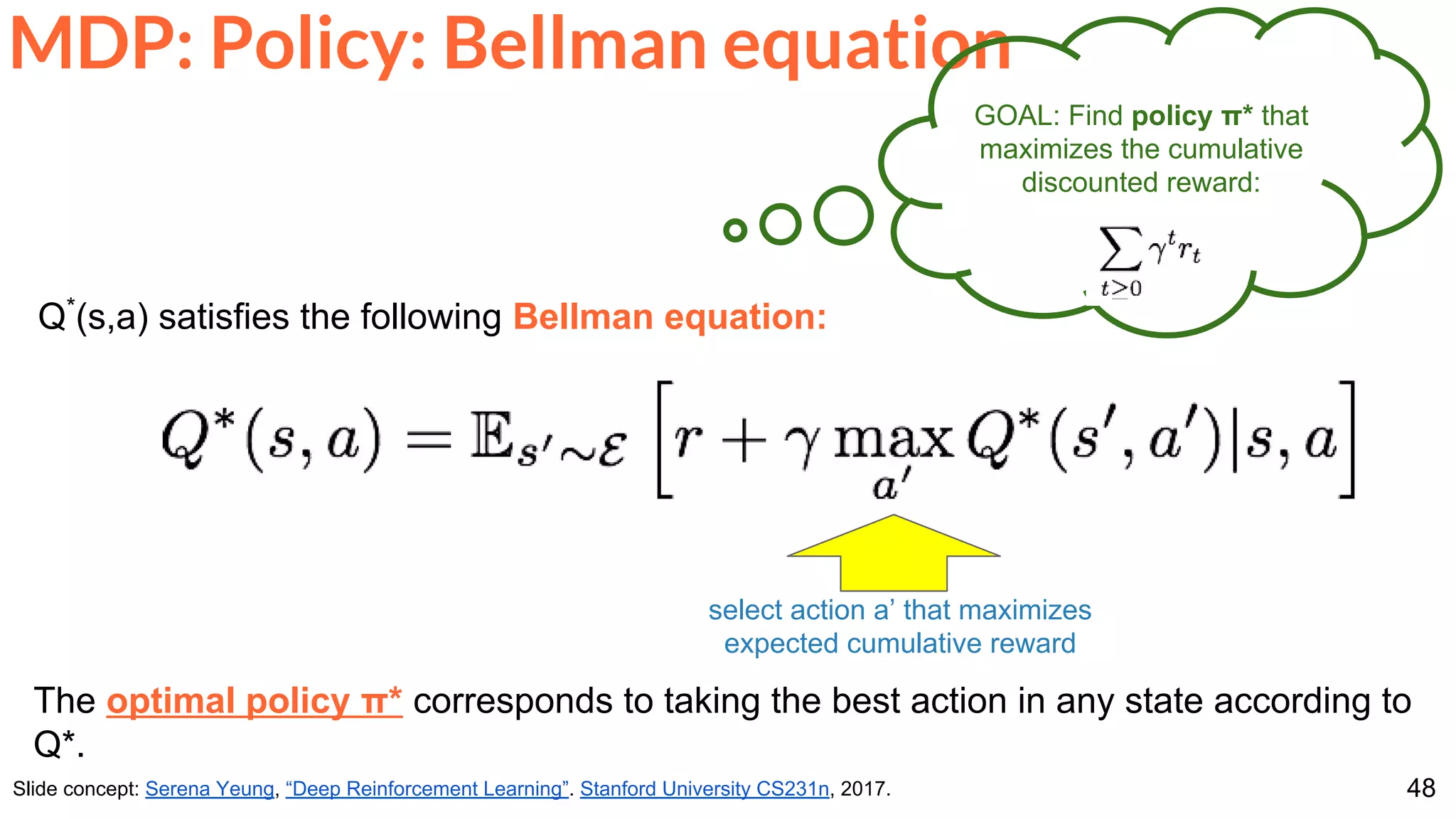

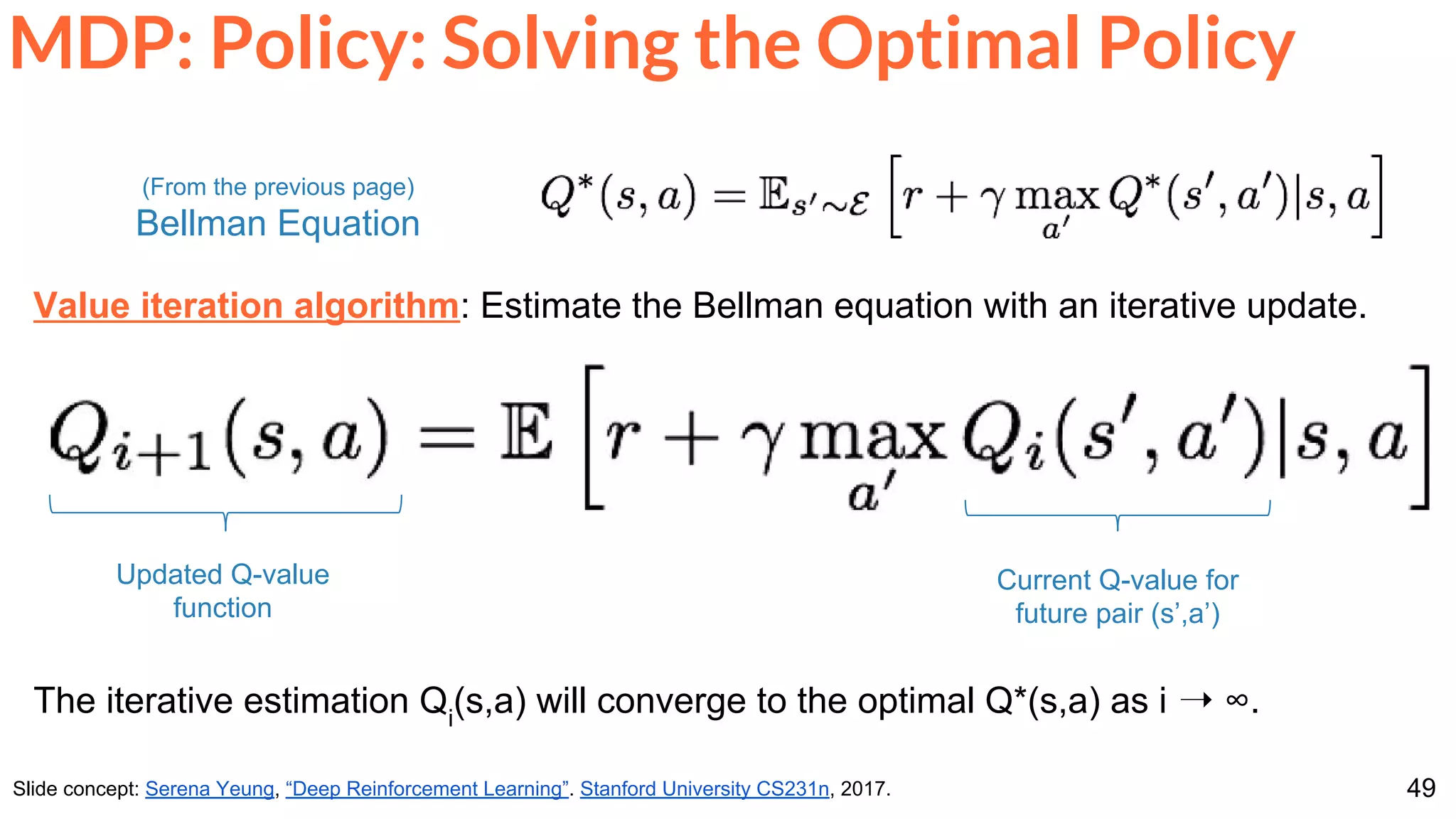

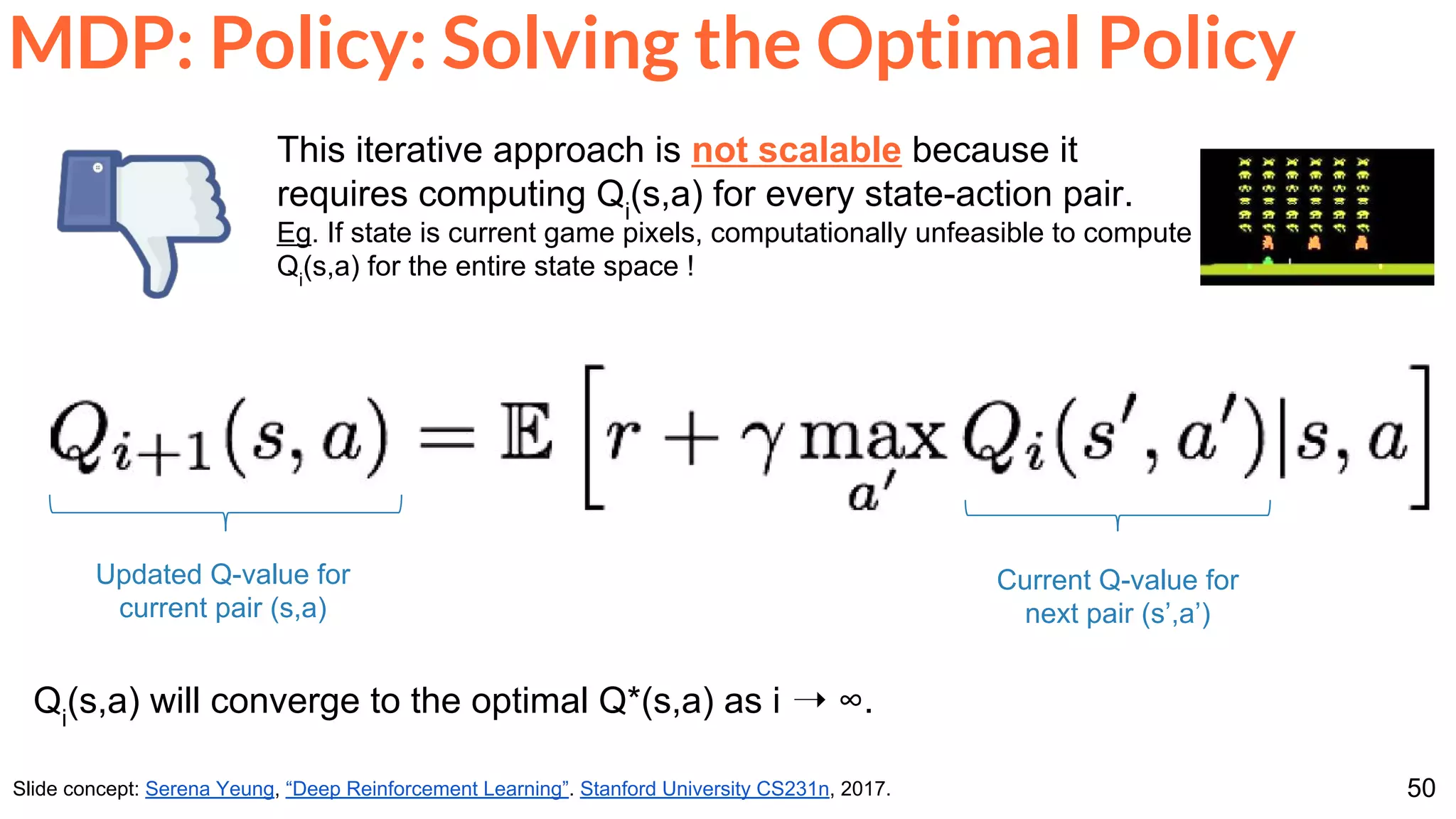

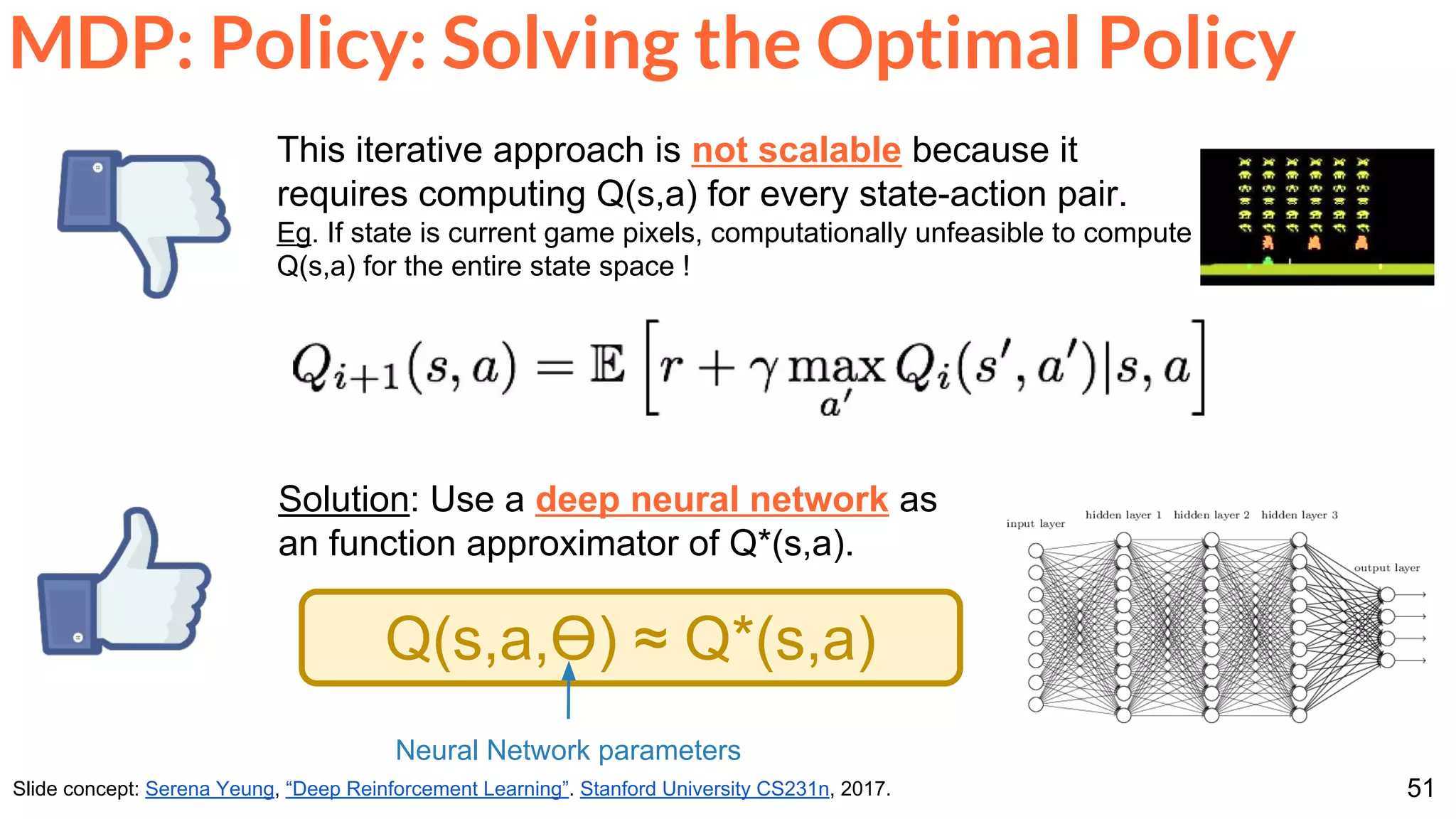

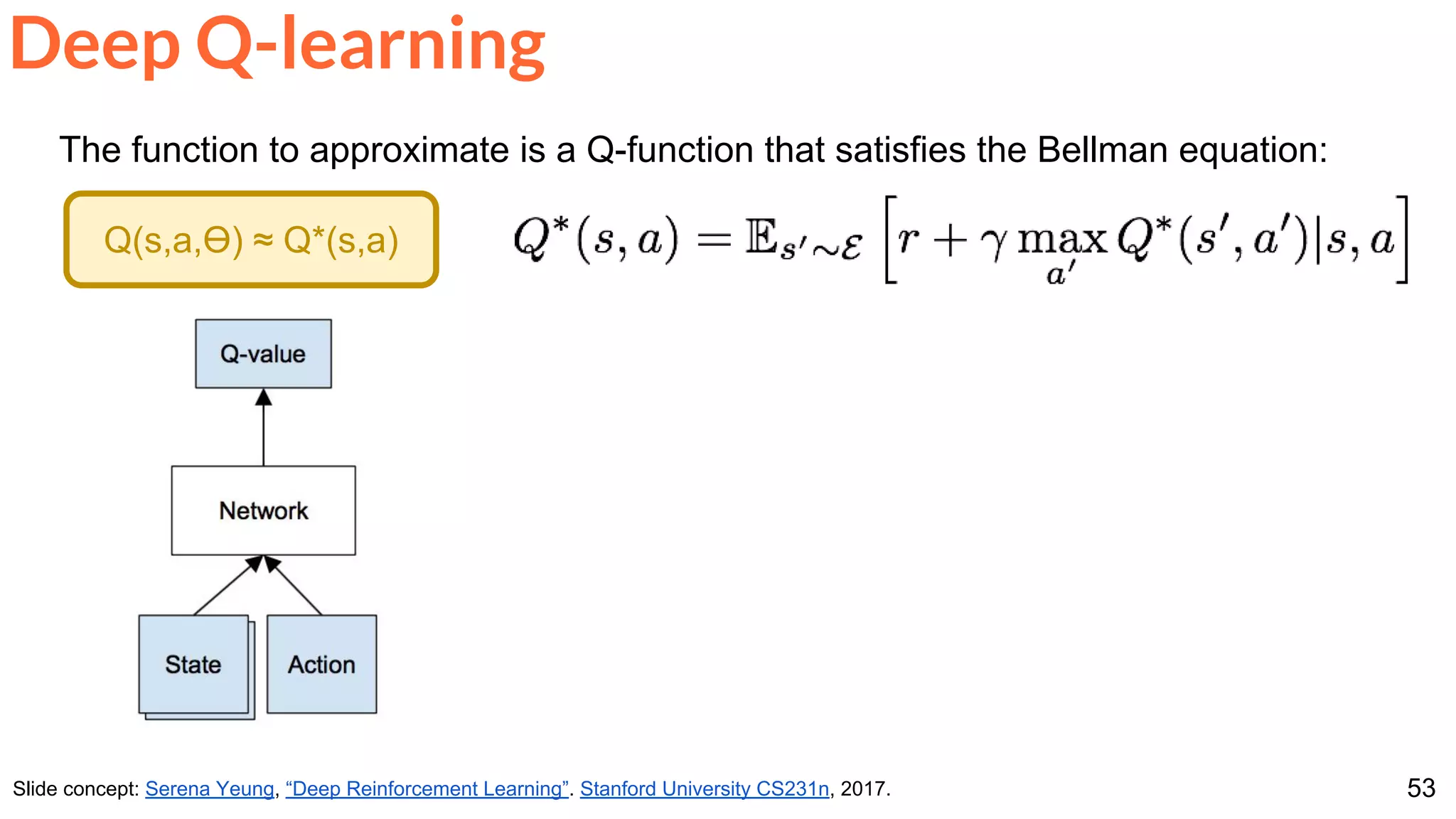

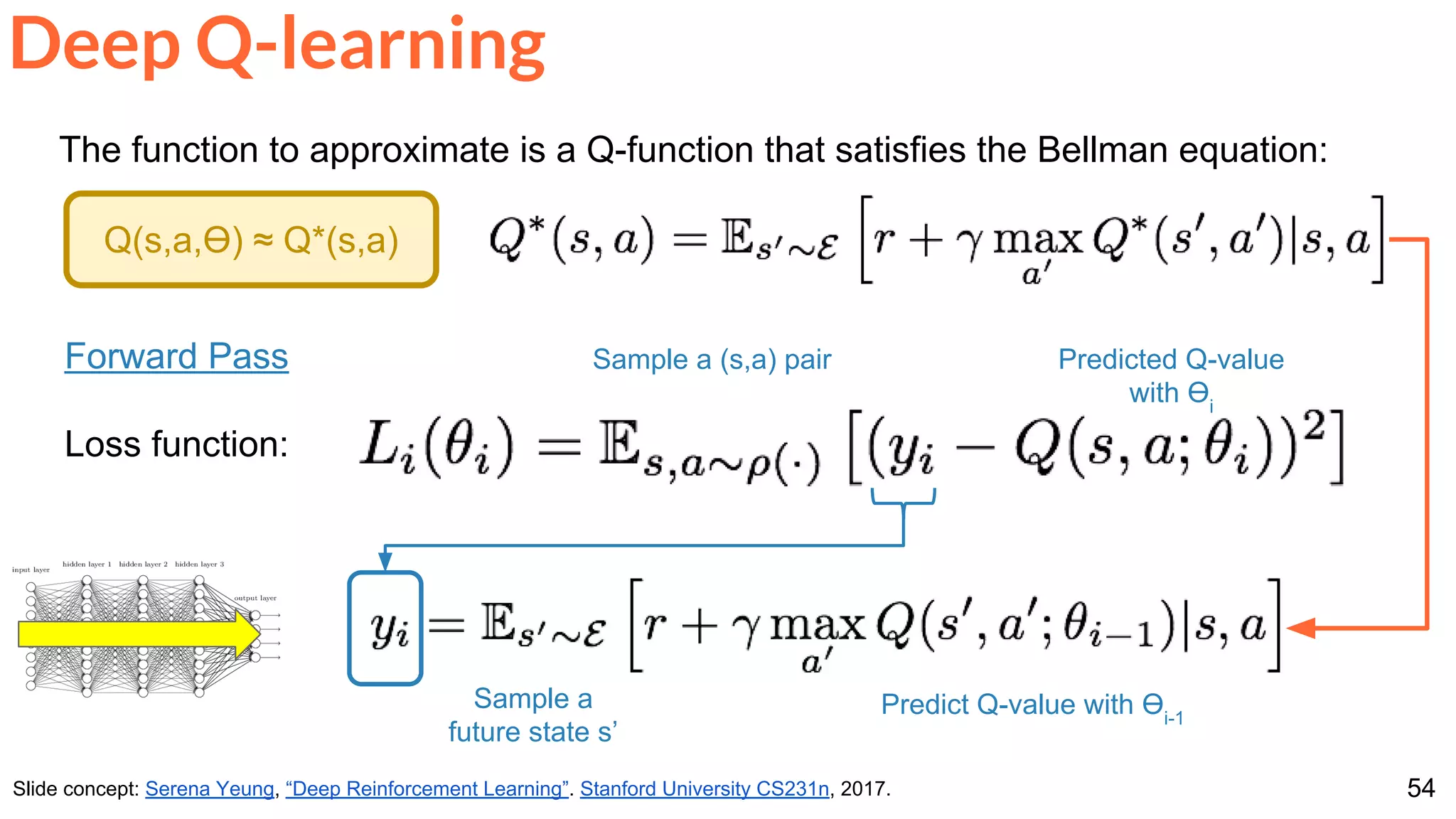

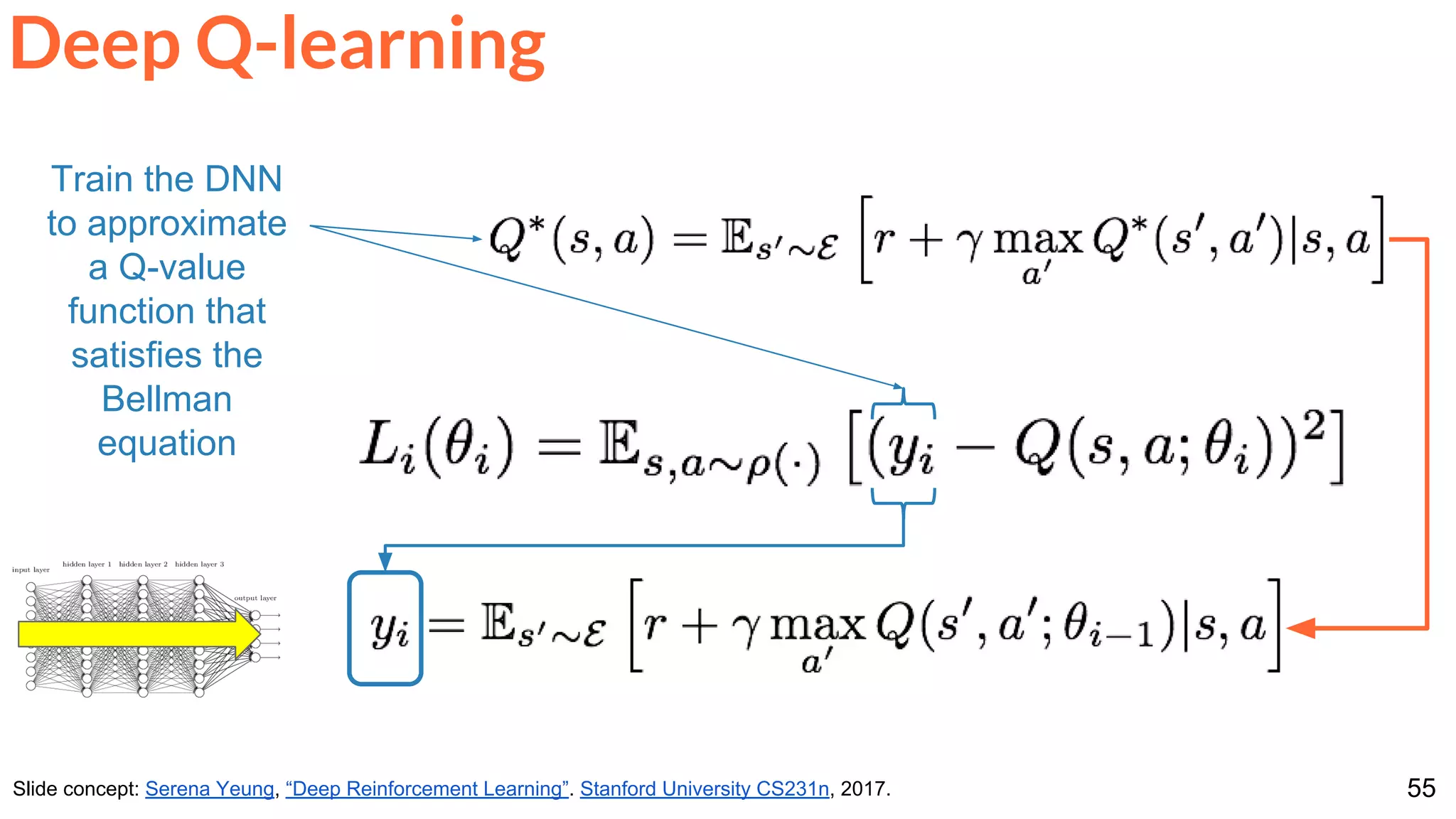

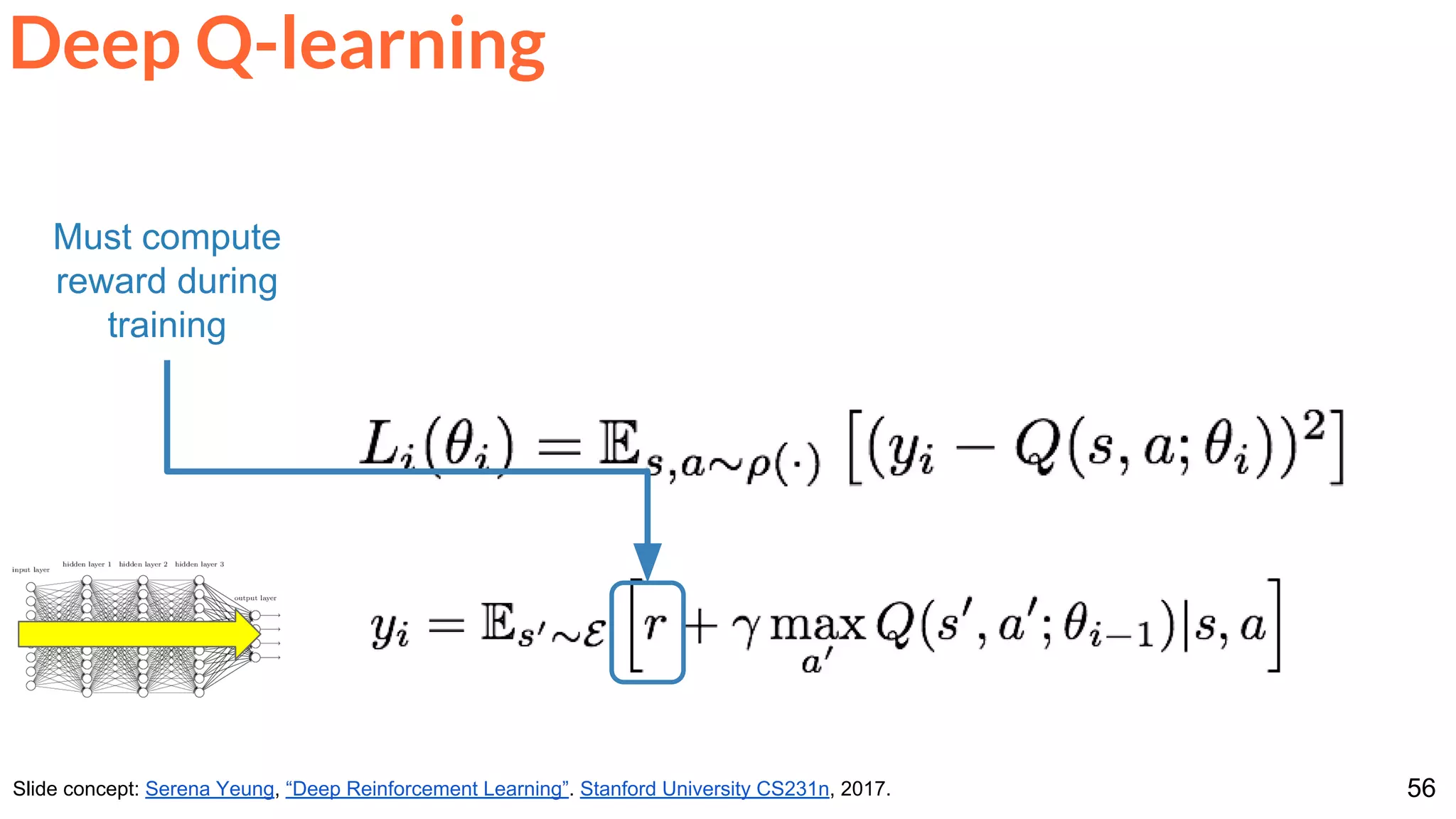

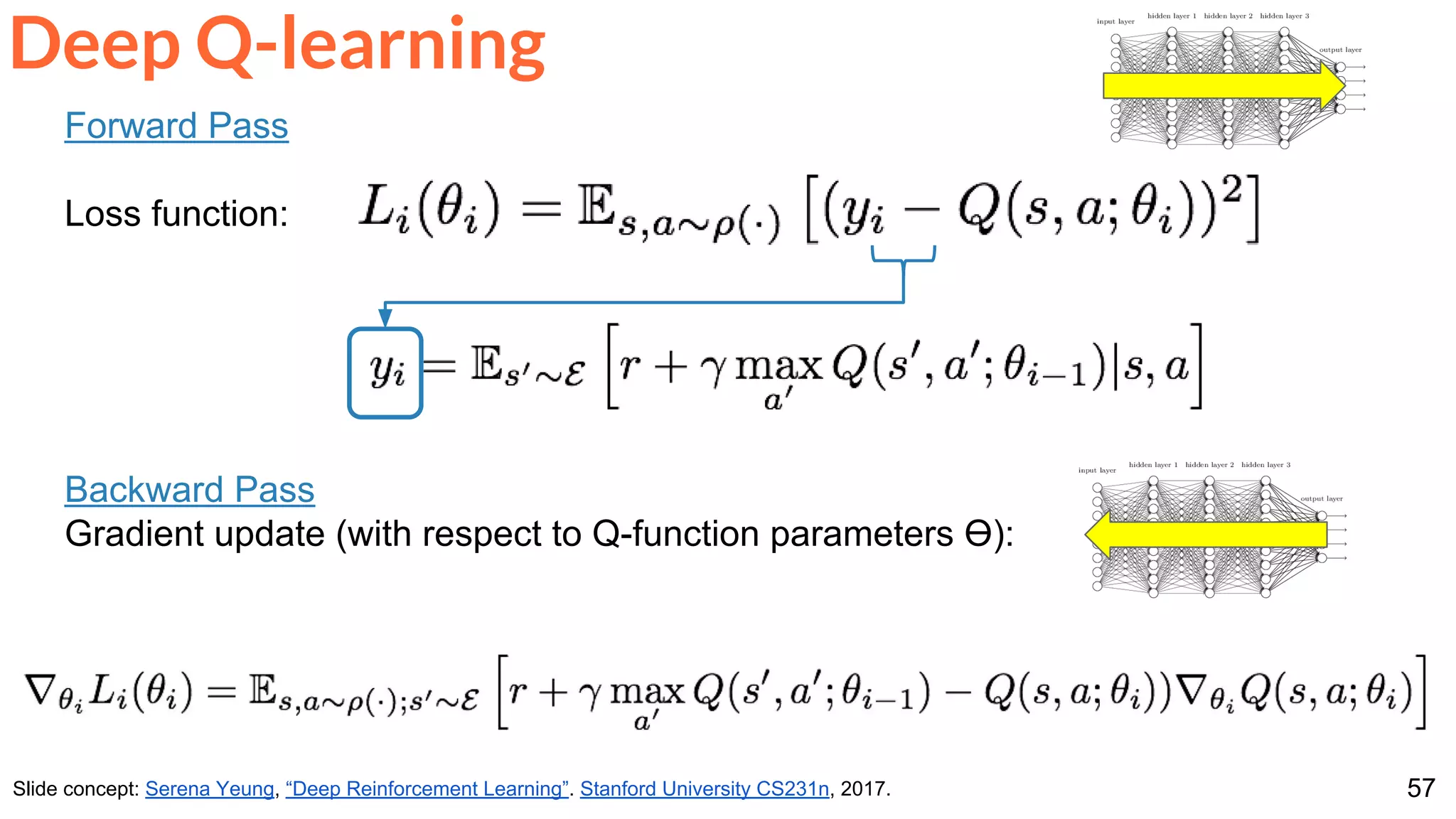

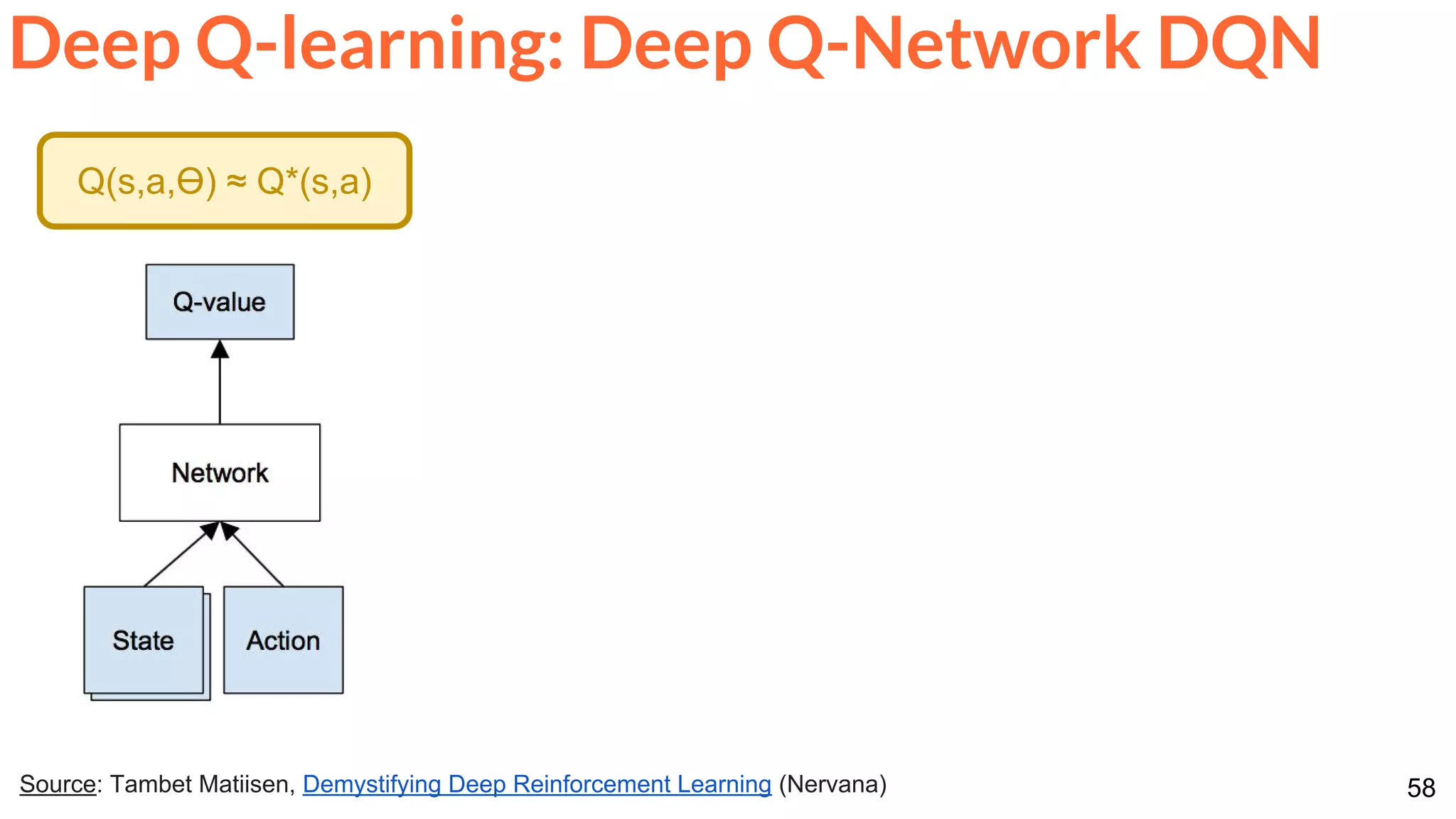

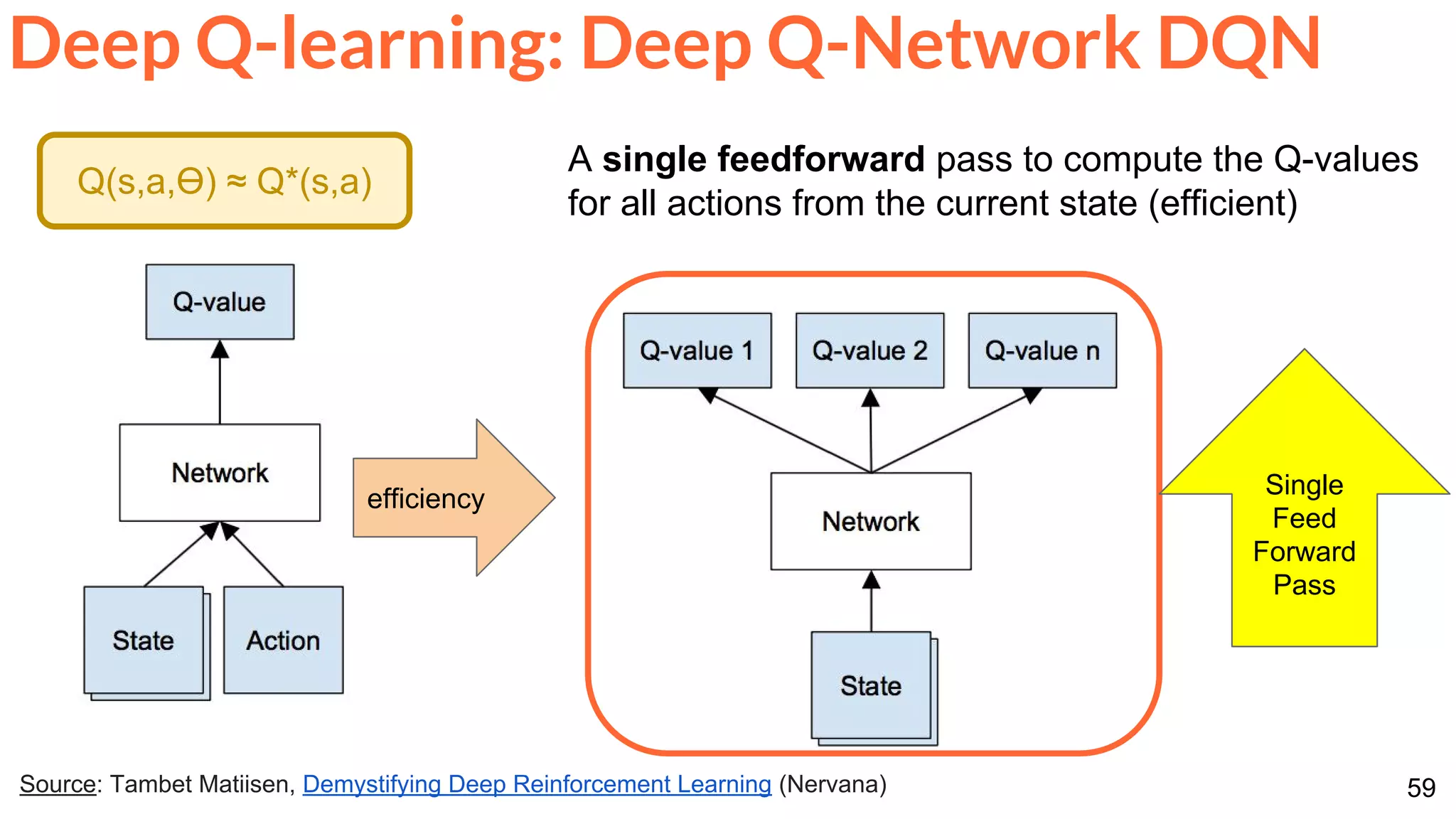

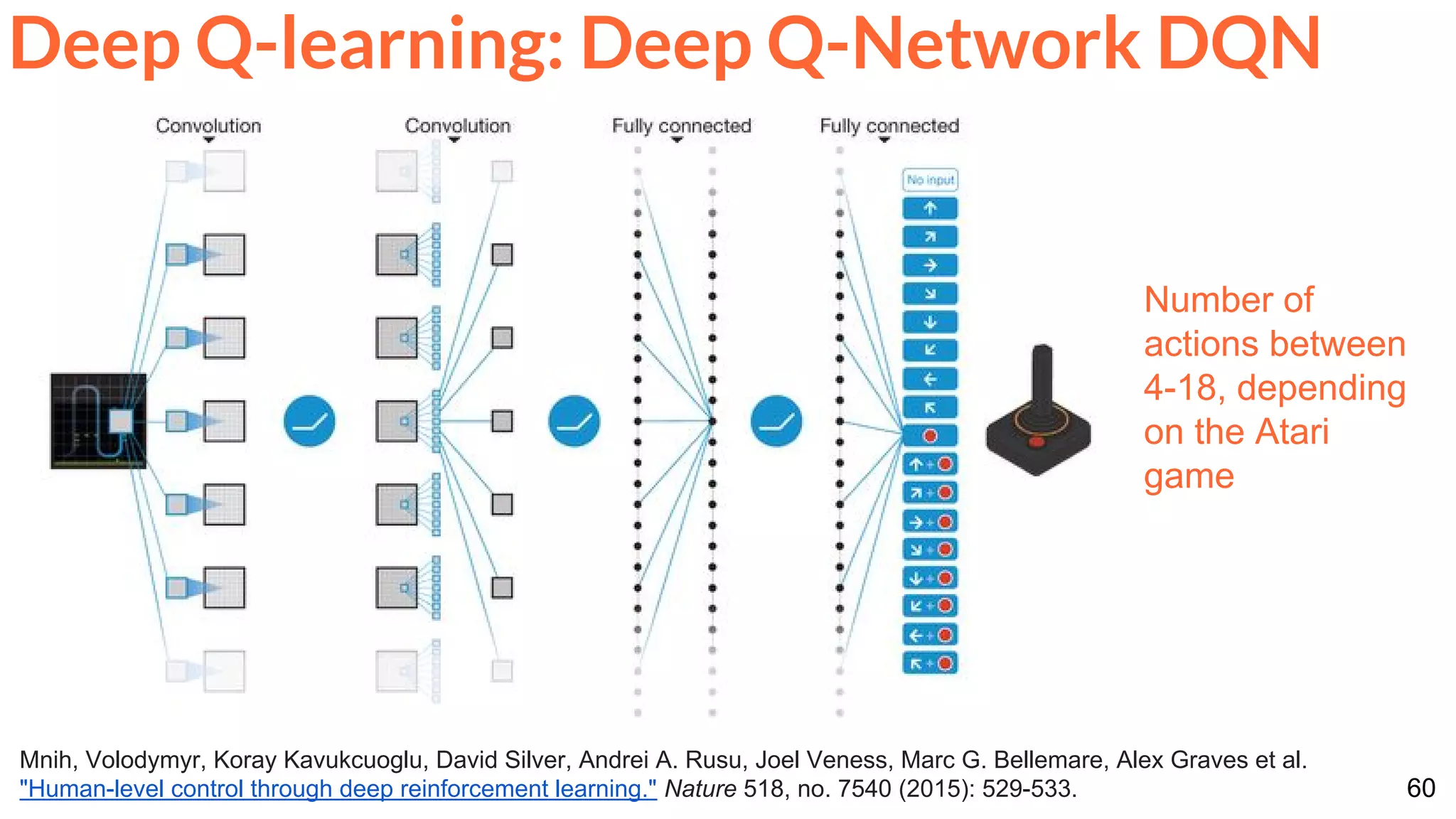

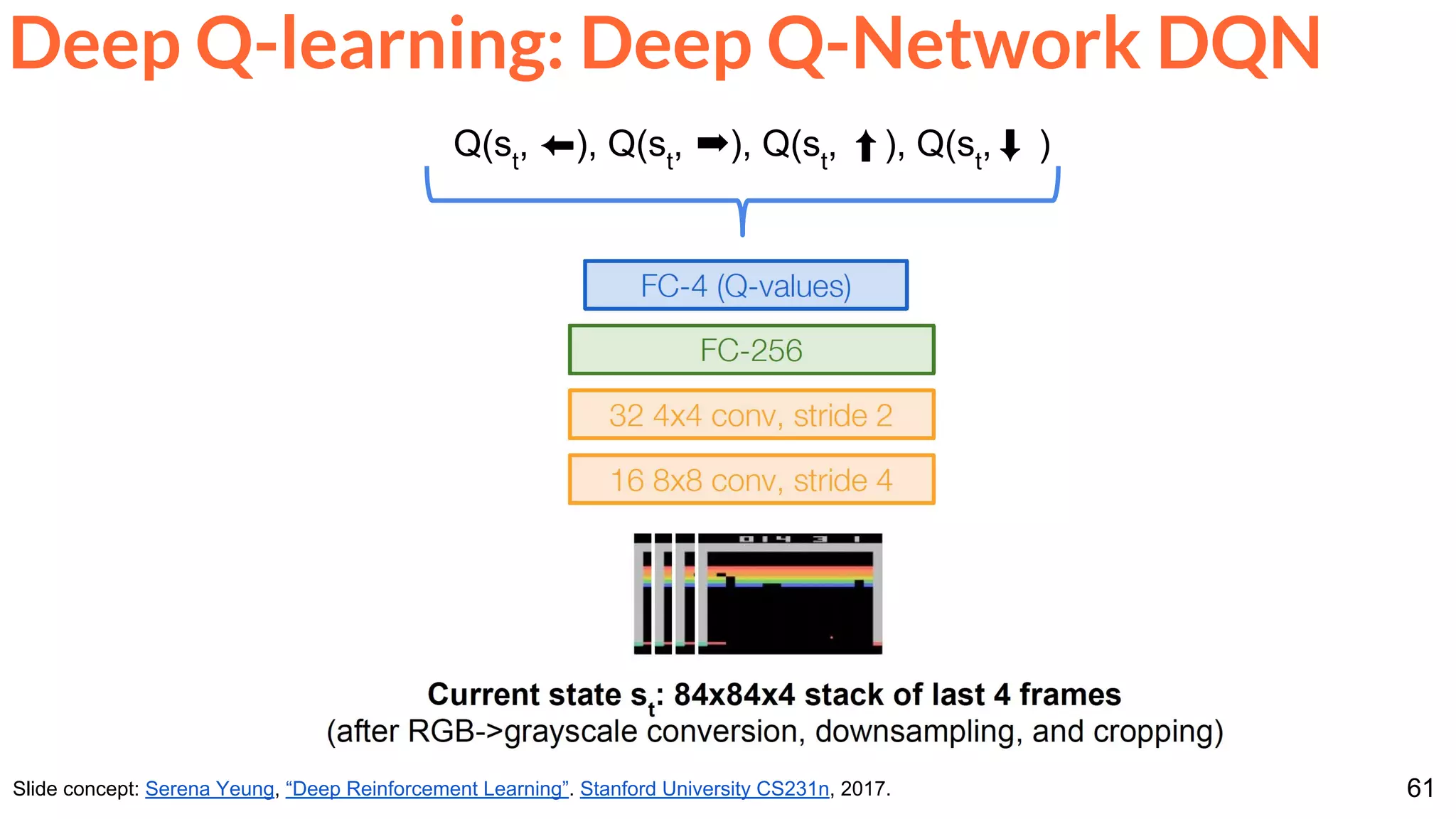

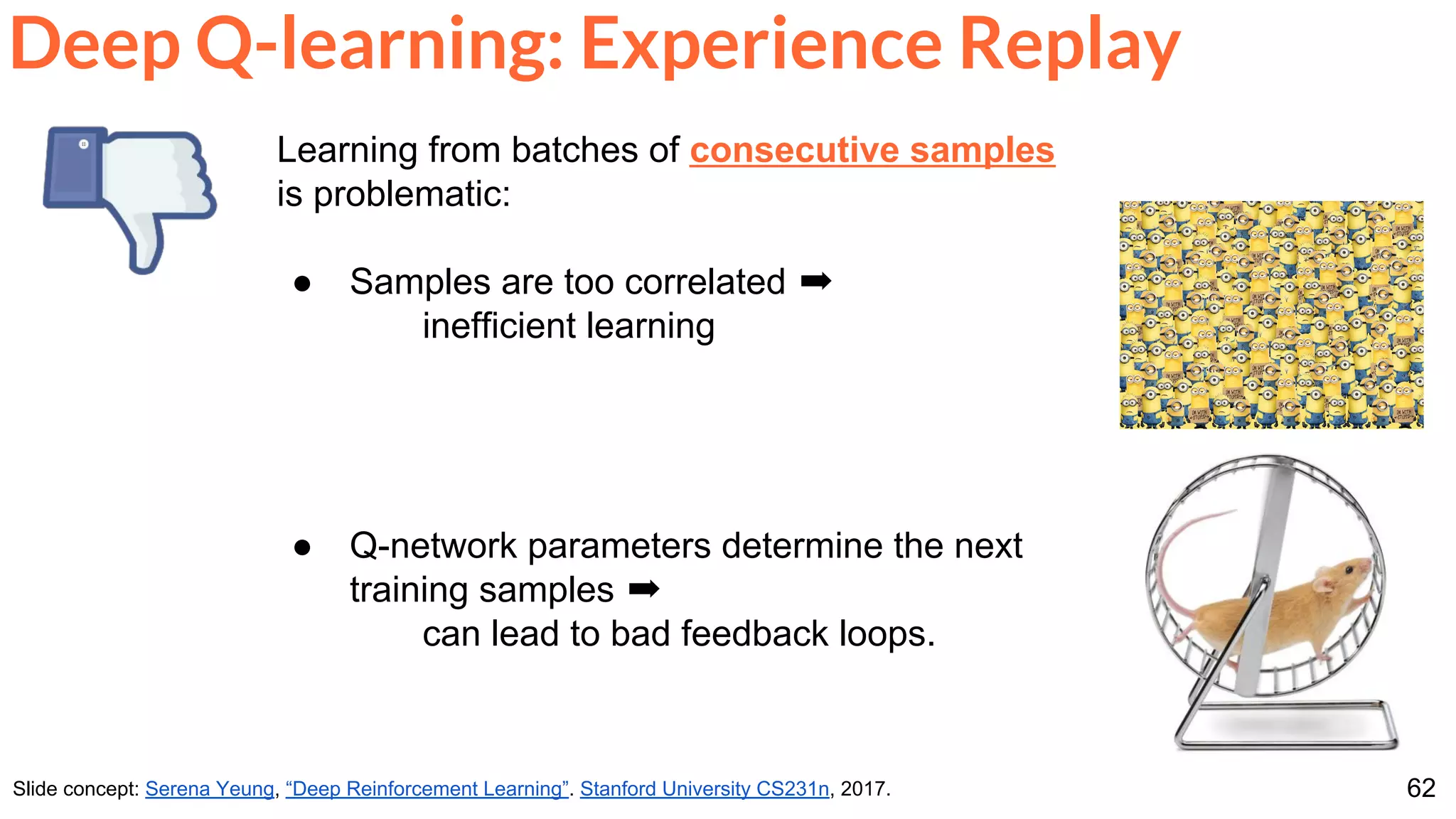

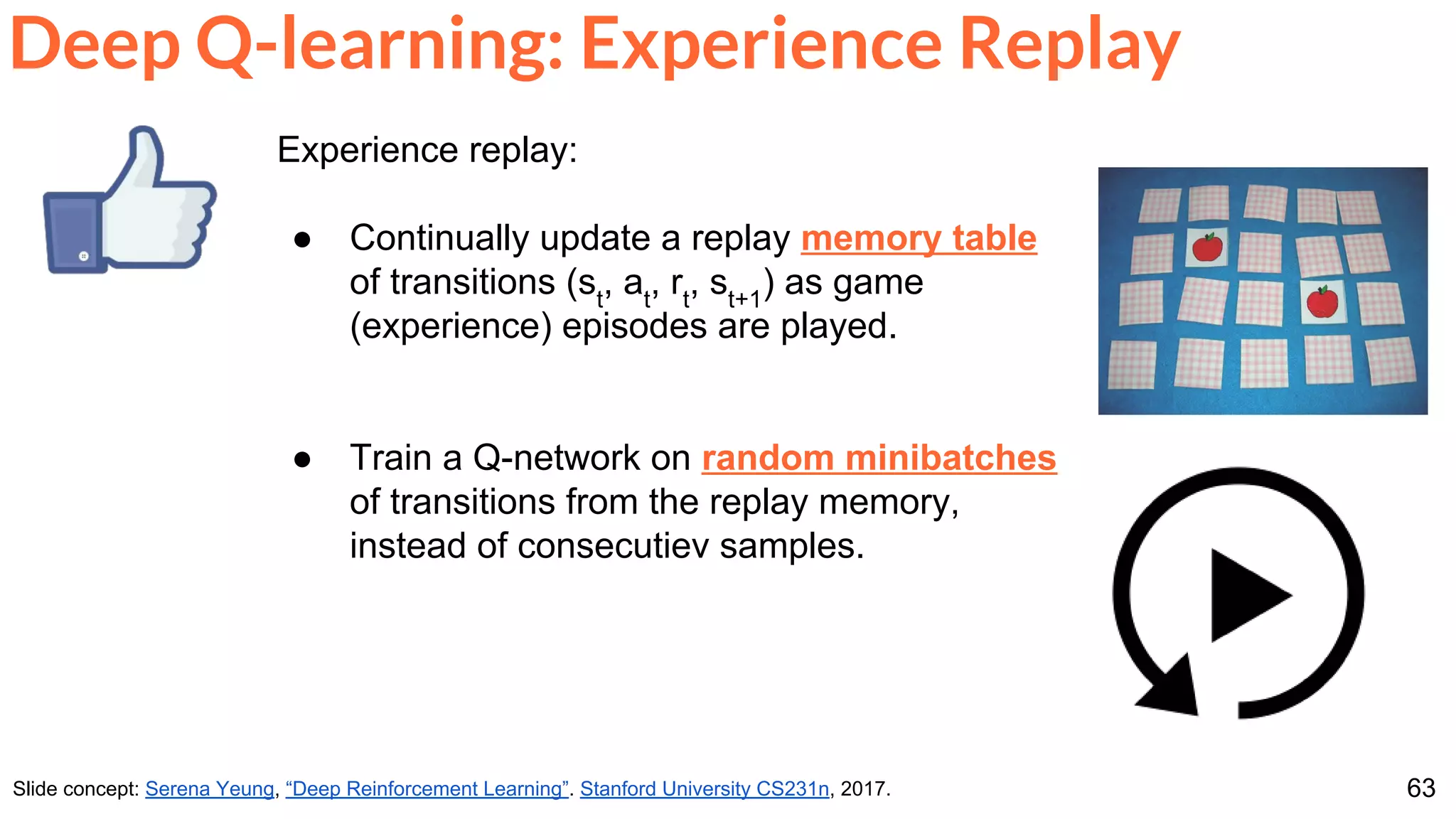

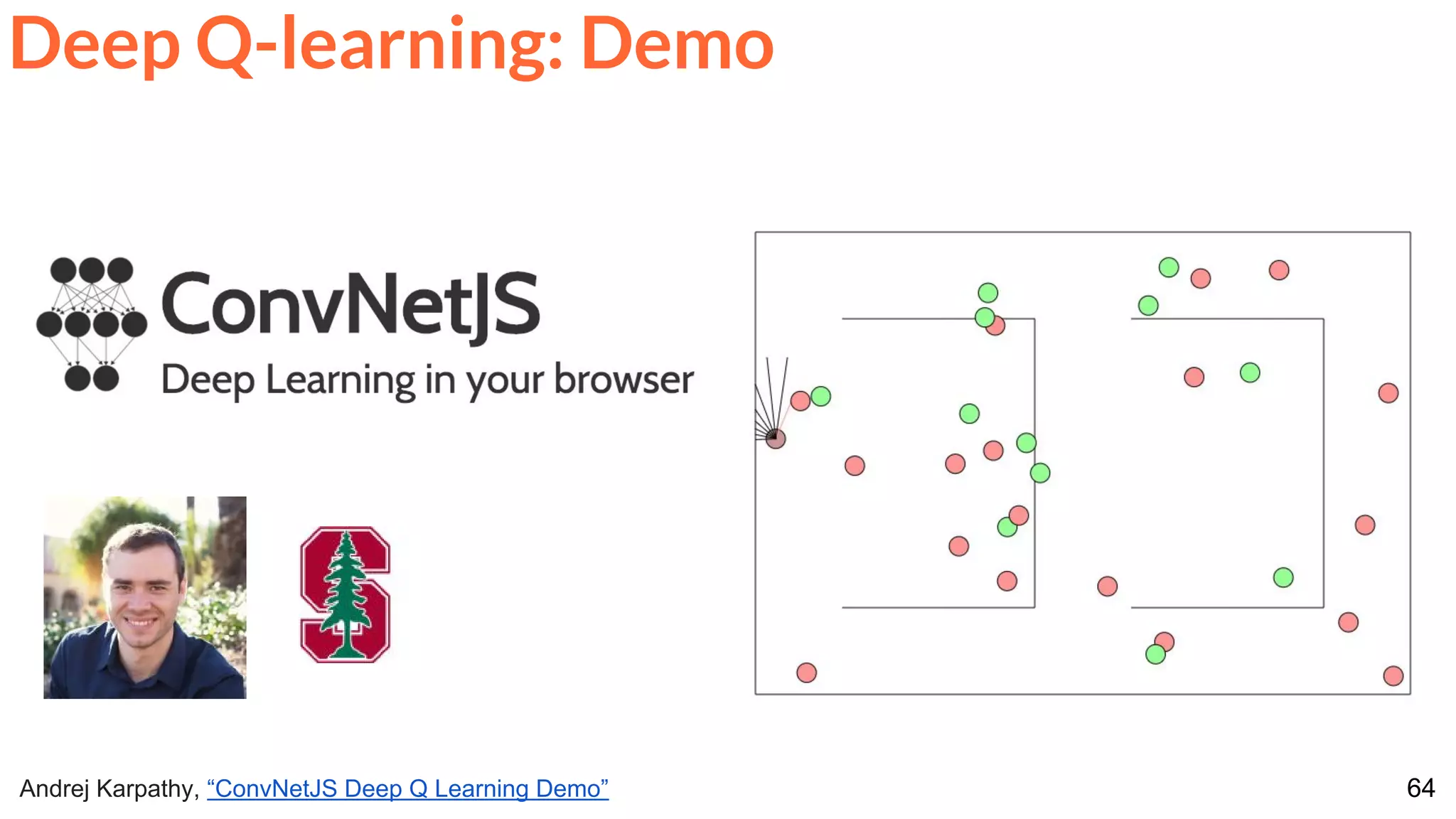

The document is a lecture presentation on reinforcement learning by Xavier Giro-i-Nieto, covering topics such as motivation, architecture, Markov decision processes (MDP), and deep Q-learning techniques. It discusses the categorization of learning procedures, policies, value functions, and the use of deep learning for approximating Q-values. The content also highlights key problems and solutions in reinforcement learning, including experience replay and the application of neural networks.
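The summary lists experience replay as one of the fixes used in deep Q-learning. The idea is to store past transitions and train on random minibatches drawn from them, so that consecutive, highly correlated steps do not dominate each update. Below is a minimal Python sketch of such a memory, assuming a fixed capacity and uniform sampling. The names `Transition`, `ReplayBuffer`, `push` and `sample` are hypothetical and not taken from the lecture.

```python
import random
from collections import deque, namedtuple

# One stored interaction step (s, a, r, s', done); field names are illustrative
Transition = namedtuple("Transition", ["state", "action", "reward", "next_state", "done"])


class ReplayBuffer:
    """Hypothetical fixed-capacity experience replay memory (sketch only)."""

    def __init__(self, capacity, seed=None):
        # A deque with maxlen silently discards the oldest transition once full
        self.memory = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        self.memory.append(Transition(state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement mixes transitions from different
        # moments, breaking the temporal correlation within a minibatch
        return self.rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


if __name__ == "__main__":
    buffer = ReplayBuffer(capacity=3, seed=0)
    for t in range(5):
        buffer.push(state=t, action=0, reward=1.0, next_state=t + 1, done=False)
    print(len(buffer))                                    # 3: only the newest transitions are kept
    print(sorted(tr.state for tr in buffer.sample(3)))    # [2, 3, 4]
```

In a deep Q-learning loop, the agent would `push` every transition it experiences and periodically `sample` a minibatch to compute the Q-value regression targets.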

![Slide 1: Reinforcement Learning, Day 7 Lecture 2](https://image.slidesharecdn.com/dlai2017d7l2reinforcementlearning-171114180748/75/Reinforcement-Learning-DLAI-D7L2-2017-UPC-Deep-Learning-for-Artificial-Intelligence-1-2048.jpg)

Reinforcement Learning, Day 7 Lecture 2 (#DLUPC) [course site]

Xavier Giro-i-Nieto (xavier.giro@upc.edu), Associate Professor, Universitat Politecnica de Catalunya (Technical University of Catalonia)

![6

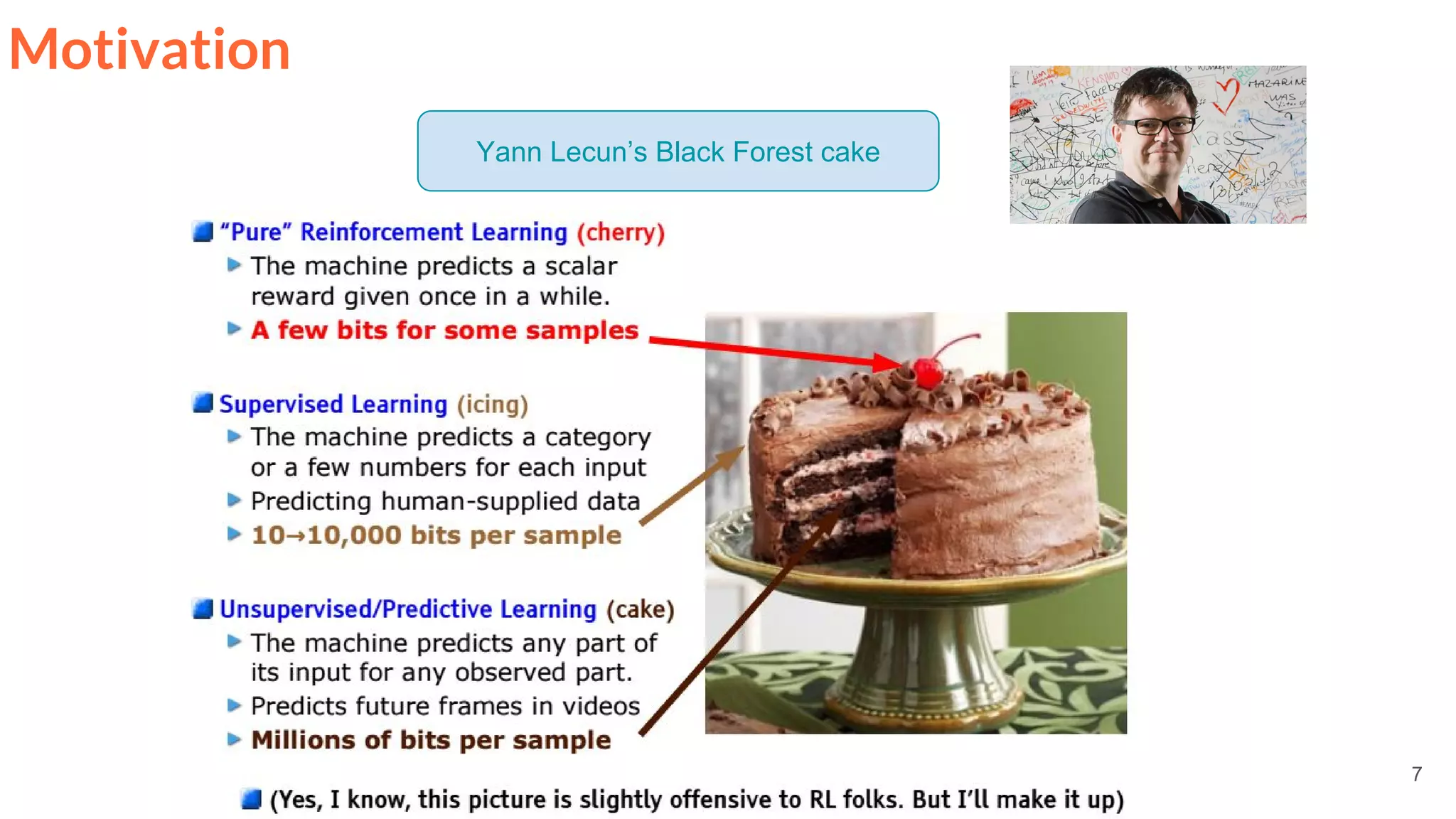

Motivation

What is Reinforcement Learning ?

“a way of programming agents by reward and punishment without needing to

specify how the task is to be achieved”

[Kaelbling, Littman, & Moore, 96]

Kaelbling, Leslie Pack, Michael L. Littman, and Andrew W. Moore. "Reinforcement learning: A survey." Journal of artificial

intelligence research 4 (1996): 237-285.](https://image.slidesharecdn.com/dlai2017d7l2reinforcementlearning-171114180748/75/Reinforcement-Learning-DLAI-D7L2-2017-UPC-Deep-Learning-for-Artificial-Intelligence-6-2048.jpg)
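The quoted definition can be made concrete with tabular Q-learning, a standard reward-driven method (the summary lists Q-learning among the lecture's topics, but this particular code is not from the slides). The sketch assumes a hypothetical five-cell corridor: the environment only hands out a reward at the goal and a small punishment for every other step, and the agent is never told which way to go. The learning rate `alpha`, discount `gamma` and exploration rate `epsilon` are illustrative choices.

```python
import random

# Hypothetical toy task: a corridor of N_STATES cells; the agent starts in cell 0
# and the episode ends when it reaches the last cell.
N_STATES = 5
ACTIONS = (-1, +1)  # move left, move right


def step(state, action):
    """Return (next_state, reward, done). Only rewards are specified, not the route."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True    # reward for reaching the goal
    return next_state, -0.01, False     # small punishment for every other step


def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # q[s][i] estimates the discounted return of taking ACTIONS[i] in state s
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy action selection, ties broken at random
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning target r + gamma * max_a' Q(s', a'); no bootstrap at the goal
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


if __name__ == "__main__":
    q = q_learning()
    greedy = ["right" if q[s][1] > q[s][0] else "left" for s in range(N_STATES - 1)]
    print(greedy)  # ['right', 'right', 'right', 'right']
```

The "how" (always move right) is never written into the agent; it emerges from the reward and punishment signal alone, which is exactly the point of the quoted definition.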

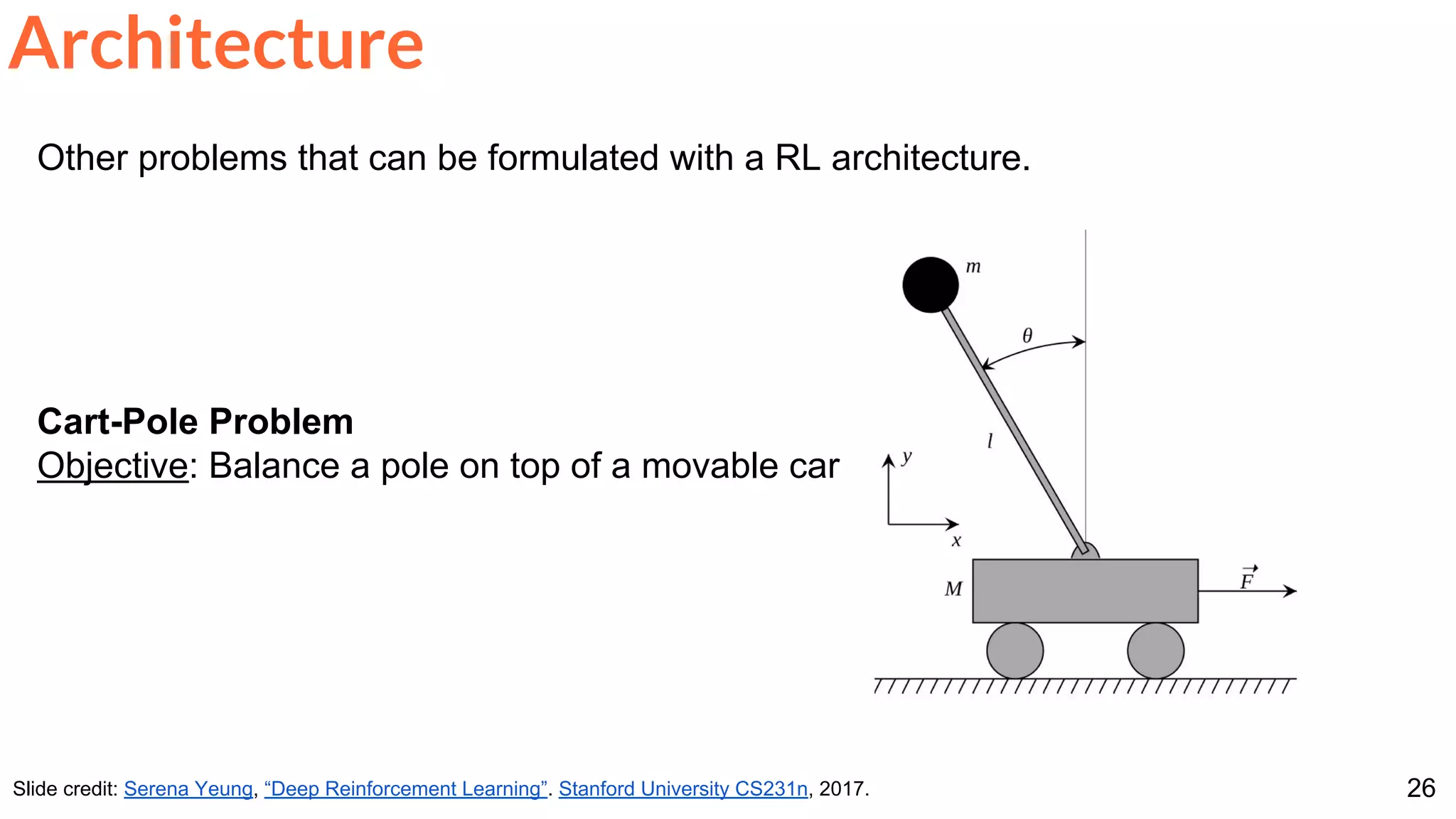

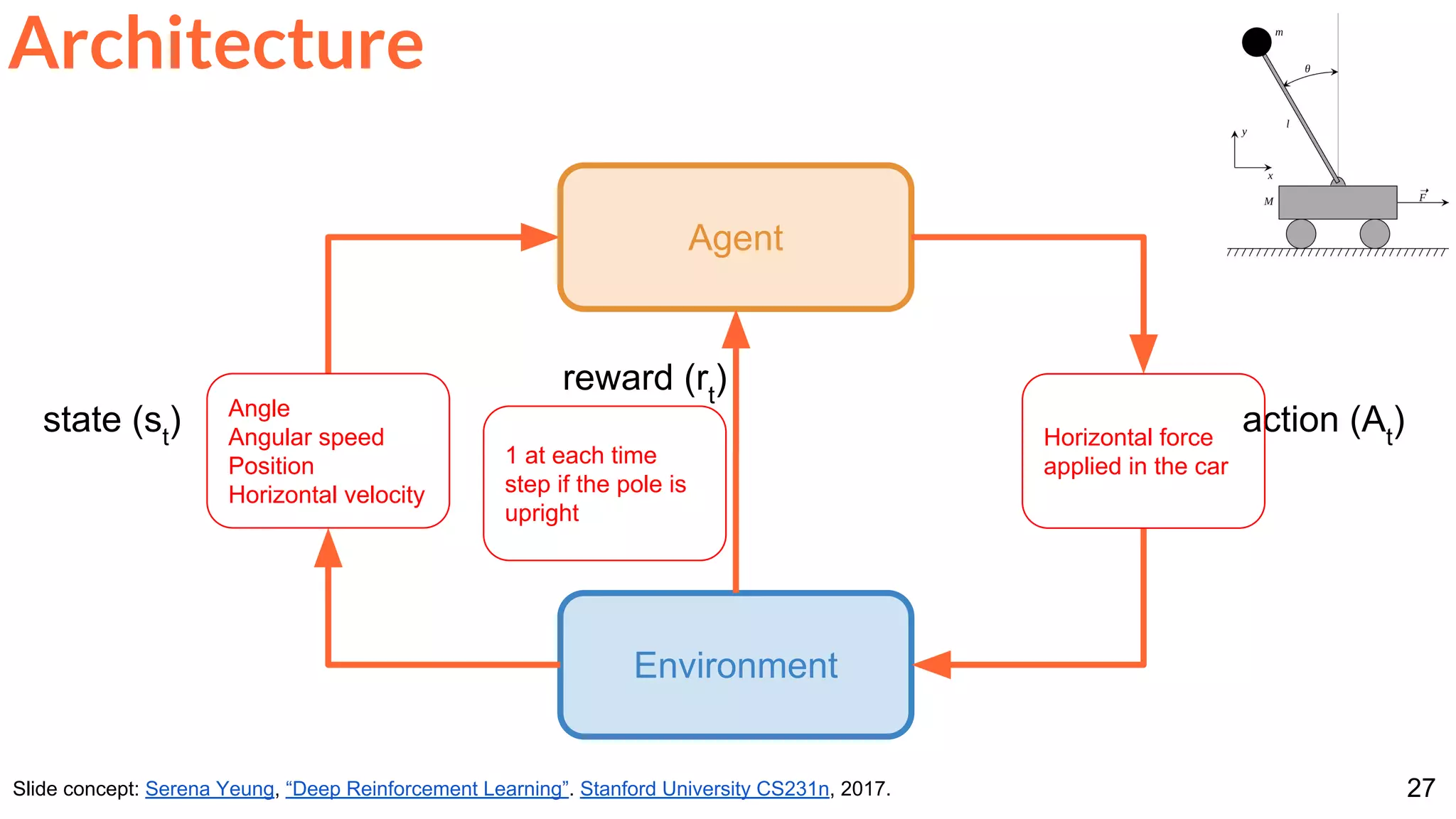

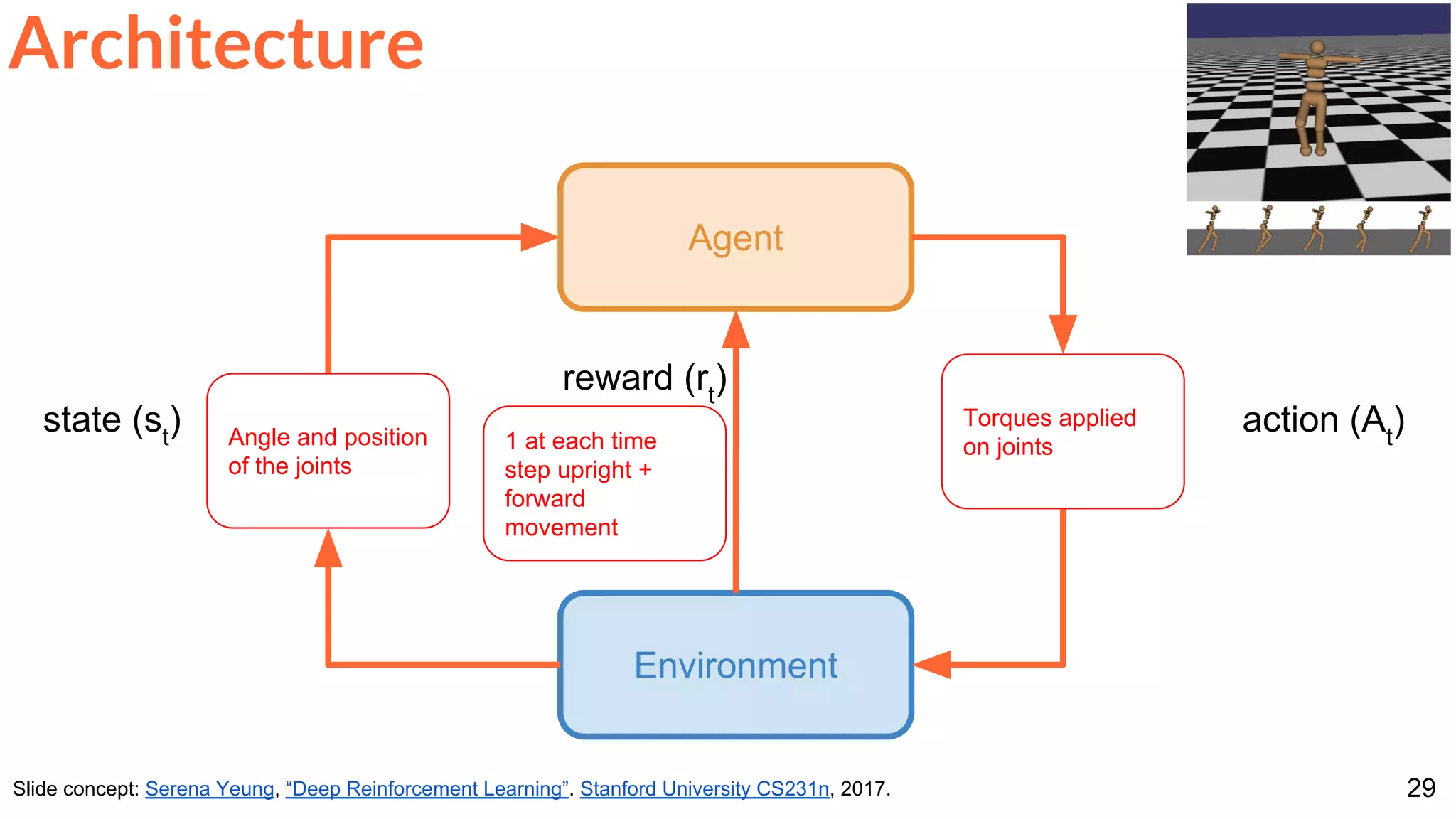

![Slide 28: Robot Locomotion](https://image.slidesharecdn.com/dlai2017d7l2reinforcementlearning-171114180748/75/Reinforcement-Learning-DLAI-D7L2-2017-UPC-Deep-Learning-for-Artificial-Intelligence-28-2048.jpg)

Other problems that can be formulated with an RL architecture.

Robot Locomotion

Objective: Make the robot move forward.

Architecture

Schulman, John, Philipp Moritz, Sergey Levine, Michael Jordan, and Pieter Abbeel. "High-dimensional continuous control using generalized advantage estimation." ICLR 2016 [project page]
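The slide casts robot locomotion in the same agent/environment architecture: the agent observes a state, applies an action, and receives a reward. A minimal Python sketch of that loop follows, assuming a hypothetical one-dimensional `ToyWalker` environment. Its physics, the `reset`/`step` method names and the reward definition (forward displacement per step) are illustrative assumptions, not the simulator or reward used by Schulman et al.

```python
import random


class ToyWalker:
    """Hypothetical 1-D stand-in for a locomotion task (not the setup in the cited paper).

    State: (position, velocity). Action: a force clipped to [-1, 1].
    Reward: forward displacement during the step, so the objective
    "move forward" is expressed only through the reward signal.
    """

    def __init__(self, dt=0.1, friction=0.5, horizon=100):
        self.dt, self.friction, self.horizon = dt, friction, horizon
        self.reset()

    def reset(self):
        self.pos, self.vel, self.t = 0.0, 0.0, 0
        return (self.pos, self.vel)

    def step(self, action):
        force = max(-1.0, min(1.0, action))
        self.vel += (force - self.friction * self.vel) * self.dt
        old_pos = self.pos
        self.pos += self.vel * self.dt
        self.t += 1
        reward = self.pos - old_pos          # forward progress during this step
        done = self.t >= self.horizon
        return (self.pos, self.vel), reward, done


def rollout(env, policy):
    """Run one episode of the agent-environment loop and return the total reward."""
    state, done, total = env.reset(), False, 0.0
    while not done:
        action = policy(state)                    # agent observes the state and acts
        state, reward, done = env.step(action)    # environment returns next state and reward
        total += reward
    return total


if __name__ == "__main__":
    rng = random.Random(0)
    env = ToyWalker()
    print(rollout(env, lambda s: 1.0))                      # always push forward
    print(rollout(env, lambda s: rng.uniform(-1.0, 1.0)))   # random policy
```

Because the per-step rewards telescope, an episode's return equals the final position; a learning algorithm would adjust `policy` to make that return as large as possible.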

![Slide 30](https://image.slidesharecdn.com/dlai2017d7l2reinforcementlearning-171114180748/75/Reinforcement-Learning-DLAI-D7L2-2017-UPC-Deep-Learning-for-Artificial-Intelligence-30-2048.jpg)

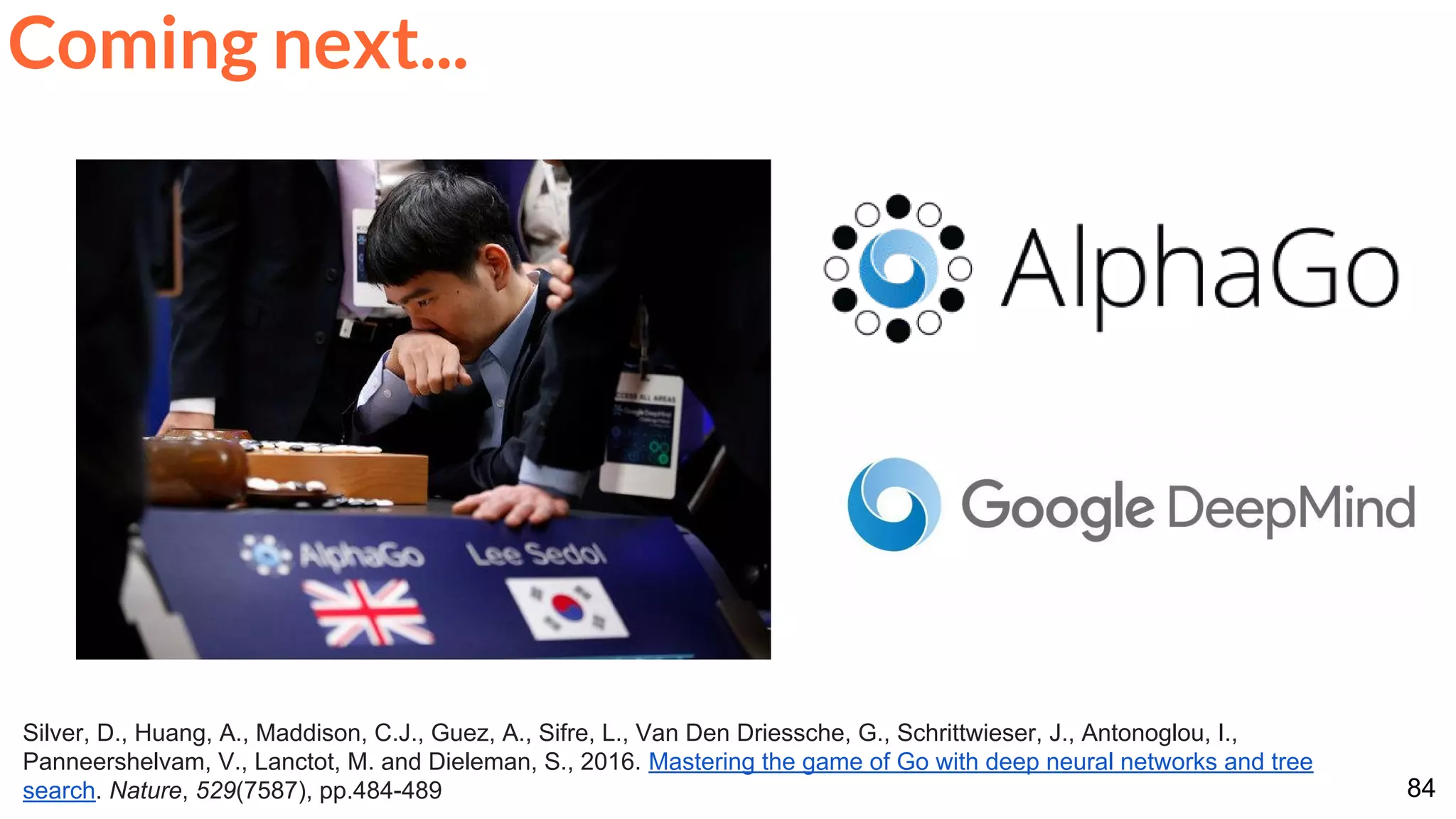

![Slide 86: StarCraft II](https://image.slidesharecdn.com/dlai2017d7l2reinforcementlearning-171114180748/75/Reinforcement-Learning-DLAI-D7L2-2017-UPC-Deep-Learning-for-Artificial-Intelligence-86-2048.jpg)

Vinyals, O., Ewalds, T., Bartunov, S., Georgiev, P., Vezhnevets, A.S., Yeo, M., Makhzani, A., Küttler, H., Agapiou, J., Schrittwieser, J. and Quan, J., 2017. StarCraft II: A new challenge for reinforcement learning. arXiv preprint arXiv:1708.04782. [Press release]

Coming next...

![Slide 87](https://image.slidesharecdn.com/dlai2017d7l2reinforcementlearning-171114180748/75/Reinforcement-Learning-DLAI-D7L2-2017-UPC-Deep-Learning-for-Artificial-Intelligence-87-2048.jpg)