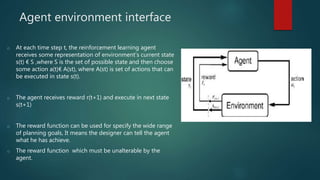

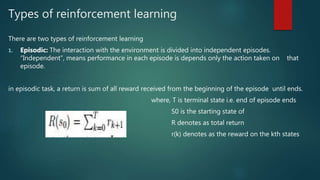

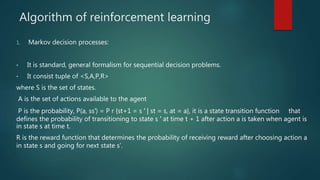

This document summarizes an efficient use of temporal difference techniques in computer game learning. It discusses reinforcement learning and some key concepts including the agent-environment interface, types of reinforcement learning tasks, elements of reinforcement learning like policy, reward functions, and value functions. It also describes algorithms like dynamic programming, policy iteration, value iteration, and temporal difference learning. Finally, it mentions some applications of reinforcement learning in benchmark problems, games, and real-world domains like robotics and control.

![References

• R. S. Sutton. Reinforcement learning: past, present and future [online]. Available from http:

//www-anw. cs. umass. edu/ rich/Talks/SEAL98/SEAL98. html [accessed on December 2005]. 1999.

• R. S. Sutton and A. G. Barto. Reinforcement learning. an introduction. Cambridge, MA: The MIT

Press, 1998.

• M. L. Puterman. Markov decision processes-discrete stochastic dynamic programming. John

Wiley and sons, Inc, New York, NY, 1994.](https://image.slidesharecdn.com/minorproject-anefficientuseoftemporaldifferencetechniquein-170614165712/85/An-efficient-use-of-temporal-difference-technique-in-Computer-Game-Learning-17-320.jpg)