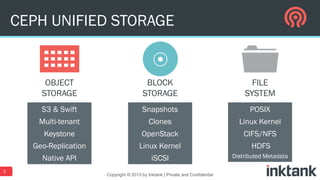

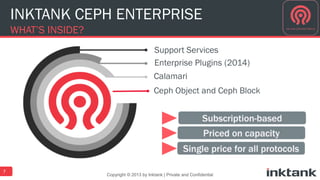

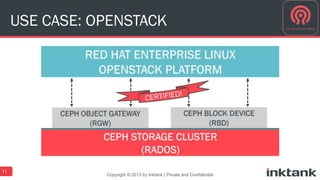

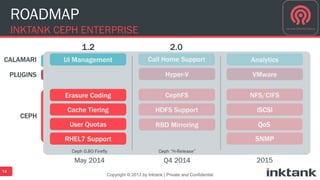

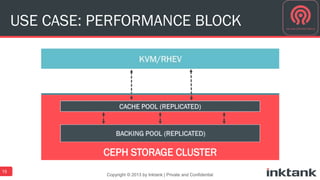

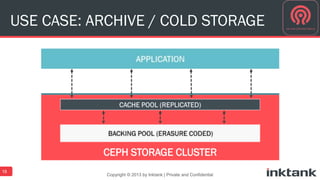

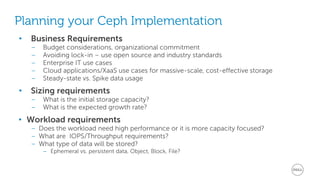

The document covers software-defined storage, focusing on Ceph, a unified storage system that provides file, block, and object storage. It outlines Ceph's architecture, use cases, and strategies for deploying it in environments such as OpenStack and cloud applications. It also highlights the partnership between Dell, Red Hat, and Inktank to deliver enterprise-grade storage solutions backed by extensive support and training services.