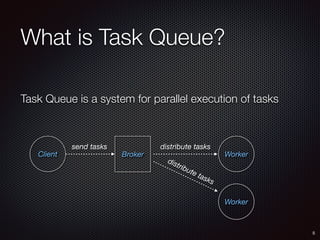

This document provides an overview of Celery, an open-source distributed task queue written in Python. It describes what Celery is used for and its core architecture: brokers, tasks, workers, and monitoring. It also covers key concepts such as signatures and task workflows built from groups, chains, chords, maps, and more. It explains how to define and call tasks and how to configure workers with options such as autoreloading, autoscaling, rate limits, and user components. Monitoring is covered as well, with a focus on the Flower web monitor for task progress and statistics.

![Calling Tasks

apply_async(args[, kwargs[, …]])

delay(*args, **kwargs)

calling(__call__)

e.g.:

• result = add.delay(1, 2)

• result = add.apply_async((1, 2), countdown=10)](https://image.slidesharecdn.com/0e1f4554-15a0-49ec-8231-1de0e2b3512a-150720081941-lva1-app6892/85/Celery-A-Distributed-Task-Queue-10-320.jpg)
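
The relationship between the three calling styles on this slide can be sketched in plain Python. This is a toy model, not Celery's implementation: `SketchTask` and its `sent` list are hypothetical names, and the "message" is simply recorded in memory instead of being published to a broker.

```python
# Toy stand-in for a Celery task; SketchTask and its fields are hypothetical.
class SketchTask:
    def __init__(self, func):
        self.func = func
        self.sent = []  # messages a real task would publish to the broker

    def __call__(self, *args, **kwargs):
        # Direct call: runs in the current process, no message is sent.
        return self.func(*args, **kwargs)

    def apply_async(self, args=(), kwargs=None, **options):
        # Full form: positional args as a tuple, keyword args as a dict,
        # plus execution options such as countdown.
        message = {"args": tuple(args), "kwargs": kwargs or {}, "options": options}
        self.sent.append(message)
        return message

    def delay(self, *args, **kwargs):
        # Shortcut: star-arguments only, no execution options.
        return self.apply_async(args, kwargs)


add = SketchTask(lambda x, y: x + y)

direct = add(1, 2)                               # runs locally
queued = add.delay(1, 2)                         # same as add.apply_async((1, 2))
delayed = add.apply_async((1, 2), countdown=10)  # option: wait at least 10 seconds
```

In this model `delay` cannot carry execution options, which is why the slide switches to `apply_async` as soon as `countdown` is needed.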

![Group

A signature that takes a list of tasks to be applied in parallel

s = group(add.s(i, i) for i in range(5))

s().get() => [0, 2, 4, 6, 8]](https://image.slidesharecdn.com/0e1f4554-15a0-49ec-8231-1de0e2b3512a-150720081941-lva1-app6892/85/Celery-A-Distributed-Task-Queue-19-320.jpg)
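
As a rough analogy for what a group does, the sketch below runs the same five additions concurrently and collects the results in submission order. It assumes a local thread pool as a stand-in for Celery workers; `run_group` is a hypothetical helper, not part of Celery.

```python
from concurrent.futures import ThreadPoolExecutor


def add(x, y):
    return x + y


def run_group(calls):
    """Run (func, args) pairs concurrently; return results in submission order."""
    with ThreadPoolExecutor() as pool:
        futures = [pool.submit(func, *args) for func, args in calls]
        return [future.result() for future in futures]


results = run_group((add, (i, i)) for i in range(5))
print(results)  # [0, 2, 4, 6, 8]
```

The result order follows the order in which the calls were listed, not the order in which they finished, which matches the output shown on the slide.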

![Map

Like the built-in map function

c = task.map([1, 2, 3])

c() => [task(1), task(2), task(3)]](https://image.slidesharecdn.com/0e1f4554-15a0-49ec-8231-1de0e2b3512a-150720081941-lva1-app6892/85/Celery-A-Distributed-Task-Queue-22-320.jpg)

![Starmap

Same as map, except the args are applied as *args

c = add.starmap([(1, 2), (3, 4)])

c() => [add(1, 2), add(3, 4)]](https://image.slidesharecdn.com/0e1f4554-15a0-49ec-8231-1de0e2b3512a-150720081941-lva1-app6892/85/Celery-A-Distributed-Task-Queue-23-320.jpg)
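
The difference between the Map and Starmap slides mirrors the difference between Python's built-in `map` and `itertools.starmap`. The sketch below uses only the standard library; `double` is a hypothetical one-argument function standing in for the slide's unnamed `task`.

```python
from itertools import starmap


def double(x):
    return 2 * x


def add(x, y):
    return x + y


# map: each item is passed as the single argument.
mapped = list(map(double, [1, 2, 3]))              # [double(1), double(2), double(3)]

# starmap: each item is a tuple that is unpacked as *args.
starmapped = list(starmap(add, [(1, 2), (3, 4)]))  # [add(1, 2), add(3, 4)]

print(mapped, starmapped)
```

Passing the tuples to plain `map` would call `add((1, 2))` with one argument and fail, which is why a two-argument task such as `add` needs the starmap form.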

![Chunks

Chunking splits a long list of args into parts

items = zip(range(10), range(10))

c = add.chunks(items, 5)

c().get() => [[0, 2, 4, 6, 8], [10, 12, 14, 16, 18]]](https://image.slidesharecdn.com/0e1f4554-15a0-49ec-8231-1de0e2b3512a-150720081941-lva1-app6892/85/Celery-A-Distributed-Task-Queue-24-320.jpg)
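
The chunking arithmetic can be reproduced in plain Python to see where the two result lists come from. This is a minimal sketch under the assumption that each chunk is processed as one unit, starmap-style; `chunked_starmap` is a hypothetical helper and everything here runs locally, with no broker or workers involved.

```python
from itertools import islice, starmap


def add(x, y):
    return x + y


def chunked_starmap(func, items, n):
    """Split items into chunks of at most n and apply func(*item) within each chunk."""
    iterator = iter(items)
    results = []
    while True:
        chunk = list(islice(iterator, n))
        if not chunk:
            break
        results.append(list(starmap(func, chunk)))
    return results


items = zip(range(10), range(10))
print(chunked_starmap(add, items, 5))
# [[0, 2, 4, 6, 8], [10, 12, 14, 16, 18]]
```

Ten argument pairs with a chunk size of 5 give two chunks, so the work is handed out as two units instead of ten individual calls.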