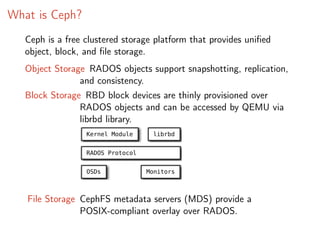

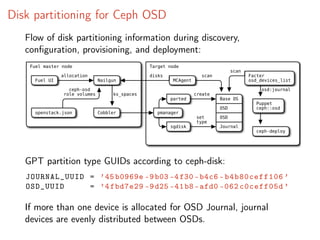

This document gives an overview of integrating Ceph, a clustered storage platform, with Mirantis OpenStack, describing both how Ceph works and how it is deployed. It covers the storage types Ceph provides, the role of Fuel in deploying Ceph within OpenStack, and the configuration required for VM migration. It also addresses the challenges encountered and the resources available for improving the integration of the two technologies.