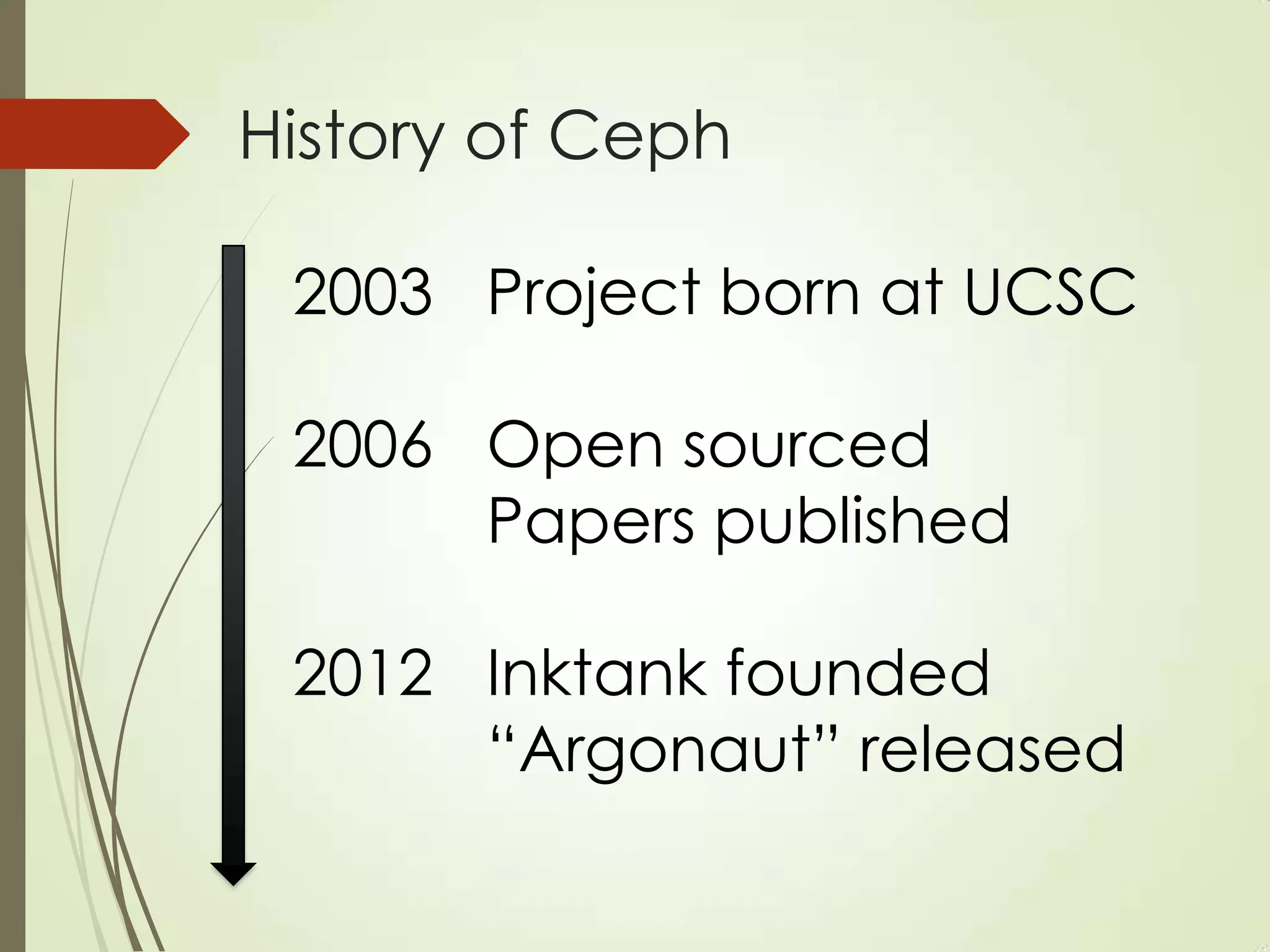

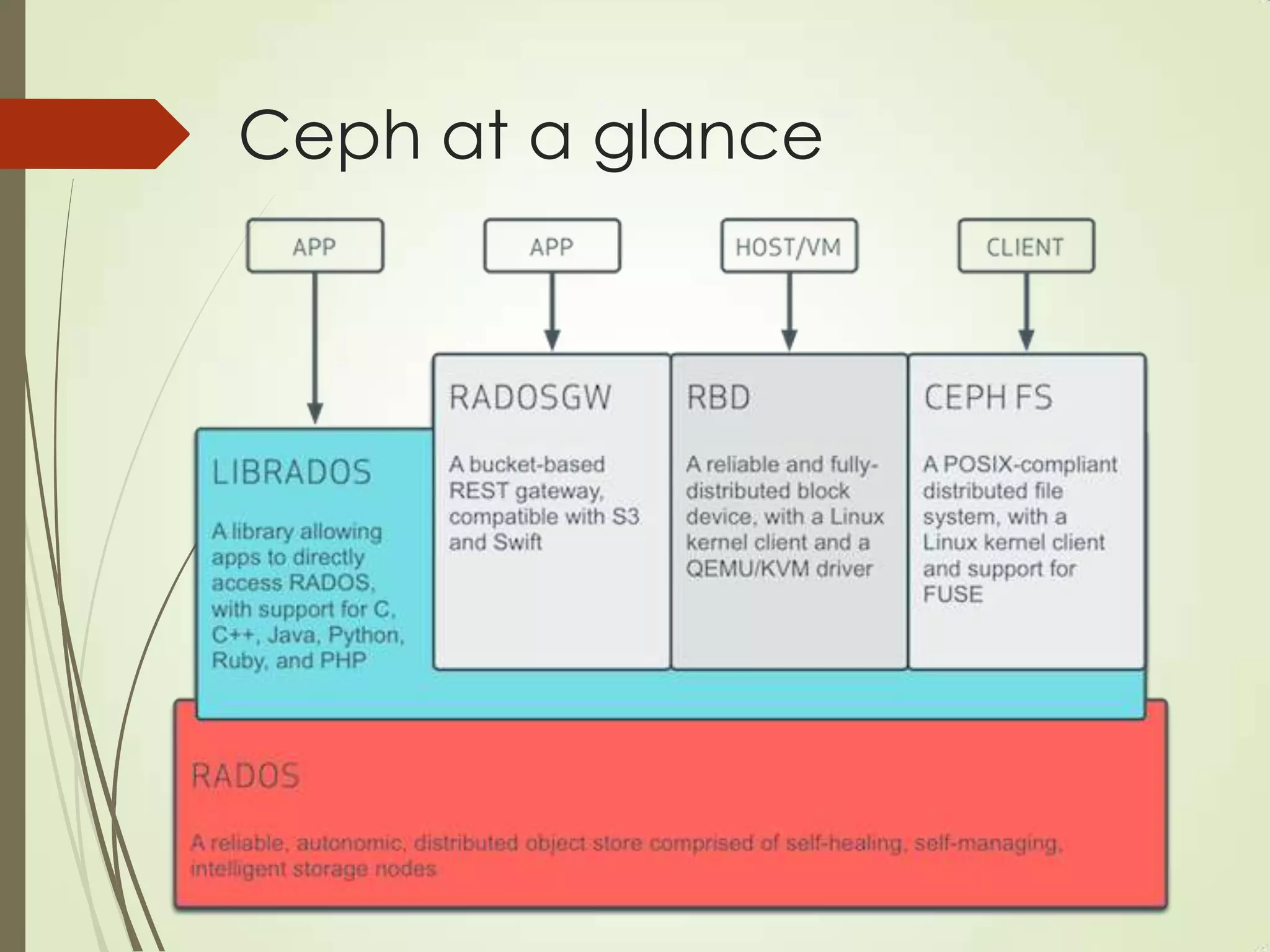

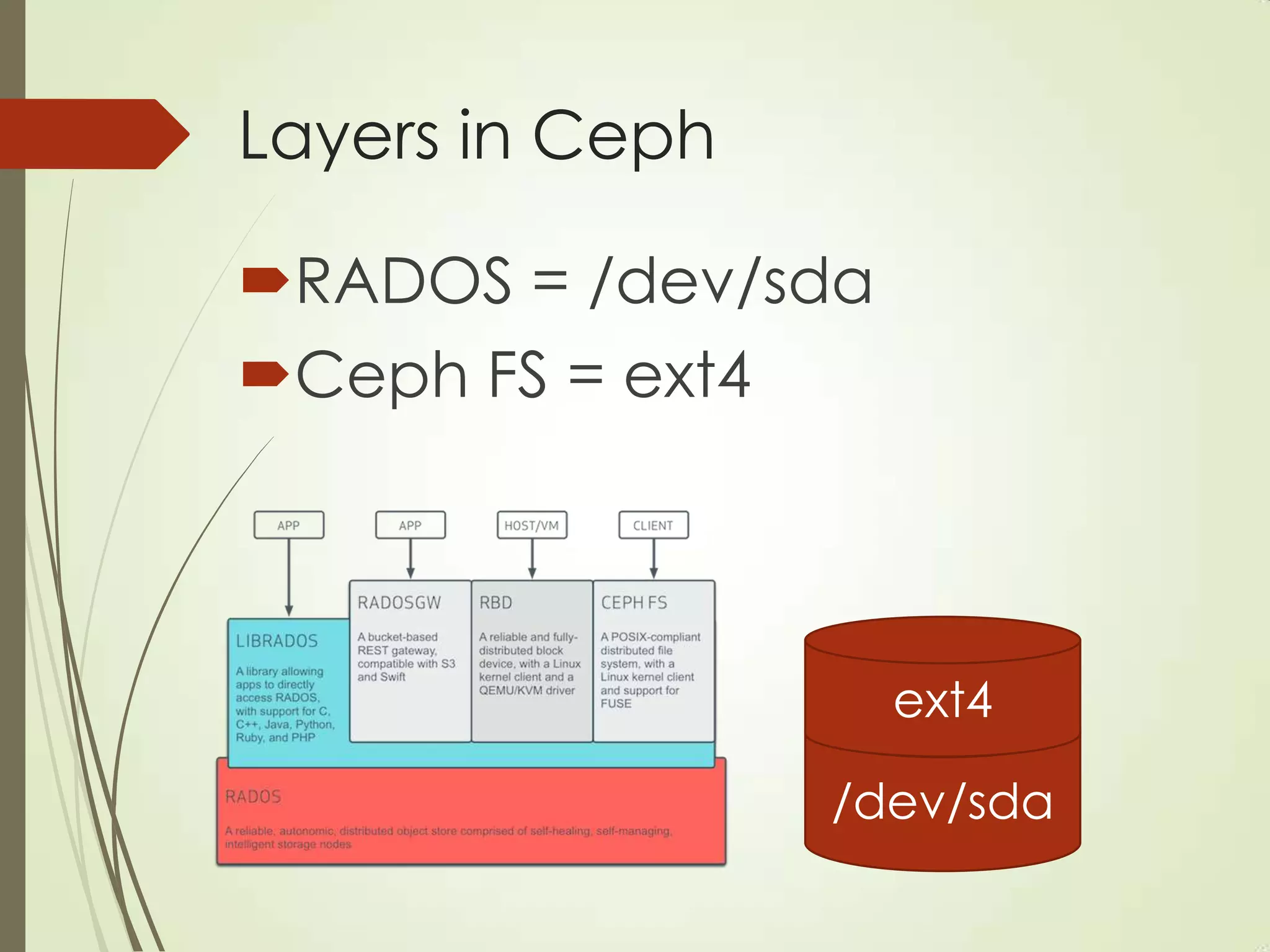

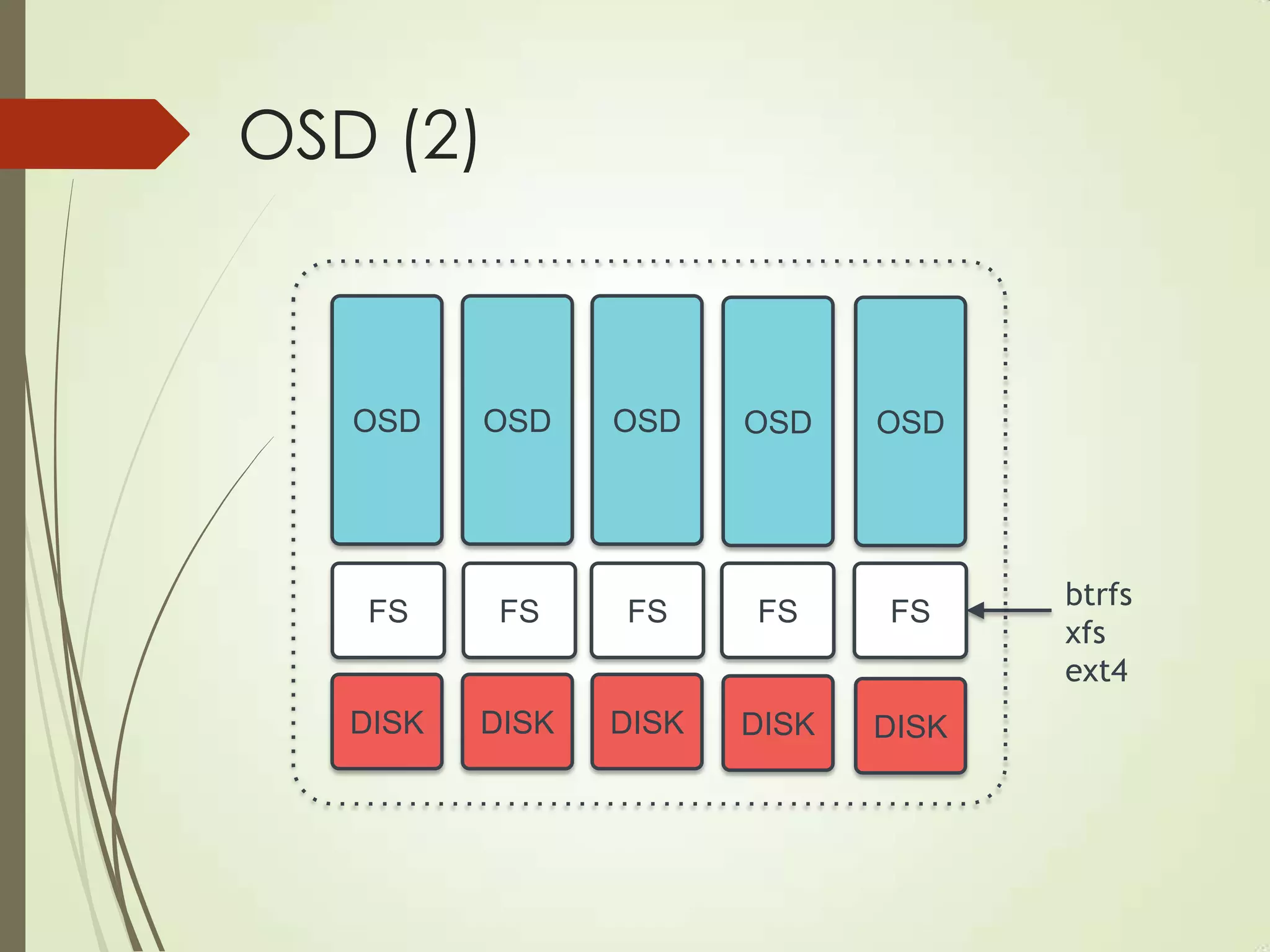

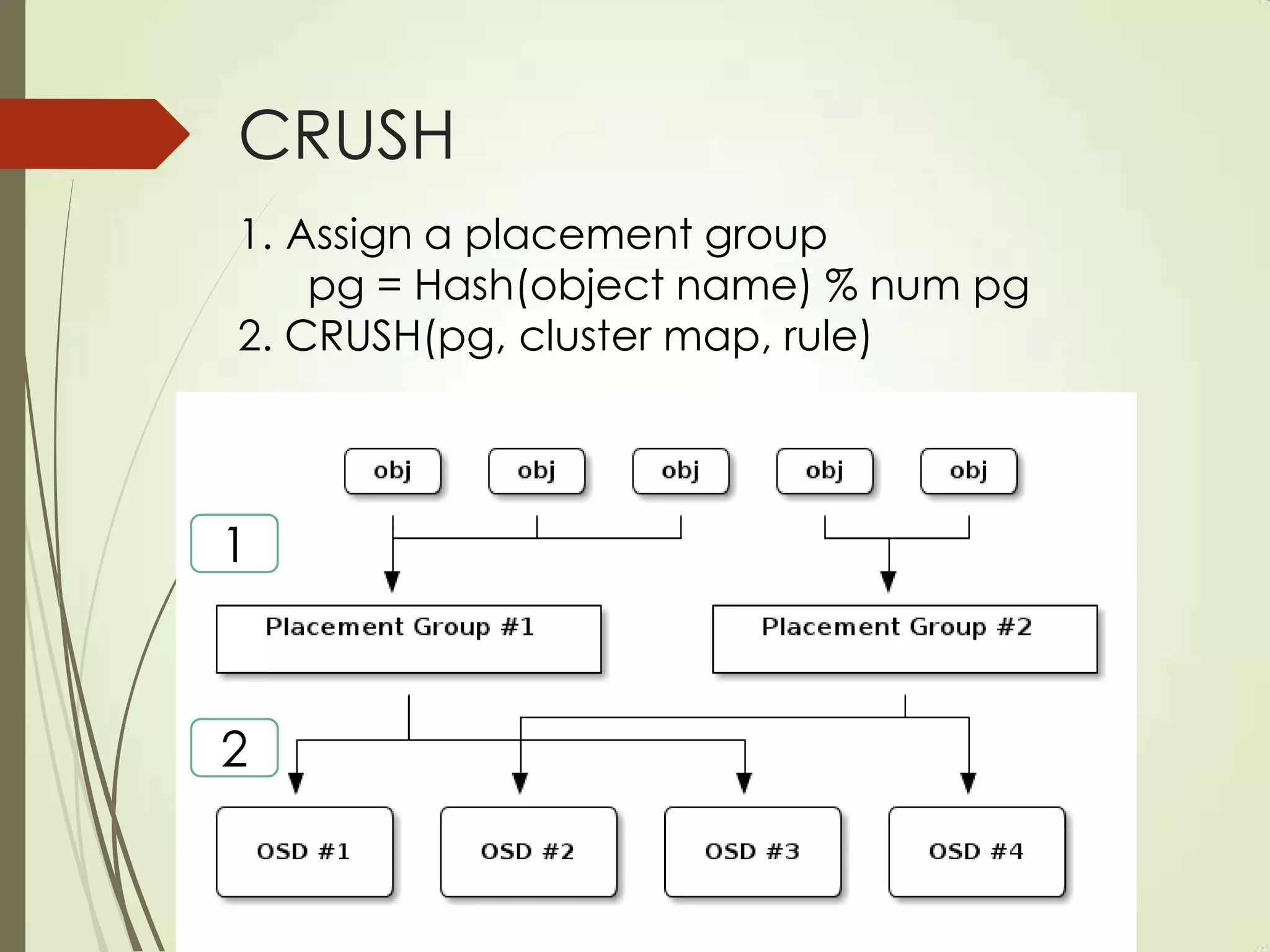

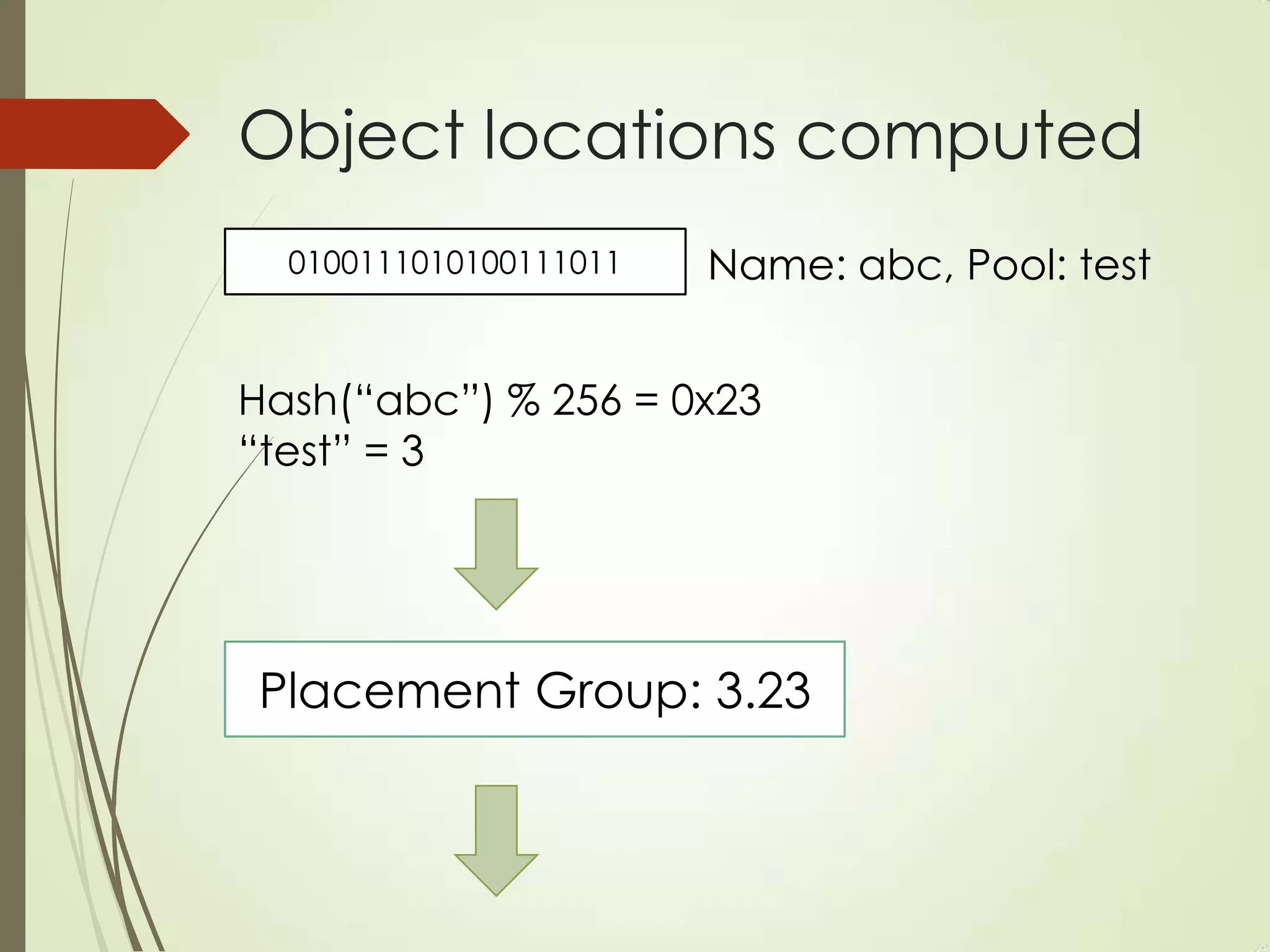

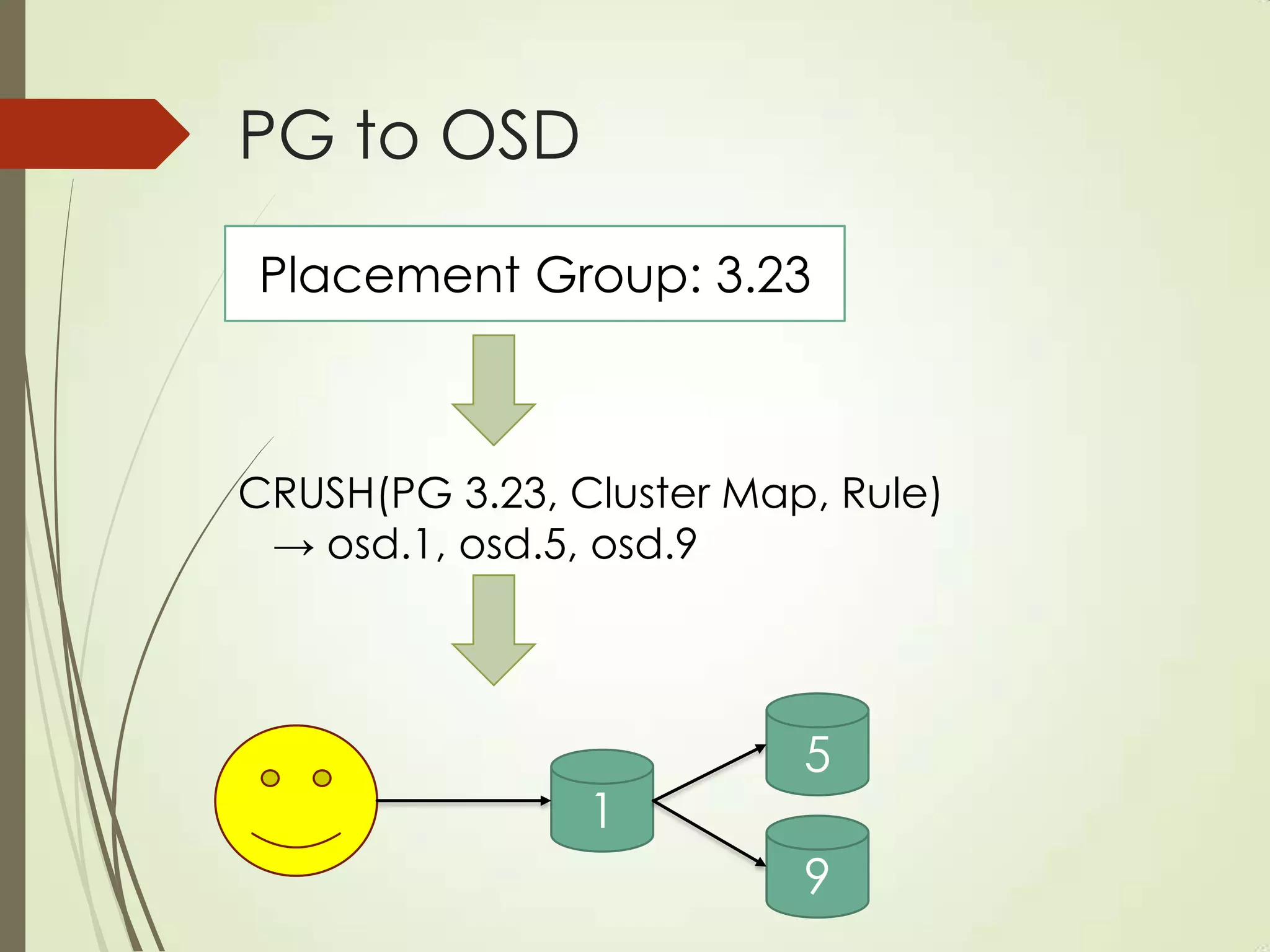

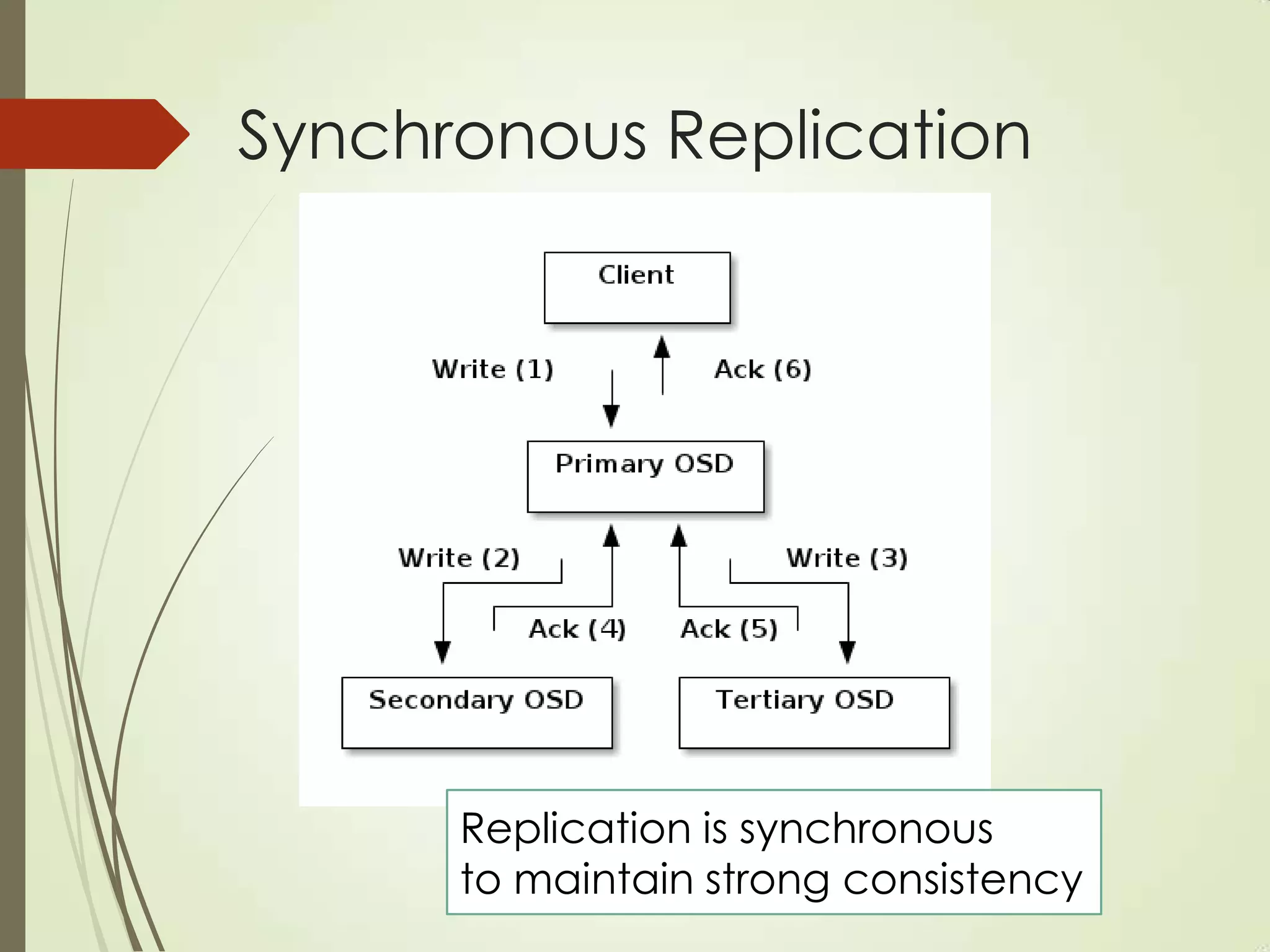

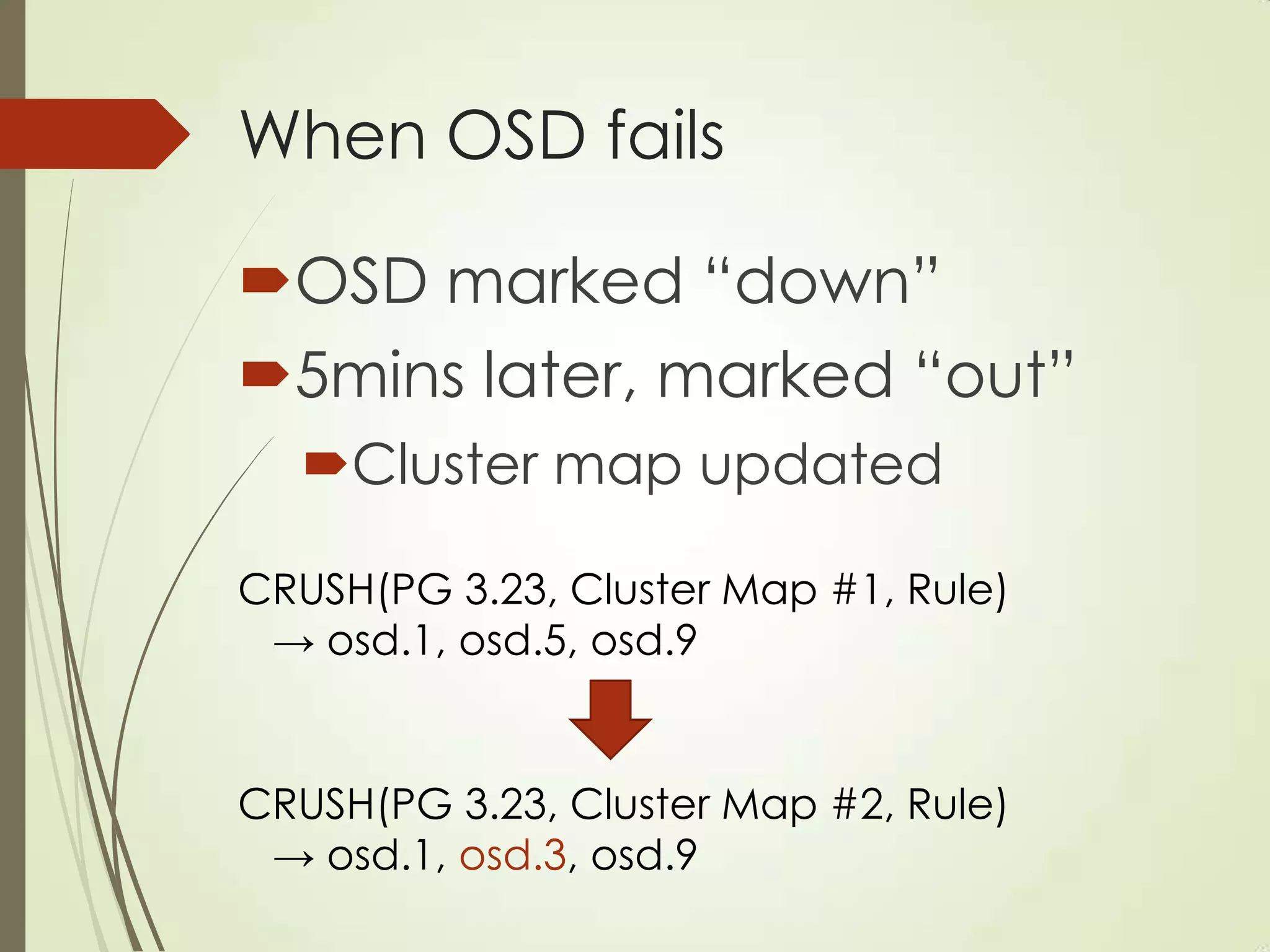

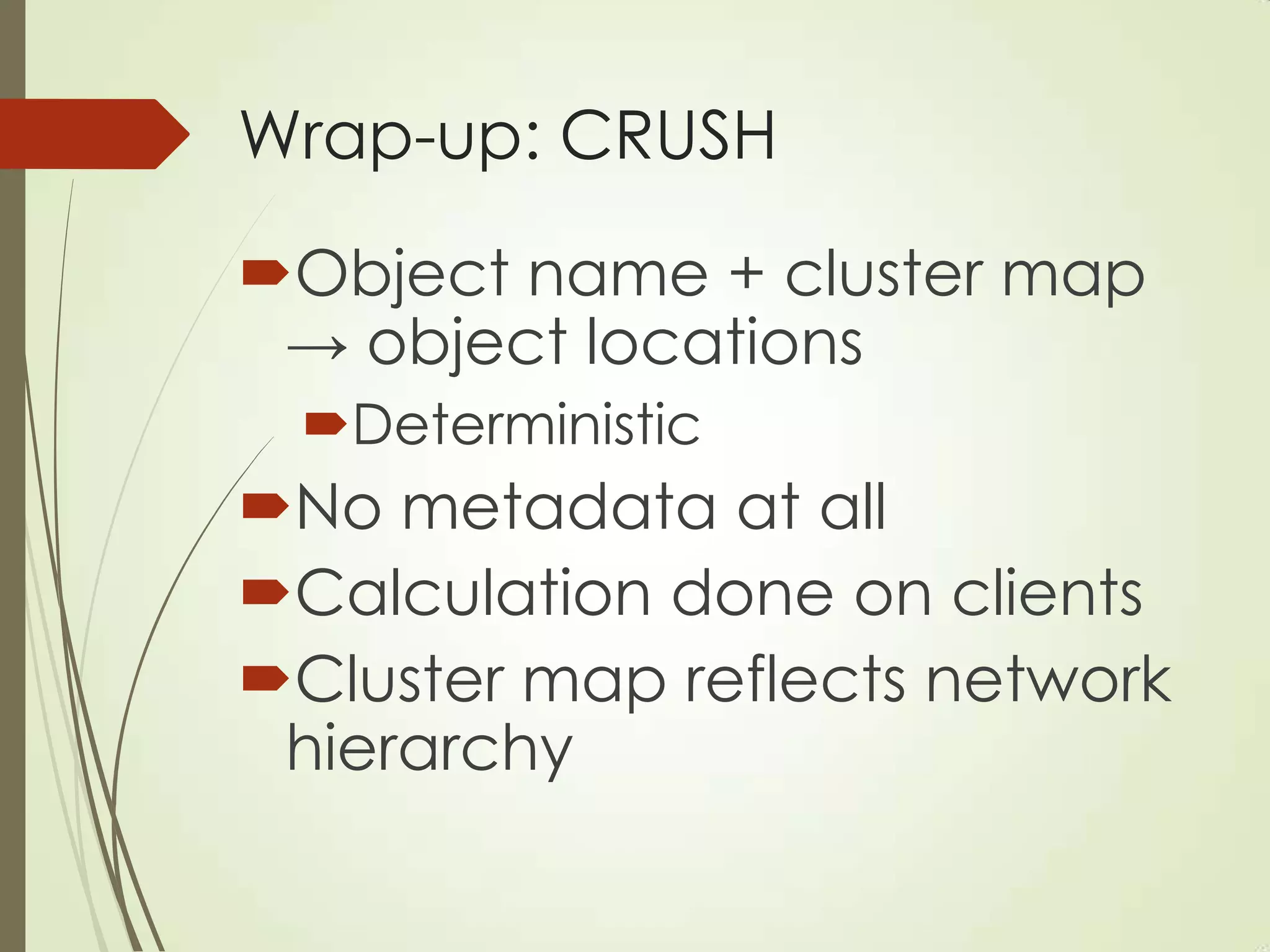

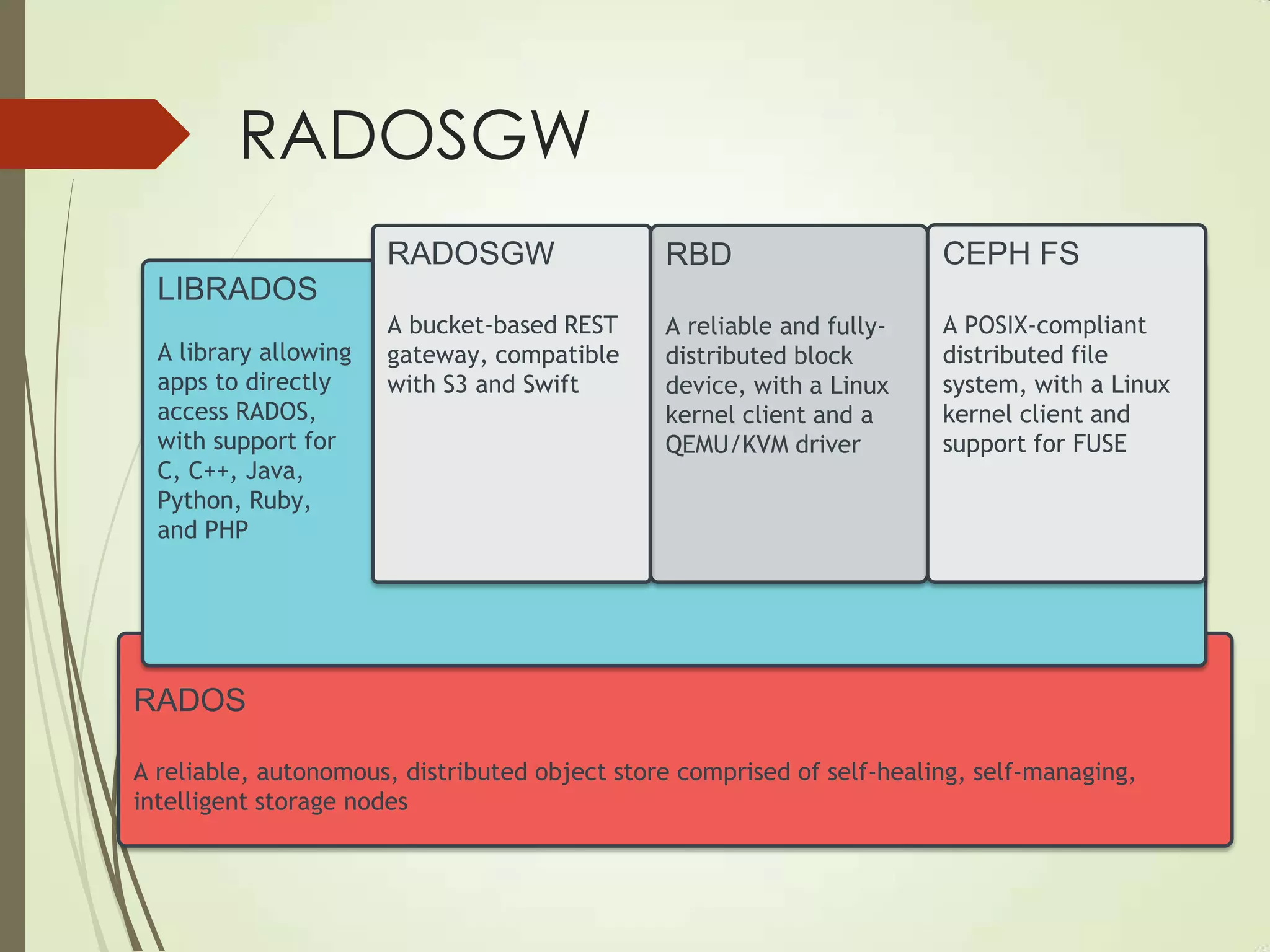

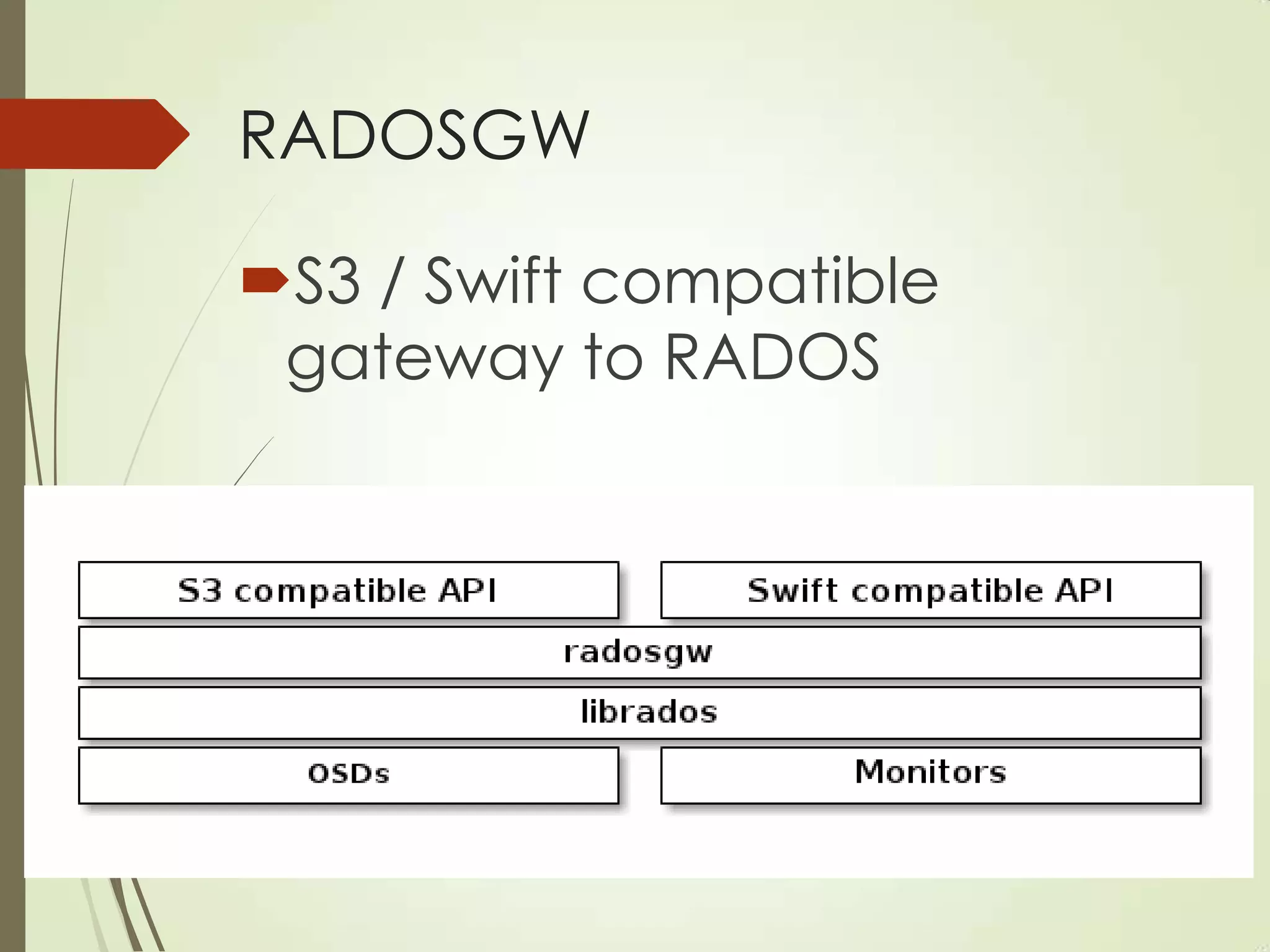

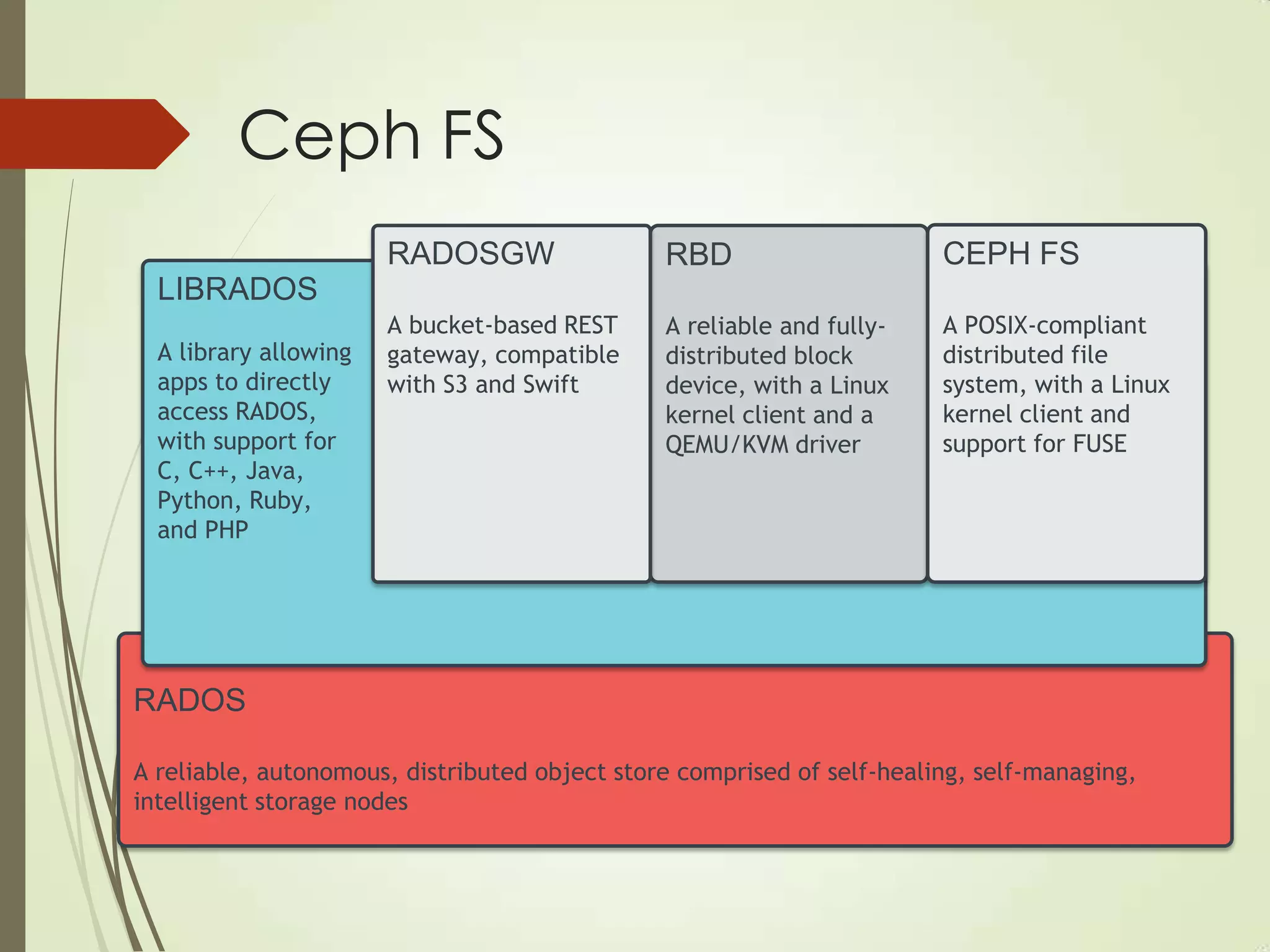

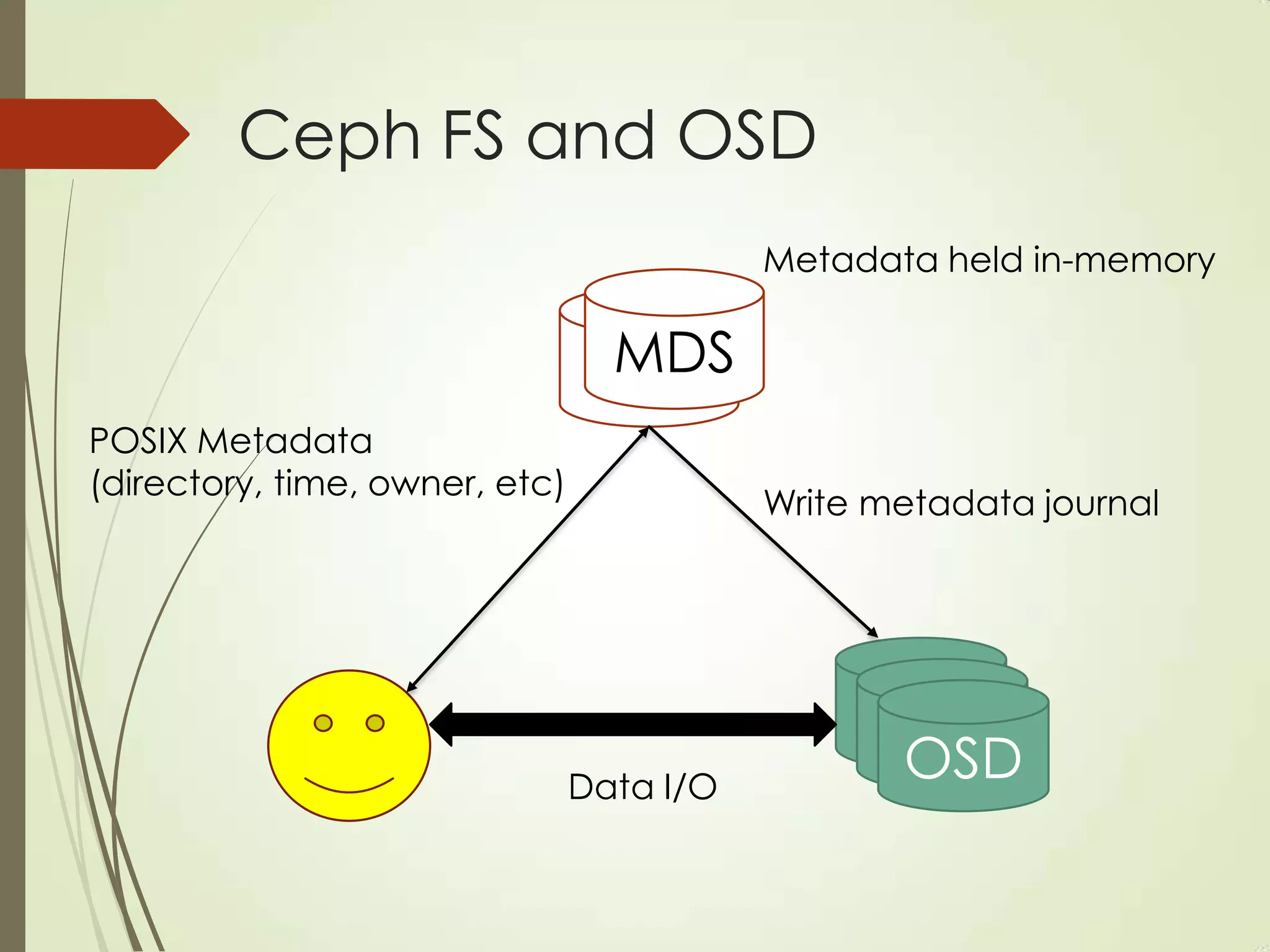

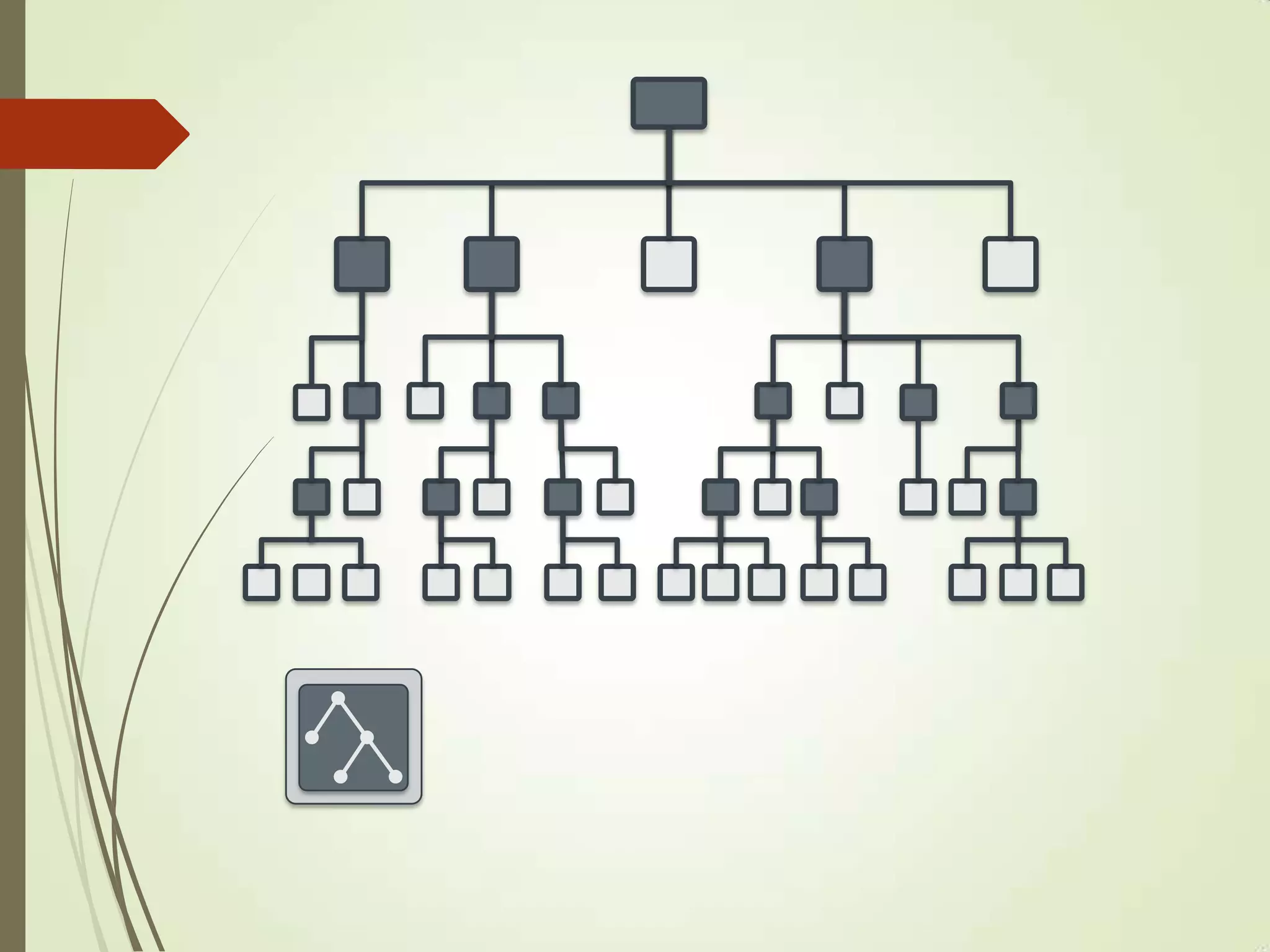

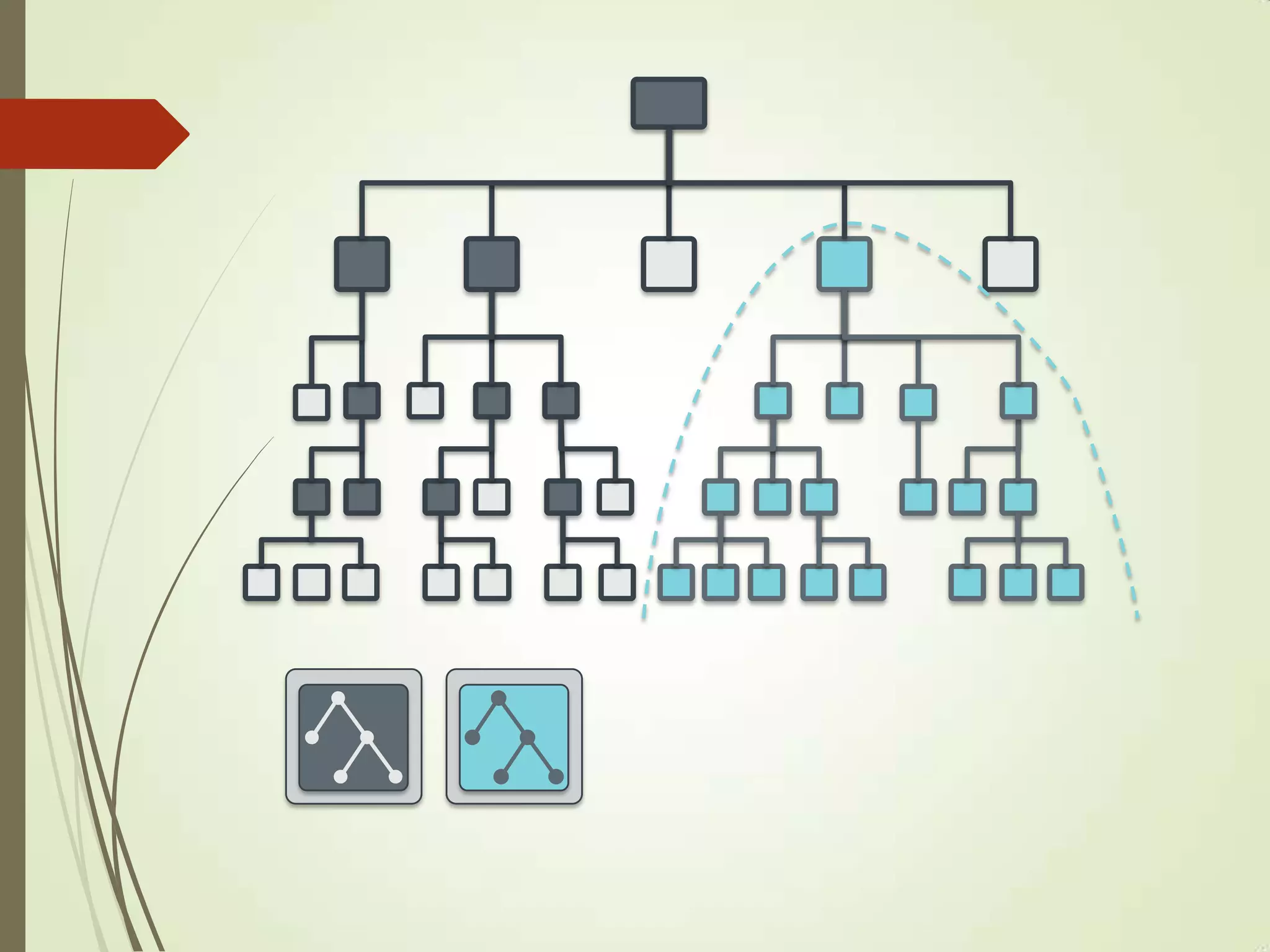

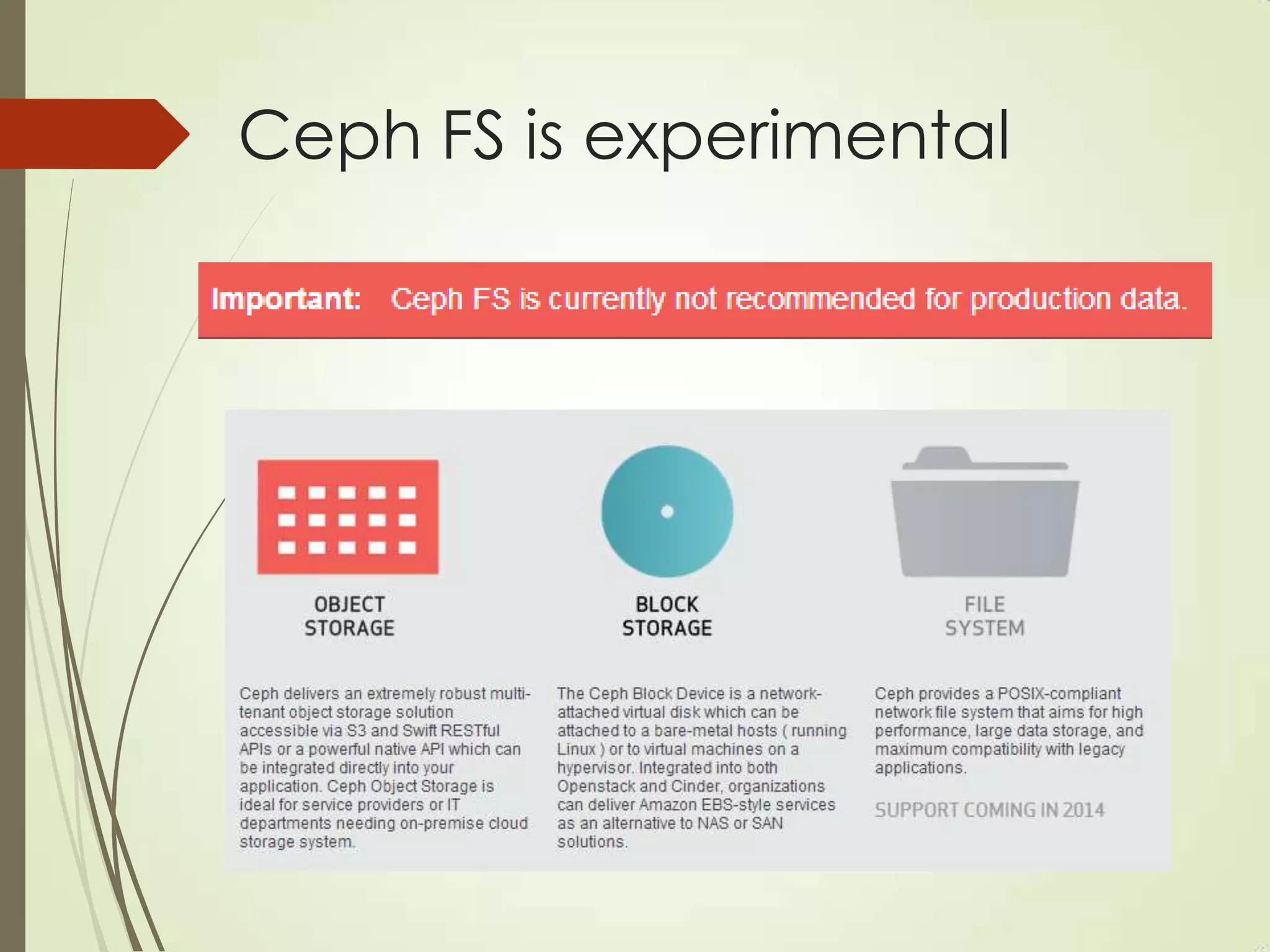

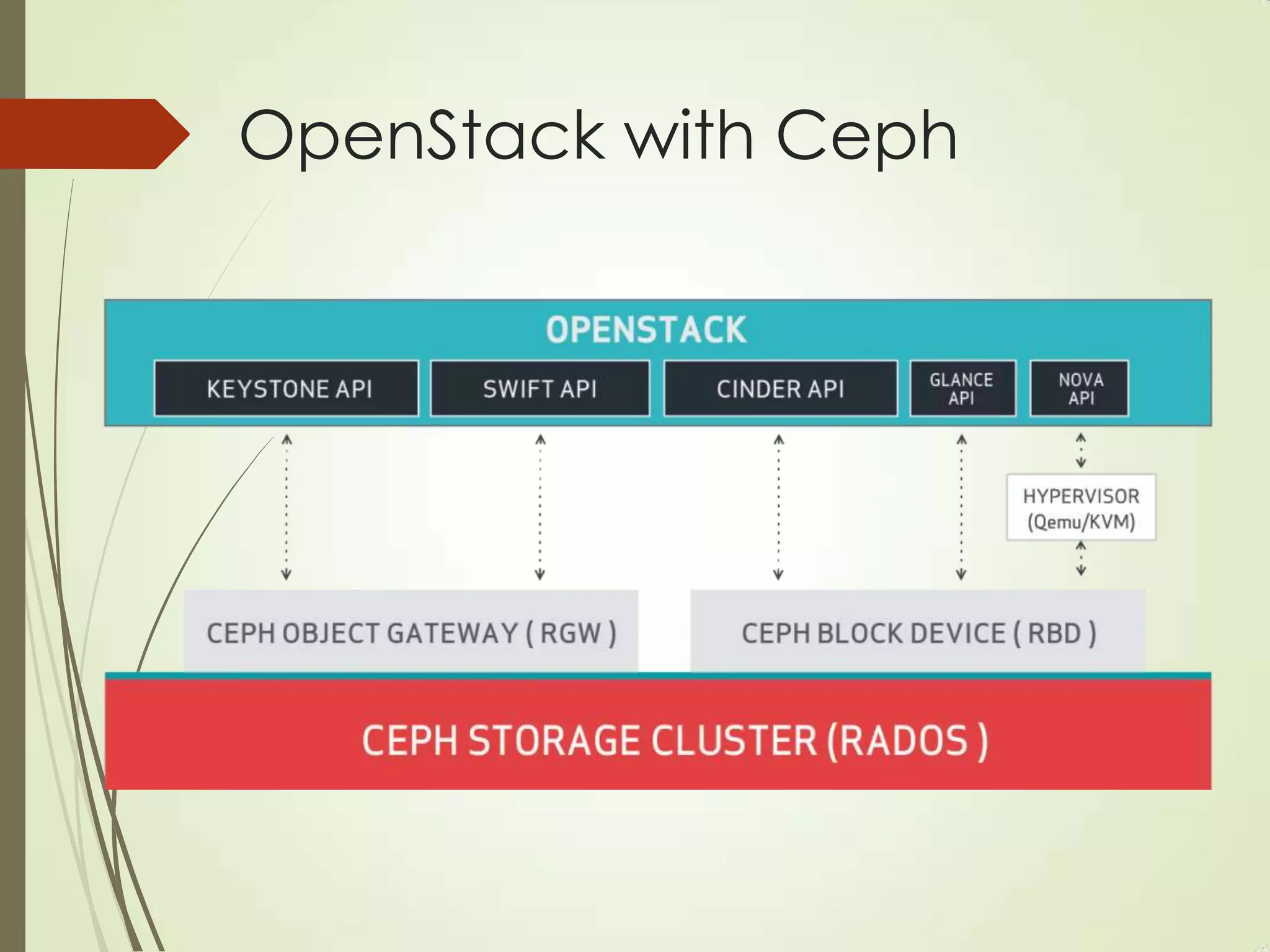

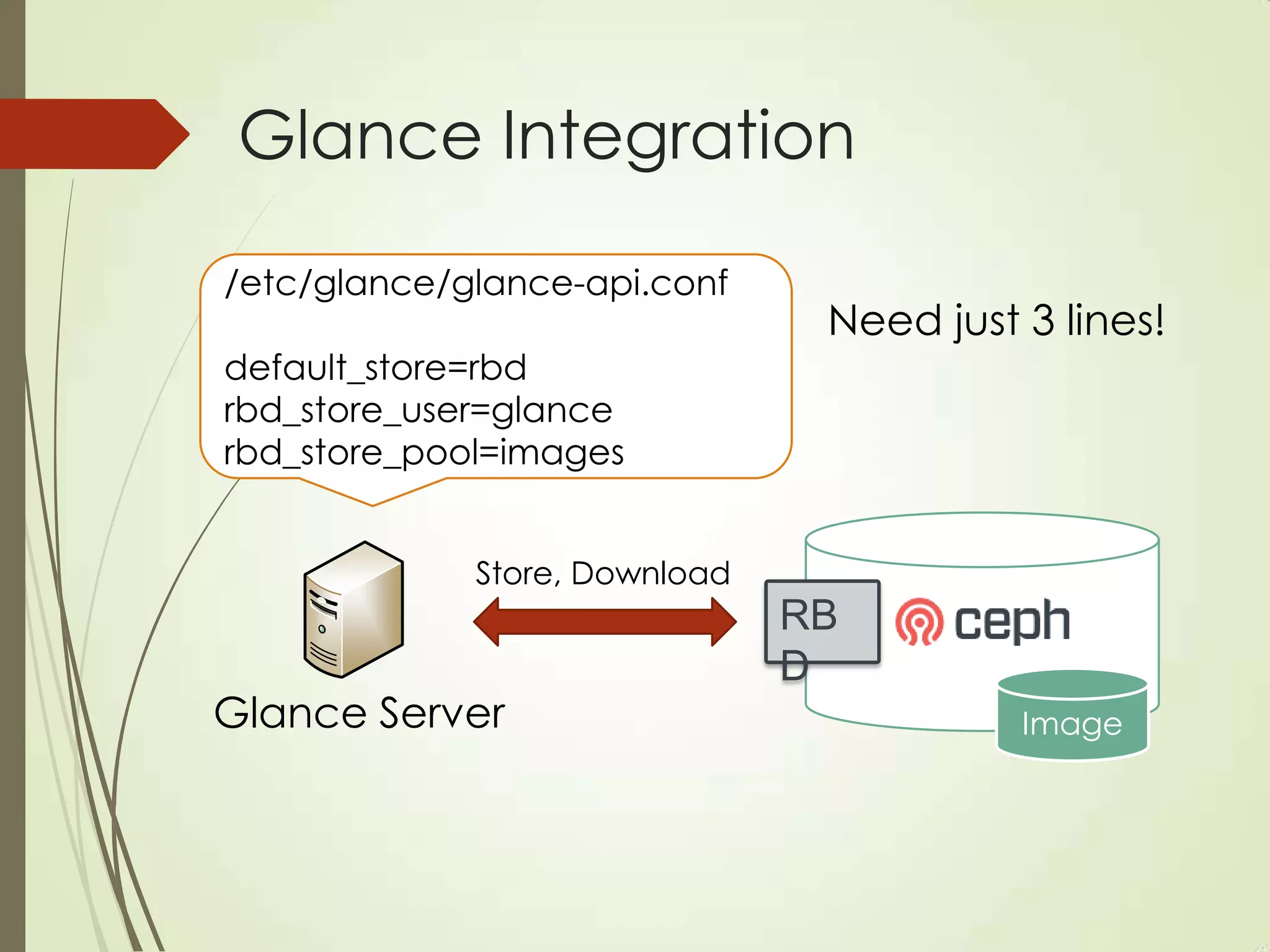

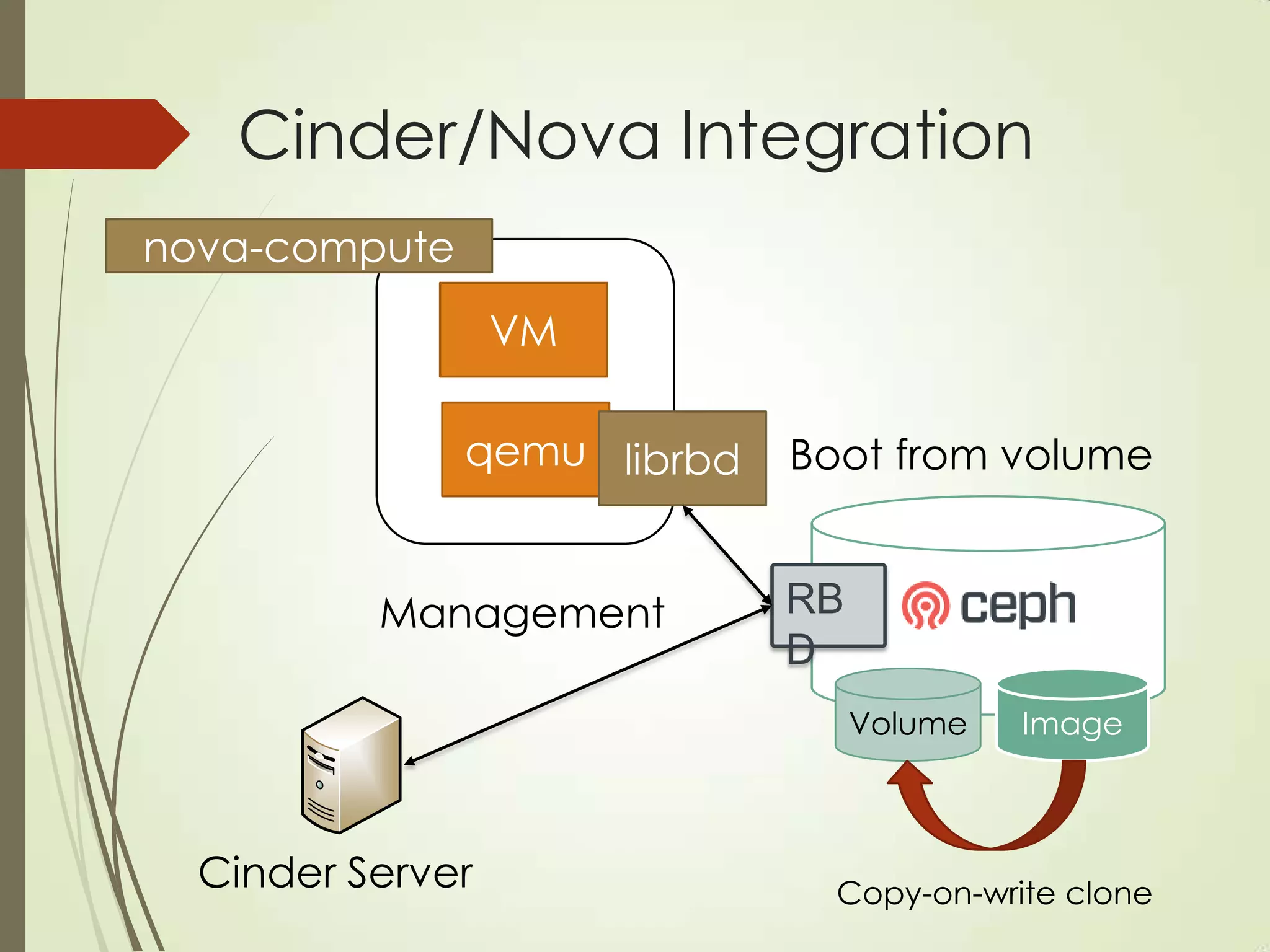

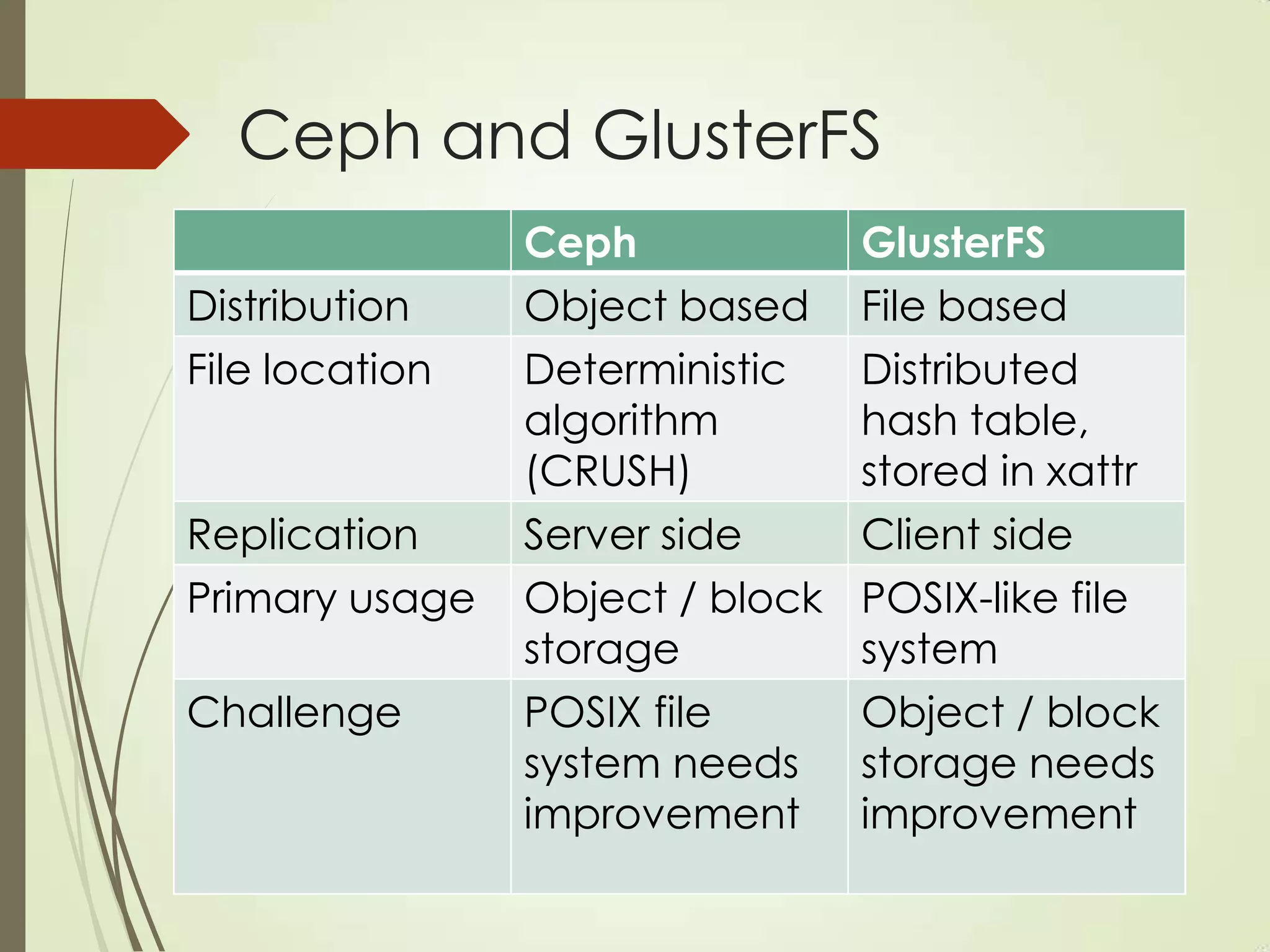

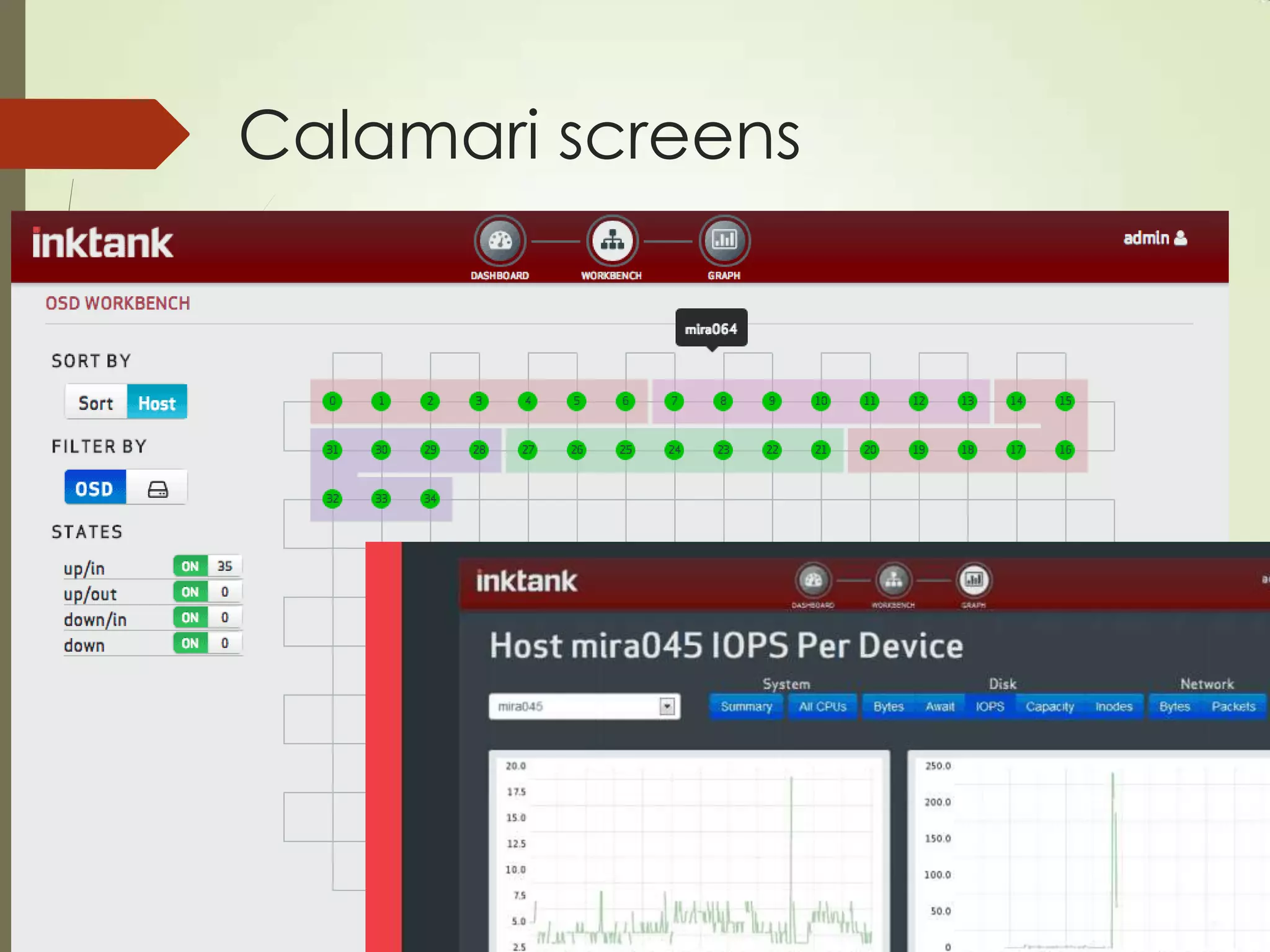

This document gives an overview of Ceph, an open-source, massively scalable storage platform that integrates with OpenStack. It covers Ceph’s underlying components, including RADOS, CephFS, and the CRUSH algorithm, which lets clients compute where data is placed instead of consulting a central lookup table. It also highlights Ceph’s unified storage for object, block, and POSIX file system access, and notes recent developments such as Red Hat’s acquisition of Inktank.
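
To make the idea of placement without centralized metadata more concrete, here is a minimal sketch of deterministic, hash-based placement. It is not the CRUSH algorithm itself: it uses plain rendezvous (highest-random-weight) hashing as a simplified stand-in and ignores CRUSH's hierarchy, weights, and failure domains. The function names `place` and `_score` and the `osd.N` device names are hypothetical, chosen only for illustration.

```python
from __future__ import annotations

import hashlib


def _score(object_name: str, osd: str) -> int:
    """Hypothetical helper: pseudo-random score for an (object, device) pair."""
    digest = hashlib.sha256(f"{object_name}:{osd}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(object_name: str, osds: list[str], replicas: int = 3) -> list[str]:
    """Pick `replicas` distinct devices for an object using only hashing.

    Any client that knows the device list computes the same answer, so no
    central table mapping objects to devices is needed. This is rendezvous
    hashing, an assumption standing in for CRUSH, not CRUSH itself.
    """
    if replicas > len(osds):
        raise ValueError("not enough devices for the requested replica count")
    # Rank devices by their score for this object; the top ones hold the data.
    ranked = sorted(osds, key=lambda osd: _score(object_name, osd), reverse=True)
    return ranked[:replicas]


if __name__ == "__main__":
    devices = [f"osd.{i}" for i in range(6)]
    print(place("my-object", devices))
```

Because the result depends only on the object name and the device list, two independent clients agree on placement without talking to each other, and removing a device that does not hold a given object leaves that object's placement unchanged.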