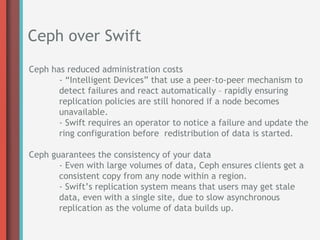

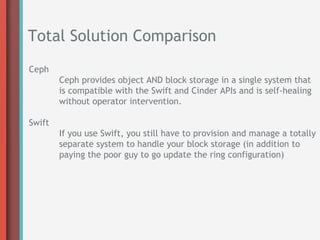

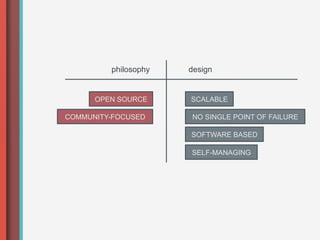

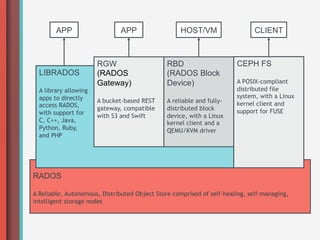

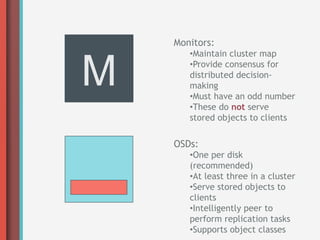

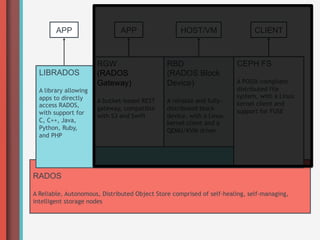

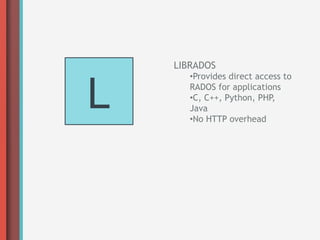

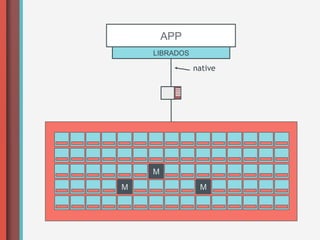

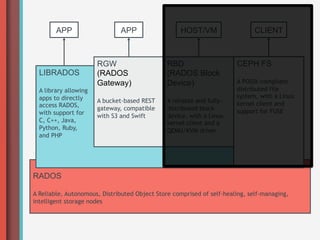

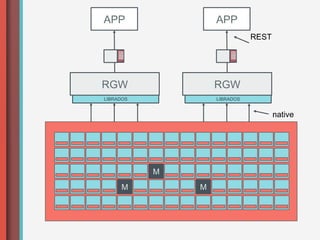

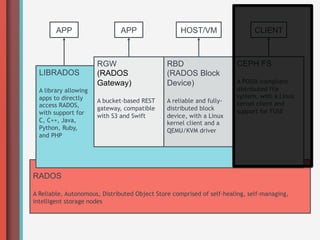

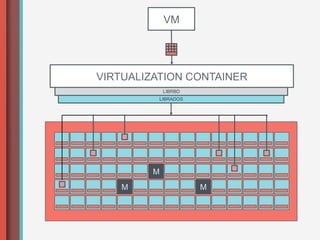

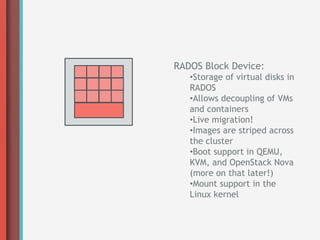

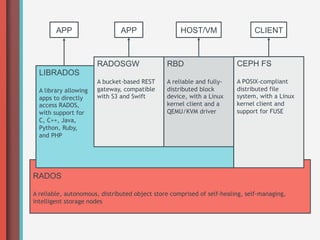

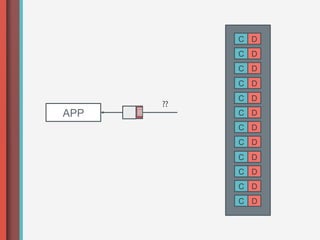

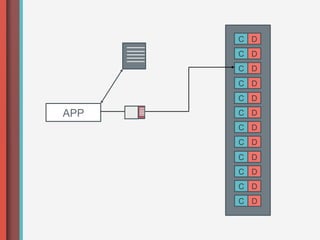

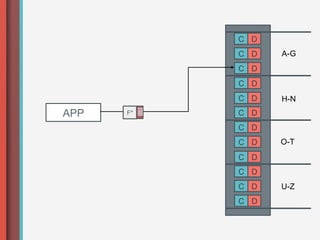

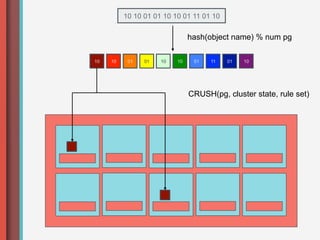

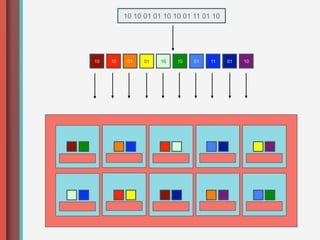

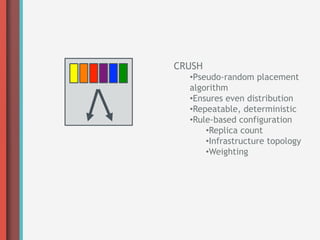

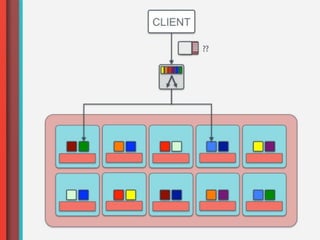

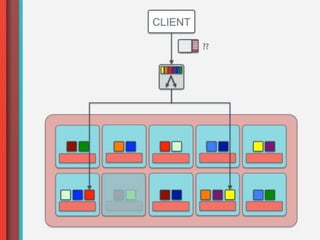

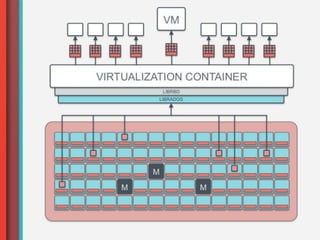

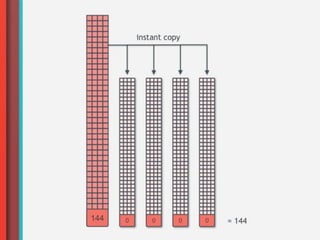

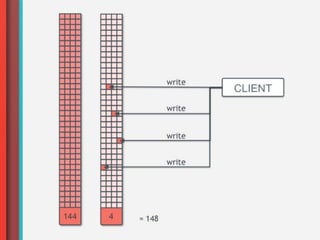

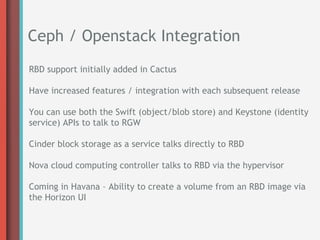

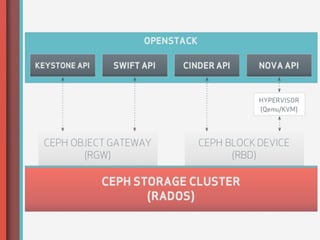

Ian Colle, the Ceph Program Manager at Inktank, gave a presentation on Ceph and how it can be used for cloud storage with OpenStack. Ceph is an open source, software-defined storage system that provides object, block, and file storage in a single distributed system. It uses the CRUSH algorithm to distribute data and thin provisioning to store VM images efficiently, and it integrates with OpenStack through the Cinder block storage and Swift object storage APIs. Inktank was formed to ensure the long-term success of Ceph by providing services and support and by helping companies adopt it.
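
The summary mentions CRUSH-based data distribution without showing what computed placement looks like. The sketch below is not CRUSH itself. It swaps in rendezvous (highest-random-weight) hashing as a much simpler stand-in to illustrate the general idea of deriving replica locations from a hash rather than from a central lookup table. The names `place`, `_score`, the `osd.N` labels, and the replica count of 3 are assumptions made for illustration only, and the sketch ignores failure domains, weights, and placement groups.

```python
import hashlib


def _score(object_name: str, osd: str) -> int:
    """Deterministic pseudo-random score for an (object, storage node) pair."""
    digest = hashlib.sha256(f"{object_name}:{osd}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(object_name: str, osds: list[str], replicas: int = 3) -> list[str]:
    """Pick `replicas` distinct nodes for an object using rendezvous hashing.

    Hypothetical helper: any client holding the same node list computes the
    same answer, so no central placement table is needed.
    """
    if replicas > len(osds):
        raise ValueError("not enough storage nodes for the requested replica count")
    ranked = sorted(osds, key=lambda osd: _score(object_name, osd), reverse=True)
    return ranked[:replicas]


# Illustrative cluster of six storage nodes (labels are assumptions).
osds = [f"osd.{i}" for i in range(6)]
placement = place("vm-image-0001", osds)
print(placement)
```

Because the result depends only on the object name and the node list, removing a node that does not hold a given object leaves that object's placement unchanged, which keeps data movement limited when the cluster changes.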