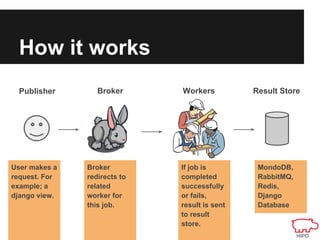

Celery is a distributed task queue that allows long-running processes to be executed asynchronously outside of the main request-response cycle. It uses message brokers like RabbitMQ to distribute jobs to worker nodes for processing. This improves request performance and allows tasks to be distributed across multiple machines. Common use cases include asynchronous tasks like email sending, long database operations, image/video processing, and external API calls.