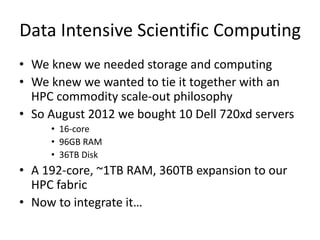

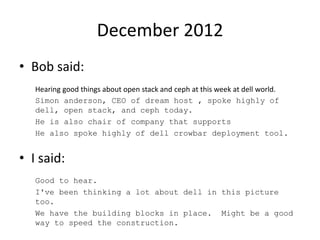

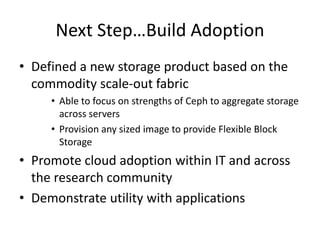

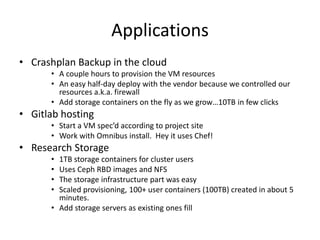

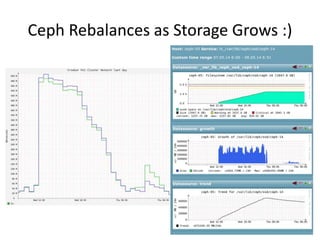

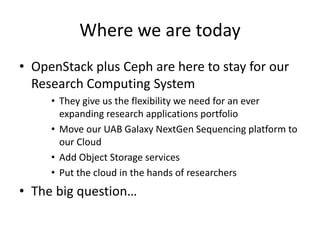

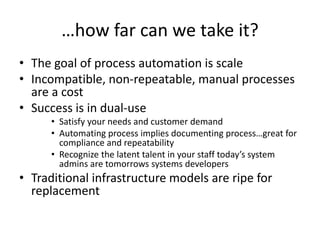

The University of Alabama at Birmingham implemented an OpenStack- and Ceph-based platform to centralize and manage its growing research data, addressing productivity problems caused by distributed data management. A partnership with Dell/Inktank facilitated the transition and gave researchers a scalable, cost-effective solution. The implementation led to improved cloud adoption, more flexible storage, and greater computing capacity for complex research applications.