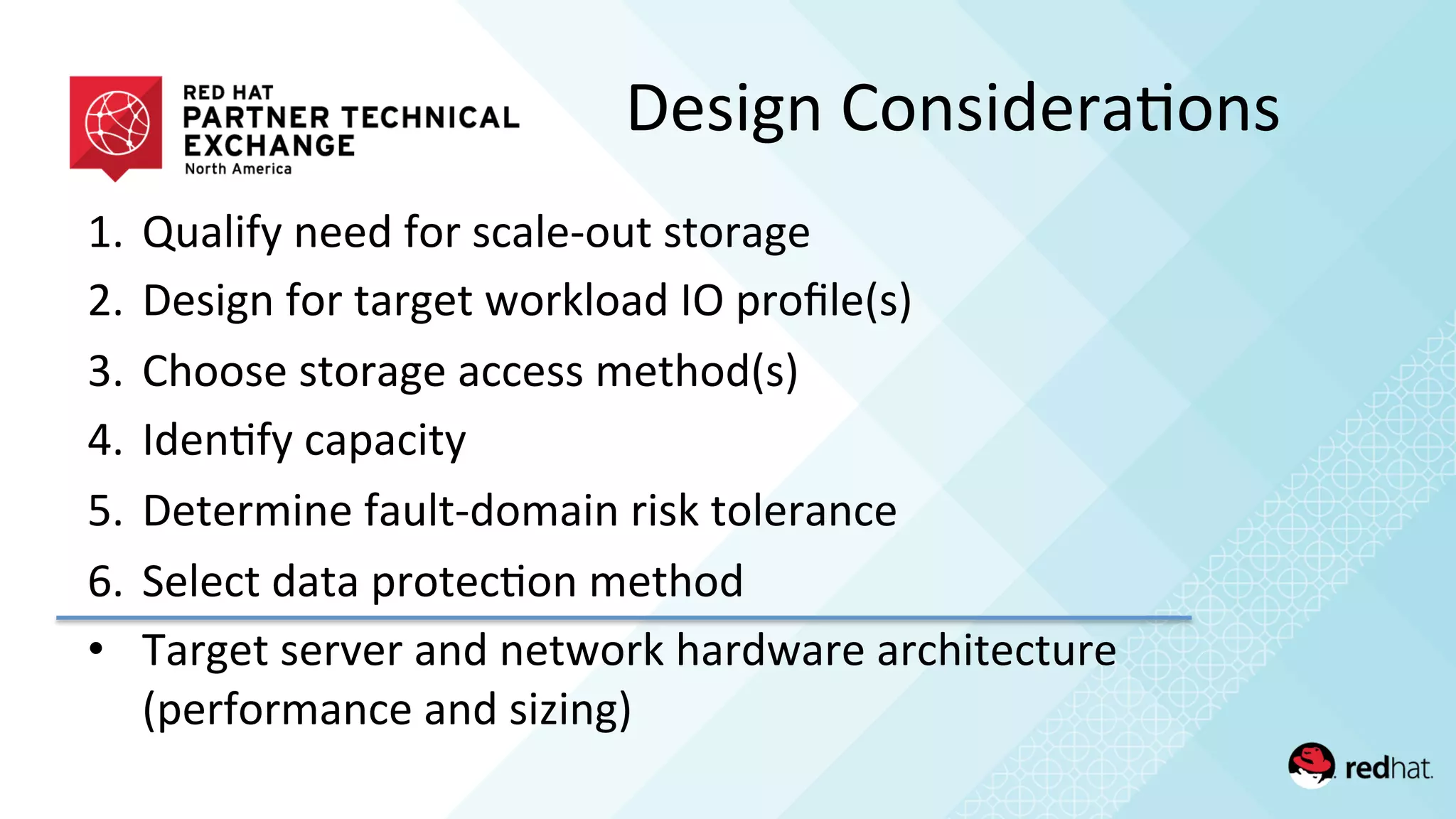

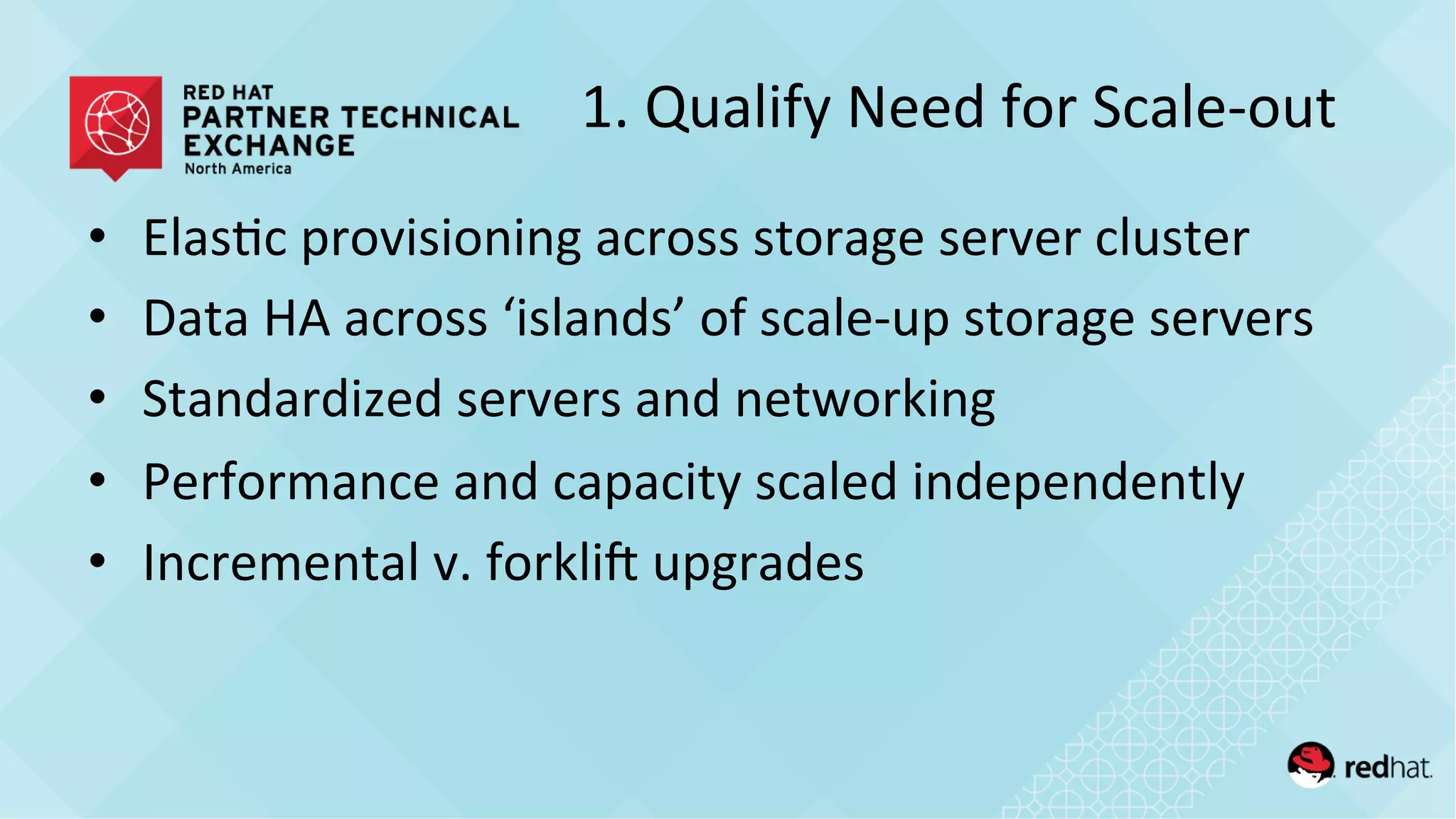

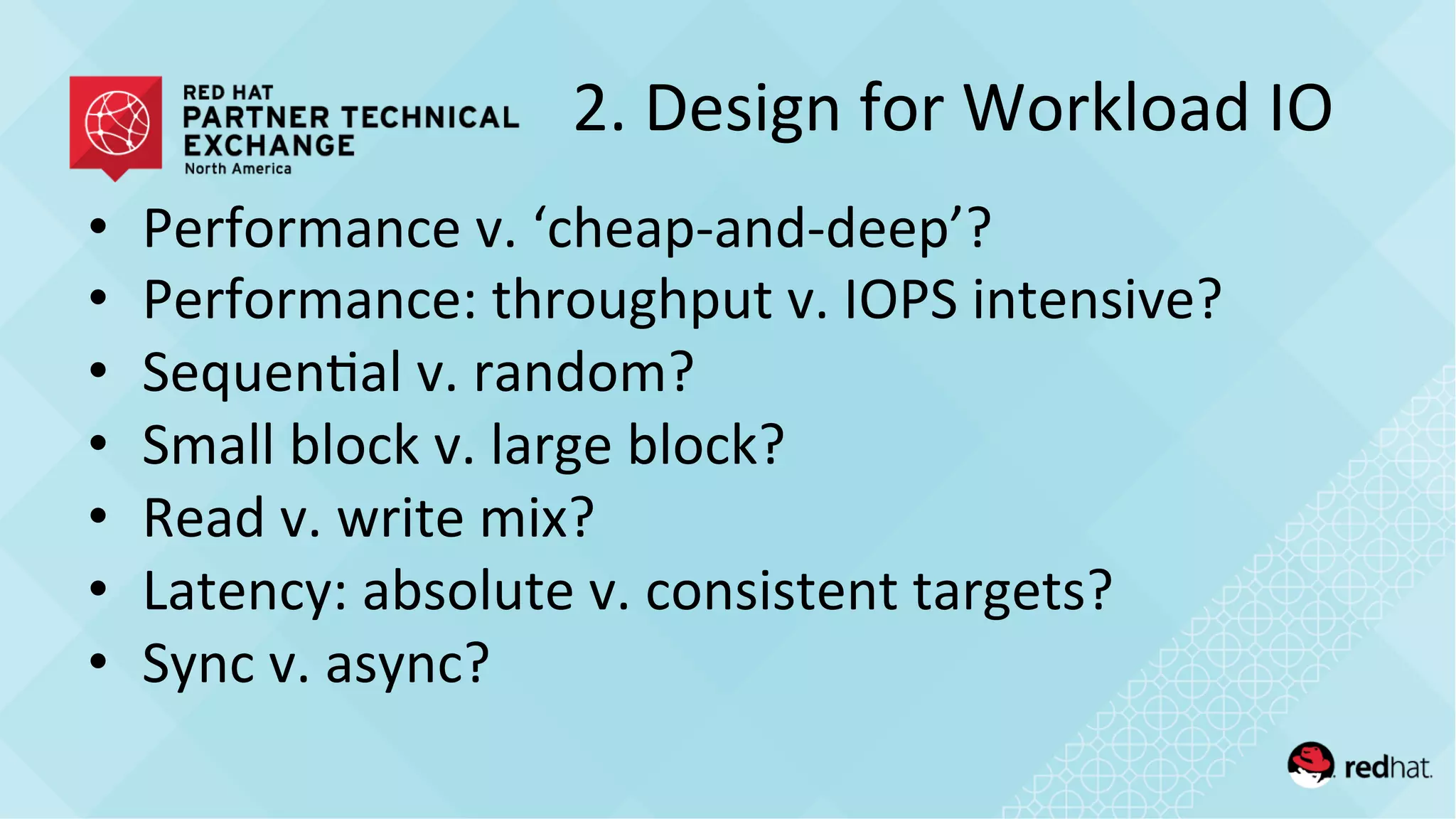

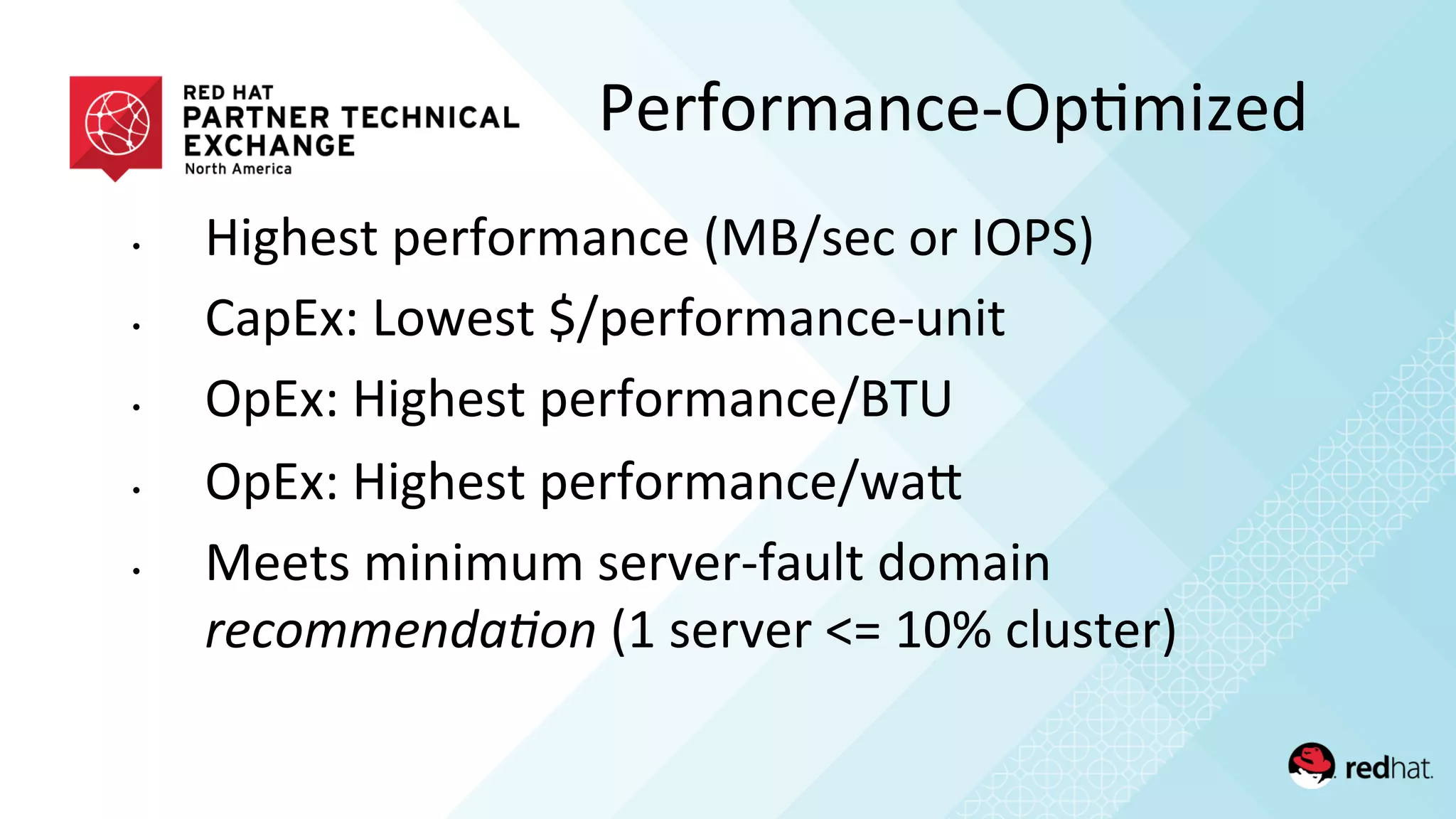

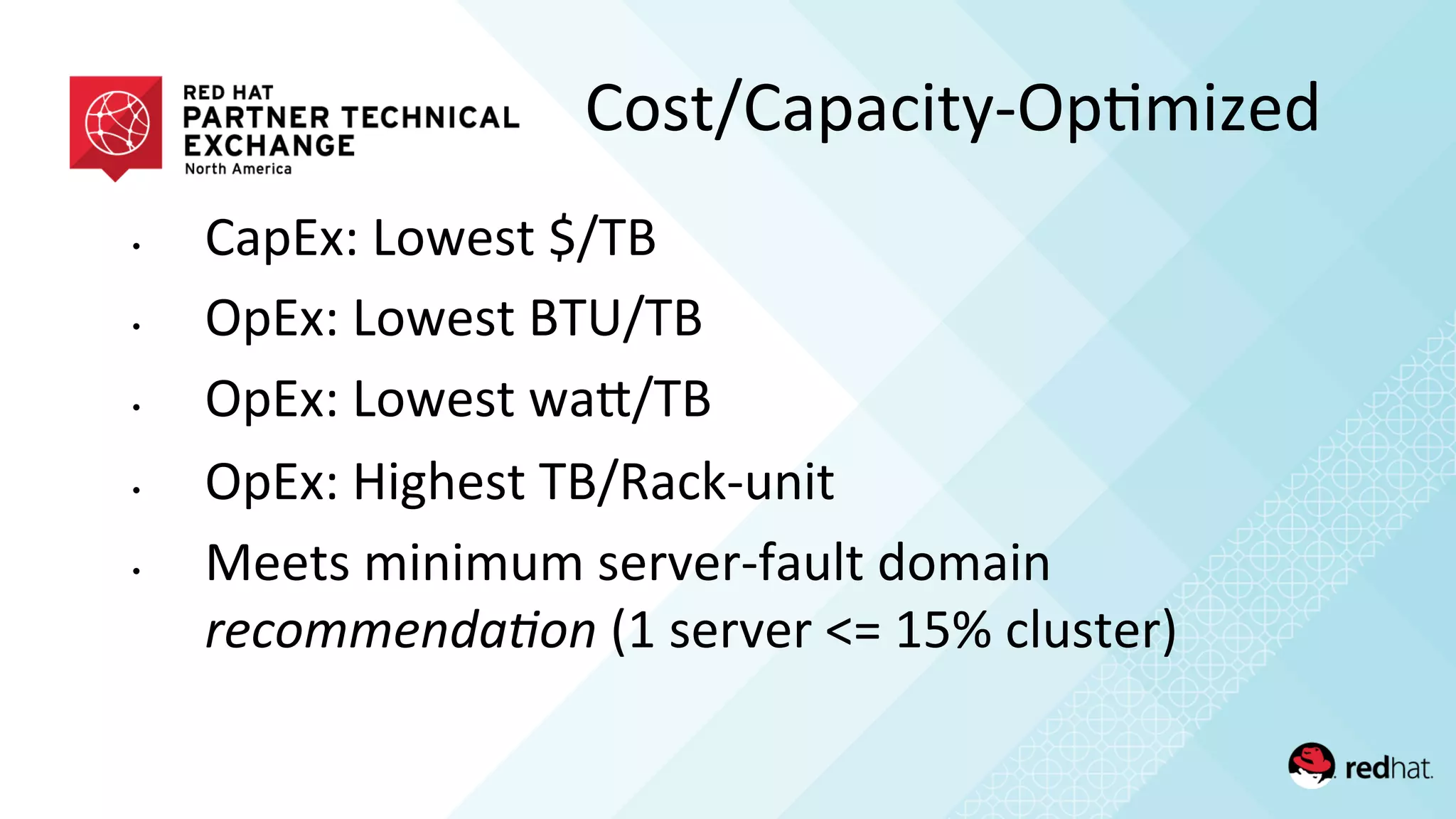

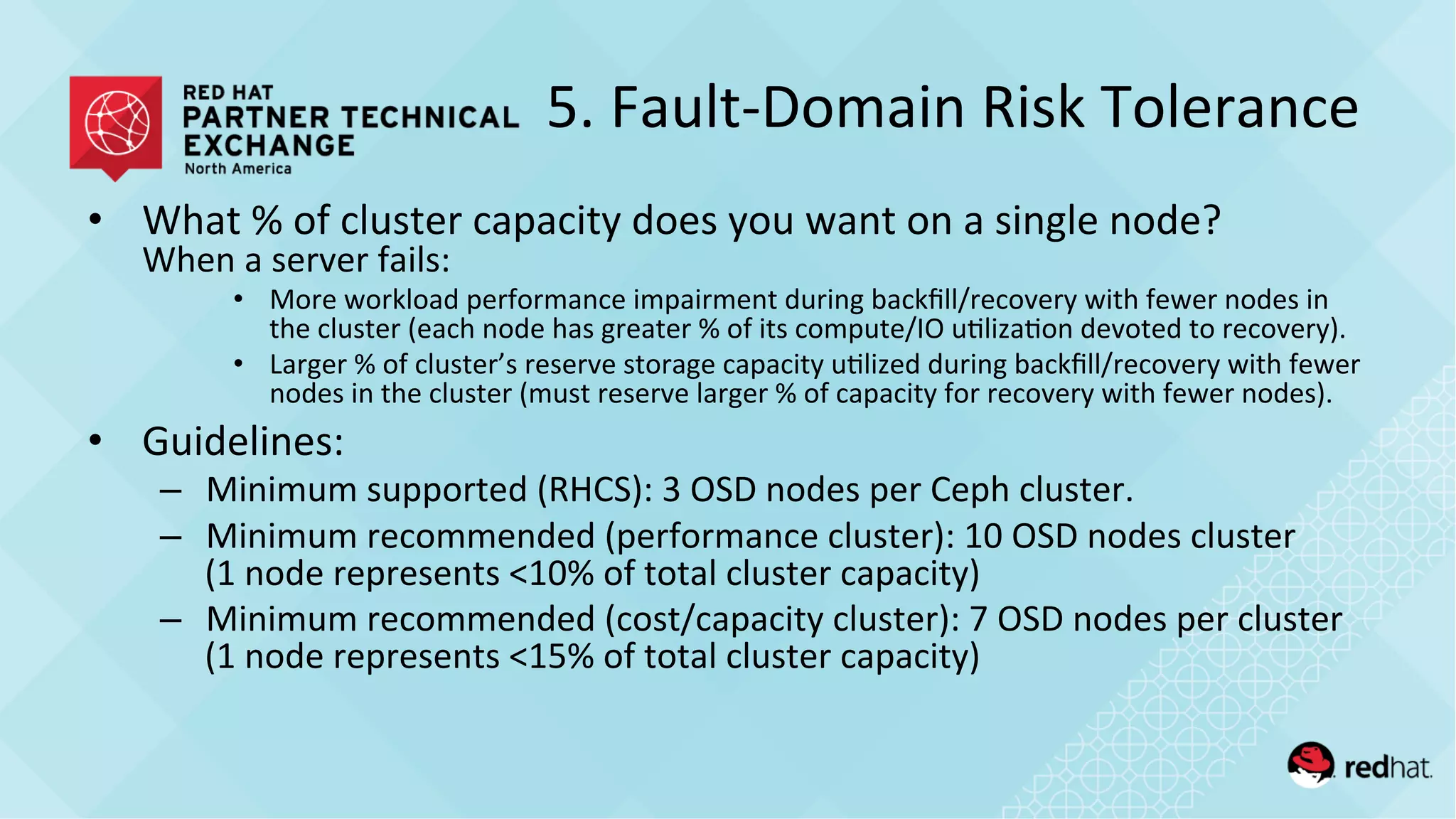

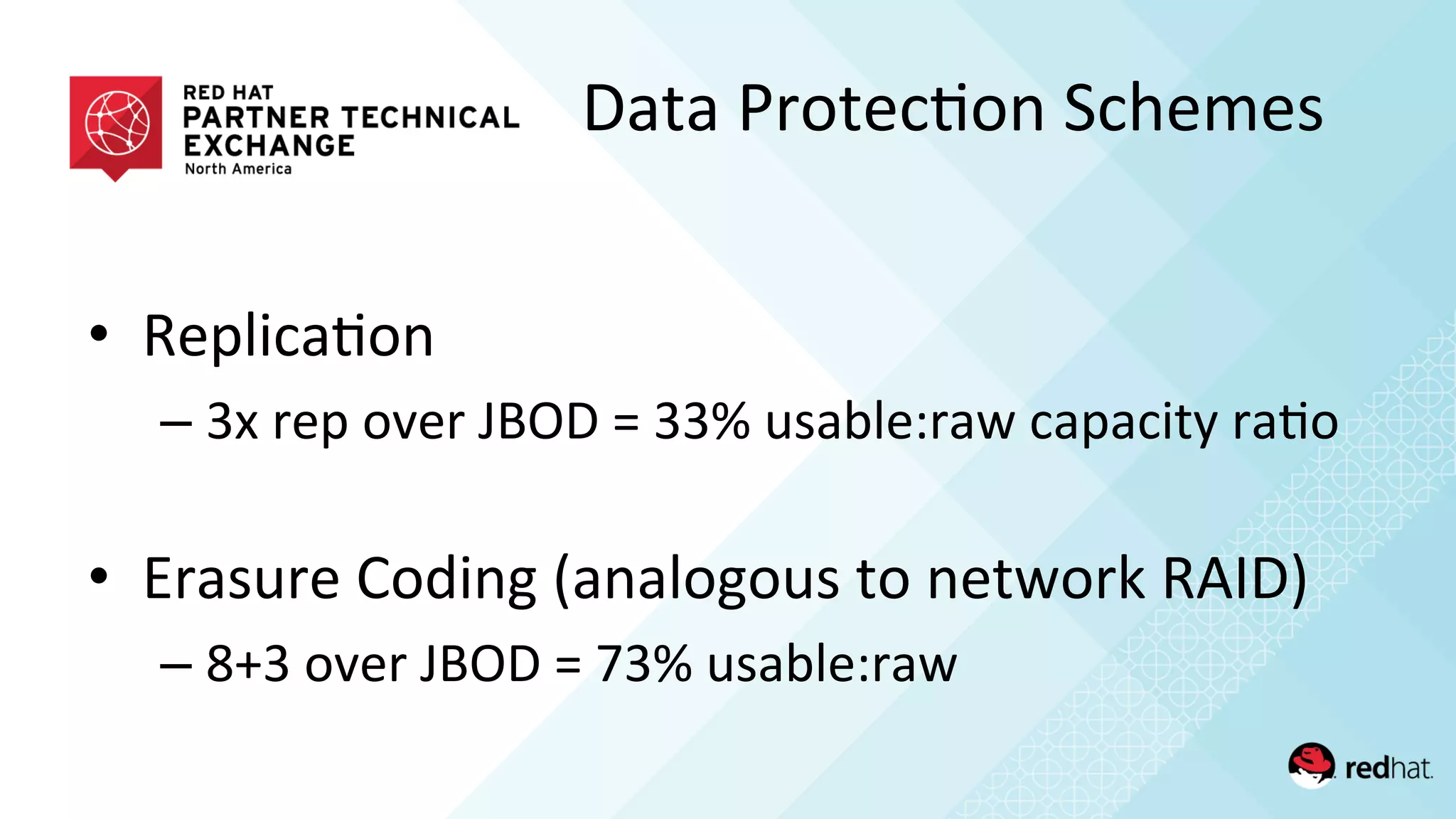

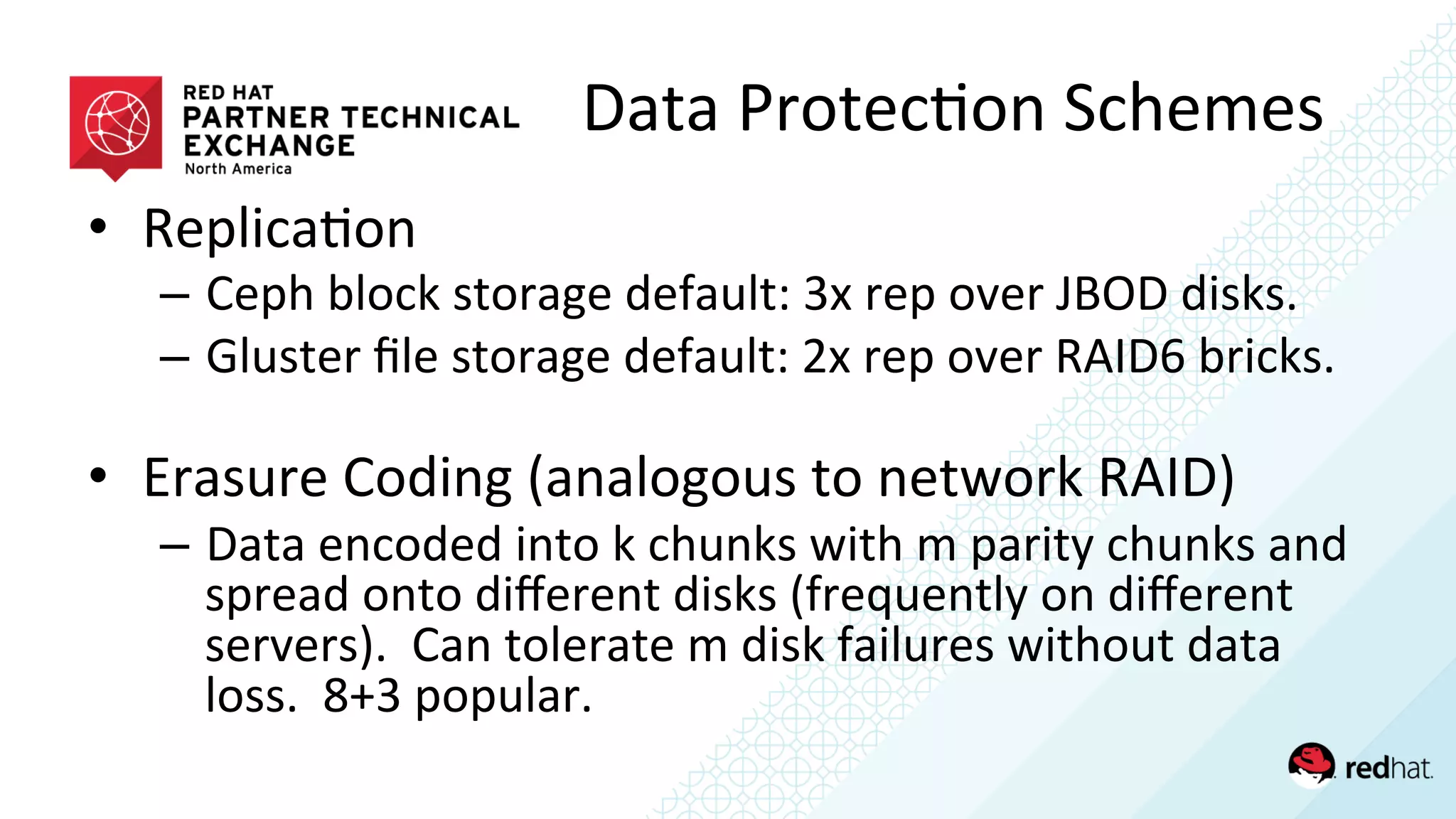

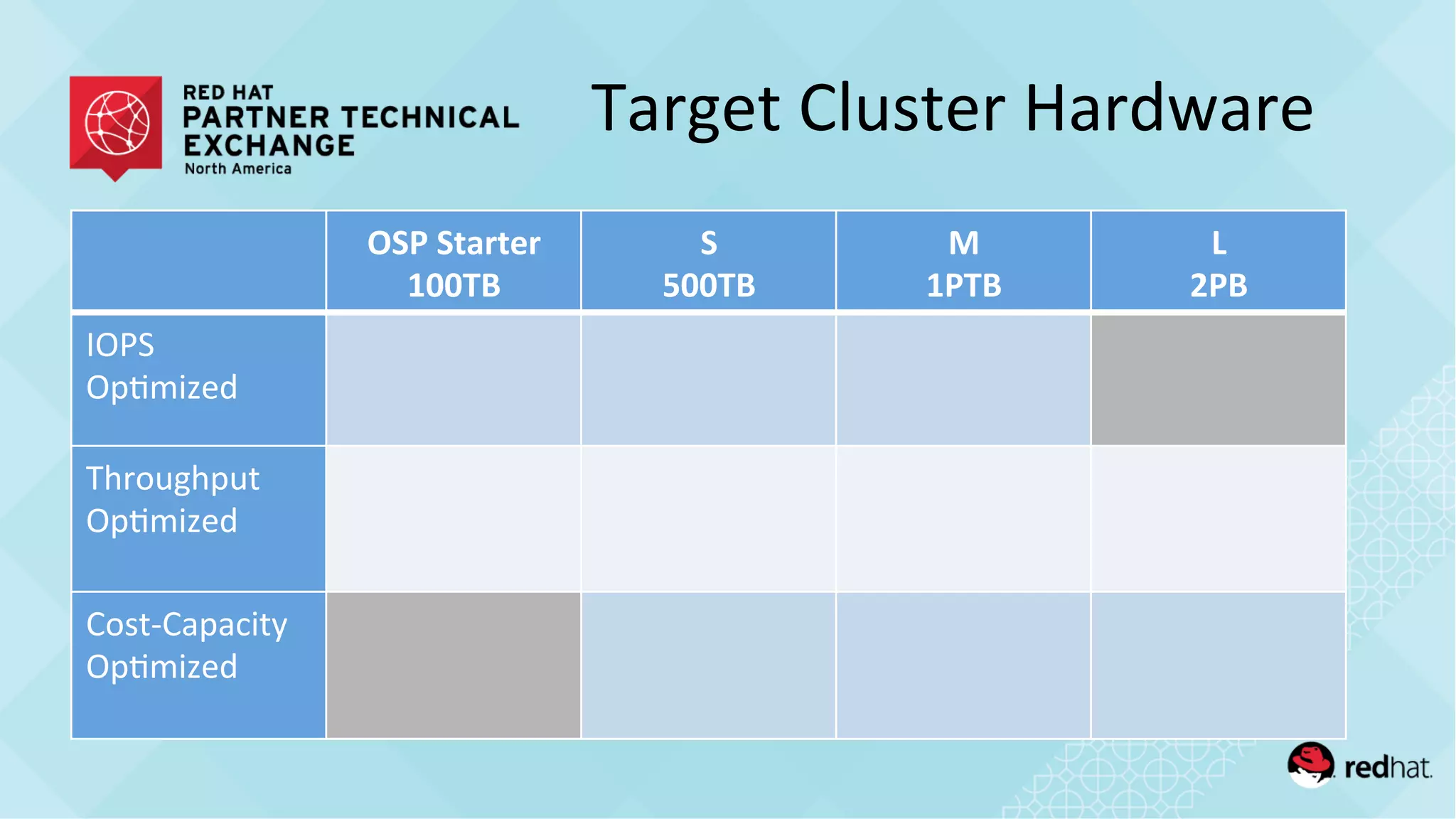

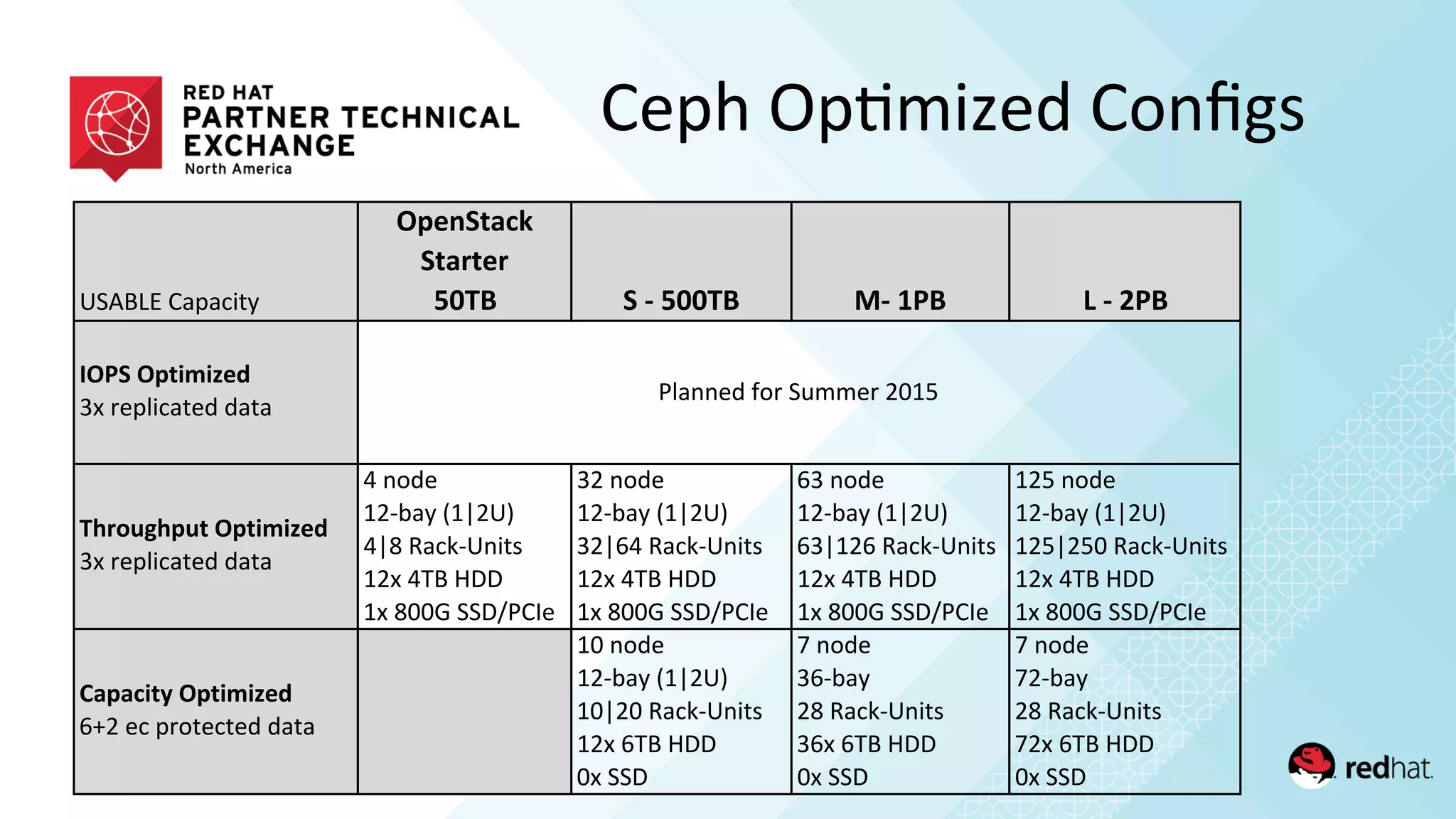

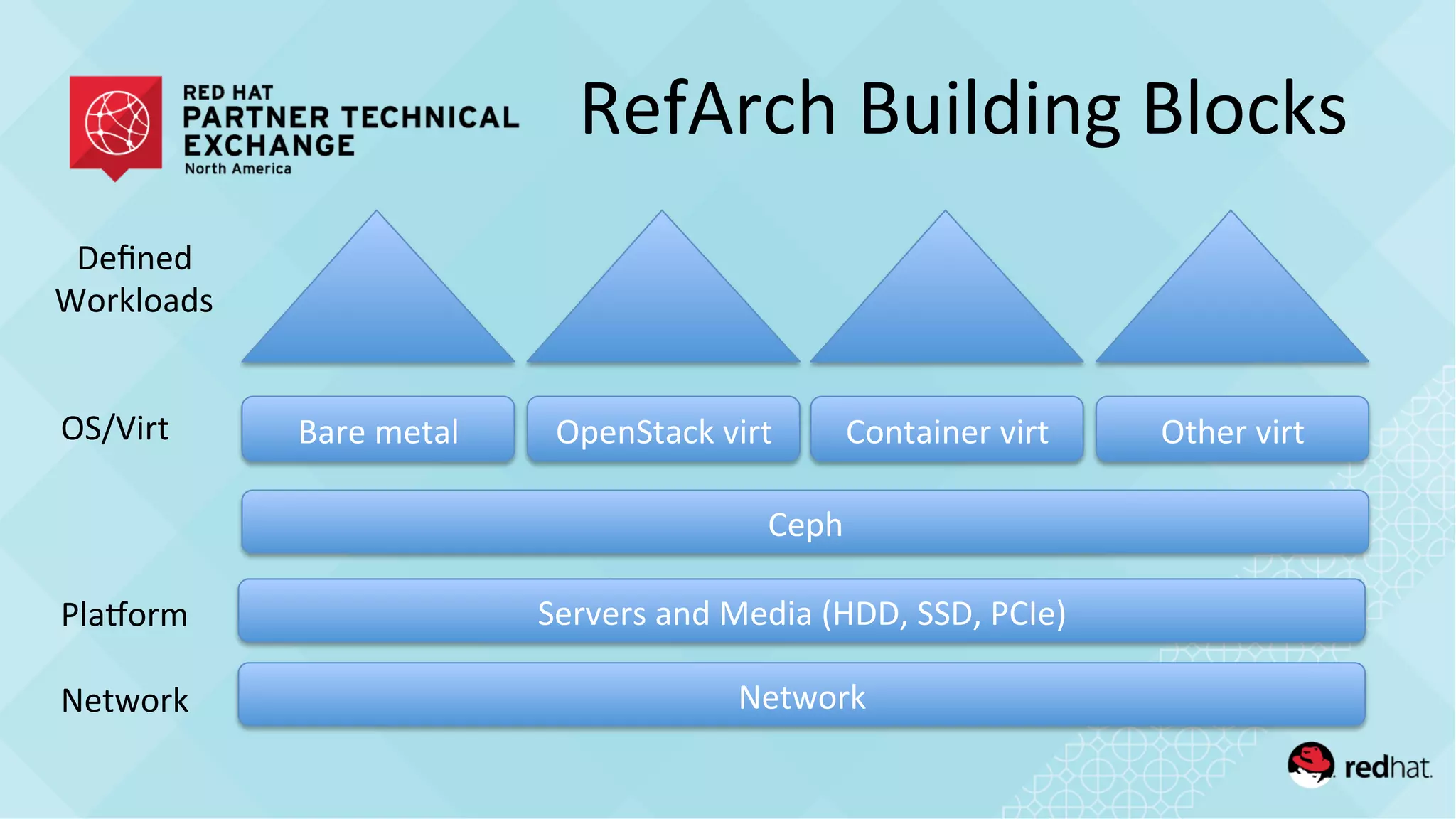

This document discusses reference architectures for Ceph storage solutions. It provides guidance on key design considerations for Ceph clusters, including workload profiling, storage access methods, capacity planning, fault tolerance, and data protection schemes. Example hardware configurations are also presented for different performance and cost optimization targets.

![RefArch

Flavors

• How-‐to

integra/on

guides

(Ceph+OS/Virt,

or

Ceph+OS/Virt+Workloads)

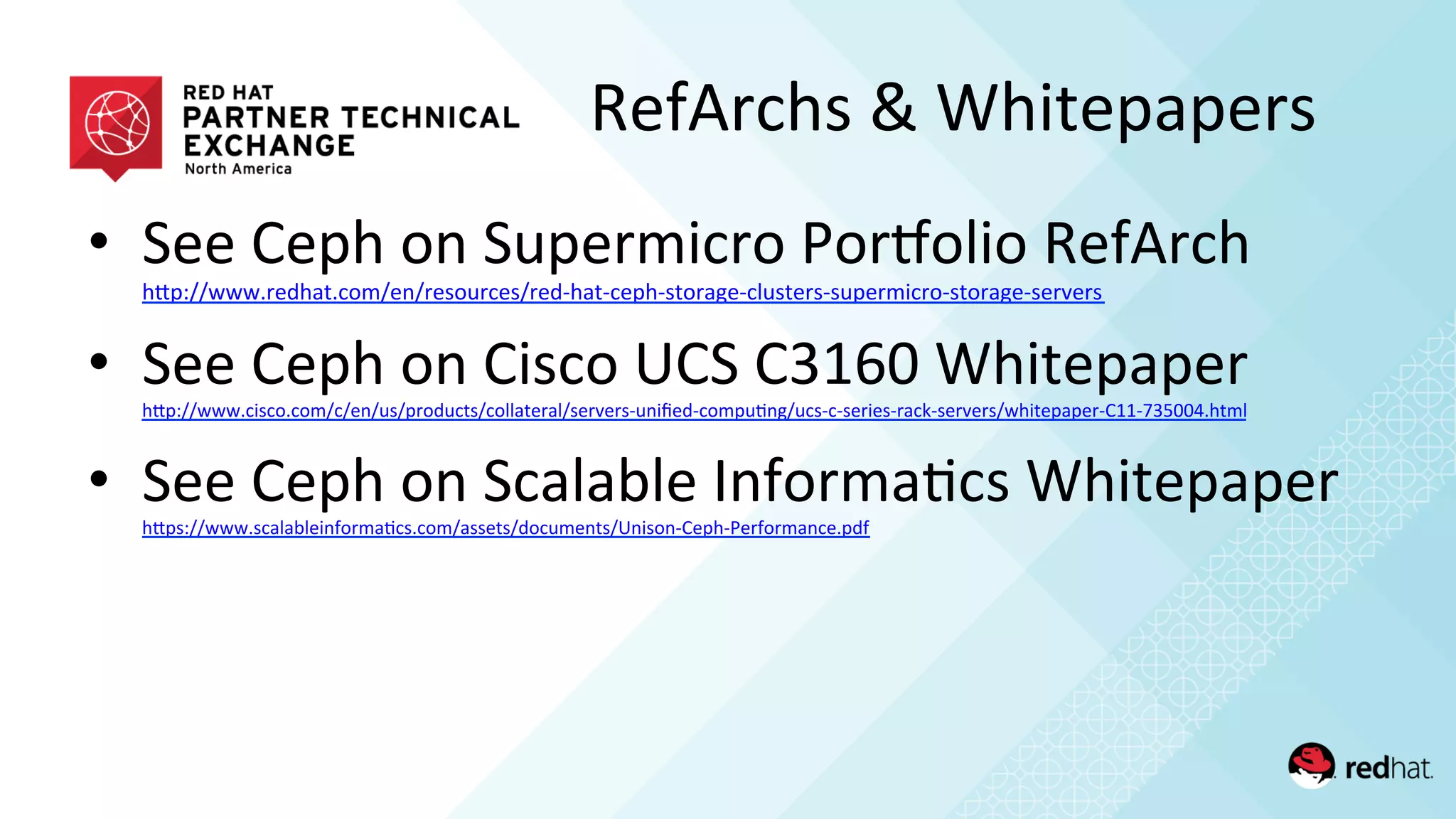

hQp://www.dell.com/learn/us/en/04/shared-‐content~data-‐sheets~en/documents~dell-‐red-‐hat-‐cloud-‐

solu/ons.pdf

• Performance

and

sizing

guides

(Network+Server+Ceph+[OS/Virt])

hQp://www.redhat.com/en/resources/red-‐hat-‐ceph-‐storage-‐clusters-‐supermicro-‐storage-‐servers

hQps://www.redhat.com/en/resources/cisco-‐ucs-‐c3160-‐rack-‐server-‐red-‐hat-‐ceph-‐storage

hQps://www.scalableinforma/cs.com/assets/documents/Unison-‐Ceph-‐Performance.pdf](https://image.slidesharecdn.com/archcephsolutionsv7-150924215217-lva1-app6891/75/Reference-Architecture-Architecting-Ceph-Storage-Solutions-3-2048.jpg)