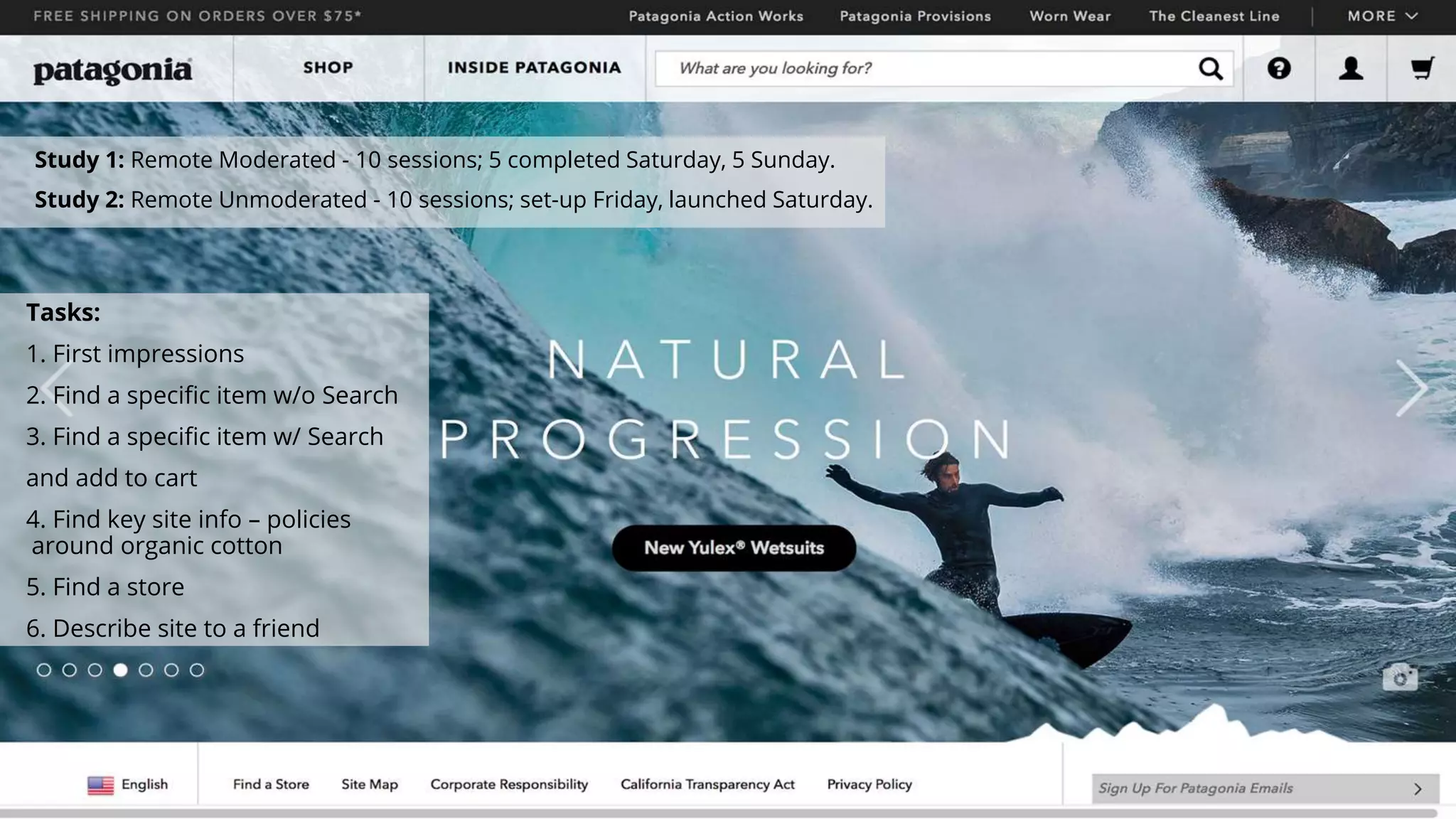

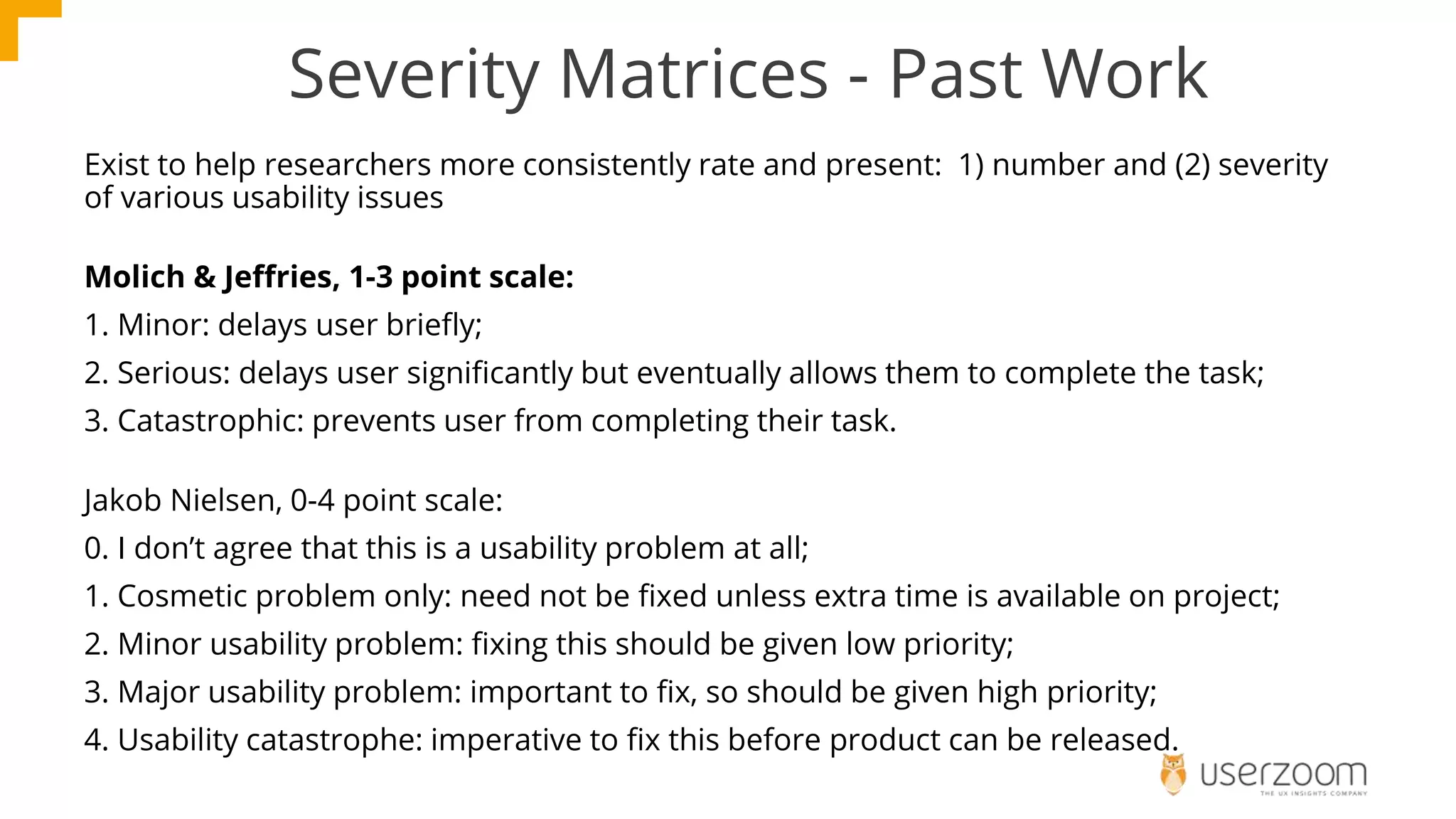

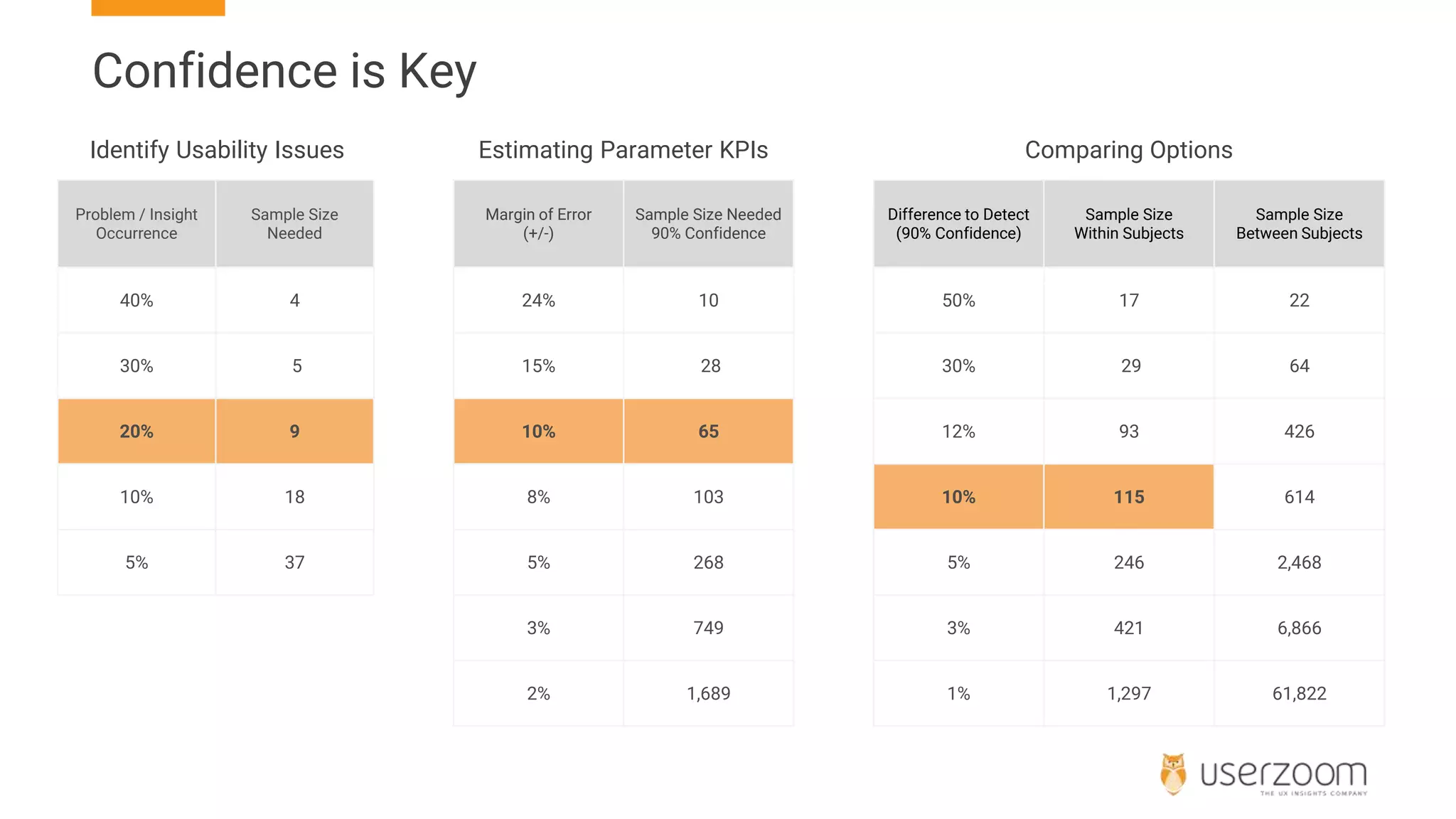

This webinar compares remote unmoderated and remote moderated user research, examining how well each method uncovers usability issues across two studies conducted in remote sessions. The key finding is that moderated sessions identify 20% more issues, though both methods have practical limits on time and cost. The recommendation is to use moderated tests for comprehensive issue identification while acknowledging that unmoderated tests have a role in practical scenarios.

![Most & Least problematic Tasks

Most problematic task: Task 4 (Find key site info – policies around organic cotton):

• Number of issues: Most participants had the same difficulty with:

1. The first click

2. Navigating successive pages on the site with no clear site-level guidance

3. Understanding the mass of text about Patagonia’s policies around organic cotton

• Severity of issues: Most participants had level 2 serious problems with this task – these were problems that delay “user[s] significantly but eventually allows them to complete the task.”

Least problematic task: Task 5 (Find a store):

• Number of issues: Understanding the meaning of the different-colored location pins.

• Severity of issues: A few participants, for each method, had level 1 minor problems with this task – that delayed the “user briefly.”](https://image.slidesharecdn.com/timetosayelmo-uxresearchshowdownremotemoderatedversusremoteunmoderateduserresearch-200124102143/75/Moderated-vs-Unmoderated-Research-It-s-time-to-say-ELMO-Enough-let-s-move-on-25-2048.jpg)