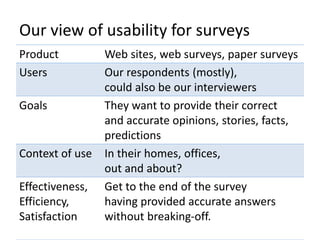

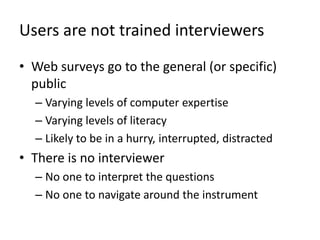

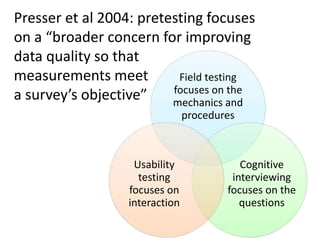

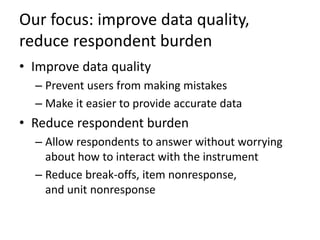

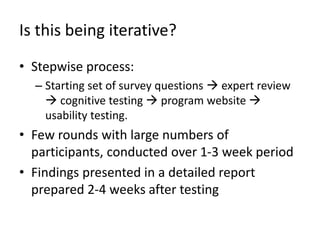

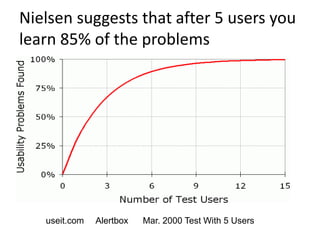

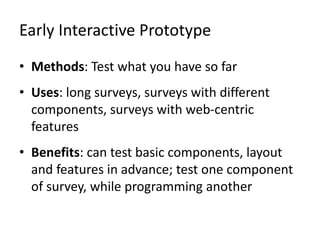

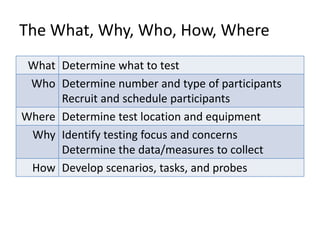

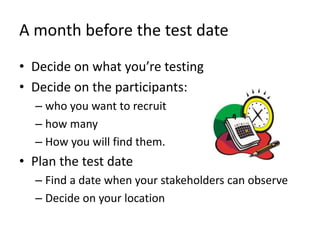

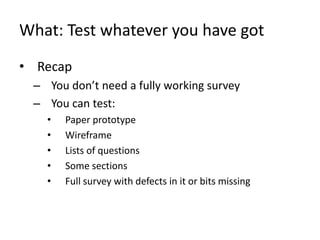

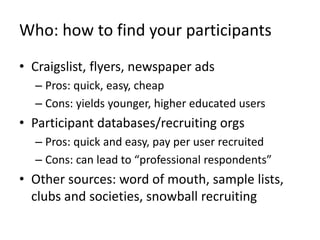

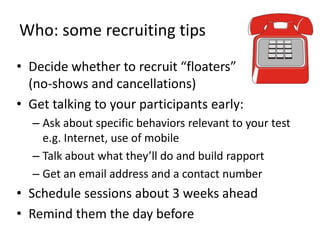

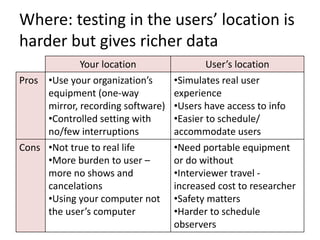

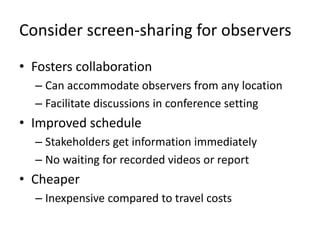

This document provides guidance on planning and preparing for usability testing of surveys. It covers which aspects of a survey to test, whom to recruit as participants, and where to conduct the testing. Key recommendations include deciding what to test at least a month before testing begins, recruiting 5-10 participants who represent the intended users, and testing in several rounds with revisions between them rather than in one large test. Testing can take place either at the organization conducting the test or in participants' natural environments.