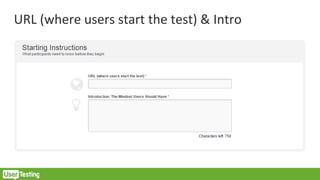

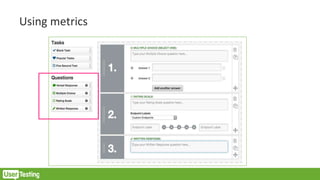

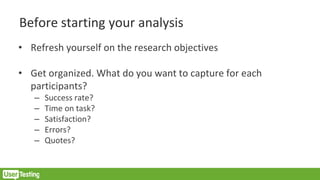

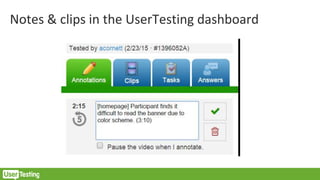

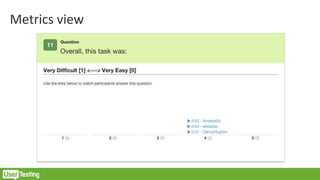

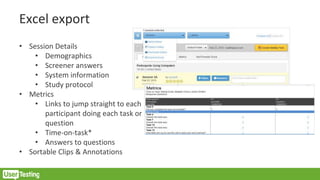

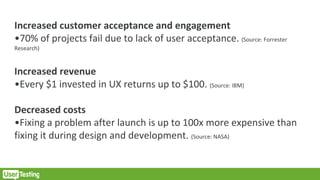

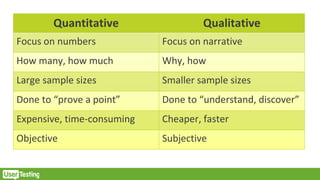

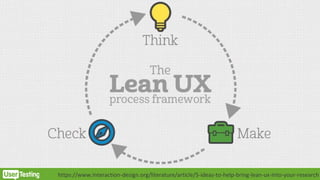

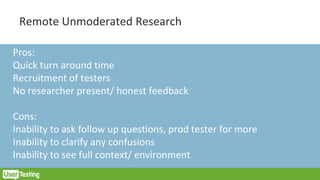

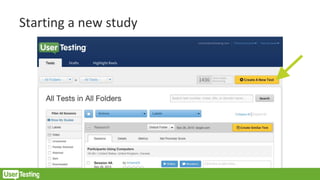

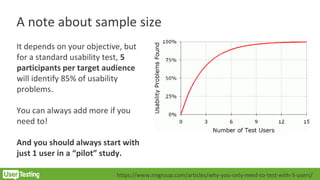

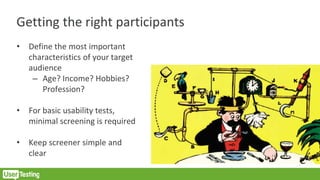

This document introduces Lean UX and UserTesting. It defines UX and Lean UX, discusses benefits of user testing such as increased revenue and decreased costs, and outlines the UserTesting process: defining objectives, writing tasks, analyzing results, and using metrics and notes. UserTesting supports remote, unmoderated usability testing of digital products through video recordings of testers interacting with designs. The document closes with tips for writing effective screener questions.

![Tip: Avoid leading questions and yes/no answers](https://image.slidesharecdn.com/introtoleanuxwithusertesting-160331234520/85/Intro-to-Lean-UX-with-UserTesting-24-320.jpg)

Bad

- Do you prefer organic cotton sheets?
  - Yes [Accept]
  - No

Good

- What kind of sheets do you prefer?
  - Organic cotton [Accept]
  - Cotton
  - Sateen
  - Synthetic
  - Polyester
  - Silk
  - Jersey
  - Other

![Tip: Use multiple, separate questions](https://image.slidesharecdn.com/introtoleanuxwithusertesting-160331234520/85/Intro-to-Lean-UX-with-UserTesting-25-320.jpg)

Bad

- How often do you exercise on a treadmill?
  - Less than 1 time a week
  - 1-2 times a week
  - 3+ times a week [Accept]

Good

- How often do you exercise?
  - Less than 1 time a week
  - 1-2 times a week
  - 3+ times a week [Accept]
- What kind of exercise do you do most often?
  - Yoga
  - Elliptical
  - Treadmill [Accept]
  - Free Weights
  - Other

![Tip: Give participants an “out”](https://image.slidesharecdn.com/introtoleanuxwithusertesting-160331234520/85/Intro-to-Lean-UX-with-UserTesting-26-320.jpg)

Bad

- What is your marital status?
  - Married [Accept]
  - Not married

Good

- What is your marital status?
  - Married [Accept]
  - Not married
  - Other
  - I prefer not to say
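The three tips can be sketched as a small data model with a checker. This is only an illustration: `ScreenerQuestion`, `lint`, and `passes_screener` are hypothetical names, not part of any UserTesting API, and the list of opt-out phrases is an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class ScreenerQuestion:
    """One multiple-choice screener question (hypothetical model)."""
    text: str
    options: list[str]
    accept: set[str] = field(default_factory=set)  # answers marked [Accept]

    def qualifies(self, answer: str) -> bool:
        if answer not in self.options:
            raise ValueError(f"{answer!r} is not an option for {self.text!r}")
        return answer in self.accept


# Assumed opt-out phrasings; extend as needed.
OPT_OUTS = {"other", "i prefer not to say", "none of the above"}


def lint(question: ScreenerQuestion) -> list[str]:
    """Flag two of the problems described in the tips above."""
    lowered = {option.lower() for option in question.options}
    warnings = []
    if lowered == {"yes", "no"}:
        warnings.append("yes/no question")
    if not lowered & OPT_OUTS:
        warnings.append("no opt-out option")
    return warnings


def passes_screener(questions: list[ScreenerQuestion], answers: list[str]) -> bool:
    """A participant passes only if every answer is an accepted one."""
    return all(q.qualifies(a) for q, a in zip(questions, answers))


bad = ScreenerQuestion("Do you prefer organic cotton sheets?", ["Yes", "No"], {"Yes"})
good = ScreenerQuestion(
    "What kind of sheets do you prefer?",
    ["Organic cotton", "Cotton", "Sateen", "Synthetic",
     "Polyester", "Silk", "Jersey", "Other"],
    {"Organic cotton"},
)

# Splitting "How often do you exercise on a treadmill?" into two questions.
how_often = ScreenerQuestion(
    "How often do you exercise?",
    ["Less than 1 time a week", "1-2 times a week", "3+ times a week"],
    {"3+ times a week"},
)
what_kind = ScreenerQuestion(
    "What kind of exercise do you do most often?",
    ["Yoga", "Elliptical", "Treadmill", "Free Weights", "Other"],
    {"Treadmill"},
)

print(lint(bad))   # ['yes/no question', 'no opt-out option']
print(lint(good))  # []
print(passes_screener([how_often, what_kind], ["3+ times a week", "Treadmill"]))  # True
```

Modelling the accepted answers separately from the option list keeps the question wording neutral: the participant sees the same set of choices regardless of which ones qualify.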