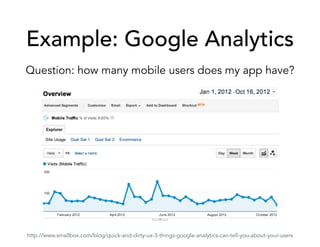

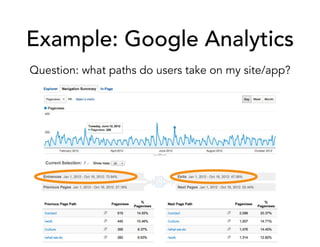

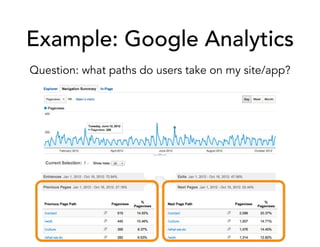

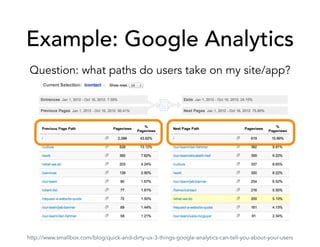

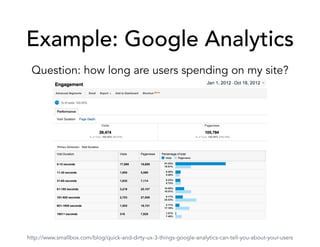

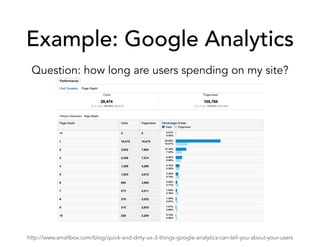

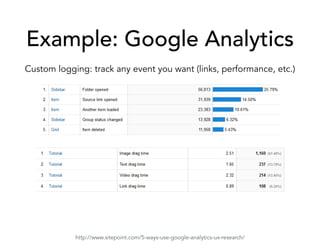

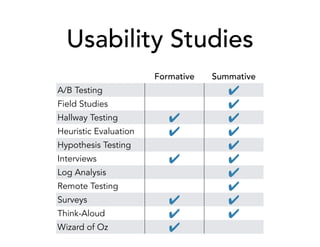

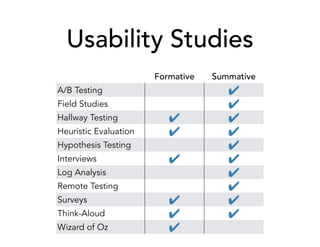

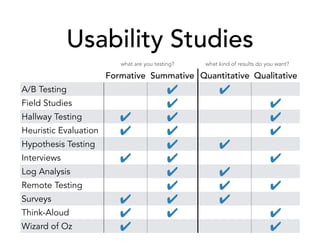

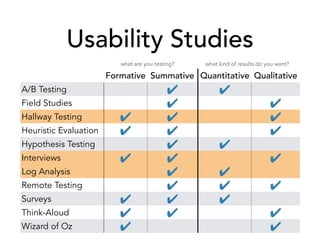

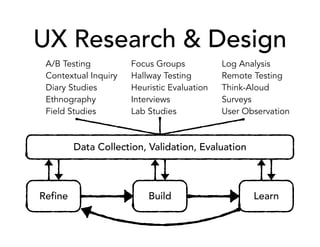

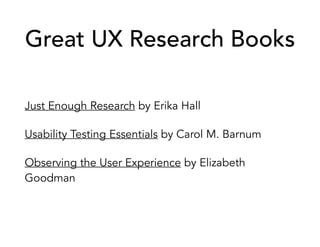

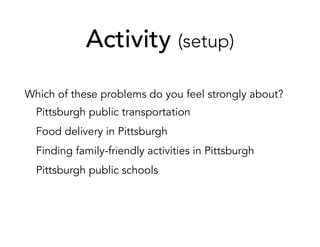

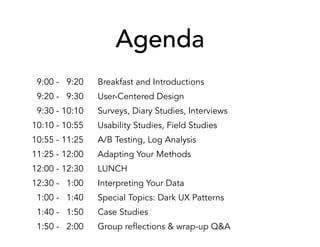

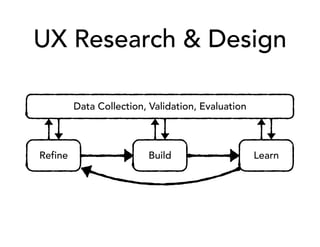

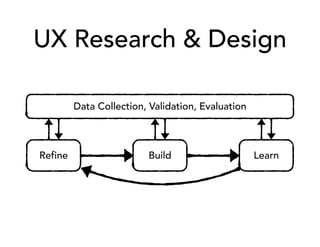

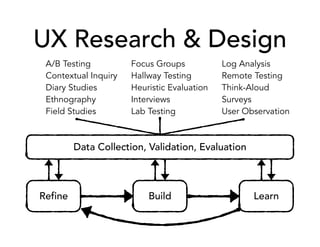

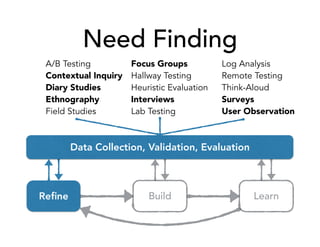

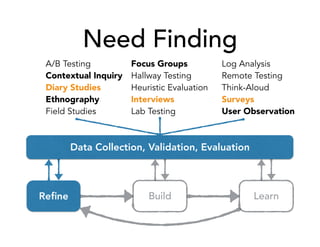

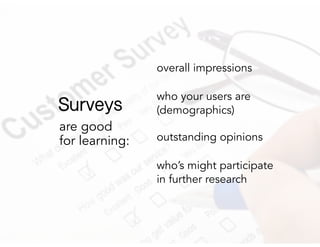

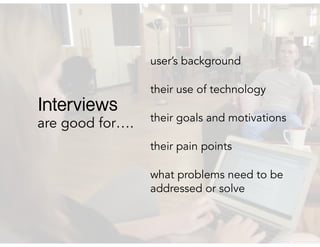

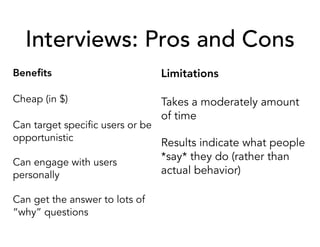

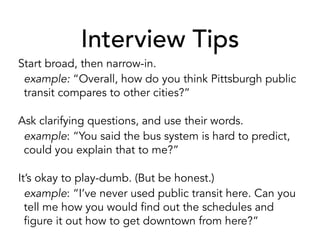

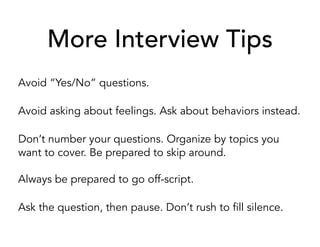

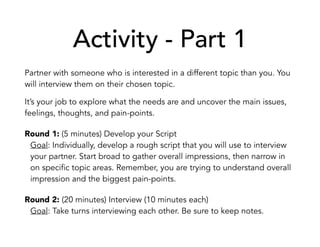

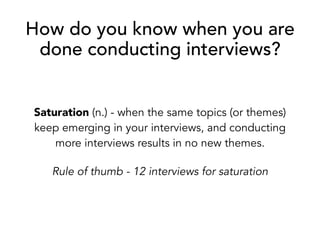

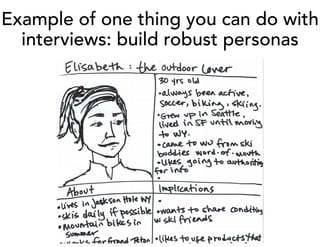

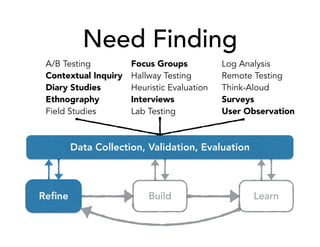

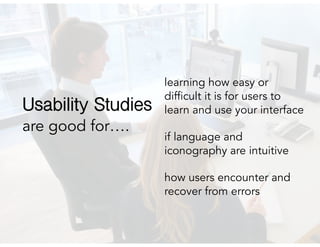

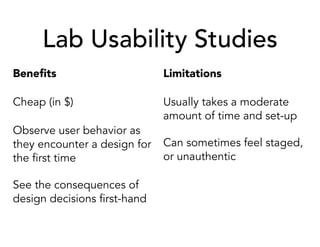

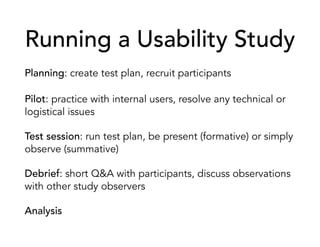

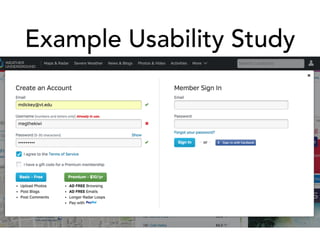

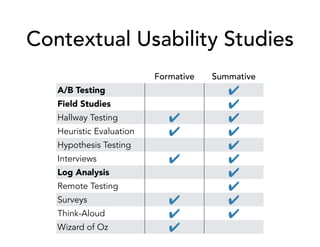

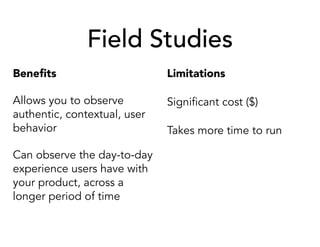

The document outlines a workshop on UI/UX foundations that focuses on research and analysis. Its agenda and activities are designed to build participants' confidence in engaging with UX research. Key topics include user-centered design principles, UX research methods such as surveys and interviews, and practical activities for gathering user insights. The workshop also emphasizes evaluating research data critically and applying the findings in the design process.

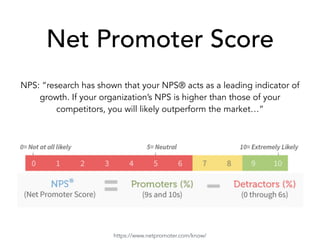

![Common Survey Example: "How likely is it that you'd recommend [brand] to a friend?" rated on a scale from 1 to 10, labeled "Not at all likely", "Neutral", and "Very likely"](https://image.slidesharecdn.com/uiuxfoundations-research-151014133855-lva1-app6891/85/UI-UX-Foundations-Research-16-320.jpg)

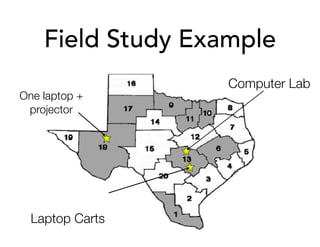

![Field Study Example: [00:24:19.08] Boy says to the girl on his right: "you cheating" [00:24:21.19] Girl to the left: "what? It's fun. ::mumble:: the simulation. Look." [00:24:25.21] The boy looks to the girl on his left, then back to the girl on the right, then down to his workbook in front of him. He puts his head on the table.](https://image.slidesharecdn.com/uiuxfoundations-research-151014133855-lva1-app6891/85/UI-UX-Foundations-Research-50-320.jpg)