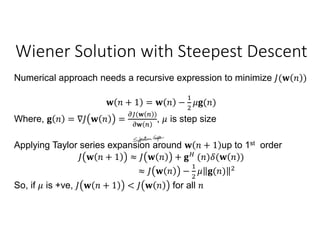

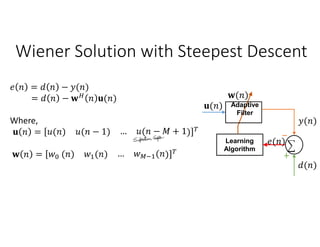

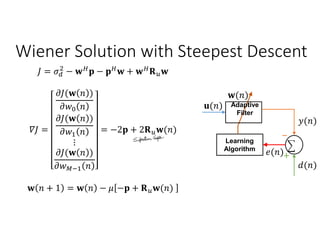

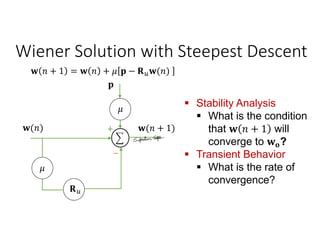

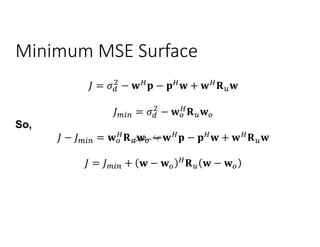

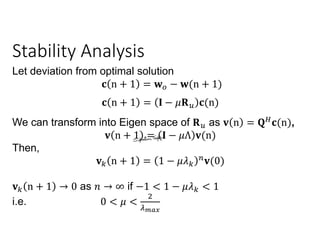

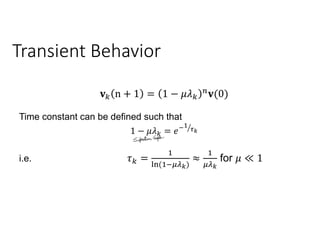

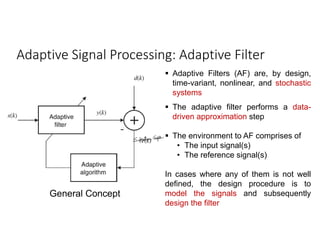

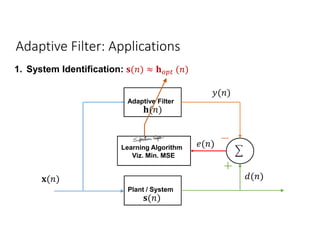

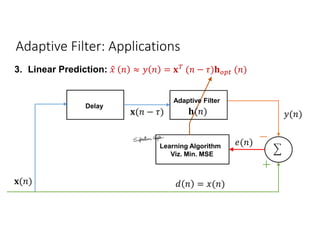

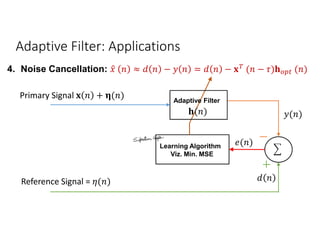

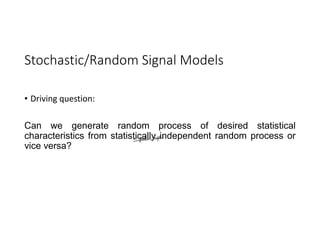

Adaptive filters are time-variant, nonlinear, stochastic systems that learn from data, adjusting their coefficients to minimize an objective function. The chapter discusses adaptive filter applications such as system identification, inverse modeling, linear prediction, and noise cancellation. It also covers stochastic signal models, optimum linear filtering (Wiener filtering), and the solution of the Wiener-Hopf equations. Numerical techniques such as steepest descent are presented for minimizing the mean-square error (MSE) of an adaptive filter, together with a stability and convergence analysis of the steepest-descent method.
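The summary mentions steepest descent for minimizing the mean-square error and its stability analysis. As a minimal sketch, assuming real-valued data with a known correlation matrix $\mathbf{R}$ and cross-correlation vector $\mathbf{p}$ (the numbers below are hypothetical, not taken from the notes), the update $\mathbf{w}(n+1) = \mathbf{w}(n) + \mu[\mathbf{p} - \mathbf{R}\mathbf{w}(n)]$ converges to the Wiener solution $\mathbf{R}^{-1}\mathbf{p}$ when $0 < \mu < 2/\lambda_{\max}$ and can diverge when $\mu$ exceeds $2/\lambda_{\max}$. The helper names `steepest_descent` and `mat_vec` are illustrative.

```python
# Steepest descent on the real-valued quadratic MSE surface
#   J(w) = sigma_d^2 - 2 w^T p + w^T R w
# Update: w <- w + mu * (p - R w); the gradient of J is -2 (p - R w),
# and the factor 2 is absorbed into the step size mu.


def mat_vec(R, w):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(r_ij * w_j for r_ij, w_j in zip(row, w)) for row in R]


def steepest_descent(R, p, mu, n_iter, w0=None):
    """Run n_iter steepest-descent steps and return the final weights."""
    w = list(w0) if w0 is not None else [0.0] * len(p)
    for _ in range(n_iter):
        Rw = mat_vec(R, w)
        # Step against the gradient of J.
        w = [w_i + mu * (p_i - rw_i) for w_i, p_i, rw_i in zip(w, p, Rw)]
    return w


# Hypothetical values: R has eigenvalues 1 and 3, so the stable range is 0 < mu < 2/3.
R = [[2.0, 1.0], [1.0, 2.0]]
p = [3.0, 3.0]  # Wiener solution R^{-1} p = [1, 1]

w_ok = steepest_descent(R, p, mu=0.3, n_iter=200)   # inside the stable range
w_bad = steepest_descent(R, p, mu=0.8, n_iter=200)  # mu > 2/3: error grows each step
print(w_ok)
print(w_bad)
```

Each eigenmode of the weight error is scaled by $1 - \mu\lambda_k$ per step, so with the eigenvalues 1 and 3 used here, $\mu = 0.3$ gives factors 0.7 and 0.1, while $\mu = 0.8$ gives a factor of $-1.4$ for the $\lambda = 3$ mode.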

![Adaptive Signal Processing: Adaptive Algorithm, General Concept](https://image.slidesharecdn.com/lecturenotesonadaptivesignalprocessing-1-220912172526-ff2361af/85/Lecture-Notes-on-Adaptive-Signal-Processing-1-pdf-3-320.jpg)

Adaptive Signal Processing: Adaptive Algorithm

General Concept

The basic objective of the adaptive (learning) algorithm is to set the adaptive filter coefficients (parameters) so as to minimize a meaningful objective function involving the input signal $x(n)$, the reference signal $d(n)$, and the adaptive filter output signal $y(n)$.

The meaningful objective function $F$ should be:

• Non-negative: $F[x(n), d(n), y(n)] \ge 0$ for all $x(n)$, $d(n)$, $y(n)$;

• Optimal: $F[x(n), d(n), d(n)] = 0$, i.e., $F$ vanishes when the output $y(n)$ equals the reference $d(n)$.
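To make the two required properties concrete, here is a sketch assuming the common squared-error choice $F = \sum_n |d(n) - y(n)|^2$, with input $x(n)$, reference $d(n)$, and output $y(n)$. The notes do not fix a particular objective at this point, and the function name is hypothetical. The input $x(n)$ enters only through the filter output $y(n)$.

```python
# Hypothetical squared-error objective F = sum_n |d(n) - y(n)|^2.
# abs() makes it valid for real or complex samples.


def squared_error_objective(d, y):
    """Return the sum of |d(n) - y(n)|^2 over a block of samples."""
    if len(d) != len(y):
        raise ValueError("d and y must have the same length")
    return sum(abs(d_n - y_n) ** 2 for d_n, y_n in zip(d, y))


d = [1.0, -0.5, 2.0]     # reference (desired) signal
y_good = list(d)         # filter output equal to the reference
y_off = [0.9, -0.4, 2.5]  # filter output with some error

print(squared_error_objective(d, y_good))  # 0.0 (optimality)
print(squared_error_objective(d, y_off))   # a positive value (non-negativity)
```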

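![Stochastic Models: General or Auto-regressive Moving Average (ARMA) Model](https://image.slidesharecdn.com/lecturenotesonadaptivesignalprocessing-1-220912172526-ff2361af/85/Lecture-Notes-on-Adaptive-Signal-Processing-1-pdf-9-320.jpg)

Stochastic Models

• General or Auto-regressive Moving Average (ARMA) Model: a white-noise input $v[n]$ drives a pole-zero filter with coefficients $b_k$ and $a_k$, producing the output $x[n]$:

$$x[n] = \sum_{k=0}^{M} b_k\, v[n-k] - \sum_{k=1}^{N} a_k\, x[n-k]$$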
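An ARMA process of the form $x[n] = \sum_{k=0}^{M} b_k v[n-k] - \sum_{k=1}^{N} a_k x[n-k]$ can be simulated directly from its difference equation. A minimal sketch, assuming zero initial conditions, real-valued coefficients, and the hypothetical helper name `arma_filter`: driving it with a unit impulse gives the filter's impulse response, and driving it with white Gaussian noise gives one realization of the process.

```python
import random


def arma_filter(b, a, v):
    """x[n] = sum_{k=0}^{M} b[k] v[n-k] - sum_{k=1}^{N} a[k-1] x[n-k].

    b = [b_0, ..., b_M] (moving-average part), a = [a_1, ..., a_N]
    (auto-regressive part); samples before n = 0 are taken as zero.
    """
    x = []
    for n in range(len(v)):
        ma_part = sum(b[k] * v[n - k] for k in range(len(b)) if n - k >= 0)
        ar_part = sum(a[k - 1] * x[n - k] for k in range(1, len(a) + 1) if n - k >= 0)
        x.append(ma_part - ar_part)
    return x


# Impulse response of an ARMA(1,1) filter with b = [1, 0.5], a_1 = -0.5.
print(arma_filter([1.0, 0.5], [-0.5], [1.0, 0.0, 0.0, 0.0]))  # [1.0, 1.0, 0.5, 0.25]

# One realization driven by unit-variance white Gaussian noise.
rng = random.Random(0)
v = [rng.gauss(0.0, 1.0) for _ in range(1000)]
x = arma_filter([1.0, 0.5], [-0.5], v)
```

Setting `a = []` reduces the function to the MA case, and `b = [1.0]` reduces it to the AR case.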

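![Stochastic Models: Moving Average (MA) Model](https://image.slidesharecdn.com/lecturenotesonadaptivesignalprocessing-1-220912172526-ff2361af/85/Lecture-Notes-on-Adaptive-Signal-Processing-1-pdf-10-320.jpg)

• Moving Average (MA) Model: the white-noise input $v[n]$ passes through an all-zero (FIR) filter with coefficients $h_k$:

$$x[n] = \sum_{k=0}^{M} h_k\, v[n-k]$$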
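A property that distinguishes the MA model: for an order-$M$ model $x[n] = \sum_{k=0}^{M} h_k v[n-k]$ driven by white noise of variance $\sigma_v^2$, the output autocorrelation is $r(m) = \sigma_v^2 \sum_{k=0}^{M-|m|} h_k h_{k+|m|}$, which is exactly zero for $|m| > M$. The sketch below evaluates this formula, assuming real-valued coefficients and a hypothetical function name.

```python
def ma_autocorrelation(h, var_v, m):
    """Exact autocorrelation r(m) of a real MA process with taps h = [h_0, ..., h_M]."""
    m = abs(m)  # r(-m) = r(m) for real data
    if m >= len(h):
        return 0.0  # finite correlation length: zero beyond lag M
    return var_v * sum(h[k] * h[k + m] for k in range(len(h) - m))


h = [1.0, 0.5]
print([ma_autocorrelation(h, 1.0, m) for m in range(4)])  # [1.25, 0.5, 0.0, 0.0]
```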

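![Stochastic Models: Auto-regressive (AR) Model](https://image.slidesharecdn.com/lecturenotesonadaptivesignalprocessing-1-220912172526-ff2361af/85/Lecture-Notes-on-Adaptive-Signal-Processing-1-pdf-11-320.jpg)

• Auto-regressive (AR) Model: the white-noise input $v[n]$ drives an all-pole filter with coefficients $h_k$:

$$x[n] = -\sum_{k=1}^{N} h_k\, x[n-k] + v[n]$$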
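A first-order AR model already shows the link between the coefficients and the autocorrelation: with $x[n] = -h_1 x[n-1] + v[n]$, $|h_1| < 1$, and white $v[n]$ of variance $\sigma_v^2$, the autocorrelation is $r(m) = \sigma_v^2 (-h_1)^{|m|} / (1 - h_1^2)$. The sketch below, assuming real-valued Gaussian noise and hypothetical function names, simulates one realization and compares the biased sample autocorrelation with this formula.

```python
import random


def simulate_ar1(h1, var_v, n_samples, seed=0):
    """Generate x[n] = -h1 * x[n-1] + v[n] with zero initial state."""
    rng = random.Random(seed)
    sigma = var_v ** 0.5
    x, prev = [], 0.0
    for _ in range(n_samples):
        prev = -h1 * prev + rng.gauss(0.0, sigma)
        x.append(prev)
    return x


def sample_autocorrelation(x, m):
    """Biased estimate (1/N) * sum_n x[n] x[n-m] for real data, m >= 0."""
    n = len(x)
    return sum(x[i] * x[i - m] for i in range(m, n)) / n


def ar1_autocorrelation(h1, var_v, m):
    """Theoretical r(m) = var_v * (-h1)^|m| / (1 - h1^2), valid for |h1| < 1."""
    return var_v * (-h1) ** abs(m) / (1.0 - h1 * h1)


h1, var_v = -0.5, 1.0
x = simulate_ar1(h1, var_v, 100_000)
for m in range(3):
    # The estimate should be close to, but not exactly equal to, the theory.
    print(m, sample_autocorrelation(x, m), ar1_autocorrelation(h1, var_v, m))
```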

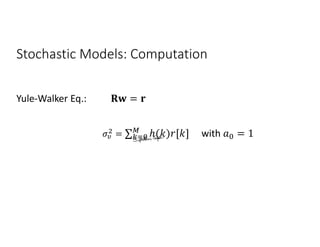

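![Stochastic Models: Computation](https://image.slidesharecdn.com/lecturenotesonadaptivesignalprocessing-1-220912172526-ff2361af/85/Lecture-Notes-on-Adaptive-Signal-Processing-1-pdf-12-320.jpg)

Stochastic Models: Computation

• Given the desired autocorrelation sequence $r(m)$ for $m = 0, 1, 2, \ldots, N$, determine the coefficients $h_k$ and the input process variance $\sigma_v^2$ of the AR model, written with $h_0 = 1$ as

$$\sum_{k=0}^{N} h_k\, x[n-k] = v[n]$$

Yule-Walker equations:

$$\sum_{k=0}^{N} h_k^*\, r(m-k) = 0 \quad \text{for } m > 0$$

$$\sum_{k=0}^{N} h_k^*\, r(-k) = \sigma_v^2 \quad \text{for } m = 0$$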
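The Yule-Walker equations $\sum_{k=0}^{N} h_k^* r(m-k) = 0$ for $m = 1, \ldots, N$ (with $h_0 = 1$) form a Toeplitz linear system, which can be solved efficiently with the Levinson-Durbin recursion, a standard solver the slide does not name. A minimal sketch for real-valued data, where $h_k^* = h_k$ and $r(-k) = r(k)$; the function name is hypothetical. It returns $[h_0, \ldots, h_N]$ together with $\sigma_v^2 = \sum_{k=0}^{N} h_k r(k)$.

```python
def levinson_durbin(r, order):
    """Solve the real Yule-Walker equations.

    r = [r(0), ..., r(N)]; returns ([h_0, ..., h_N], sigma_v^2) with h_0 = 1.
    """
    if len(r) < order + 1:
        raise ValueError("need r(0), ..., r(order)")
    h = [1.0]
    err = r[0]  # prediction-error power of the order-0 model
    for m in range(1, order + 1):
        # Residual of the m-th Yule-Walker equation for the current model.
        acc = sum(h[k] * r[m - k] for k in range(m))
        kappa = -acc / err  # reflection coefficient
        prev = h + [0.0]
        # Order update: h_k <- h_k + kappa * h_{m-k}, and h_m = kappa.
        h = [prev[k] + kappa * prev[m - k] for k in range(m + 1)]
        err *= 1.0 - kappa * kappa
    return h, err


print(levinson_durbin([1.0, 0.5, 0.25], 2))  # ([1.0, -0.5, 0.0], 0.75)
```

Here $r(m) = 0.5^{|m|}$ is exactly the autocorrelation of an AR(1) process, so the second-order fit returns $h_2 = 0$; substituting the result back into both Yule-Walker equations gives $0$ for $m = 1, 2$ and $\sigma_v^2 = 0.75$ for $m = 0$.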

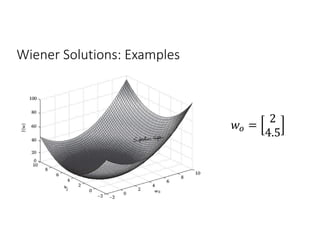

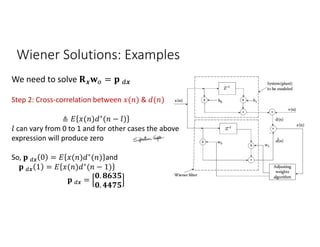

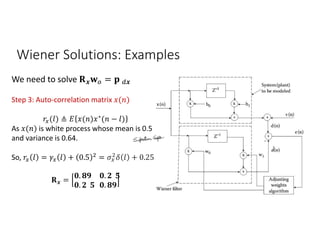

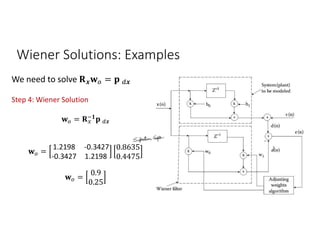

![Wiener Solutions: Examples](https://image.slidesharecdn.com/lecturenotesonadaptivesignalprocessing-1-220912172526-ff2361af/85/Lecture-Notes-on-Adaptive-Signal-Processing-1-pdf-23-320.jpg)

Wiener Solutions: Examples

Consider the sample autocorrelation coefficients ($r_x(0) = 1.0$, $r_x(1) = 0$) computed from given data $x(n)$, which contain the desired signal in addition to noise. Furthermore, assume that the variance of the desired signal is $\sigma_d^2 = 24.40$ and that the cross-correlation vector is $\mathbf{p} = [2 \;\; 4.5]^T$. It is desired to find the surface defined by the mean-square error function $J(\mathbf{w})$.
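With $r_x(0) = 1$ and $r_x(1) = 0$, the $2 \times 2$ autocorrelation matrix is the identity, so the error surface is $J(\mathbf{w}) = \sigma_d^2 - 2\mathbf{w}^T\mathbf{p} + \mathbf{w}^T\mathbf{R}\mathbf{w} = 24.40 - 4w_0 - 9w_1 + w_0^2 + w_1^2$. This is a paraboloid with its minimum at the Wiener solution $\mathbf{w}_o = \mathbf{R}^{-1}\mathbf{p} = [2 \;\; 4.5]^T$, where $J_{\min} = \sigma_d^2 - \mathbf{p}^T\mathbf{w}_o = 24.40 - 24.25 = 0.15$. A sketch that evaluates the surface, assuming real-valued data and a two-tap filter (the names are hypothetical):

```python
SIGMA_D2 = 24.40               # variance of the desired signal
R = [[1.0, 0.0], [0.0, 1.0]]   # [[r(0), r(1)], [r(1), r(0)]]
P = [2.0, 4.5]                 # cross-correlation vector p


def mse(w):
    """J(w) = sigma_d^2 - 2 w^T p + w^T R w for a two-tap real filter."""
    wp = sum(wi * pi for wi, pi in zip(w, P))
    wRw = sum(w[i] * R[i][j] * w[j] for i in range(2) for j in range(2))
    return SIGMA_D2 - 2.0 * wp + wRw


def wiener_solution():
    """Solve the 2x2 Wiener-Hopf equations R w = p by Cramer's rule."""
    det = R[0][0] * R[1][1] - R[0][1] * R[1][0]
    w0 = (P[0] * R[1][1] - R[0][1] * P[1]) / det
    w1 = (R[0][0] * P[1] - R[1][0] * P[0]) / det
    return [w0, w1]


w_opt = wiener_solution()
print(w_opt)       # [2.0, 4.5]
print(mse(w_opt))  # approximately 0.15 (the minimum MSE)
```

Evaluating `mse` on a grid of $(w_0, w_1)$ values traces the bowl-shaped surface the example asks for.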