This document provides an overview of adaptive filters, including:

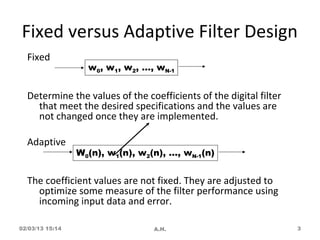

- Adaptive filters have coefficients that are adjusted based on input data to optimize performance, unlike fixed filters.

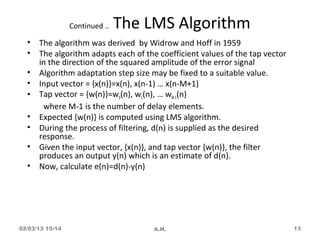

- The LMS algorithm is commonly used to adjust coefficients to minimize the mean square error between the filter output and a desired signal.

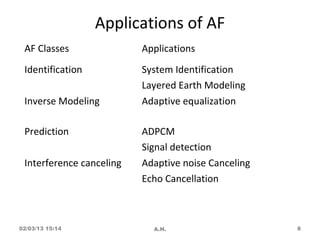

- Key applications of adaptive filters include noise cancellation, system identification, channel equalization, and echo cancellation.

![Introduction to Adaptive Filter [Efea 541]
• An adaptive filter is a digital filter with self-adjusting characteristics.
• It adapts automatically to changes in its input signals.
• A variety of recursive algorithms have been developed for the operation of adaptive filters, such as LMS and RLS.
02/03/13 15:14 A.H. 4](https://image.slidesharecdn.com/dsplecturevol-7adaptivefilter-130203091414-phpapp02/85/Dsp-lecture-vol-7-adaptive-filter-4-320.jpg)

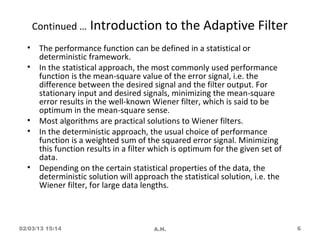

![Continued … Introduction to the Adaptive Filter [Far 2]
• The figure shows a filter, emphasizing the way it is used in typical problems.
• The filter reshapes certain input signals so that its output is a good estimate of the given desired signal.
• Selecting (in this case, adapting) the filter parameters (coefficients) to achieve the best match between the desired signal and the filter output is often done by optimizing an appropriately defined performance function.](https://image.slidesharecdn.com/dsplecturevol-7adaptivefilter-130203091414-phpapp02/85/Dsp-lecture-vol-7-adaptive-filter-5-320.jpg)
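One common performance function is the mean square error. This is the criterion the LMS algorithm targets, as noted in the overview. The minimal sketch below estimates it with a sample average over a finite record. It assumes a real-valued FIR (transversal) filter and treats input samples before index 0 as zero. The function names `fir_output` and `mse` and the example data are hypothetical.

```python
def fir_output(w, x, n):
    """Output at time n of an FIR filter with weights w (samples before index 0 are zero)."""
    return sum(w[i] * x[n - i] for i in range(len(w)) if n - i >= 0)


def mse(w, x, d):
    """Sample-average estimate of the mean square error between desired signal d and the filter output."""
    errors = [d[n] - fir_output(w, x, n) for n in range(len(x))]
    return sum(e * e for e in errors) / len(errors)


# Hypothetical example: the desired signal is generated by the weights [0.5, -0.25].
x = [1.0, 2.0, -1.0, 0.5, 3.0]
d = [fir_output([0.5, -0.25], x, n) for n in range(len(x))]
print(mse([0.5, -0.25], x, d))    # 0.0: these weights match d exactly
print(mse([0.0, 0.0], x, d) > 0)  # True: any other choice leaves a positive error
```

Adapting the filter means searching for the weights that make this quantity as small as possible.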

![Adaptive Filter Structure [Far 3]
$y(n) = \sum_{i=0}^{M-1} w_i(n) x(n-i)$](https://image.slidesharecdn.com/dsplecturevol-7adaptivefilter-130203091414-phpapp02/85/Dsp-lecture-vol-7-adaptive-filter-9-320.jpg)
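The structure equation $y(n) = \sum_{i=0}^{M-1} w_i(n) x(n-i)$ can be evaluated directly for one time instant, as in the short sketch below. It assumes real-valued signals and zero input before index 0. The name `filter_output` is hypothetical. The weights passed in play the role of $w_0(n), \dots, w_{M-1}(n)$ at that instant.

```python
def filter_output(w, x, n):
    """Evaluate y(n) = sum_{i=0}^{M-1} w_i(n) x(n - i) for a transversal filter.

    w holds the M current weights w_0(n), ..., w_{M-1}(n); x is the input
    sequence, with samples before index 0 assumed to be zero.
    """
    M = len(w)
    taps = [x[n - i] if n - i >= 0 else 0.0 for i in range(M)]  # x(n), x(n-1), ..., x(n-M+1)
    return sum(w_i * x_i for w_i, x_i in zip(w, taps))


x = [1.0, 2.0, 3.0, 4.0]
w = [0.5, 0.25, 0.125]          # M = 3 taps
print(filter_output(w, x, 3))   # 0.5*4 + 0.25*3 + 0.125*2 = 3.0
```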

![Adaptive Filter Algorithms [Ifea 648]
• LMS: Least Mean Square
• RLS: Recursive Least Squares
• Kalman filter algorithms
LMS
• The most efficient of these in terms of computation and storage requirements
• Does not suffer from the numerical instability problem
• Widely used](https://image.slidesharecdn.com/dsplecturevol-7adaptivefilter-130203091414-phpapp02/85/Dsp-lecture-vol-7-adaptive-filter-11-320.jpg)

![The LMS Algorithm [Far 138, Ifea 654, Hay 299, Proa 902-905]](https://image.slidesharecdn.com/dsplecturevol-7adaptivefilter-130203091414-phpapp02/85/Dsp-lecture-vol-7-adaptive-filter-12-320.jpg)

![Continued … The LMS Algorithm
The three basic relations of the LMS algorithm [Hay 303, Far 141]:
(1) Filter output: $y(n) = \mathbf{w}^T(n)\mathbf{x}(n)$
(2) Estimation error: $e(n) = d(n) - y(n)$
(3) Tap-weight update: $\mathbf{w}(n+1) = \mathbf{w}(n) + \mu \mathbf{x}(n) e(n)$](https://image.slidesharecdn.com/dsplecturevol-7adaptivefilter-130203091414-phpapp02/85/Dsp-lecture-vol-7-adaptive-filter-14-320.jpg)
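The three LMS relations (filter output, estimation error and tap-weight update) map one-to-one onto a single iteration. The sketch below shows that mapping for real-valued signals, using the update in the form $\mathbf{w}(n+1) = \mathbf{w}(n) + \mu \mathbf{x}(n) e(n)$. Some texts write the update with $2\mu$ instead, which only rescales the step size. The function name `lms_step` and its argument names are hypothetical.

```python
def lms_step(w, x_vec, d_n, mu):
    """One LMS iteration for real-valued signals.

    w     : current tap weights w(n), length M
    x_vec : tap-input vector x(n) = [x(n), x(n-1), ..., x(n-M+1)]
    d_n   : desired sample d(n)
    mu    : step-size parameter
    Returns (y(n), e(n), w(n+1)).
    """
    y_n = sum(wi * xi for wi, xi in zip(w, x_vec))              # (1) y(n) = w^T(n) x(n)
    e_n = d_n - y_n                                             # (2) e(n) = d(n) - y(n)
    w_next = [wi + mu * xi * e_n for wi, xi in zip(w, x_vec)]   # (3) w(n+1) = w(n) + mu x(n) e(n)
    return y_n, e_n, w_next


y, e, w1 = lms_step([0.0, 0.0], [1.0, 0.5], d_n=1.0, mu=0.1)
print(y, e, w1)   # 0.0 1.0 [0.1, 0.05]
```

Each weight moves in proportion to its own tap input and the common error. This is why one LMS iteration costs only on the order of M multiplications.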

![Summary of the LMS Algorithm [Far 141]](https://image.slidesharecdn.com/dsplecturevol-7adaptivefilter-130203091414-phpapp02/85/Dsp-lecture-vol-7-adaptive-filter-15-320.jpg)
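As a rough illustration of running LMS end to end, the sketch below repeats the filter-output, estimation-error and tap-weight-update relations over a whole signal. It starts from zero weights. The usage identifies a hypothetical unknown 3-tap FIR system from its input and output. The function name `lms`, the system `h`, the step size and the signal length are all illustrative assumptions, not values from the lecture.

```python
import random


def lms(x, d, M, mu):
    """Run LMS over input x and desired signal d (real-valued).

    Starts from w(0) = 0 and treats samples before index 0 as zero.
    Returns the output y, the error e, and the final weight vector.
    """
    w = [0.0] * M
    y, e = [], []
    for n in range(len(x)):
        x_vec = [x[n - i] if n - i >= 0 else 0.0 for i in range(M)]  # tap-input vector x(n)
        y_n = sum(wi * xi for wi, xi in zip(w, x_vec))               # filter output
        e_n = d[n] - y_n                                              # estimation error
        w = [wi + mu * xi * e_n for wi, xi in zip(w, x_vec)]          # tap-weight update
        y.append(y_n)
        e.append(e_n)
    return y, e, w


# Usage: identify a hypothetical unknown 3-tap FIR system h from its input and output.
random.seed(0)
h = [0.8, -0.4, 0.2]
x = [random.uniform(-1.0, 1.0) for _ in range(5000)]
d = [sum(h[i] * x[n - i] for i in range(len(h)) if n - i >= 0) for n in range(len(x))]
y, e, w = lms(x, d, M=3, mu=0.05)
print([round(wi, 3) for wi in w])  # close to h, since d contains no additive noise
```

The step size must be small enough for stability. Within that limit, a larger value converges faster but leaves more residual error when the desired signal is noisy.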

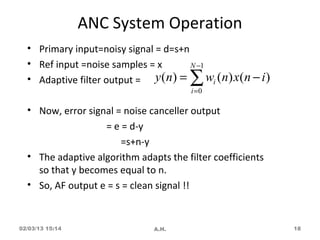

![AF Application: Noise Cancellation [Hay 48, Far 21, Proa 896]
• Adaptive noise cancelling (ANC) subtracts an estimate of the noise from a signal that has been corrupted by that noise, in order to improve the signal-to-noise ratio.
• The filtering and subtraction are controlled by the adaptive process.
• An adaptive noise canceller is essentially a dual-input, closed-loop adaptive control system.](https://image.slidesharecdn.com/dsplecturevol-7adaptivefilter-130203091414-phpapp02/85/Dsp-lecture-vol-7-adaptive-filter-16-320.jpg)
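The sketch below shows one possible two-input setup, with all specifics assumed for illustration. The primary input carries a signal plus noise that arrived through an unknown path. The reference input carries the noise source alone. An LMS filter shapes the reference into a noise estimate, and the error, which also drives the adaptation, is the cleaned output. The function name `anc`, the 2-tap noise path, the sinusoidal signal and the step size are hypothetical.

```python
import math
import random


def anc(primary, reference, M, mu):
    """Adaptive noise canceller: an LMS filter maps the reference input to an
    estimate of the noise in the primary input; the error e(n) is the cleaned signal."""
    w = [0.0] * M
    cleaned = []
    for n in range(len(primary)):
        r_vec = [reference[n - i] if n - i >= 0 else 0.0 for i in range(M)]
        noise_estimate = sum(wi * ri for wi, ri in zip(w, r_vec))
        e_n = primary[n] - noise_estimate                     # subtraction stage
        w = [wi + mu * ri * e_n for wi, ri in zip(w, r_vec)]  # LMS update closes the loop
        cleaned.append(e_n)
    return cleaned


# Hypothetical scenario: a sinusoid corrupted by noise that reaches the primary sensor
# through an unknown 2-tap path; the reference sensor picks up the noise source directly.
random.seed(1)
N = 4000
s = [math.sin(2 * math.pi * 0.01 * n) for n in range(N)]
v = [random.uniform(-1.0, 1.0) for _ in range(N)]
v_path = [0.6 * v[n] + (0.3 * v[n - 1] if n >= 1 else 0.0) for n in range(N)]
primary = [s[n] + v_path[n] for n in range(N)]
cleaned = anc(primary, v, M=4, mu=0.01)

half = N // 2  # measure after the filter has had time to converge
noise_before = sum(vp * vp for vp in v_path[half:]) / (N - half)
noise_after = sum((c - sn) ** 2 for c, sn in zip(cleaned[half:], s[half:])) / (N - half)
print(noise_before, noise_after)  # the second value should be much smaller than the first
```

This works because the reference is correlated with the noise in the primary input but not with the signal. Minimizing the error power therefore removes the noise while leaving the signal largely intact.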

![HOMEWORK
• Application of adaptive filtering to the system identification (system modeling) problem [Proa 882].
• Adaptive channel equalization [Proa 883].
• Adaptive echo cancellation [Proa 887].](https://image.slidesharecdn.com/dsplecturevol-7adaptivefilter-130203091414-phpapp02/85/Dsp-lecture-vol-7-adaptive-filter-19-320.jpg)