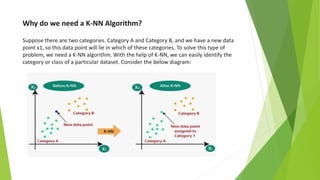

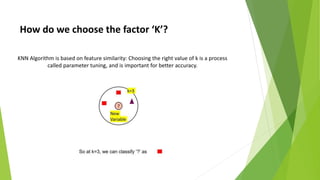

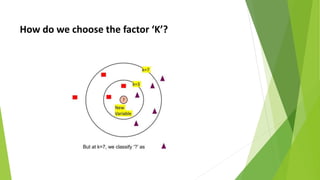

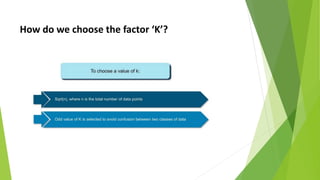

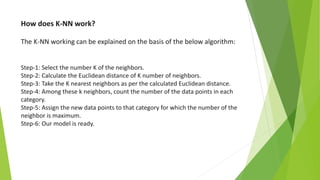

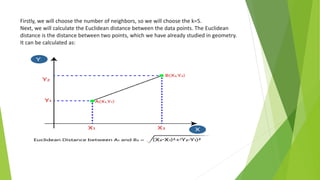

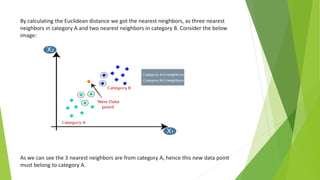

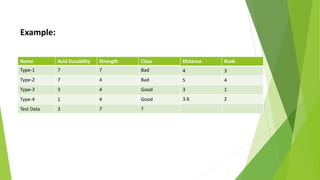

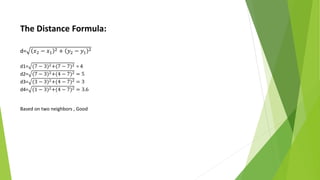

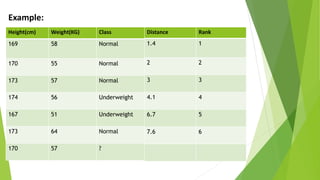

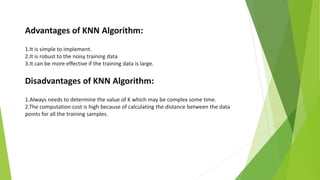

The k-nearest neighbors (k-NN) algorithm is a non-parametric, lazy-learning method used for both classification and regression. It predicts the class (or value) of a new data point from the existing data points most similar to it. Choosing an appropriate value of k is crucial for accuracy: a small k makes predictions sensitive to noise, while a large k can blur the boundaries between classes. To find the nearest neighbors, the algorithm computes the distance between the new point and every stored point, most commonly the Euclidean distance. Applications of k-NN include banking, for example predicting loan approvals and calculating credit ratings.
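The procedure can be sketched in plain Python. This is a minimal illustration under some assumptions. The function names `euclidean` and `knn_predict` are hypothetical. The toy "loan" data and credit scores are made up. The features are assumed to be on comparable scales. Classification uses a majority vote among the k neighbors, and regression uses their mean value.

```python
import math
from collections import Counter

def euclidean(a, b):
    """Straight-line (Euclidean) distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn_predict(train_X, train_y, query, k=3, task="classification"):
    """Predict for `query` from its k nearest training points (hypothetical helper).

    classification -> most common label among the neighbors (majority vote)
    regression     -> mean of the neighbors' numeric targets
    """
    if not 1 <= k <= len(train_X):
        raise ValueError("k must be between 1 and the number of training points")
    # Lazy learning: nothing is fitted in advance; all the work happens at query time.
    # Sort by distance only, so ties never fall back to comparing labels.
    ranked = sorted(
        ((euclidean(x, query), y) for x, y in zip(train_X, train_y)),
        key=lambda pair: pair[0],
    )
    neighbors = [y for _, y in ranked[:k]]
    if task == "classification":
        return Counter(neighbors).most_common(1)[0][0]
    return sum(neighbors) / k

# Made-up, already-scaled applicant features (illustrative only).
train_X = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (6.0, 5.0), (7.0, 7.0), (6.5, 6.0)]
loan_labels = ["reject", "reject", "reject", "approve", "approve", "approve"]
credit_scores = [580, 600, 590, 750, 800, 770]

print(knn_predict(train_X, loan_labels, (2.0, 2.0), k=3))  # → reject
print(knn_predict(train_X, loan_labels, (6.0, 6.0), k=3))  # → approve
print(knn_predict(train_X, credit_scores, (6.0, 6.0), k=3, task="regression"))  # mean of 770, 750, 800
```

An odd k is a common choice for two-class problems because it avoids tied votes. In practice, features are usually standardized first, because a feature with a large numeric range would otherwise dominate the Euclidean distance.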