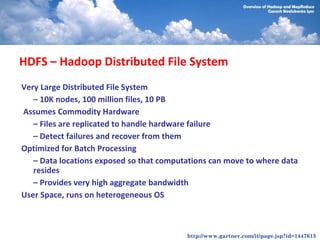

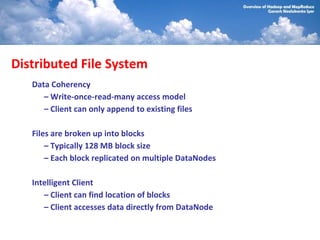

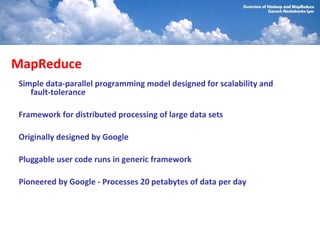

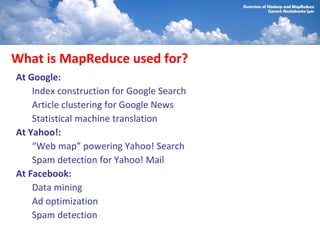

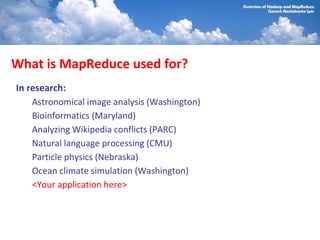

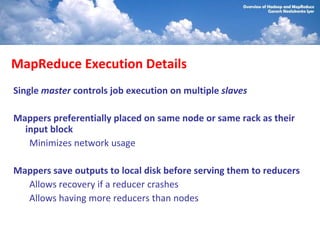

The document presents an overview of Hadoop and the MapReduce paradigm, highlighting their significance in big data processing and analysis. It describes Hadoop's architecture, the Hadoop Distributed File System (HDFS), and practical applications of MapReduce in various fields such as cloud computing and data mining. The author also discusses the fault tolerance mechanisms in MapReduce and concludes with resources for further learning.

![2. Sort

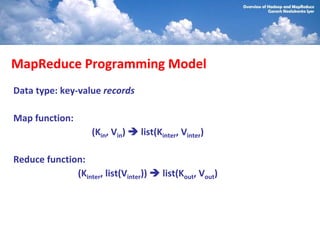

Input: (key, value) records

Output: same records, sorted by key Map

ant, bee

Reduce [A-M]

zebra

aardvark

Map: identity function ant

cow bee

Reduce: identify function cow

Map

elephant

pig

Trick: Pick partitioning Reduce [N-Z]

aardvark,

pig

function h such that elephant

sheep

k1<k2 => h(k1)<h(k2) Map sheep, yak yak

zebra](https://image.slidesharecdn.com/mec-hadoopmapreduce-student-110108043142-phpapp02/85/Introduction-to-Hadoop-and-MapReduce-27-320.jpg)

![Word Count in Python with Hadoop Streaming

import sys

Mapper.py: for line in sys.stdin:

for word in line.split():

print(word.lower() + "t" + 1)

Reducer.py: import sys

counts = {}

for line in sys.stdin:

word, count = line.split("t”)

dict[word] = dict.get(word, 0) +

int(count)

for word, count in counts:

print(word.lower() + "t" + 1)](https://image.slidesharecdn.com/mec-hadoopmapreduce-student-110108043142-phpapp02/85/Introduction-to-Hadoop-and-MapReduce-33-320.jpg)