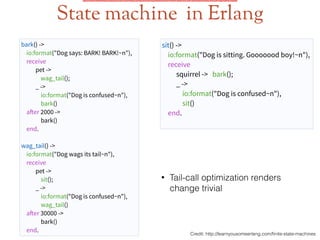

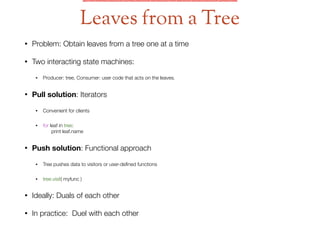

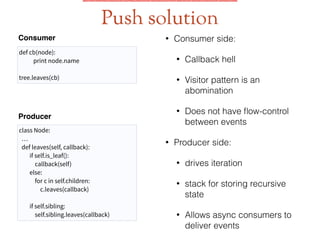

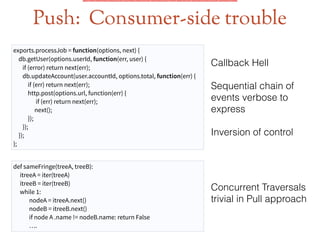

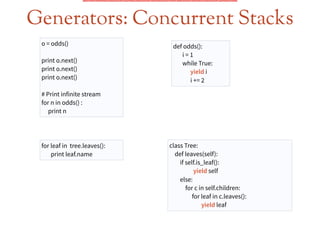

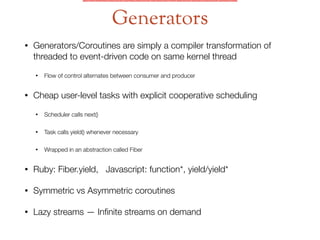

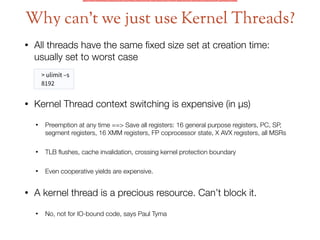

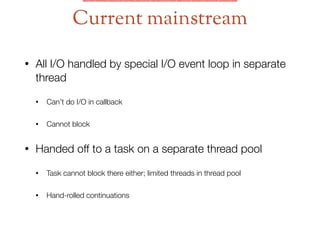

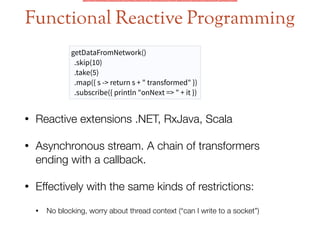

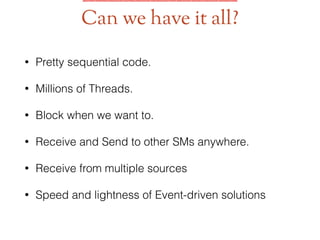

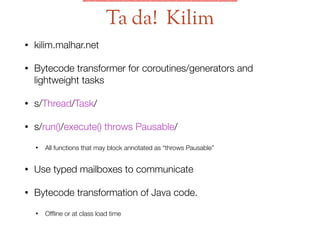

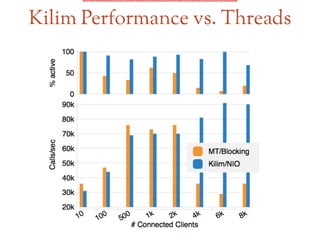

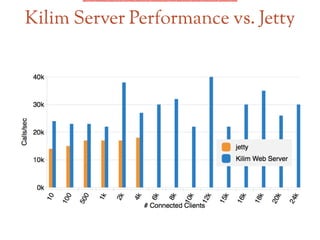

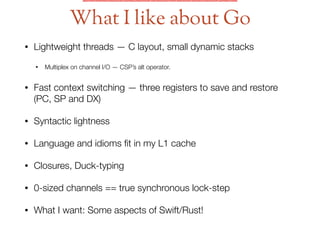

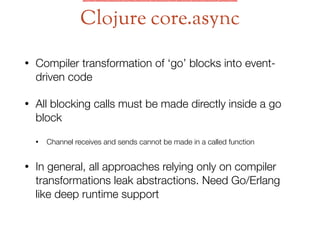

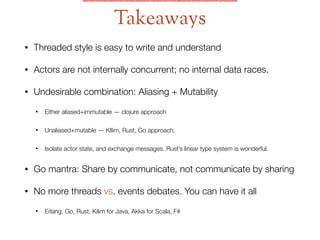

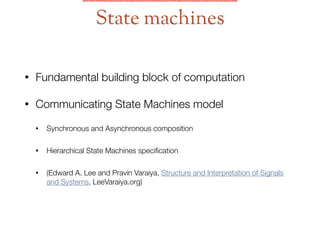

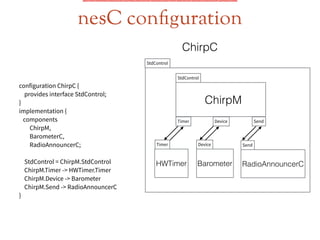

The document discusses the concept of communicating state machines (CSMs) and their applications in various programming contexts, including C++ and TinyOS. It covers synchronous and asynchronous communication, state management, and the advantages of different programming paradigms like event-driven and functional programming. Additionally, it highlights the importance of optimizing performance in concurrent systems and presents examples using languages such as Erlang, Ruby, and Go.

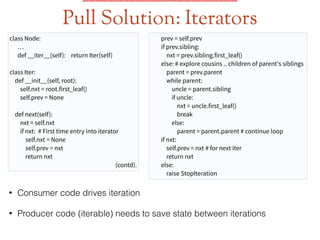

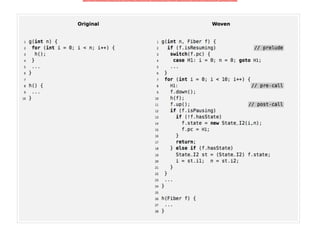

![Stacks as State Machines

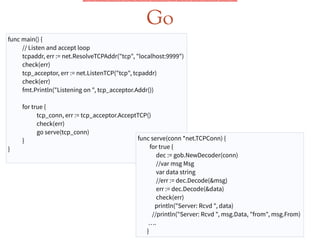

void readMsgs( socket) {

numMsgsRead = 0

while (true) {

msg = readMsg(socket)

dispatch(msg)

log(numMsgsRead++)

}

}

void readMsg(socket) {

len := readLen(socket)

readBody(len)

}

void readLen(socket) {

byte[4] len

for i = 0 .. 4 {

len[i] = readByte(socket)

}

return len

}

• Thread of control

• Control plane = Call Chain (each frame

remembers its pc)

• Sequential flow of control defines hidden states

• Functions define major states

• Data plane = Vars local in each frame

• Blocking semantics == synchronous (lock-

step) communication

• readByte and dispatch interact with network

• Easy API; that’s why Posix and most db

calls are synchronous](https://image.slidesharecdn.com/coroutinesthreadsmeetup-140613133556-phpapp01/85/Communicating-State-Machines-11-320.jpg)
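To make the "hidden states" point concrete, here is a minimal sketch in Python (not taken from the slides) that writes the slide's readLen and readMsg logic twice: once in the blocking style, and once as an explicit state machine fed one byte at a time. The names `read_len`, `read_msg`, and `MsgReader` are hypothetical, and the 4-byte big-endian length prefix is an assumption; the slide only says the length is 4 bytes.

```python
import io
import struct


def read_len(stream):
    """Blocking style: the loop position and partial bytes live in this frame."""
    buf = bytearray()
    for _ in range(4):
        buf += stream.read(1)  # would block on a real socket
    # Assumption: the length is a 4-byte big-endian unsigned integer.
    return struct.unpack(">I", bytes(buf))[0]


def read_msg(stream):
    length = read_len(stream)   # hidden state: "reading the length"
    return stream.read(length)  # hidden state: "reading the body"


class MsgReader:
    """The same logic with the call stack's state made explicit."""

    def __init__(self):
        self.state = "LEN"      # control plane: which function we would be in
        self.buf = bytearray()  # data plane: what the locals would hold
        self.length = 0

    def feed(self, byte):
        """Consume one byte; return a complete message body, else None."""
        self.buf.append(byte)
        if self.state == "LEN" and len(self.buf) == 4:
            self.length = struct.unpack(">I", bytes(self.buf))[0]
            self.buf.clear()
            self.state = "BODY"
        # Plain `if`, not `elif`, so a zero-length body completes immediately.
        if self.state == "BODY" and len(self.buf) == self.length:
            msg = bytes(self.buf)
            self.buf.clear()
            self.state = "LEN"
            return msg
        return None
```

Calling `read_msg(io.BytesIO(frame))` on a framed message returns the body in one call, whereas `MsgReader.feed` has to be called once per byte and yields the body only on the last one. The blocking version is shorter because the interpreter's stack tracks the current state; the explicit version never blocks, at the cost of carrying `state`, `buf`, and `length` by hand.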