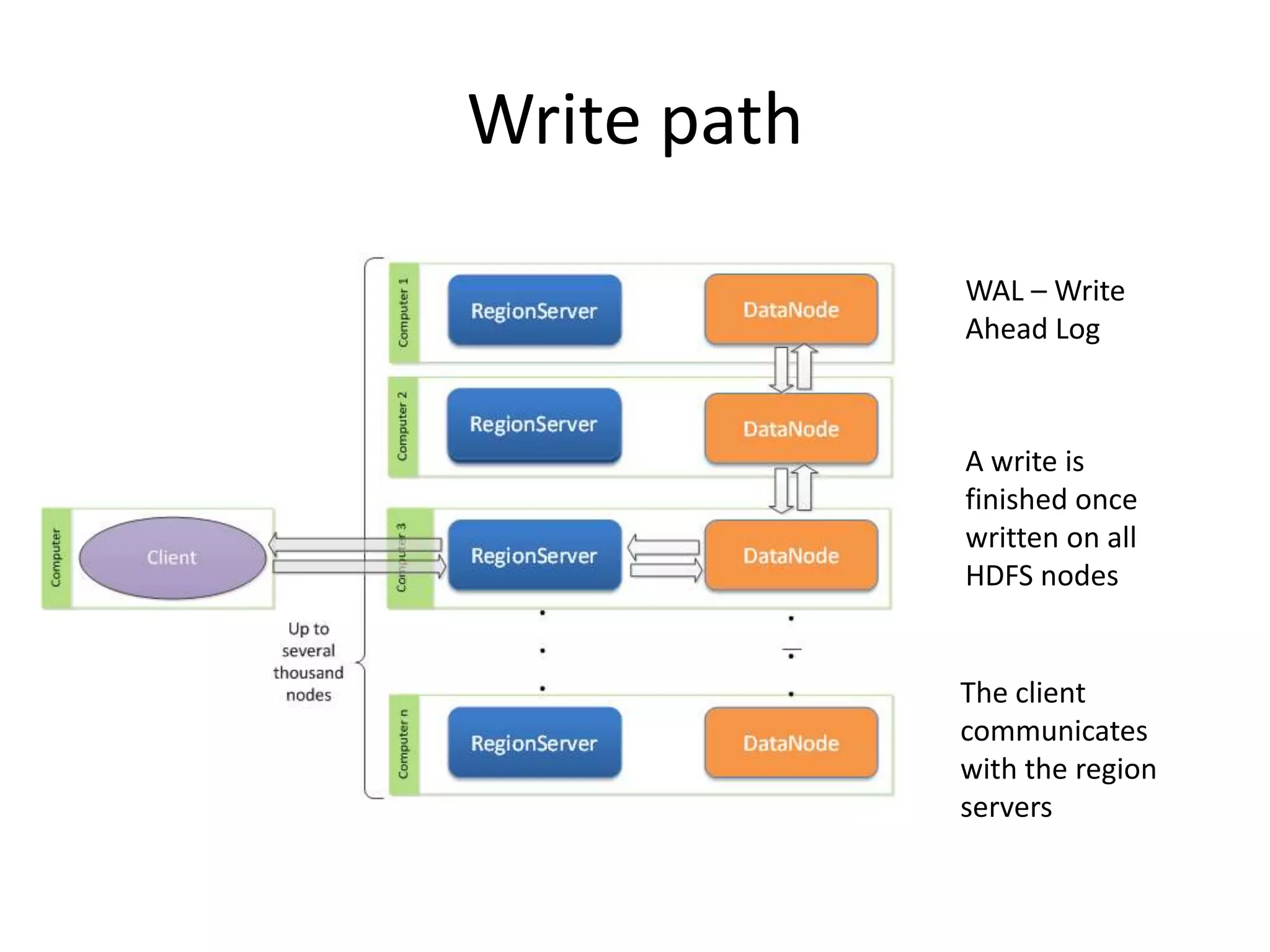

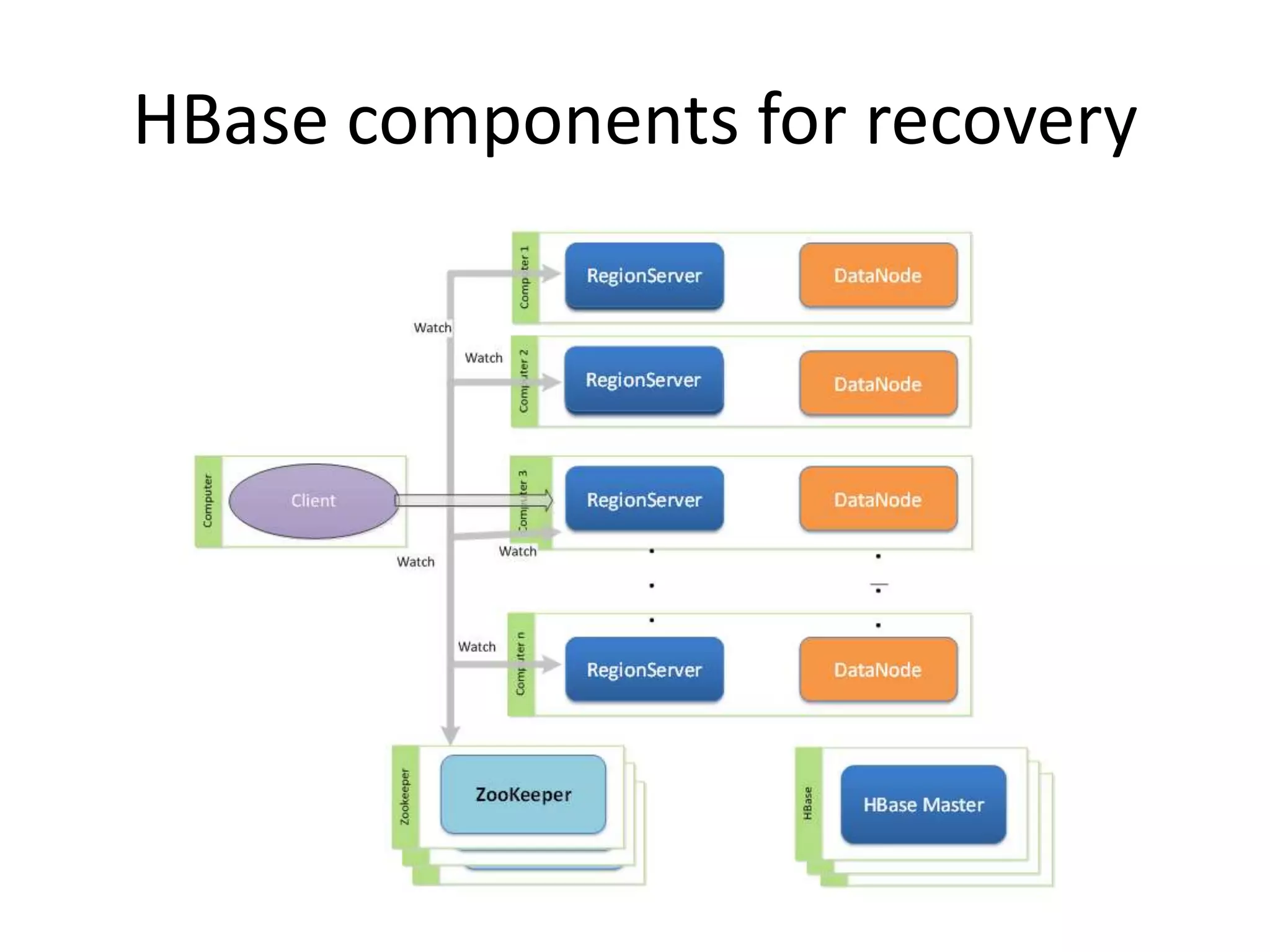

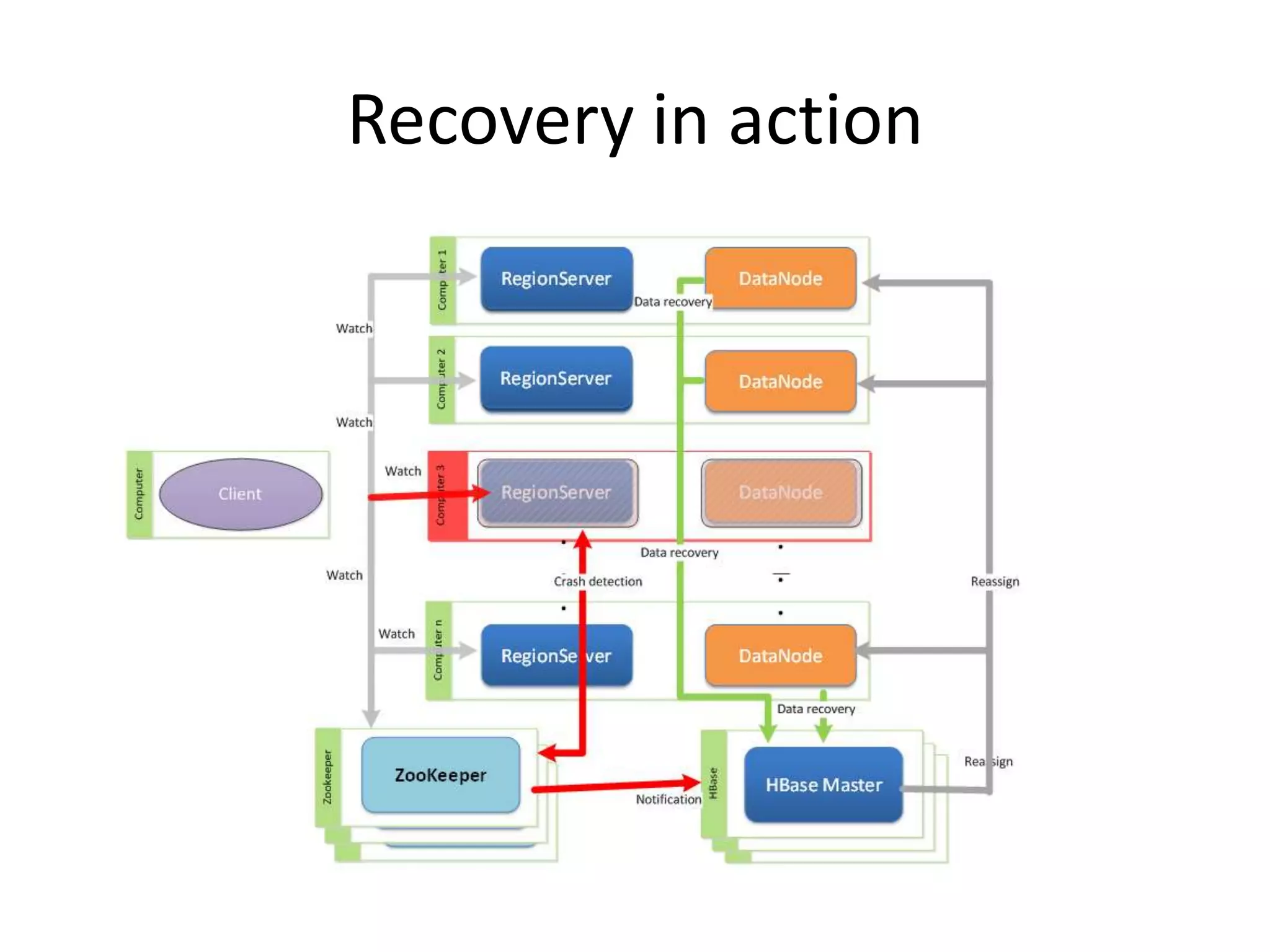

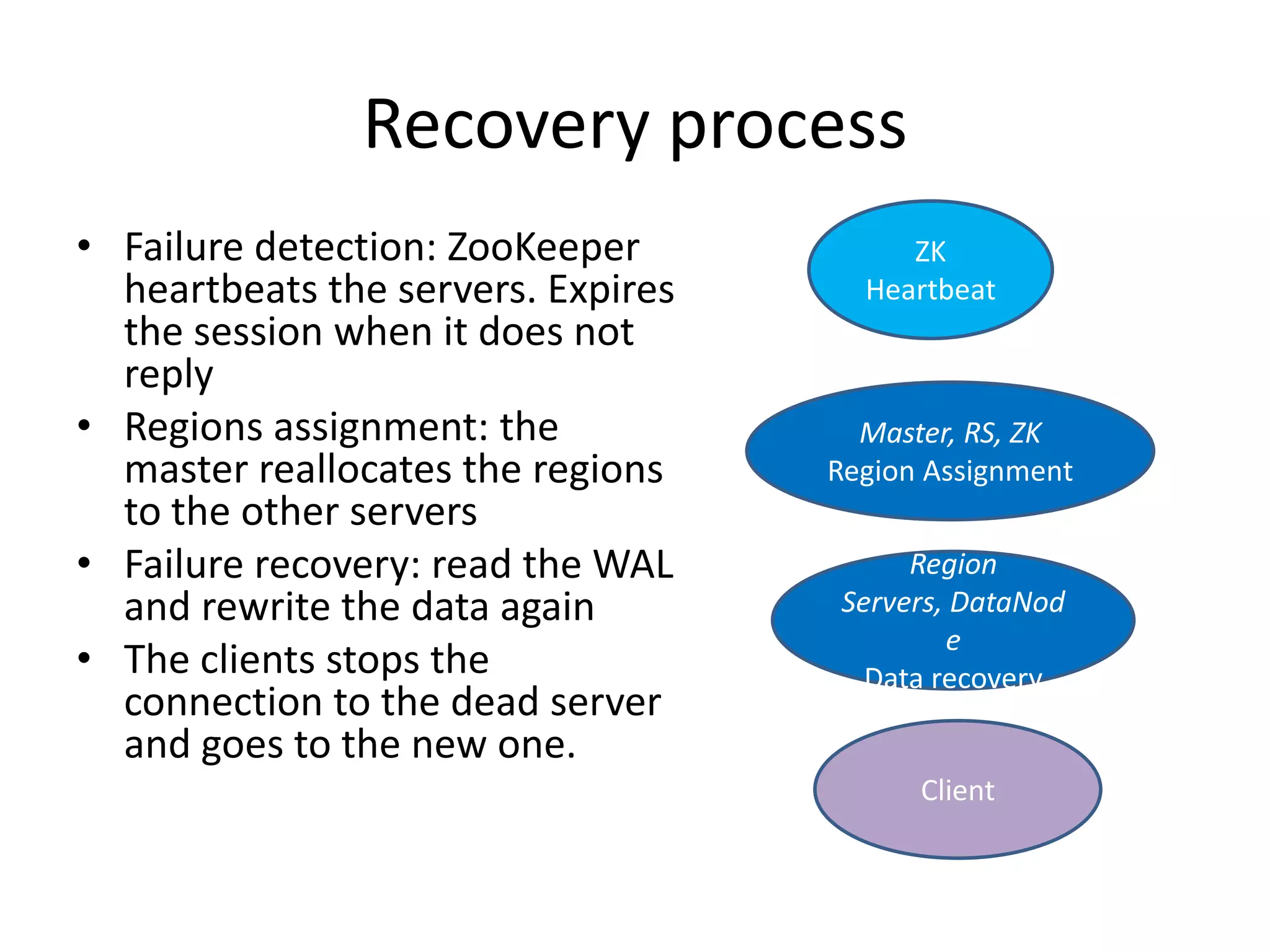

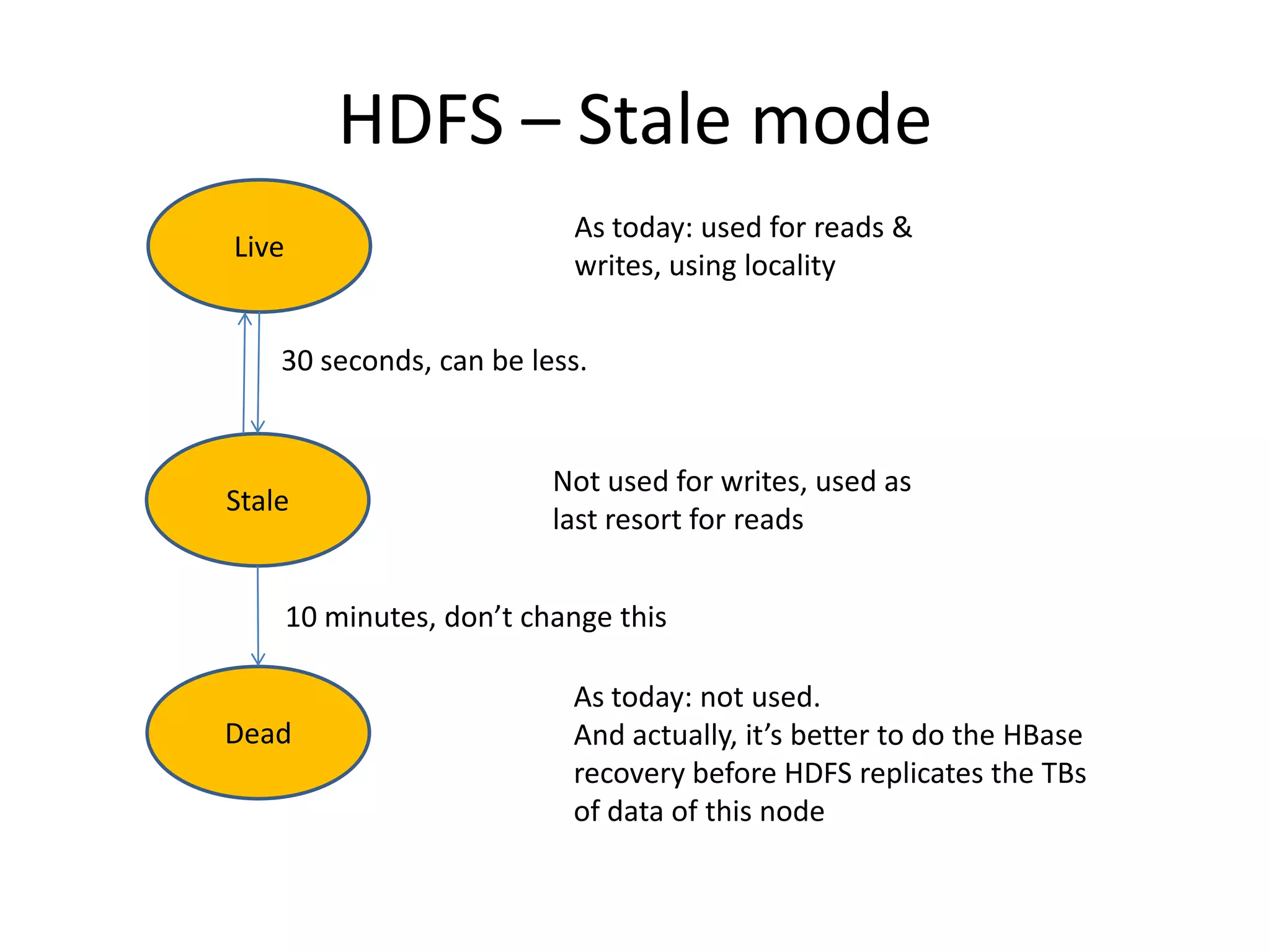

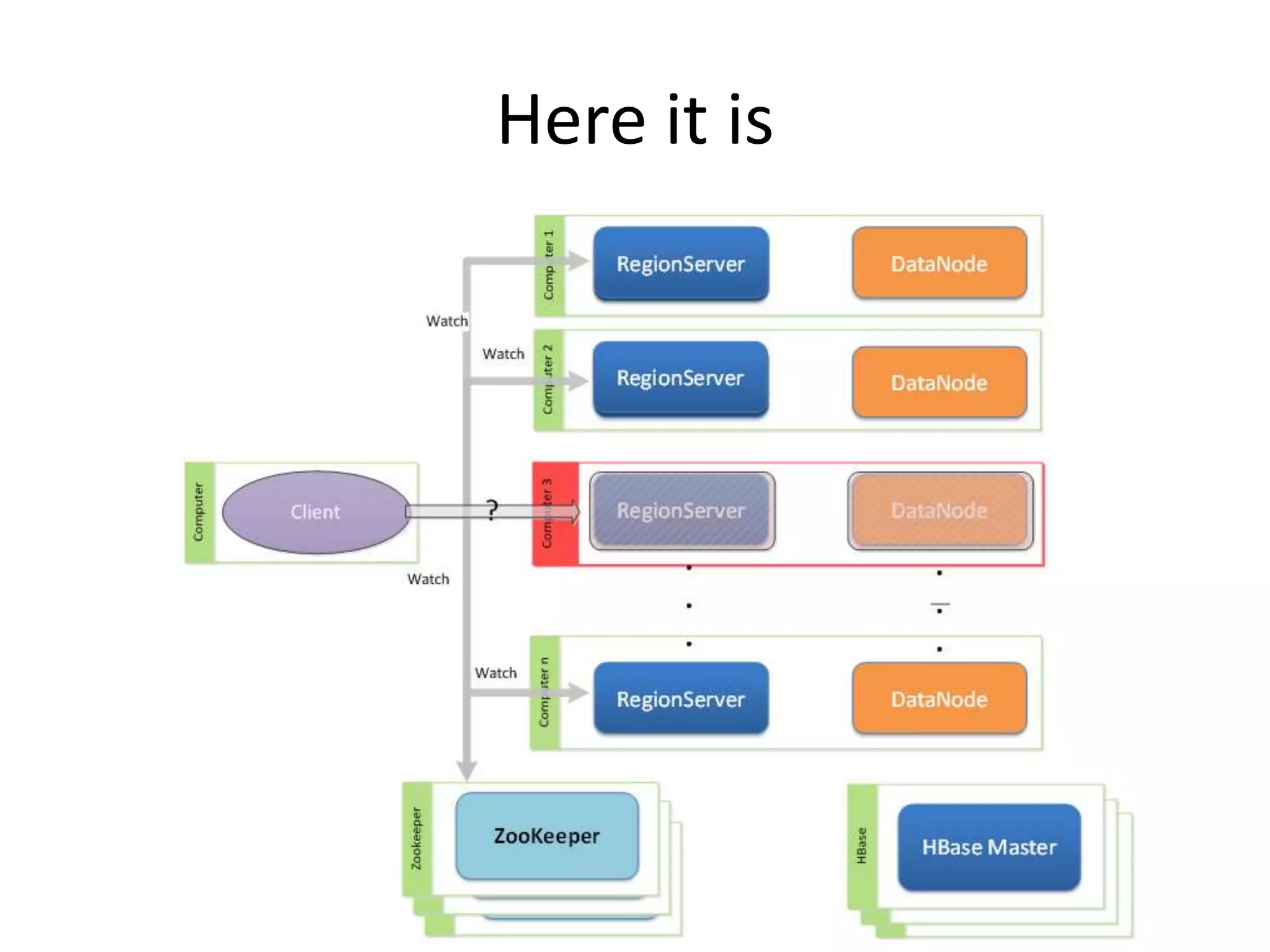

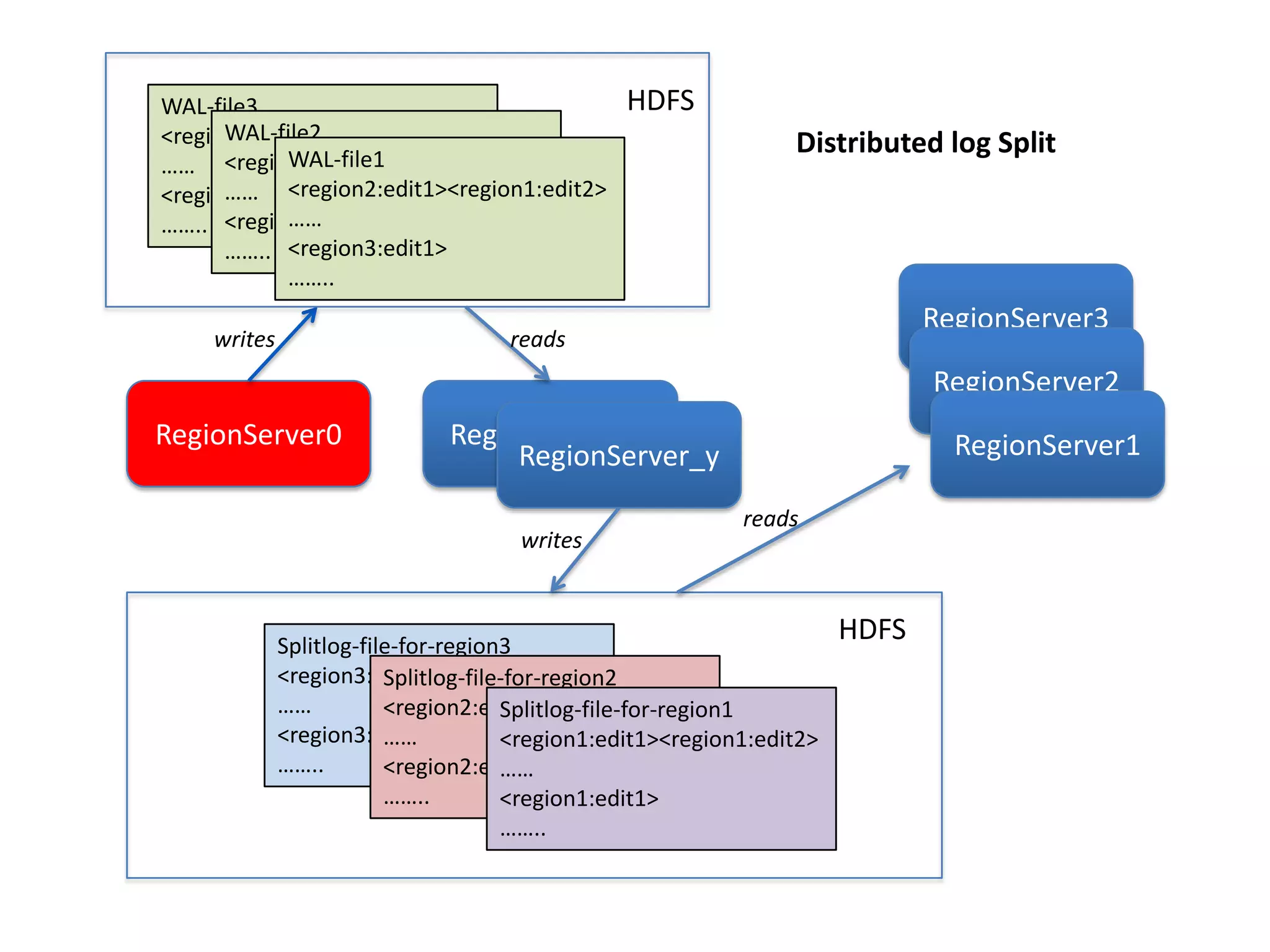

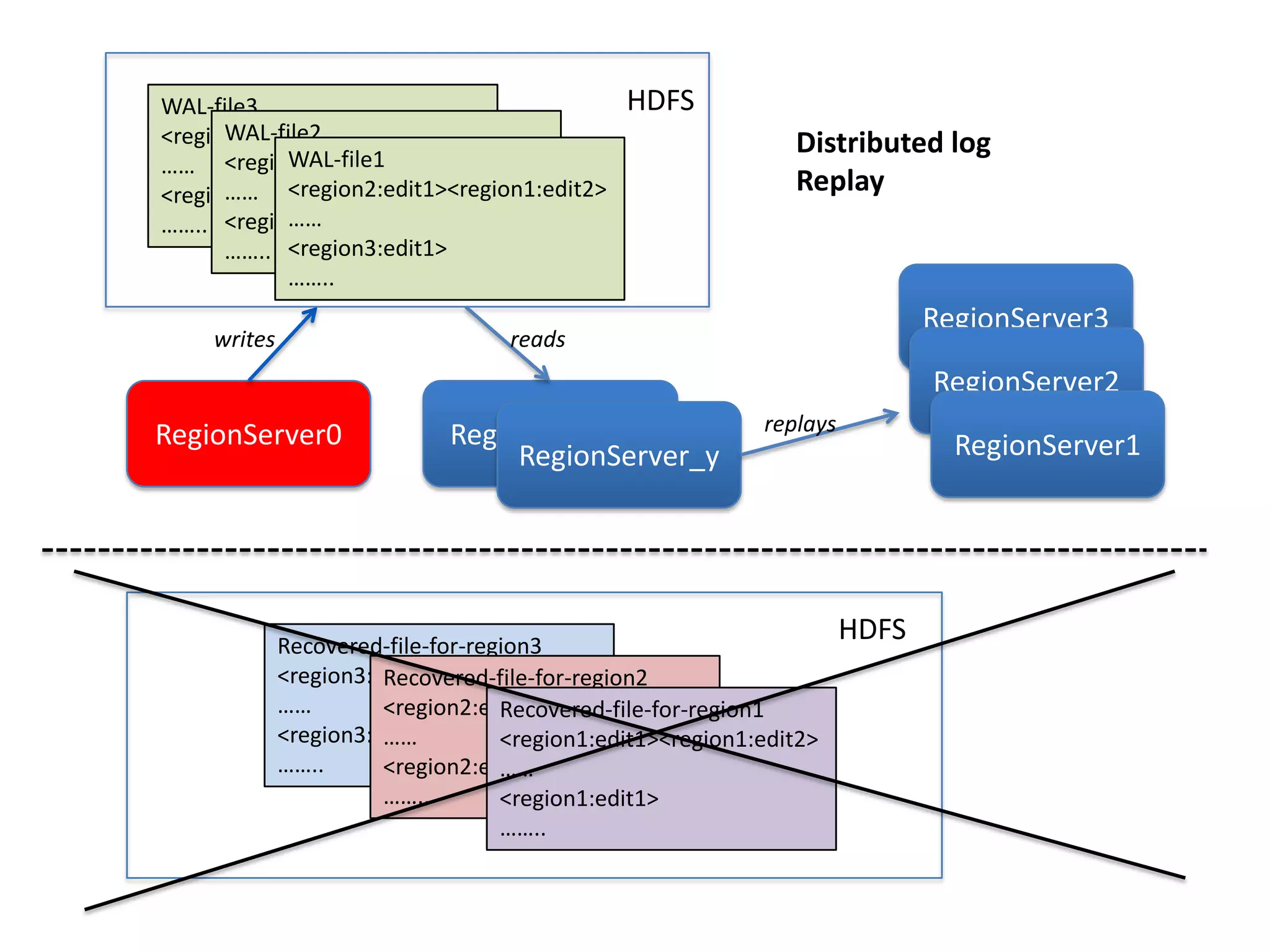

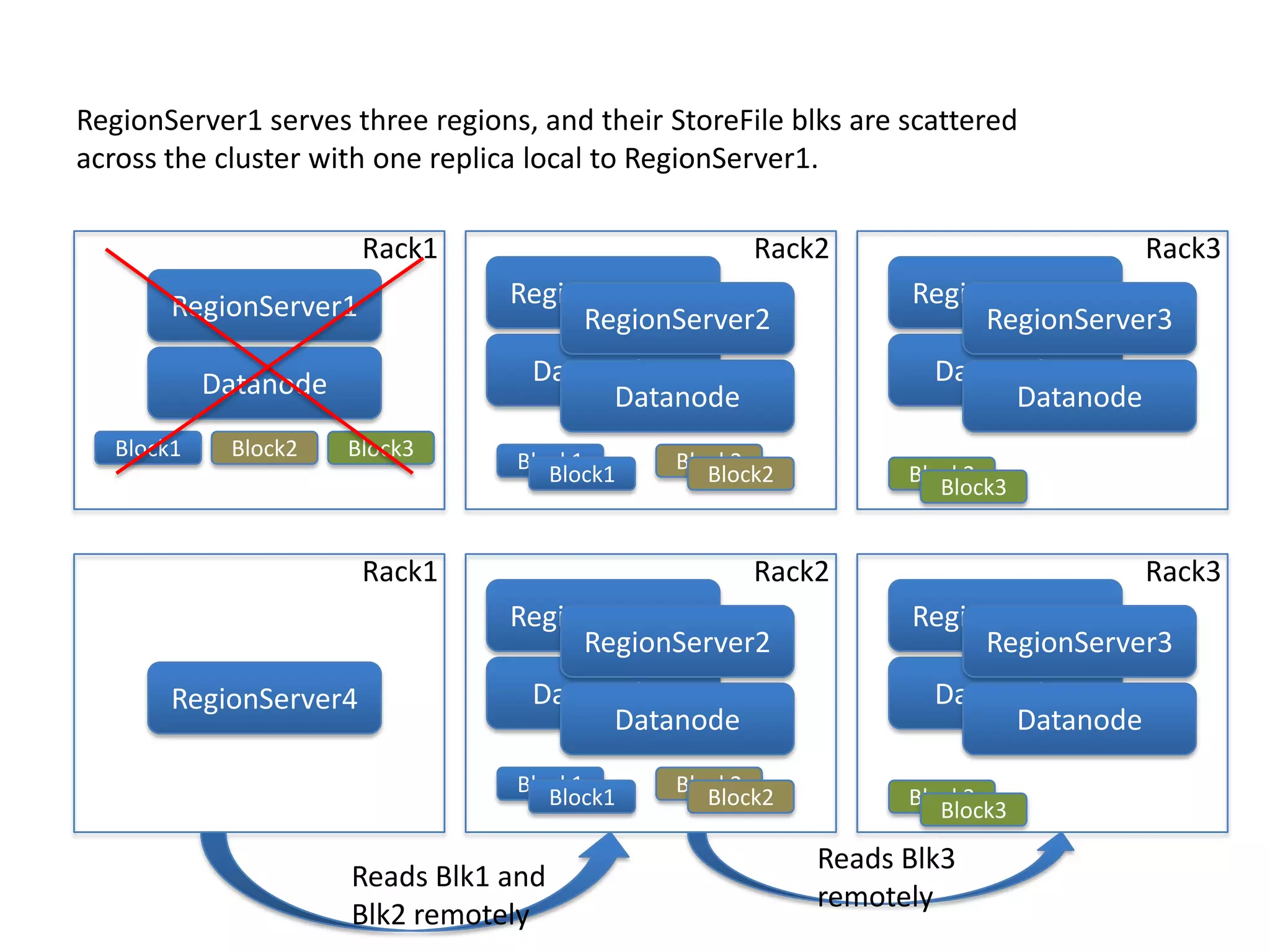

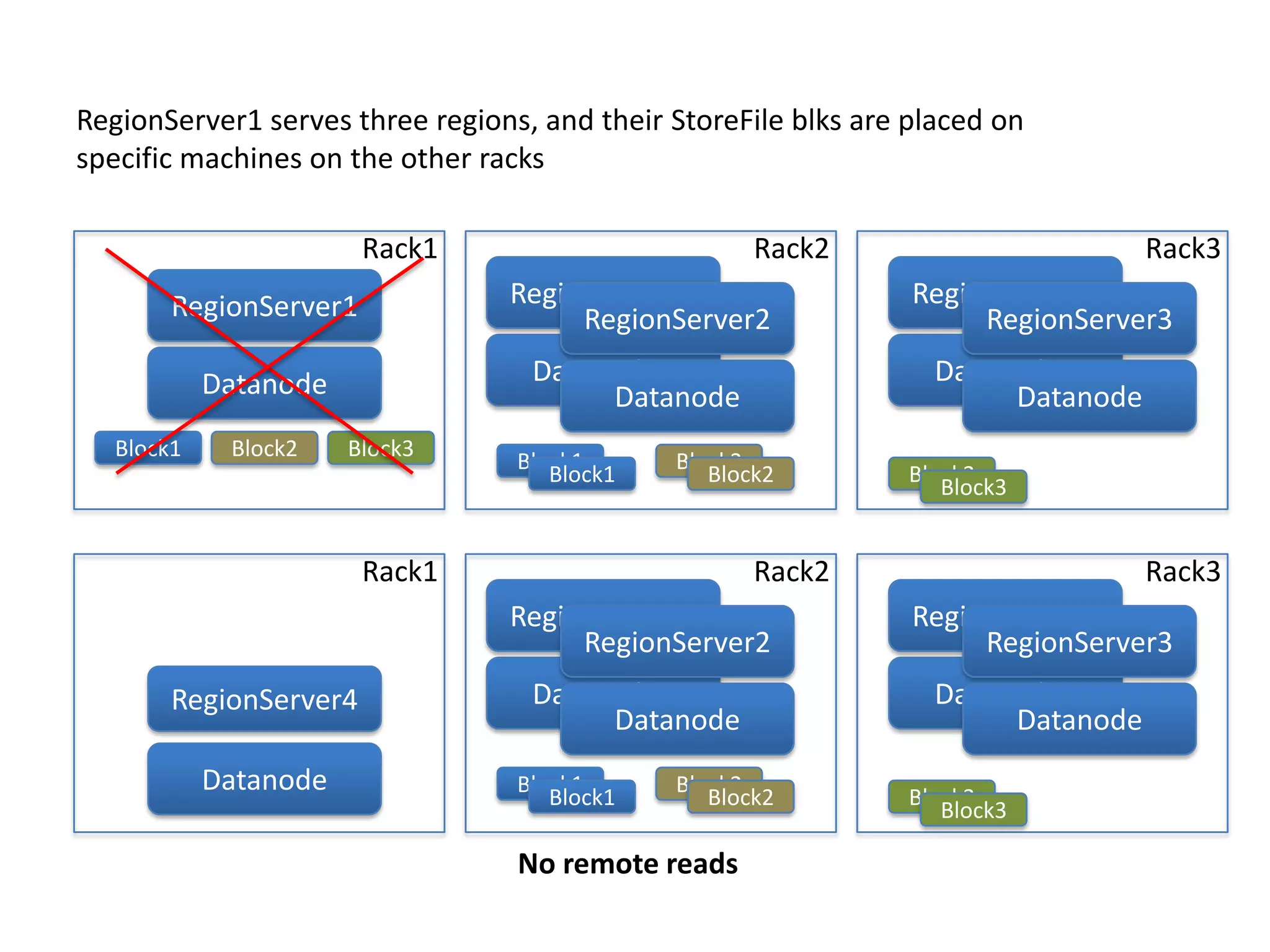

This document discusses how to reduce the mean time to recovery (MTTR) in HBase to under one minute. It outlines improvements to three stages of recovery: failure detection, region reassignment, and data recovery. Failures are detected faster by lowering the ZooKeeper session timeout from 180 seconds to 30 seconds. Regions are reassigned faster by performing the reassignment in parallel. Data recovery is sped up by rewriting the recovery process so that edits from the write-ahead log are written directly to the regions instead of first being written to HDFS. In tests, these changes reduced recovery time from 10–15 minutes to less than one minute.
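To illustrate why the shorter timeout speeds up failure detection, here is a minimal sketch of timeout-based detection. A server is declared dead only after it has been silent for longer than the session timeout. The `SessionTracker` class and `simulate` helper are hypothetical and are not ZooKeeper's API. In a real deployment, the timeout is set through the `zookeeper.session.timeout` property (in milliseconds) in `hbase-site.xml`.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class SessionTracker:
    """Toy model of session expiry (hypothetical, not the ZooKeeper API).

    A region server is considered dead once no heartbeat has been seen
    for longer than `session_timeout_s` seconds.
    """
    session_timeout_s: float
    last_heartbeat: Dict[str, float] = field(default_factory=dict)

    def heartbeat(self, server: str, now: float) -> None:
        # Record the most recent time we heard from this server.
        self.last_heartbeat[server] = now

    def expired(self, now: float) -> List[str]:
        # Servers silent for longer than the timeout are declared dead.
        return sorted(
            server
            for server, seen in self.last_heartbeat.items()
            if now - seen > self.session_timeout_s
        )


def simulate(timeout_s: float, now: float) -> List[str]:
    """Hypothetical scenario: rs1 crashes at t=0, rs2 stays healthy."""
    tracker = SessionTracker(session_timeout_s=timeout_s)
    tracker.heartbeat("rs1", now=0.0)  # rs1's last heartbeat before crashing
    tracker.heartbeat("rs2", now=0.0)
    tracker.heartbeat("rs2", now=now)  # rs2 keeps heartbeating
    return tracker.expired(now)


if __name__ == "__main__":
    # 31 seconds after rs1's last heartbeat:
    print("timeout=180s:", simulate(180.0, now=31.0))  # rs1 not yet detected
    print("timeout=30s: ", simulate(30.0, now=31.0))   # rs1 detected
```

Under this model, the worst-case detection delay is roughly the timeout itself. Cutting the timeout from 180 s to 30 s therefore removes about two and a half minutes from every recovery. The trade-off is that a server that pauses for longer than the timeout (for example, during a long garbage-collection pause) will also be declared dead.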
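The change to data recovery can be sketched as follows. Edits from a dead server's write-ahead log are grouped by region and applied straight to each region, with independent regions handled concurrently. No intermediate per-region files are written to HDFS. This is a simplified model under stated assumptions, not HBase's implementation. The `Region` class, the `(region, sequence_id, key, value)` edit tuple, and `replay_directly` are all hypothetical.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

# Hypothetical WAL entry layout: (region_name, sequence_id, key, value).
WalEdit = Tuple[str, int, str, str]


class Region:
    """Hypothetical in-memory region that accepts replayed edits."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.store: Dict[str, str] = {}
        self.max_seq_id = -1

    def apply(self, edits: List[WalEdit]) -> int:
        # Apply edits in sequence-id order so the latest write for a key wins.
        for _, seq_id, key, value in sorted(edits, key=lambda e: e[1]):
            self.store[key] = value
            self.max_seq_id = max(self.max_seq_id, seq_id)
        return len(edits)


def replay_directly(
    wal: List[WalEdit], regions: Dict[str, Region], workers: int = 4
) -> Dict[str, int]:
    """Send each region its edits directly; returns edits applied per region."""
    # Group edits by region in memory instead of writing per-region files first.
    by_region: Dict[str, List[WalEdit]] = defaultdict(list)
    for edit in wal:
        by_region[edit[0]].append(edit)
    # Regions are independent, so their edits can be replayed concurrently.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {
            name: pool.submit(regions[name].apply, edits)
            for name, edits in by_region.items()
        }
        return {name: f.result() for name, f in futures.items()}


if __name__ == "__main__":
    wal = [("r1", 1, "a", "x"), ("r2", 2, "b", "y"), ("r1", 3, "a", "z")]
    regions = {"r1": Region("r1"), "r2": Region("r2")}
    print(replay_directly(wal, regions))
    print(regions["r1"].store, regions["r2"].store)
```

The saving modeled here is the removed round trip through HDFS. Edits are not written out and then read back when the region opens; they move from the log to the region in a single step, and per-region parallelism keeps many regions recovering at once.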