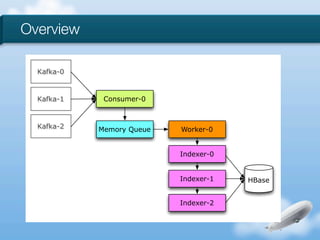

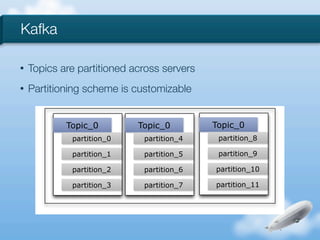

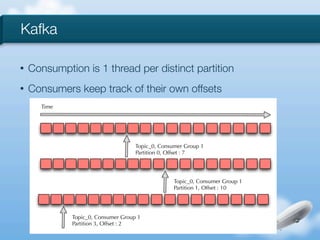

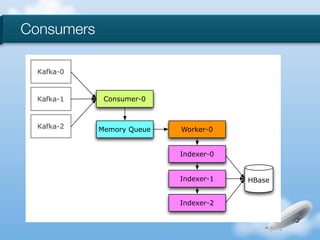

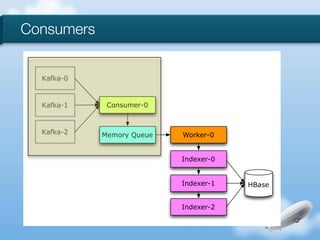

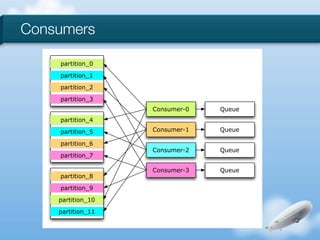

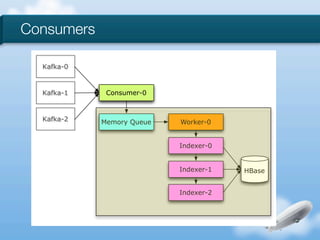

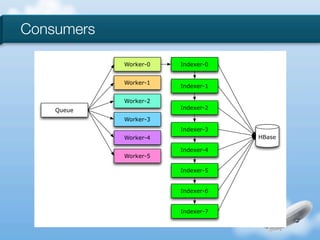

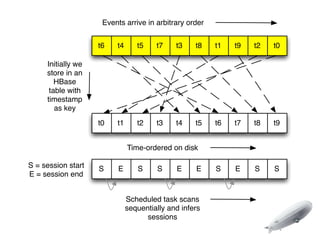

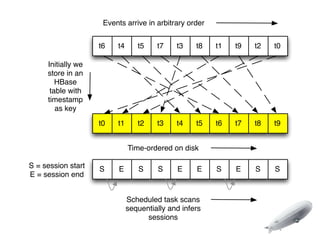

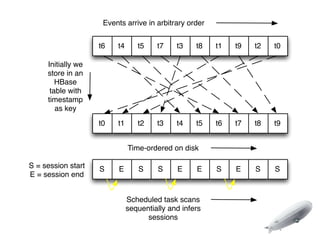

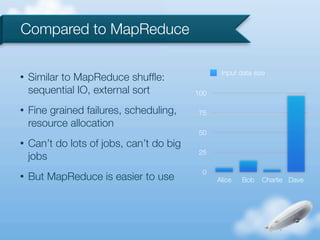

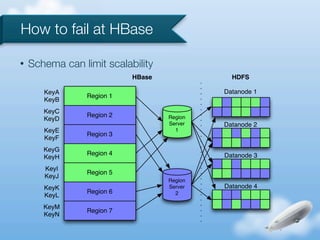

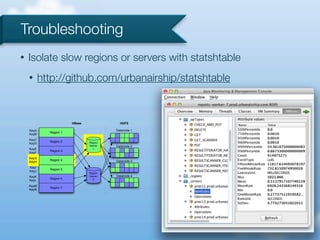

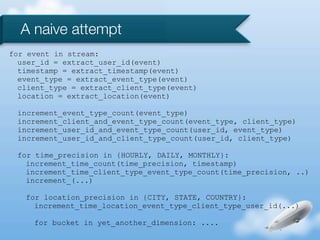

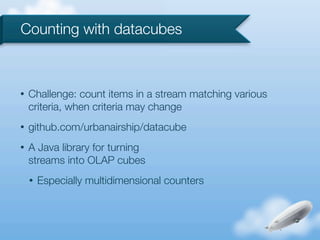

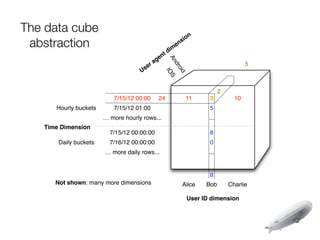

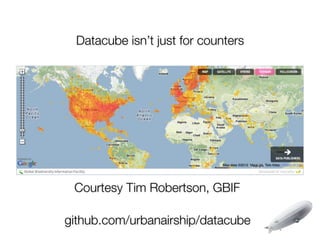

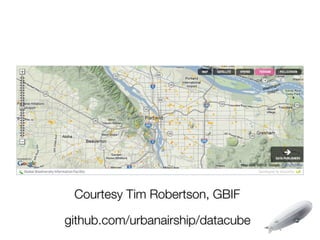

The document describes how Urban Airship implemented near-real-time analytics with Kafka and HBase, covering the challenges of processing large volumes of data from mobile services and the methods used to meet them. It outlines Kafka's architecture for efficient data streaming, the advantages of HBase for low-latency queries, and strategies for counting and managing asynchronous event data. The approach offers high scalability and fast performance, though the document also acknowledges drawbacks such as schema limitations and the need for careful resource management.
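One common way to count asynchronous events is to attribute each event to the time bucket in which it occurred, not the one in which it arrived. The sketch below assumes that pattern, along with a hypothetical `app_id:YYYYMMDDHH` row-key layout and an in-memory dictionary standing in for HBase counter cells. It is an illustration under those assumptions, not Urban Airship's actual schema or code. Because a Kafka consumer with at-least-once delivery can see the same message twice, the sketch also skips event IDs it has already counted.

```python
from collections import defaultdict
from datetime import datetime, timezone

HOUR = 3600  # bucket width in seconds


def bucket_key(app_id: str, event_ts: int) -> str:
    """Build a row key for the UTC hour in which the event occurred.

    The "app_id:YYYYMMDDHH" layout is a hypothetical example, not a known schema.
    """
    hour_start = event_ts - event_ts % HOUR
    stamp = datetime.fromtimestamp(hour_start, tz=timezone.utc).strftime("%Y%m%d%H")
    return f"{app_id}:{stamp}"


class EventCounter:
    """In-memory stand-in for HBase counter cells (assumption: not the HBase API)."""

    def __init__(self) -> None:
        self.counts: dict[str, int] = defaultdict(int)
        self.seen: set[str] = set()  # unbounded here; a real system would expire IDs

    def consume(self, event: dict) -> None:
        # At-least-once delivery can replay a message, so skip IDs already counted.
        if event["id"] in self.seen:
            return
        self.seen.add(event["id"])
        # Late or out-of-order events go into the bucket of their own timestamp,
        # not the bucket of their arrival time.
        self.counts[bucket_key(event["app_id"], event["ts"])] += 1


if __name__ == "__main__":
    counter = EventCounter()
    for e in [
        {"id": "a", "app_id": "app1", "ts": 1_700_000_000},
        {"id": "a", "app_id": "app1", "ts": 1_700_000_000},         # redelivered duplicate
        {"id": "b", "app_id": "app1", "ts": 1_700_000_000 - HOUR},  # arrives late
    ]:
        counter.consume(e)
    print(dict(counter.counts))
```

Keying by event time means a late event still updates the correct historical bucket. The trade-off is that the buckets for recent hours remain open to change for as long as late data can arrive. HBase supports atomic counter increments, but an increment is not idempotent. That is why the sketch deduplicates before counting instead of relying on the store to absorb replays.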